We last took an in-depth look at integrated graphics performance four years ago, using a combination of desktop and laptop hardware. Things have changed a lot in the intervening years, and while no one is going to replace a high-end GPU with an integrated solution any time soon, budget gaming solutions are clearly in sight. Perhaps that's why we haven't really seen any true budget GPU solutions from Nvidia for several years, with the least expensive models often adding several hundred dollars to the price of a PC or laptop.

With Intel's Lunar Lake and AMD's Ryzen AI mobile processors now launched, it's time for a rematch. We've got three roughly equivalent laptops for testing, all from Asus (via AMD and Intel). The newest is the Asus Zenbook S14, equipped with a Core Ultra 7 258V processor and Arc Graphics 140V (aka Lunar Lake or LNL). It's mostly the same chassis as the previous generation Asus Zenbook 14 OLED, which has a Core Ultra 7 155H and Arc Graphics (aka Meteor Lake or MTL). And from the AMD camp, we have the larger Asus Zenbook S16 with a Ryzen AI 9 HX 370 processor and a Radeon 890M GPU (aka Strix Point).

We did some preliminary benchmarks last month, showing how Lunar Lake's graphics fared against the other options. But after both user and vendor feedback, we felt a deeper comparison was in order. Also, Intel has updated drivers for Lunar Lake that improve the situation (though they're still not on a unified driver version). This time, we're going whole hog with a selection of 24 games, including many recent releases.

We're interested in both performance and compatibility, and have notified the appropriate companies of any issues we encountered (which means we told Intel one of the games we tested is borked). We also took steps to ensure a level playing field, as much as possible. That means — and this is an absolutely critical point — that we are not using things like FSR 1/2/3, frame generation, XeSS, HYPR-RX, AFMF2, or any other proprietary technologies. Because we know, from experience, that even if XeSS and FSR2 can run on GPUs from the other vendors, they don't necessarily run optimally.

We're looking at the underlying GPU performance, at native resolution, plain and simple. Is that "fair?" And what does fair even mean? We think it's best to level the playing field without bringing in outside software solutions for a primary performance comparison, but AMD is proud of HYPR-RX, AFMF, FSR, and its other technologies and has a blog post that coincidentally also went up this morning, where AMD claims a 75% lead over Intel's 258V — with both sides using all the software tweaks available. (On a related note, this is precisely why we haven't been testing with DLSS in every game where it's an option on our desktop GPU reviews for the past six years.)

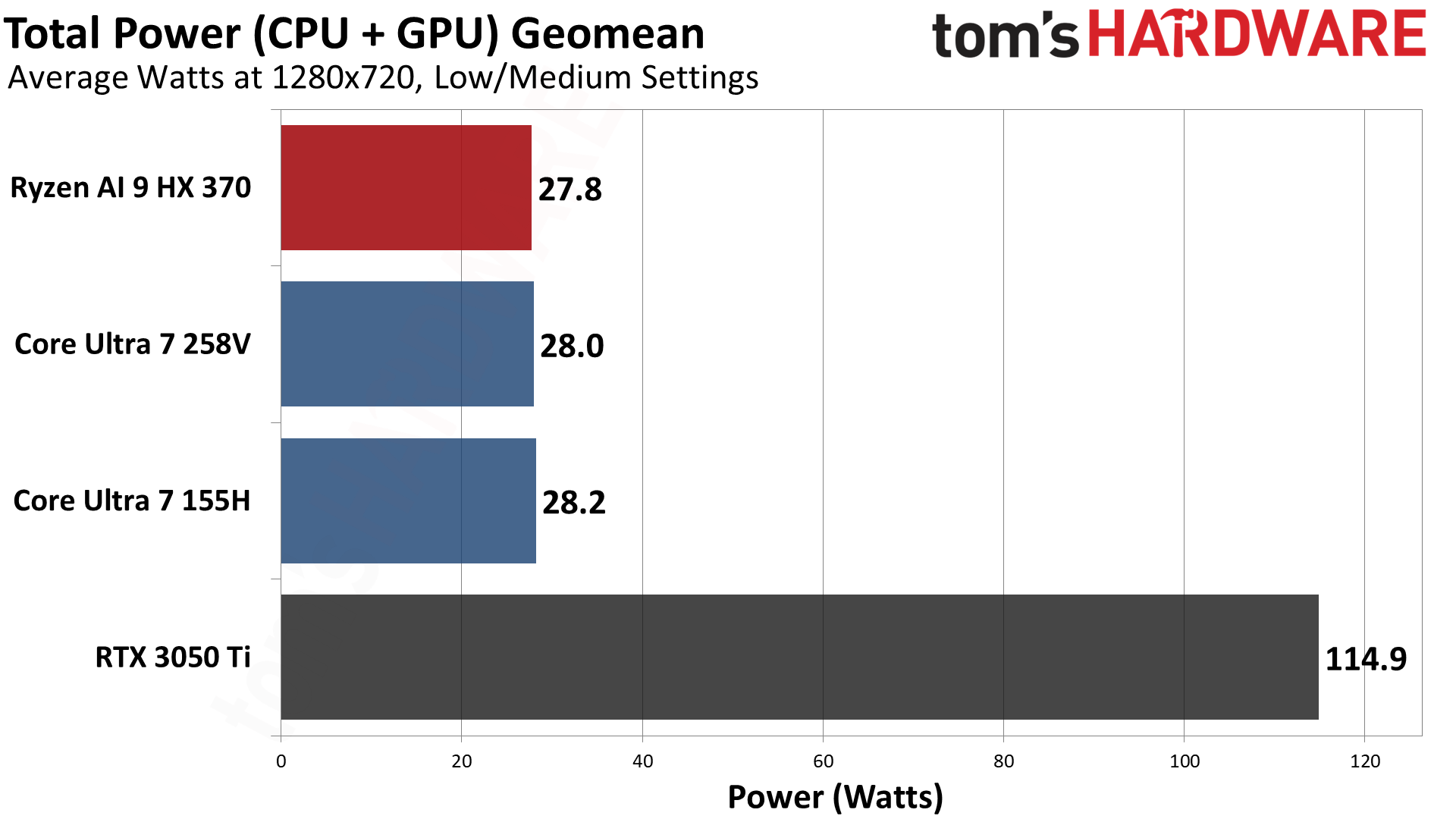

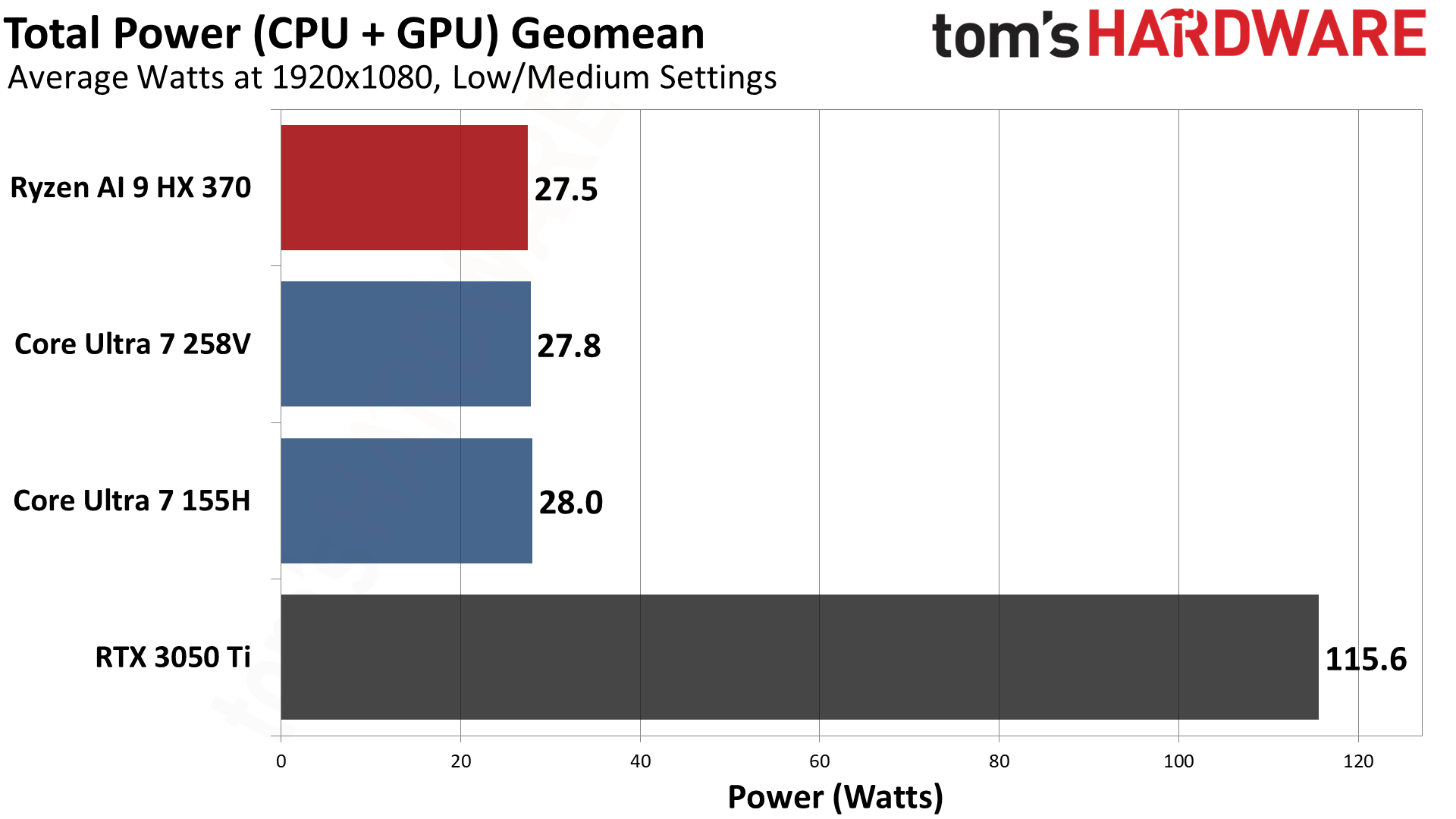

We also ensured that all three laptops have a CPU package power of around 28W — though Intel's Lunar Lake has DRAM on package, which draws about 1.5W of power, so technically it's at a slight disadvantage... but it also has faster LPDDR5x-8533 memory, which certainly helps in some of the games we tested.

And finally, for good measure, we ran the same tests on an older Dell G15 laptop that has a Core i5-12500H and an RTX 3050 Ti 4GB dedicated graphics card — which, for the record, was also tested without using DLSS. We expect the dGPU to be faster, but we wanted to see how much of a gap exists between a relatively-budget gaming laptop and these newer integrated GPUs. It's definitely not an apples-to-apples matchup, however, as the G15 comes with a single DDR5-4800 8GB stick of RAM, and that doesn't do it any favors. Consider it a point of reference rather than a definitive statement on the RTX 3050 Ti or any other dedicated GPU.

Testing Overview

Before we get to the test results, let's also quickly discuss the high level specs of the various laptops. These are, more or less, the top three integrated graphics solutions from AMD and Intel for the current and previous (Meteor Lake) laptop processors. While AMD's chip is the Ryzen AI 9, we've found little difference between Intel's Core Ultra 9 288V and the 'slower' Core Ultra 7 258V — there's even a performance deficit for the higher tier processor in some cases.

Starting with Lunar Lake, it's clear Intel set out to do something quite different from its previous architectures. On the CPU front, there are four Lion Cove P-cores (performance optimized cores), without Hyper-Threading. Then there are four Skymont E-cores (efficiency optimized cores), also without Hyper-Threading. That gives eight CPU cores with eight threads total, which represents a sizeable step down from the up to 16 cores and 22 threads of the prior generation.

On the GPU side of things, Lunar Lake has a second generation 'Battlemage' Xe2 architecture chip, with eight Xe-cores and 1,024 shader ALUs (Arithmetic Logic Units) — as well as full XMX support. Architectural differences mean that real-world performance will be better, but theoretical performance lands at 4.0 teraflops for FP32 and 67 teraflops of FP16 for AI workloads. There's a new NPU (Neural Processing Unit) for AI as well that provides 48 TOPS of INT8 computational power.

Intel tapped TSMC for most of the processor, using the cutting edge N3B node for the compute tile (CPU, GPU, NPU, and cache) and the older N6 node for the platform tile (memory controller, PCIe, USB, and other functionality). These reside on a Foveros tile that's made using Intel's older (2012) 22nm FinFET node.

Next up is the prior generation Meteor Lake laptop in the form of the Asus Zenbook 14 OLED. It's quite a different beast from the new Lunar Lake, with four different tiles. Technically, it's not the first tiled architecture from Intel; that would be the short-lived Lakefield architecture in 2020. But after that initial debut and apparent proof of concept, Meteor Lake was the true coming out party for Foveros chip stacking.

The compute tile houses the CPU cores and is made on Intel's own Intel 4 node. It houses the six Redwood Cove P-cores and eight Crestmont E-cores, plus associated caches and sundry other pieces. There are two additional low power E-cores on the SOC tile, which is made using TSMC's N6 node. That's 16 cores and 22 threads of CPU, though do note that the LP E-cores run at significantly lower clocks. Two of the P-cores are also higher performance cores that can hit higher clocks.

The GPU uses the TSMC N5 node, with eight Xe-cores based on the Alchemist architecture. However, unlike the dedicated desktop and laptop Arc GPUs, Meteor Lake's GPU lacks the XMX units (matrix/vector units that help accelerate AI workloads). With clocks of up to 2250 MHz, however, it has a theoretical rating of 4.6 teraflops FP32 — higher than Lunar Lake's GPU! We'll see in the benchmarks that architectural upgrades ultimately make Battlemage the faster part, which hopefully means good things for the upcoming dedicated Battlemage GPUs.

The SOC tile also contains Intel's first generation NPU, which offers a relatively tame 10 TOPS of INT8 compute — not enough for Microsoft's Copilot+ certification. There's also an IO tile for things like PCIe, WiFi, USB, and more that's made on TSMC N6.

Compared to both Lunar Lake and Meteor Lake, AMD's Strix Point architecture is far more traditional. It's a single monolithic die, manufactured on TSMC N4P, basically a refinement of the N5 node that's better tuned for higher performance (read: clocks). That means, on the whole, AMD's chip lands between Lunar Lake's compute tile and platform tile and ahead of Meteor Lake, at least on paper.

The CPU consists of four full performance Zen 5 cores, plus eight more Zen 5c density optimized cores. All the cores support the same instruction set and features, but the Zen 5 cores can clock much higher. All told, that's 12 cores and 24 threads for the CPU.

The GPU uses AMD's efficiency optimized RDNA 3.5 architecture, with some tweaks specifically intended to help with performance. It has 16 compute units (CUs), for 1,024 shader ALUs total — the same number as both Intel GPUs. It has a maximum boost clock of 2900 MHz, giving a potential 5.9 teraflops of FP32 compute, but in practice we measured much lower real-world clocks ranging between 1.8~2.2 GHz (3.7~4.5 teraflops).
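The theoretical FP32 ratings quoted for all three iGPUs follow from the same simple arithmetic: shader ALUs, times two floating-point operations per clock (one fused multiply-add), times clock speed. The sketch below reproduces the quoted figures under that assumption. The function name is illustrative, and the 1950 MHz Lunar Lake boost clock is taken from the clock speed discussion later in the article.

```python
def fp32_tflops(shader_alus: int, clock_mhz: float) -> float:
    """Peak FP32 teraflops, assuming one fused multiply-add (2 FLOPs) per ALU per clock."""
    return shader_alus * 2 * clock_mhz * 1e6 / 1e12


# (shader ALUs, maximum boost clock in MHz) as quoted in the article
gpus = {
    "Arc 140V (Lunar Lake)": (1024, 1950),
    "Arc Graphics (Meteor Lake)": (1024, 2250),
    "Radeon 890M (Strix Point)": (1024, 2900),
}

for name, (alus, mhz) in gpus.items():
    print(f"{name}: {fp32_tflops(alus, mhz):.1f} TFLOPS")

# The measured 1.8 to 2.2 GHz range on the 890M gives the lower real-world figures.
print(f"890M real-world: {fp32_tflops(1024, 1800):.1f} to {fp32_tflops(1024, 2200):.1f} TFLOPS")
```

These are peak numbers only. They say nothing about how well each architecture keeps its ALUs fed, which is why the benchmarks matter more than the ratings.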

Strix Point also has an NPU rated at 50 TOPS of INT8, which makes it the most performant of the three NPUs in theory. Both Lunar Lake and Strix Point are certified for Microsoft's Copilot+ features, if that's something you care about.

And as noted, we have an older gaming laptop, the budget-friendly Dell G15 (5520) that came out in 2022. You can get an updated and better-equipped Dell G15 starting at $799 today, but the model we had on hand for testing has an RTX 3050 Ti. We wanted that specifically, or the RTX 3050, just to see how a budget dedicated RTX GPU stands up to the latest integrated solutions.

As mentioned earlier, this particular unit has a single 8GB DDR5-4800 SO-DIMM, which also potentially hinders performance. The lack of RAM likely also was to blame for one of the games (The Last of Us, Part 1) refusing to run, though it might have also been the 4GB of VRAM.

Integrated graphics 720p and 1080p gaming performance

We have 24 different games that we've elected to test, and we even went so far as to enable ray tracing (DXR, aka DirectX Raytracing) in three of the games. That... did not go very well, as you'll see in the results. Everything else was run at low or medium settings, depending on whether the game in question could achieve reasonable levels of performance.

We tested at both 720p and 1080p, without any upscaling or frame generation. We're not opposed to people using those features, particularly on low-end hardware — and AMD offers driver-side AMD Fluid Motion Frames 2 (AFMF2) frame generation to potentially improve the experience. But for the apples to apples benchmarks, we wanted to render at native resolution to see how the laptops fare.

All three Asus laptops have different fan profiles, which also determine power and performance to a large degree. We used the "Performance" profile on the Strix Point and Meteor Lake laptops, which allows for 28W of power to the CPU package. On the Lunar Lake laptop, the same "Performance" profile only gives the chip 24W of headroom, so we opted for the "Full Speed" profile that bumps it up to the same 28W TDP. Basically, we're trying to be as fair as possible, though it's impossible to truly make everything identical — motherboard and BIOS firmware being one key point of differentiation, not to mention memory speeds.

Speaking of which, the three laptops all have 32GB of dual-channel LPDDR5x memory. That's good, though the Meteor Lake and Strix Point 'only' have 7500 MT/s memory while Lunar Lake gets 8533 MT/s DRAM. The DRAM is part of the Lunar Lake package, an expensive one-off decision apparently, and Intel elected to use higher speed memory on all of the chips.

We use FrameView to capture frametimes in each game, and it also captures CPU package power (and GPU power if there's a dedicated GPU), CPU and GPU clocks, and some other metrics that we'll share below. Every test gets run at least three times, with the first result being discarded, to ensure consistency.
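For readers who want to process their own frametime logs in a similar way, here is a minimal sketch of the "run at least three times, discard the first" approach. It assumes each run is simply a list of frametimes in milliseconds. The function names are hypothetical, and the 1% low definition used here (average FPS across the slowest 1% of frames) is one common convention, not necessarily the exact one FrameView reports.

```python
from statistics import mean


def average_fps(frametimes_ms):
    """Average FPS = frames rendered divided by total elapsed seconds."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def one_percent_low_fps(frametimes_ms):
    """Average FPS across the slowest 1% of frames (always at least one frame)."""
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return average_fps(slowest_first[:count])


def summarize(runs):
    """Drop the first (warm-up) run, then average the metrics of the remaining runs."""
    if len(runs) < 3:
        raise ValueError("need at least three runs so two remain after discarding the first")
    kept = runs[1:]
    return {
        "avg_fps": mean(average_fps(r) for r in kept),
        "low_1pct_fps": mean(one_percent_low_fps(r) for r in kept),
    }


# Made-up frametimes purely for illustration: a slow first run, then two consistent runs.
example_runs = [
    [40.0] * 100,
    [20.0] * 99 + [50.0],
    [20.0] * 99 + [50.0],
]
print(summarize(example_runs))
```

Discarding the first pass keeps shader compilation and asset caching from dragging down the reported numbers.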

For testing, we used the latest Intel 6130 (Meteor Lake) / 6048 (Lunar Lake) drivers — it's a single driver package released at the end of October. For AMD, we're using the latest 24.10.1 drivers. All three laptops are running Windows 11 24H2 (though the Dell G15 is still on 23H2, and was tested with the 566.03 drivers).

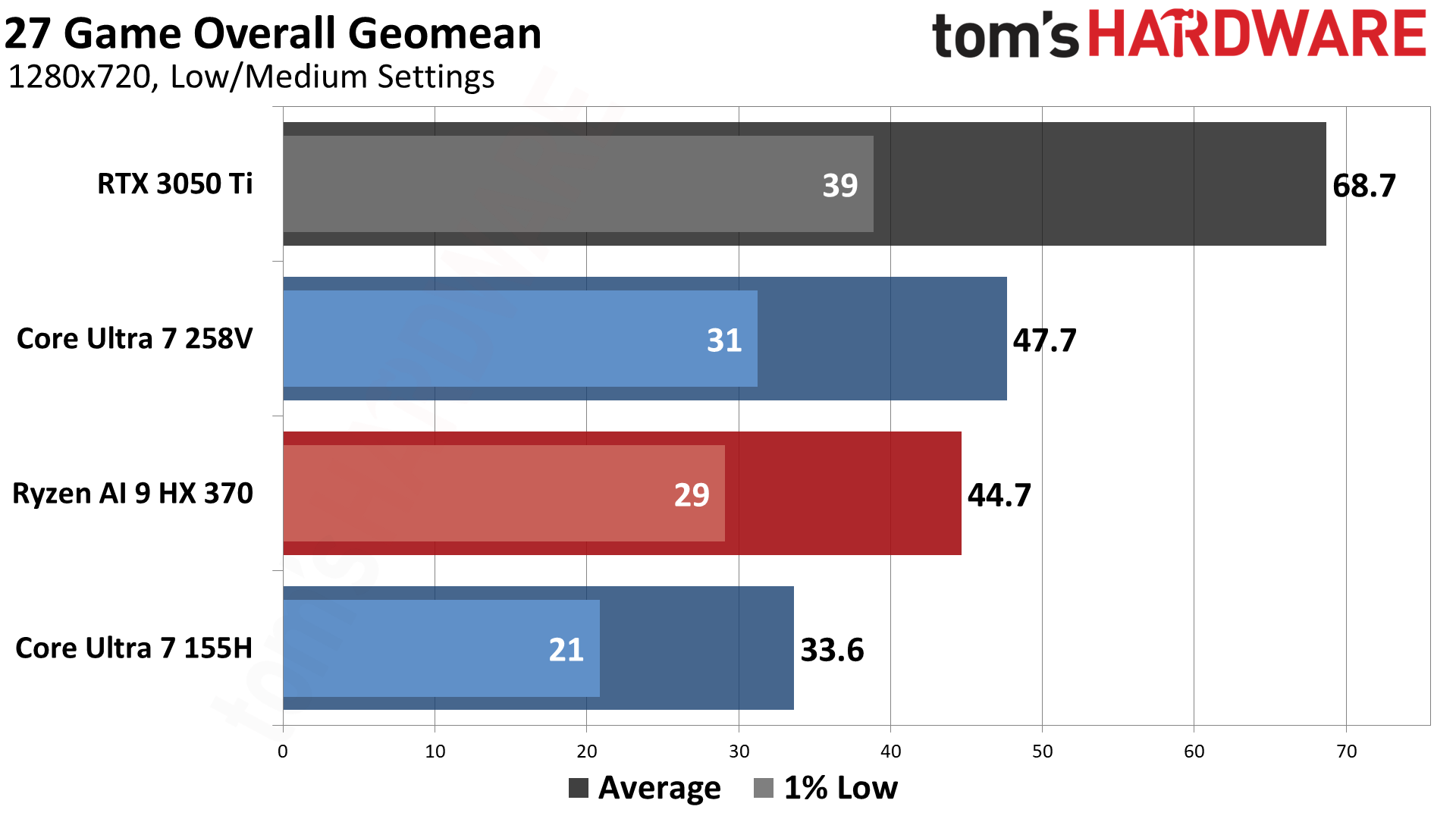

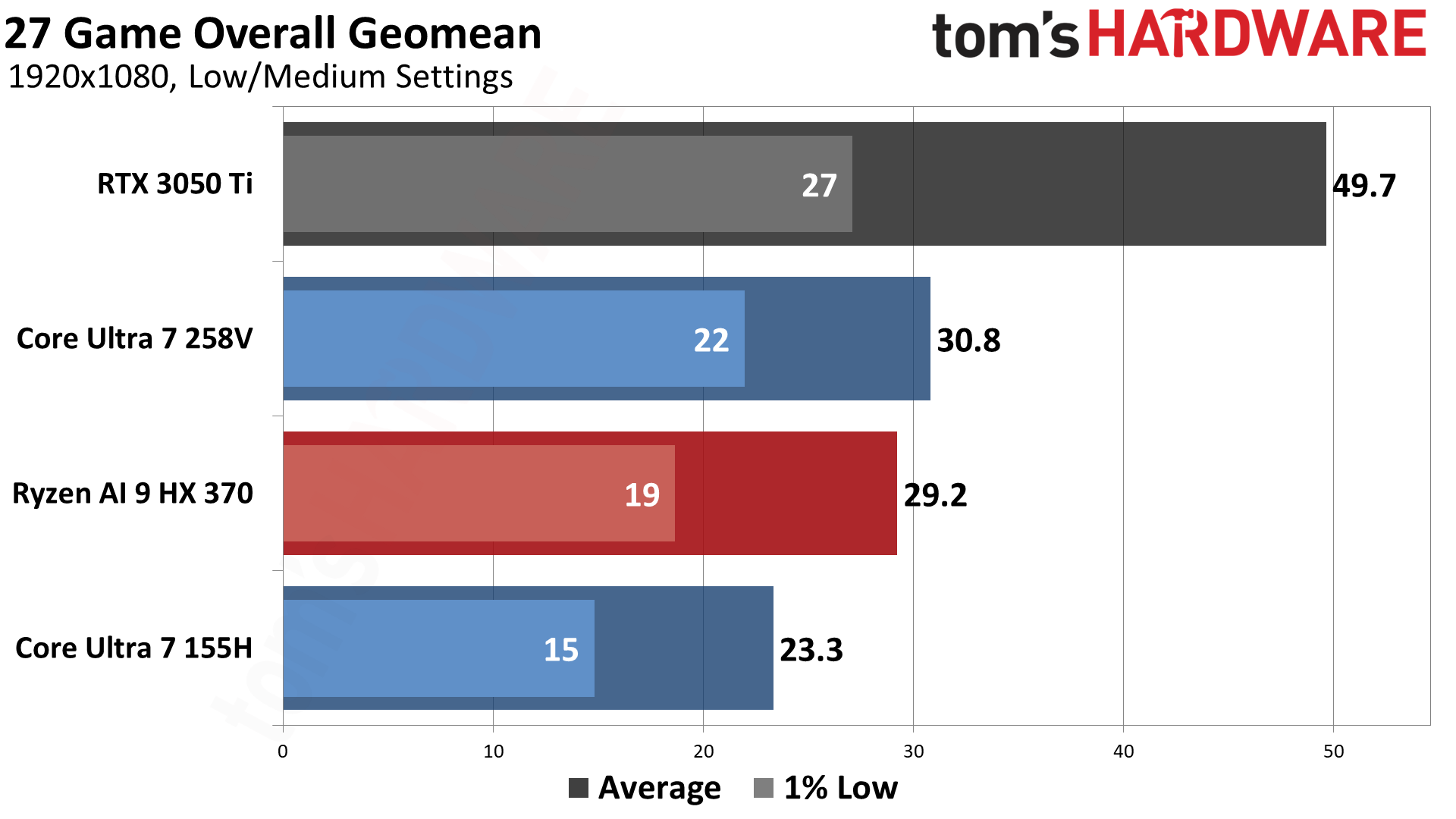

Starting with the overall geometric mean — which gives equal weight to every performance result — the standings have Intel's latest Arc 140V iGPU in the top position, leading AMD's Radeon 890M by 7% at 720p and 5% at 1080p. The sheer number of games used for testing means that no single title has too much of an impact, and as we'll see in our individual results below, Intel routinely comes out on top.
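As a quick illustration of why the geometric mean gives equal weight to every result, the sketch below uses made-up FPS numbers (not our measurements). A 10% gain in a 30 FPS game moves the geometric mean exactly as much as a 10% gain in a 120 FPS game, whereas an arithmetic mean would be dominated by the high-FPS title.

```python
import math


def geometric_mean(values):
    """n-th root of the product of n values, computed via logs for numerical stability."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-game average FPS for a single GPU
baseline = [60.0, 30.0, 120.0]

slow_game_faster = [60.0, 33.0, 120.0]   # +10% in the 30 FPS game
fast_game_faster = [60.0, 30.0, 132.0]   # +10% in the 120 FPS game

base = geometric_mean(baseline)
print(f"baseline geomean: {base:.2f}")
print(f"slow game +10%: {geometric_mean(slow_game_faster) / base - 1:.2%}")
print(f"fast game +10%: {geometric_mean(fast_game_faster) / base - 1:.2%}")
```

Python 3.8 and later also ship `statistics.geometric_mean`, which gives the same result.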

Lunar Lake also provides a big improvement in gaming performance compared to Meteor Lake: 42% at 720p and 32% at 1080p. It's interesting that the gaps to both Strix Point and Meteor Lake narrow at 1080p, but it does make sense. Memory bandwidth becomes a bigger factor at higher resolutions, and while Lunar Lake does have more bandwidth (136.5 GB/s compared to 120 GB/s), that's only 14% more bandwidth overall. We can't say exactly how much compute versus bandwidth factors into each individual result, but the 720p numbers do show that Lunar Lake has better graphics throughput in general.
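The bandwidth figures come straight from the memory transfer rate and bus width. A minimal sketch, assuming a 128-bit memory interface on all three laptops (an assumption on our part here, though it's the width that matches the quoted 136.5 GB/s and 120 GB/s numbers):

```python
def bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 128) -> float:
    """Peak bandwidth in GB/s: transfers per second times bytes moved per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


lunar_lake = bandwidth_gbs(8533)   # LPDDR5x-8533
others = bandwidth_gbs(7500)       # LPDDR5x-7500 (Meteor Lake and Strix Point)

print(f"Lunar Lake: {lunar_lake:.1f} GB/s")
print(f"Meteor Lake / Strix Point: {others:.1f} GB/s")
print(f"Lunar Lake advantage: {lunar_lake / others - 1:.0%}")
```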

But there's still a big gap, even when compared to a three-year-old RTX 3050 Ti that's held back by the 8GB of system RAM. Nvidia's budget GPU delivers 44% higher performance at 720p and 61% more performance at 1080p compared to Lunar Lake. Again, bandwidth looks to be a factor, and the 3050 Ti has 192 GB/s of dedicated memory bandwidth.

The individual results show a wide spread, and drivers are clearly an issue at times — and not solely for Intel. We'll discuss the specifics in each of the games below, in alphabetical order.

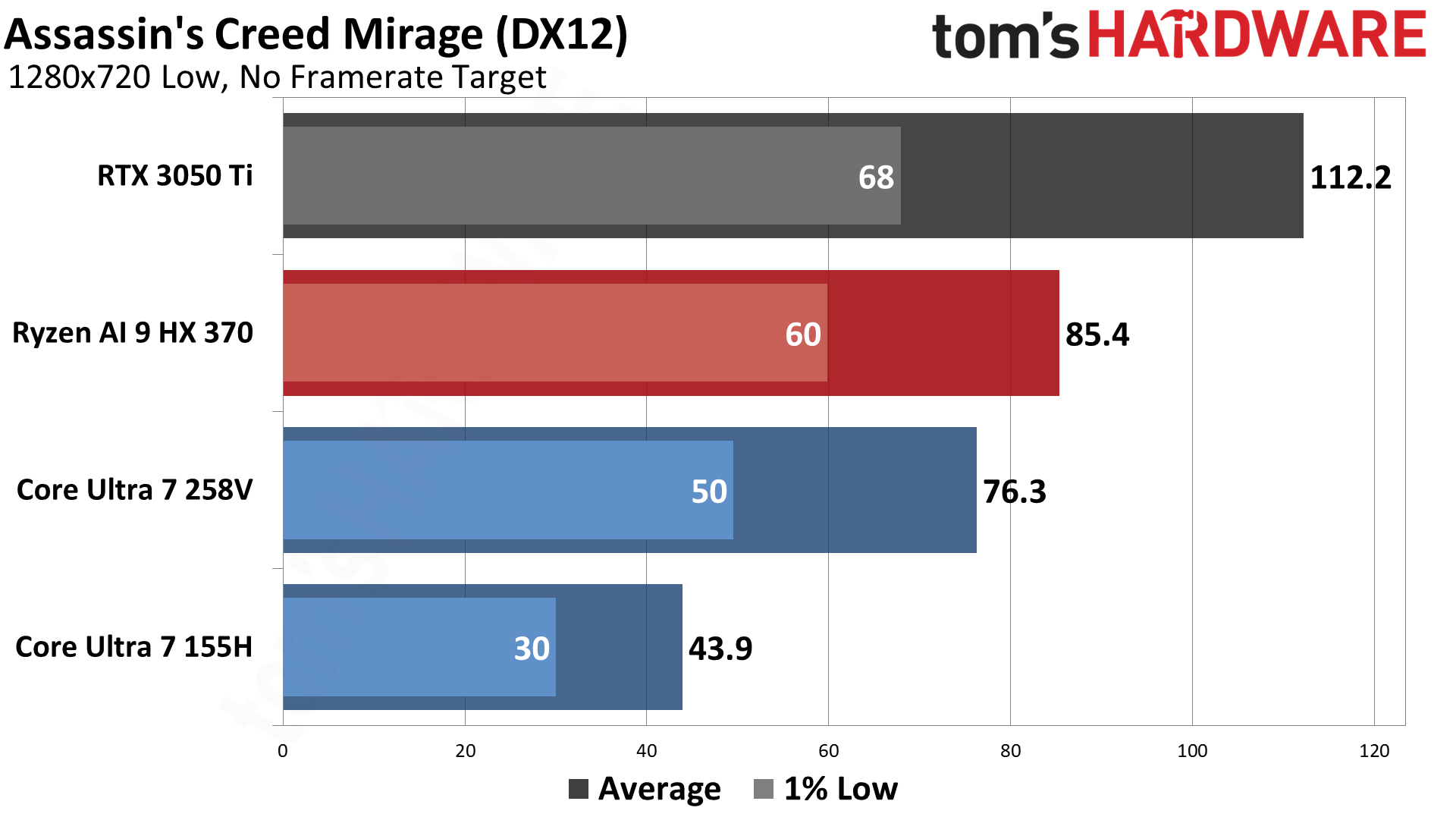

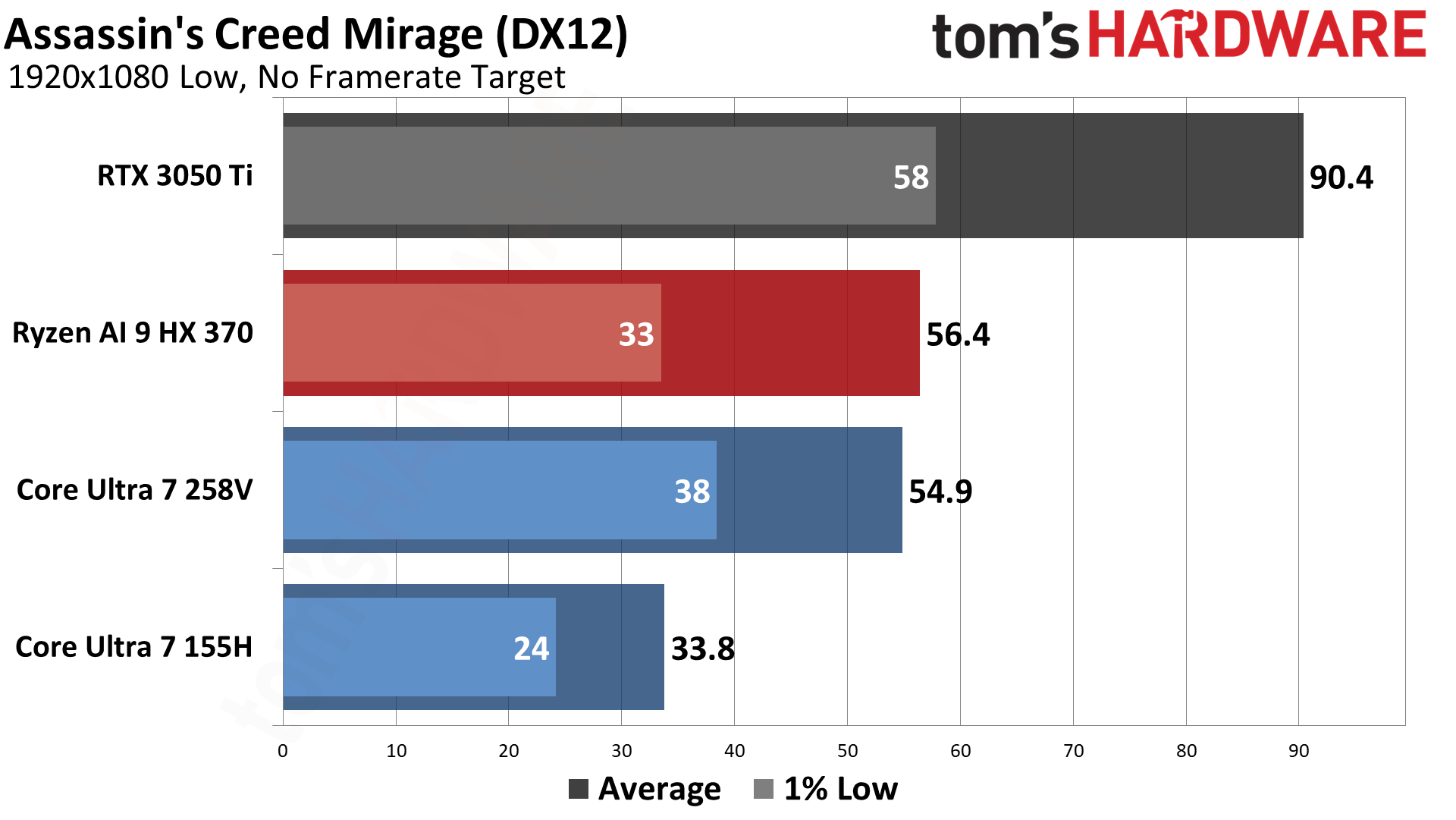

Assassin's Creed Mirage gives AMD's 890M the win, by 12% at 720p but only 3% at 1080p. Lunar Lake easily beats its predecessor, however, offering a 74% performance uplift at 720p and 62% at 1080p. The RTX 3050 Ti mostly matches the overall standings, with 47% higher 720p performance and a 65% higher 1080p result.

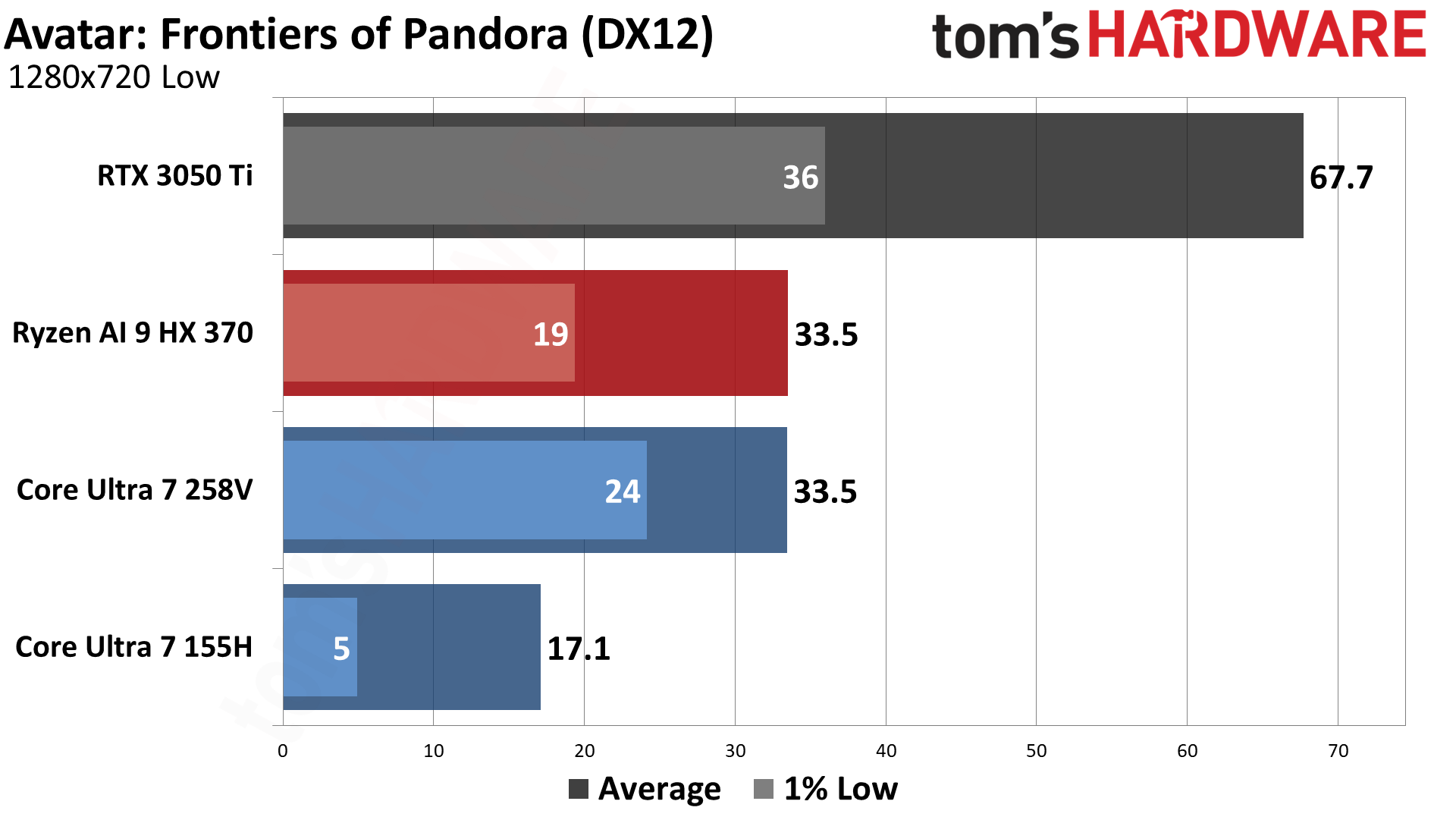

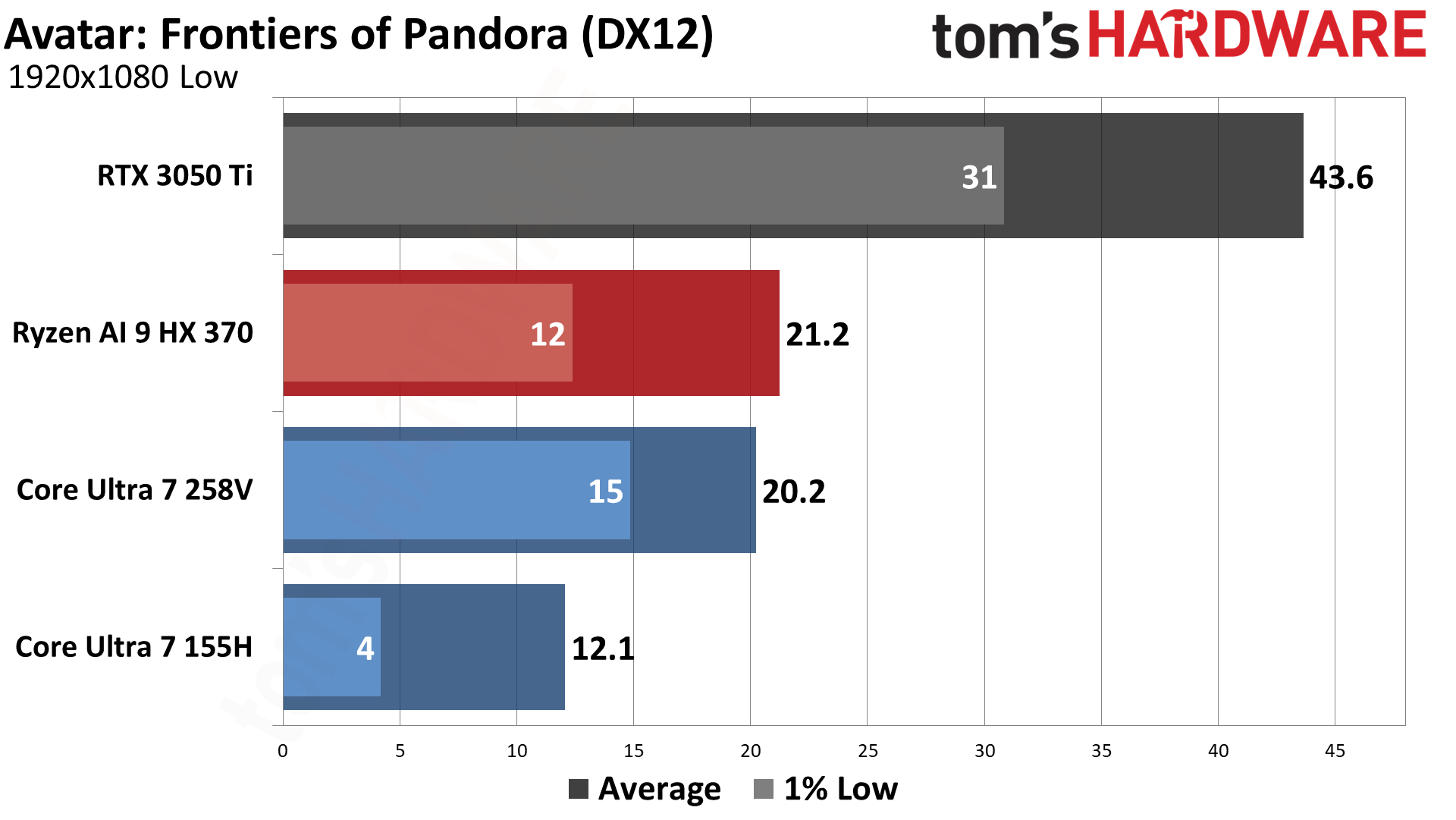

Avatar does support some ray tracing effects, though we wouldn't expect any of those to be active on the low preset. Lunar Lake and Strix Point are tied at 720p, while AMD has a 5% lead at 1080p. LNL also shows nearly a doubling in performance compared to MTL at 720p, and a 68% improvement at 1080p.

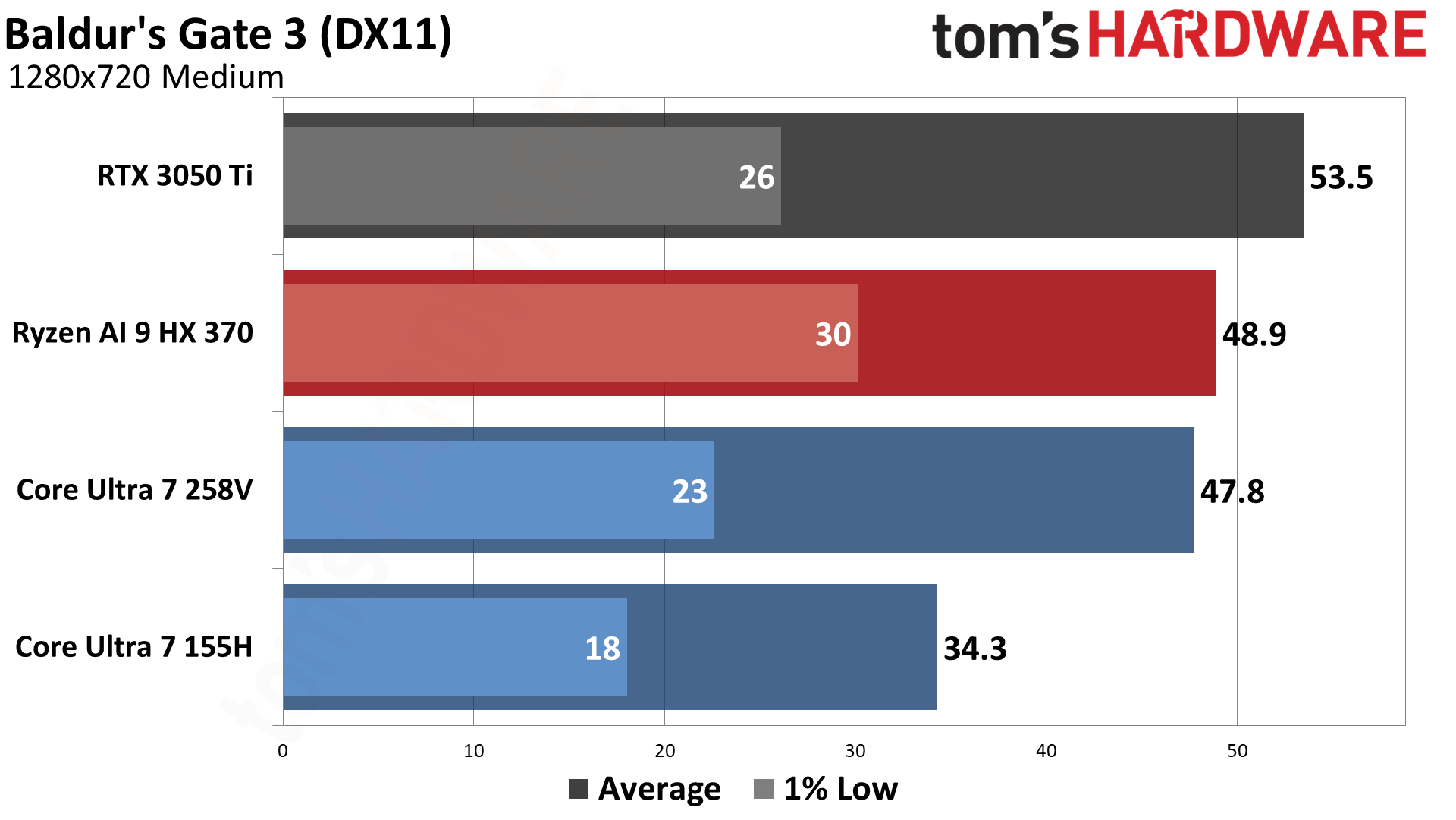

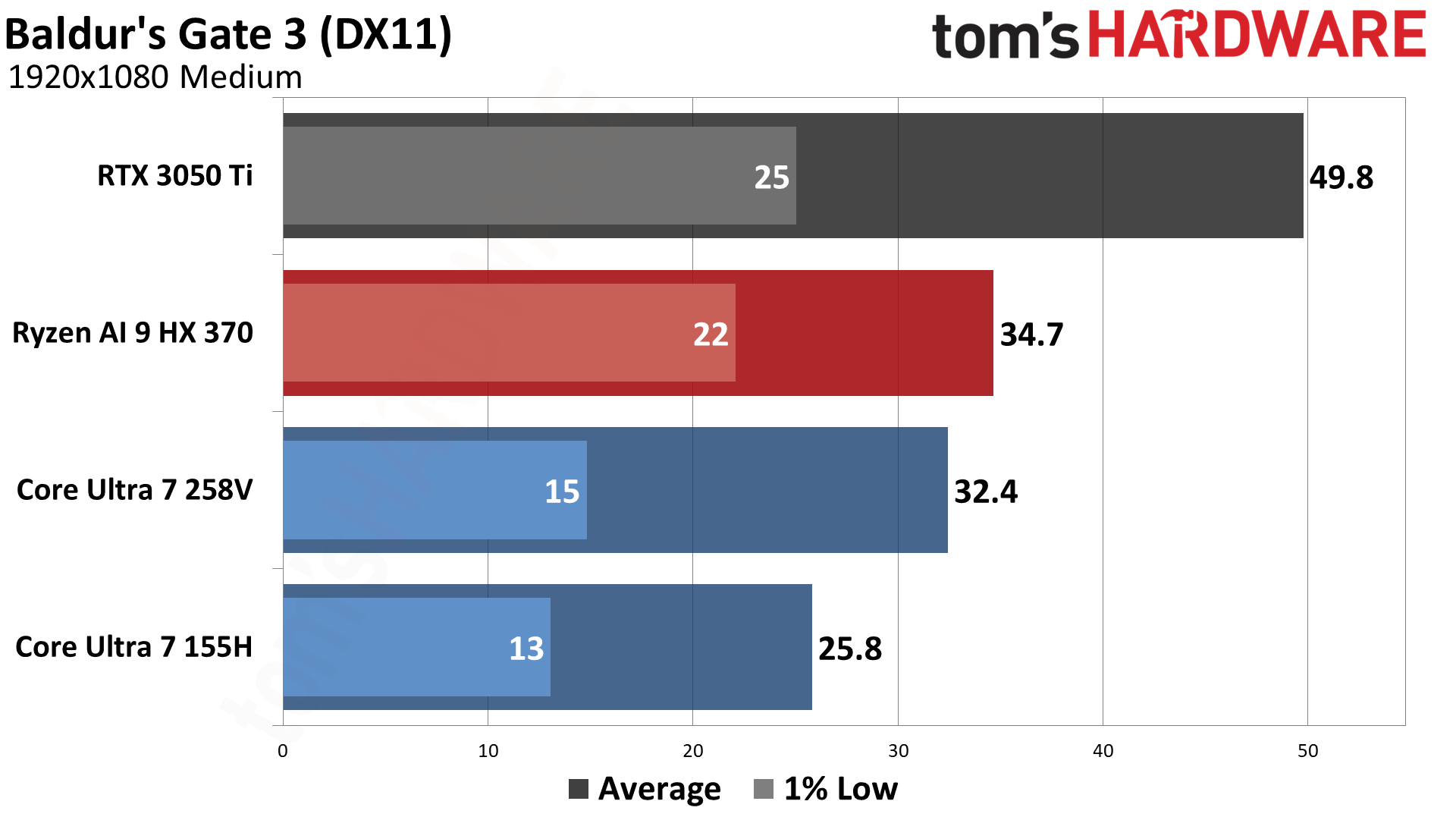

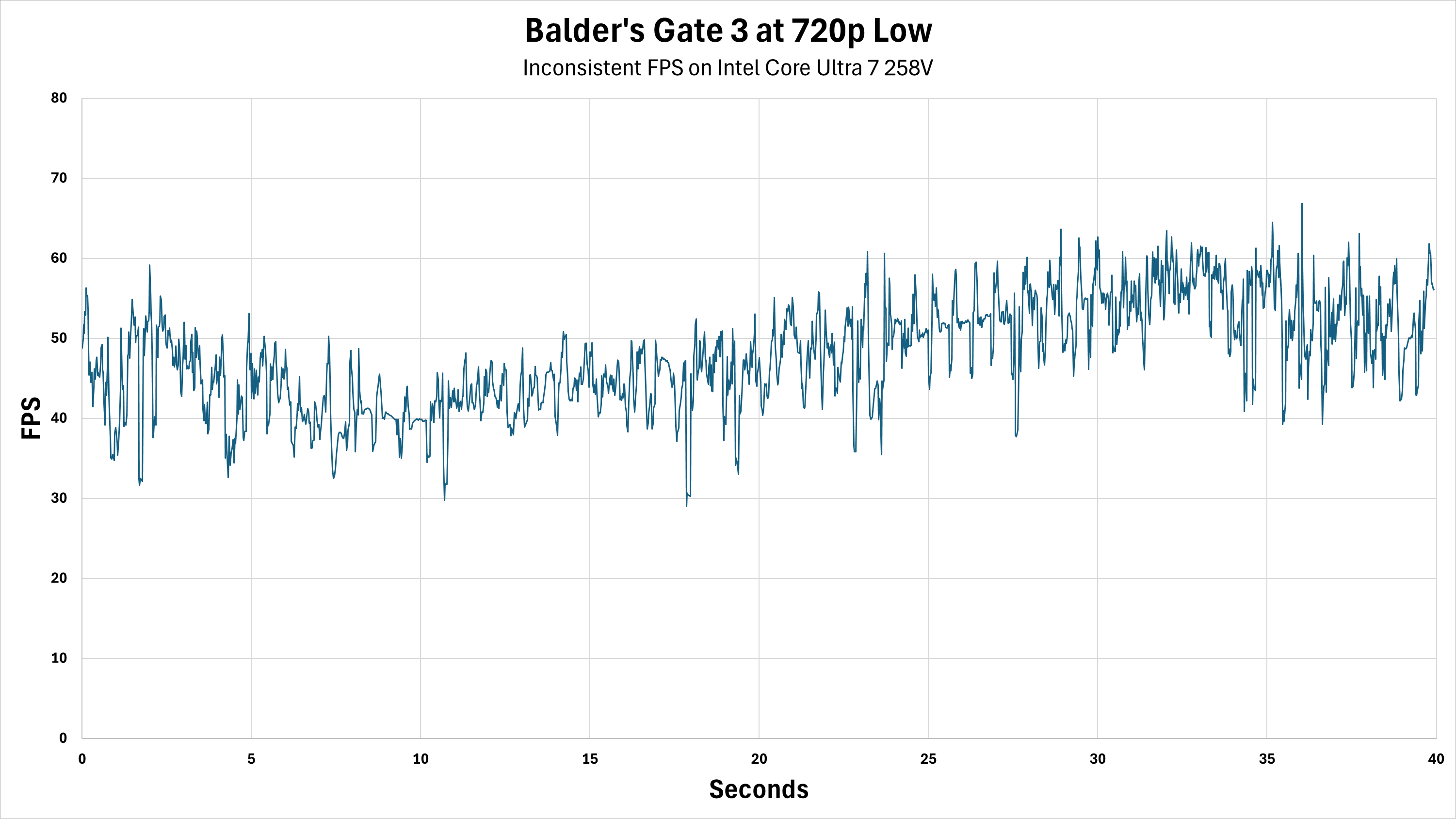

Baldur's Gate 3 is one of several games that didn't run all that great on any of the GPUs. AMD's Radeon 890M manages the best result of the iGPUs, but both old and new Intel GPUs have a "pulsing" effect — we provided an FPS over time chart in the above gallery to illustrate this. You get a fast-then-slow-then-fast repeated cycle that results in a very poor overall experience. This could potentially be related to VRAM use, as the RTX 3050 Ti and even AMD's GPU also have relatively poor 1% low FPS.

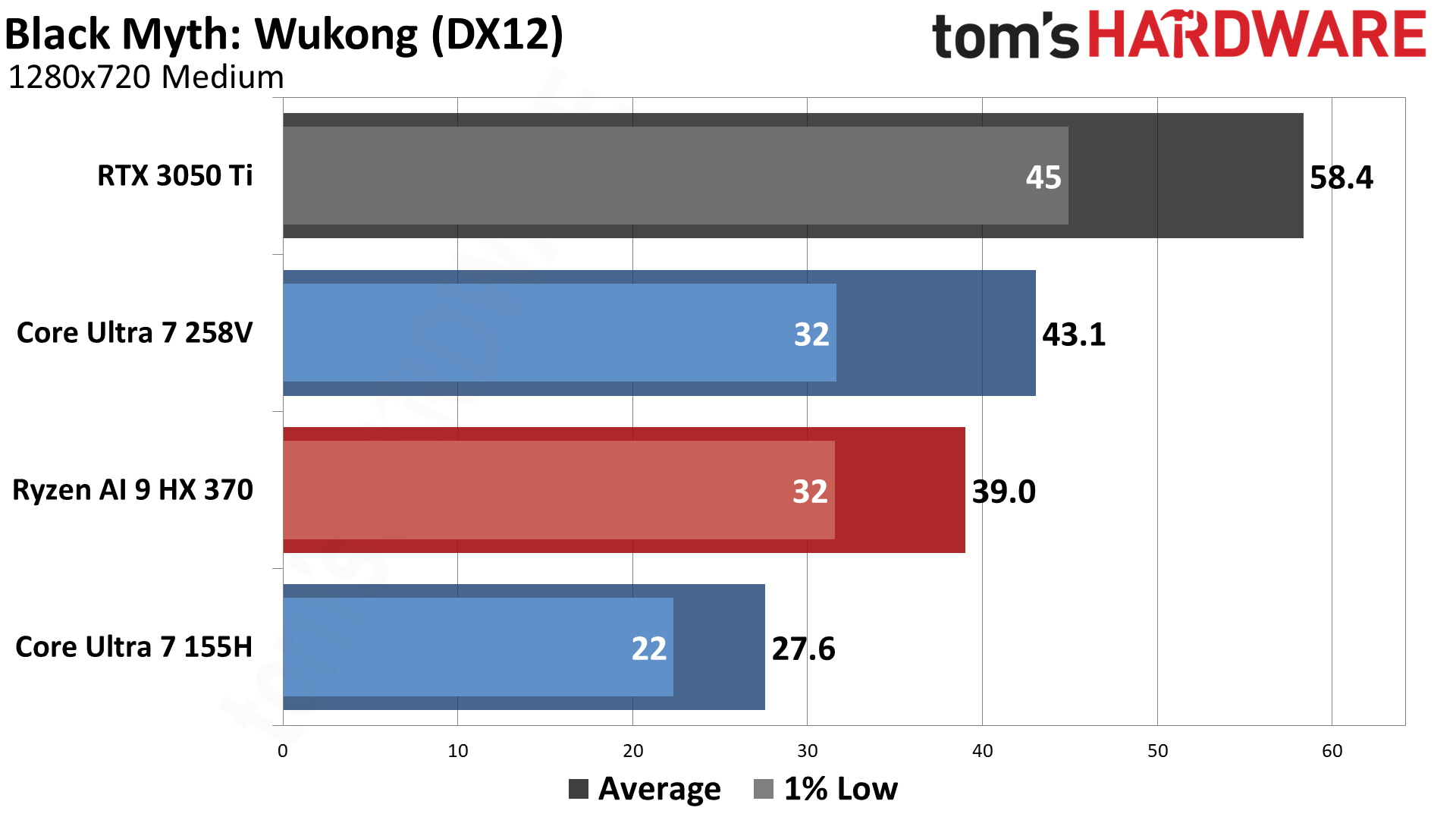

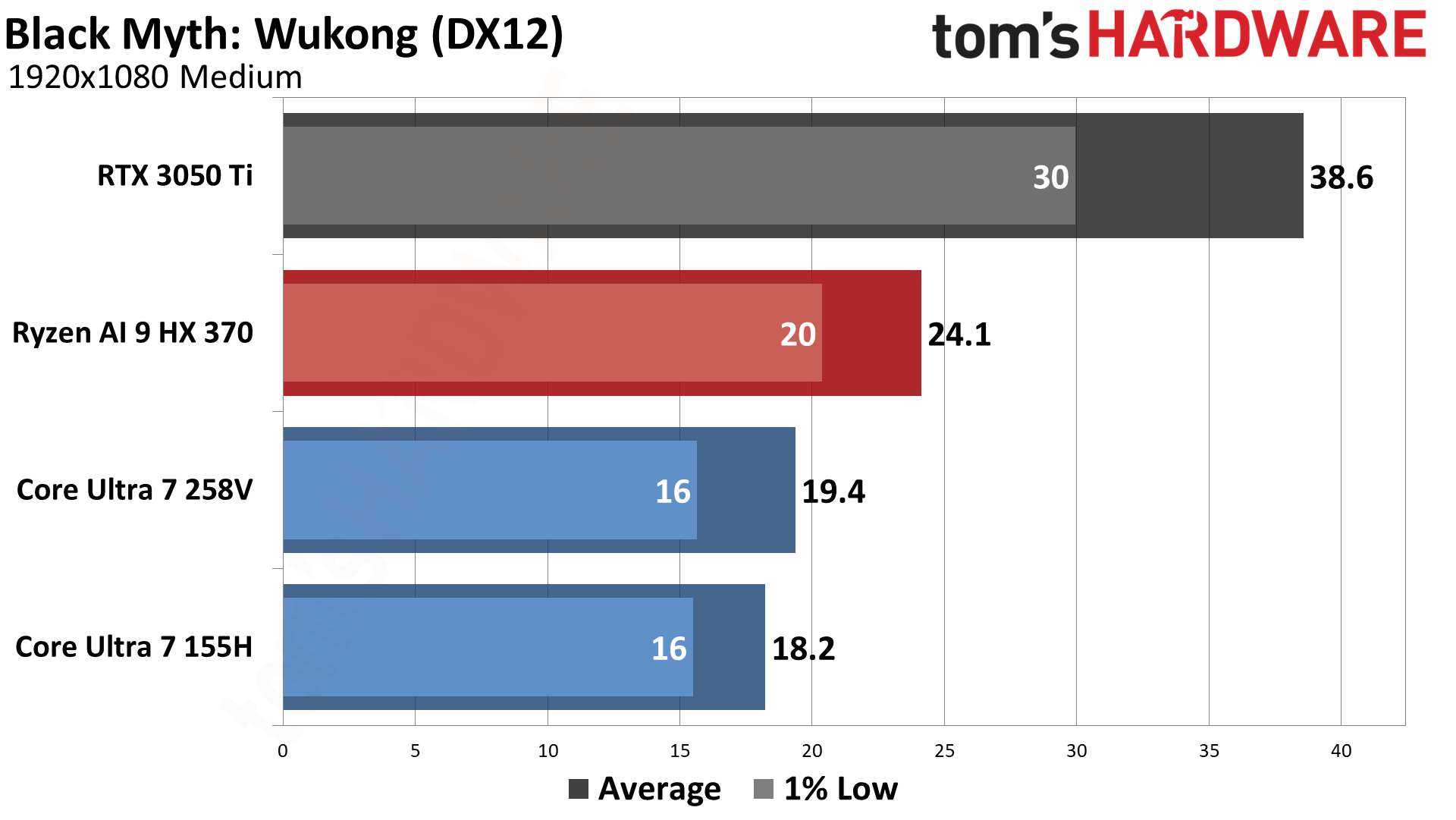

Black Myth: Wukong was one of the games we originally looked at, but we had FSR3 upscaling and framegen enabled and that messed with the results. Without any upscaling, the 258V manages 10% higher performance at 720p, but falls behind by 20% at 1080p. Intel's new chip shows 56% higher performance than the prior gen at 720p, but only 6% higher performance at 1080p.

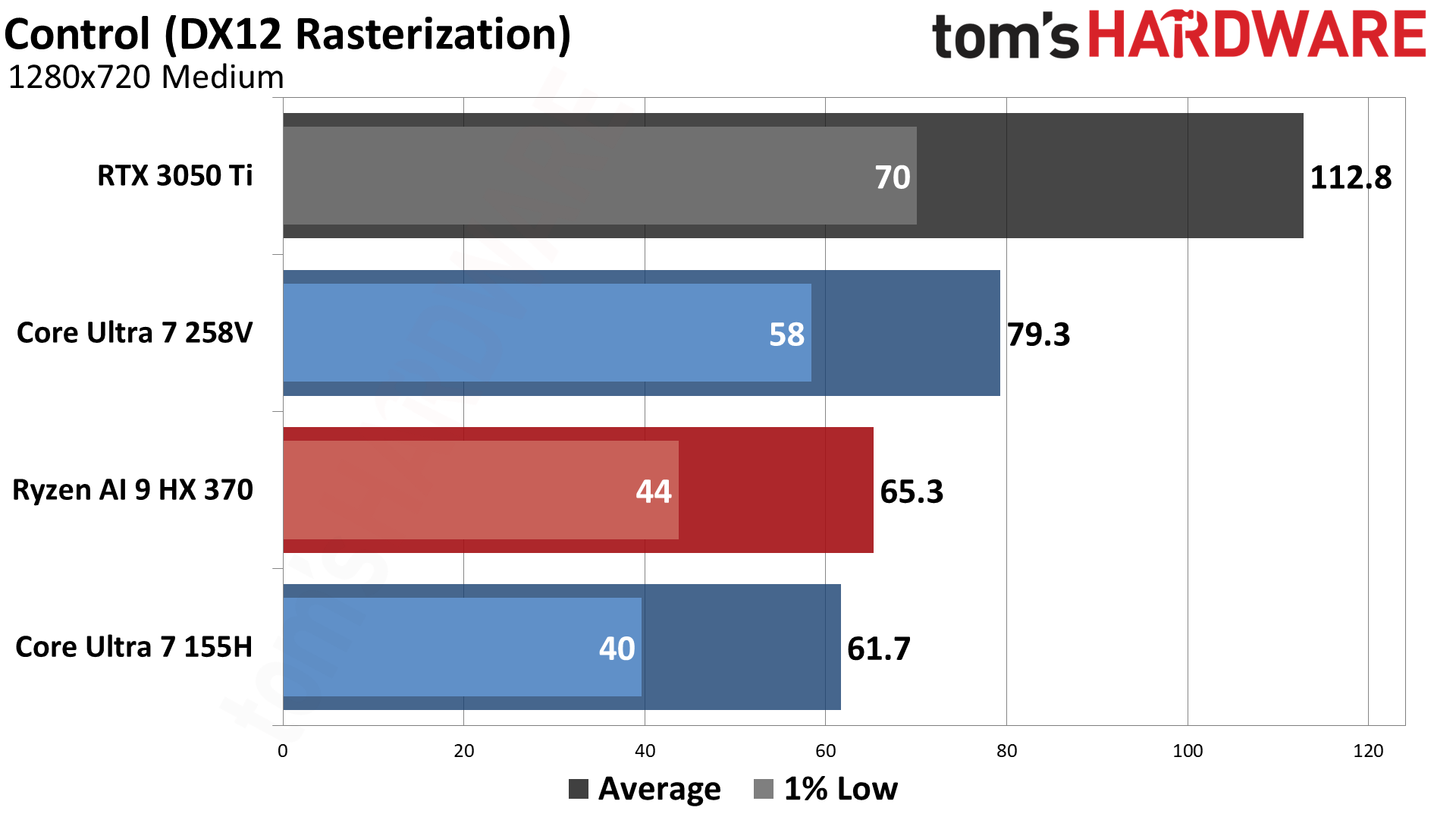

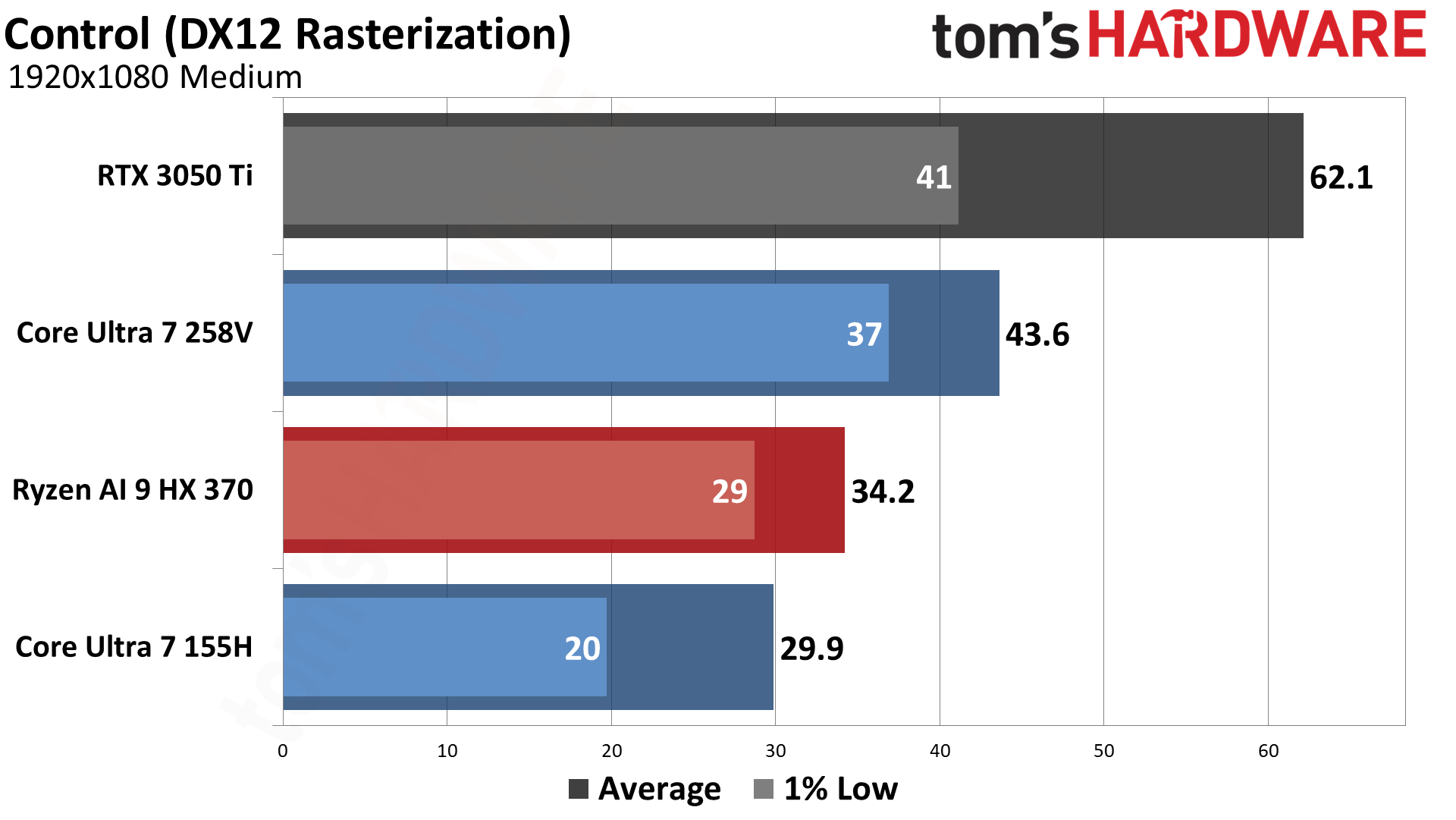

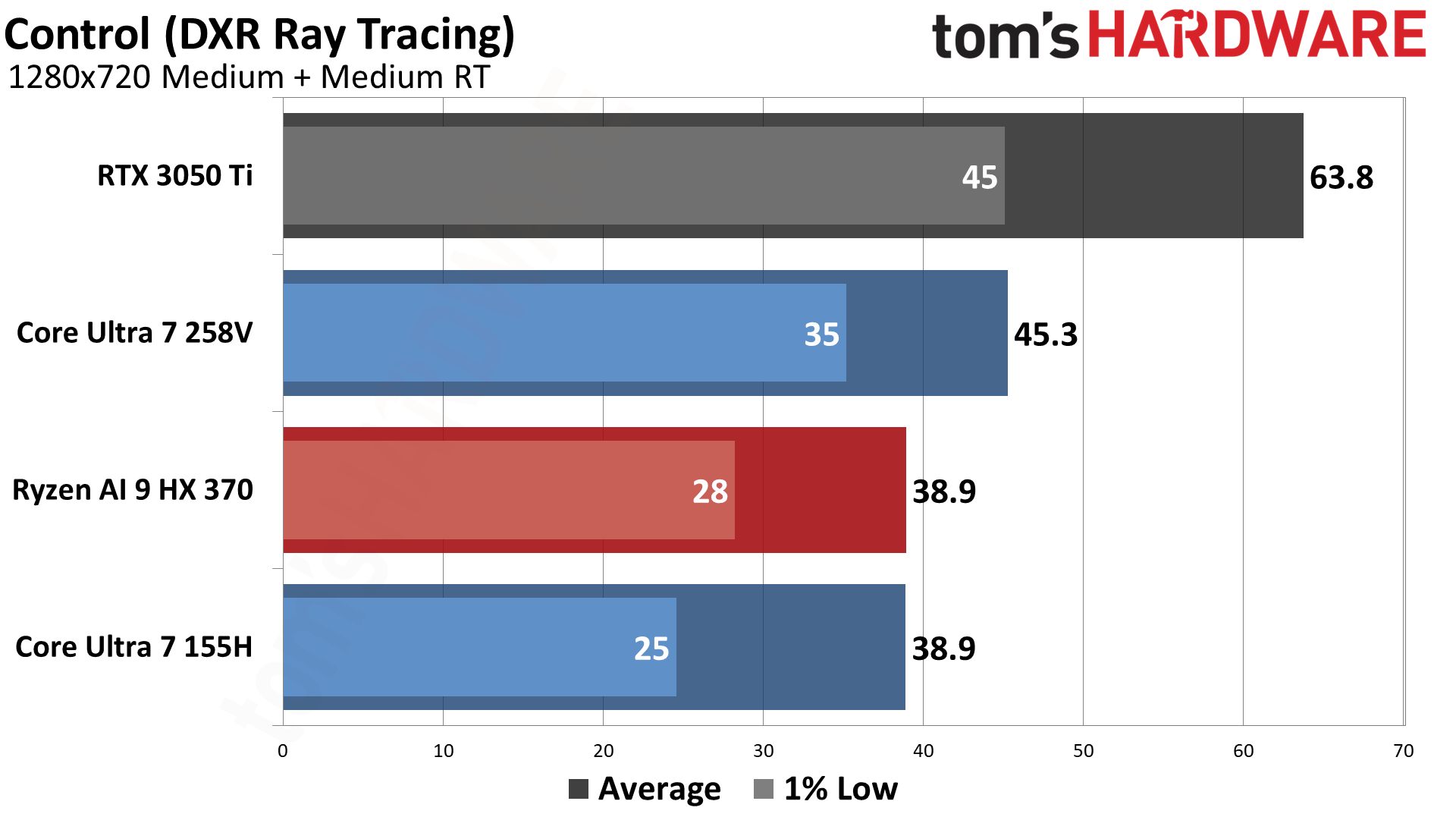

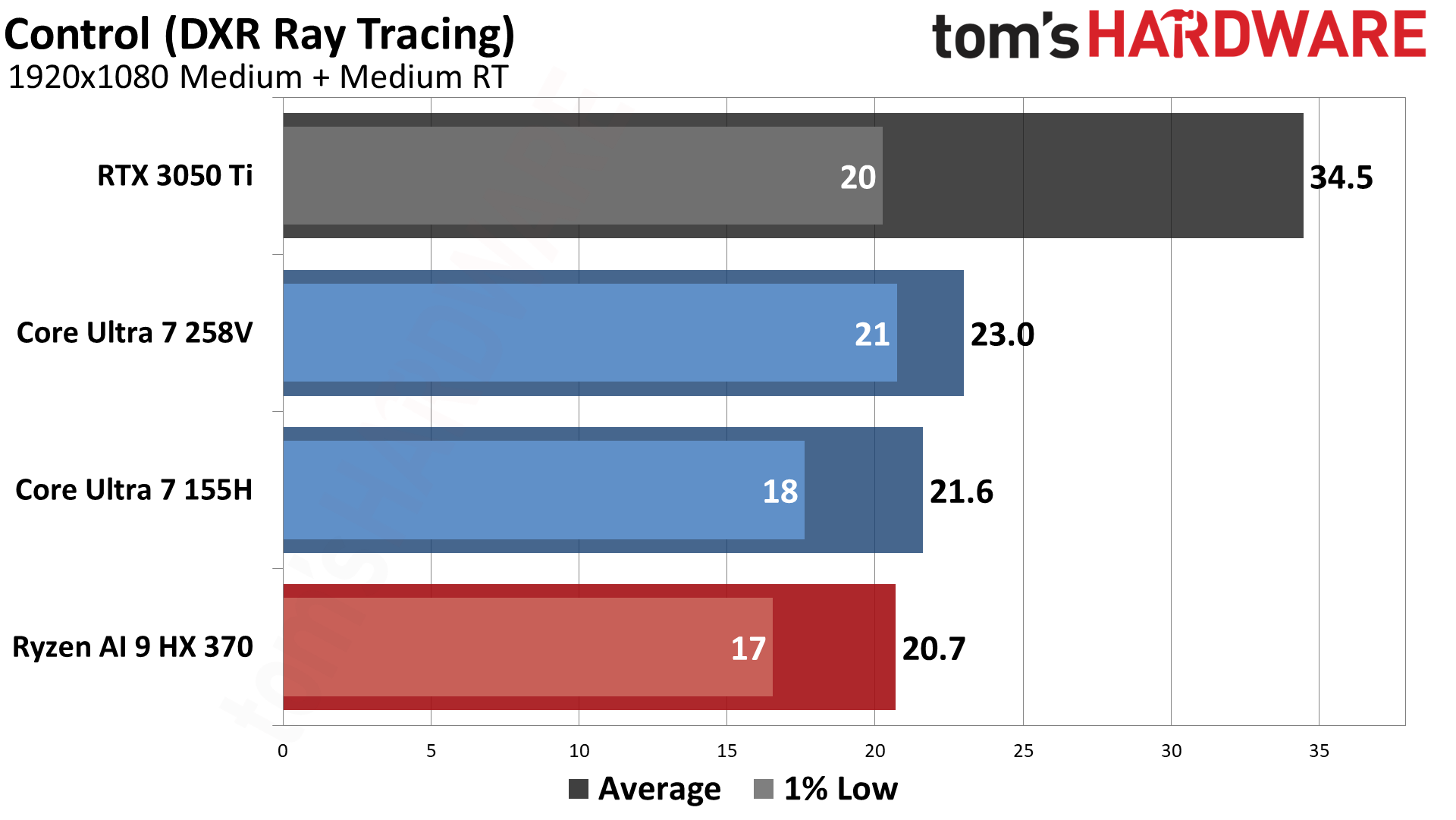

Control (Ultimate Edition) is one of the three games where we also tested ray tracing performance. Without enabling RT, Intel's Arc 140V beats the Radeon 890M by 21% at 720p and 27% at 1080p. It's also 29% and 46% faster than the Arc Graphics in the 155H. With RT turned on (using the medium setting), it's 16% and 11% faster than AMD's iGPU, and likewise 16% and 6% faster than Meteor Lake.

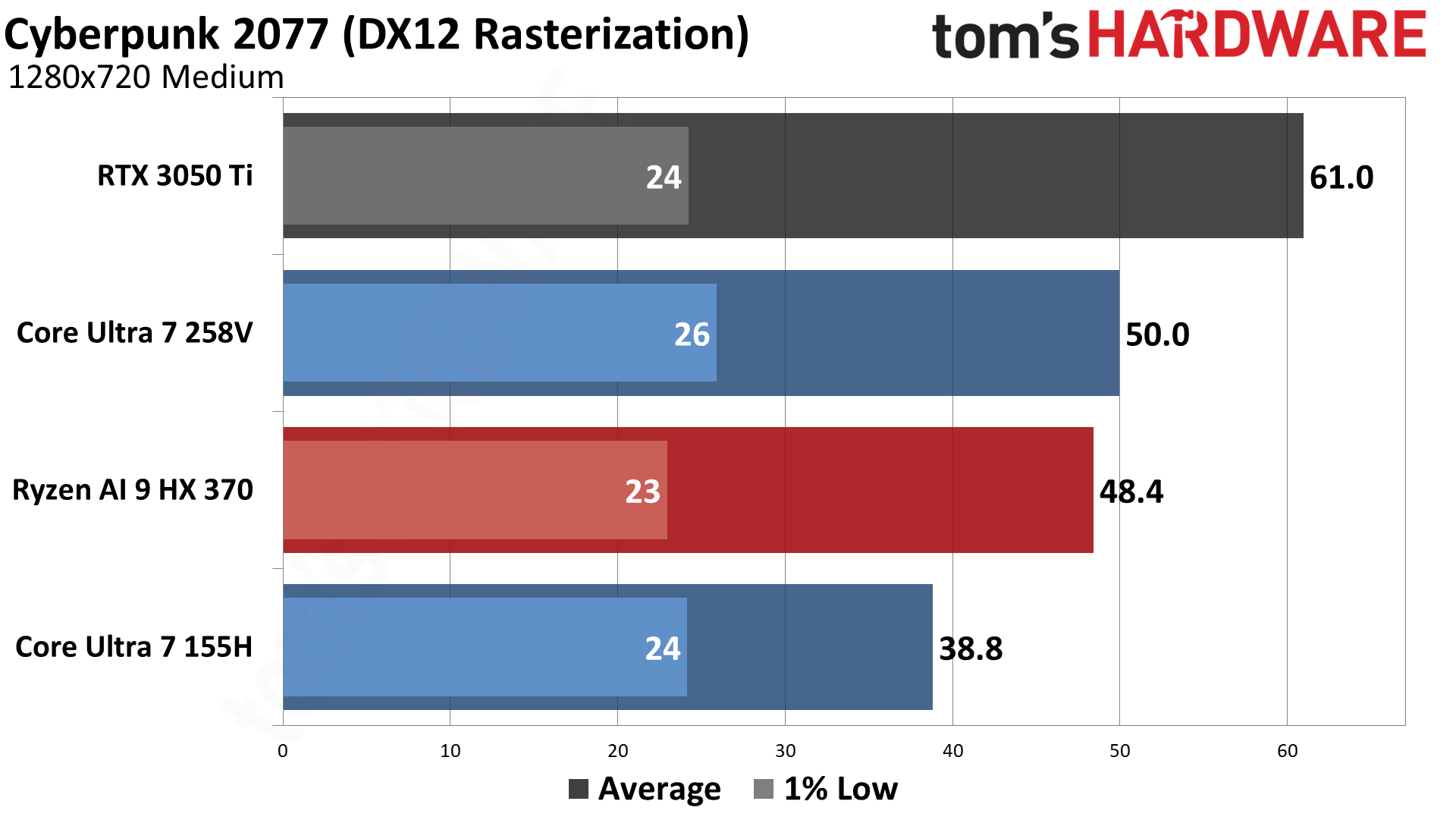

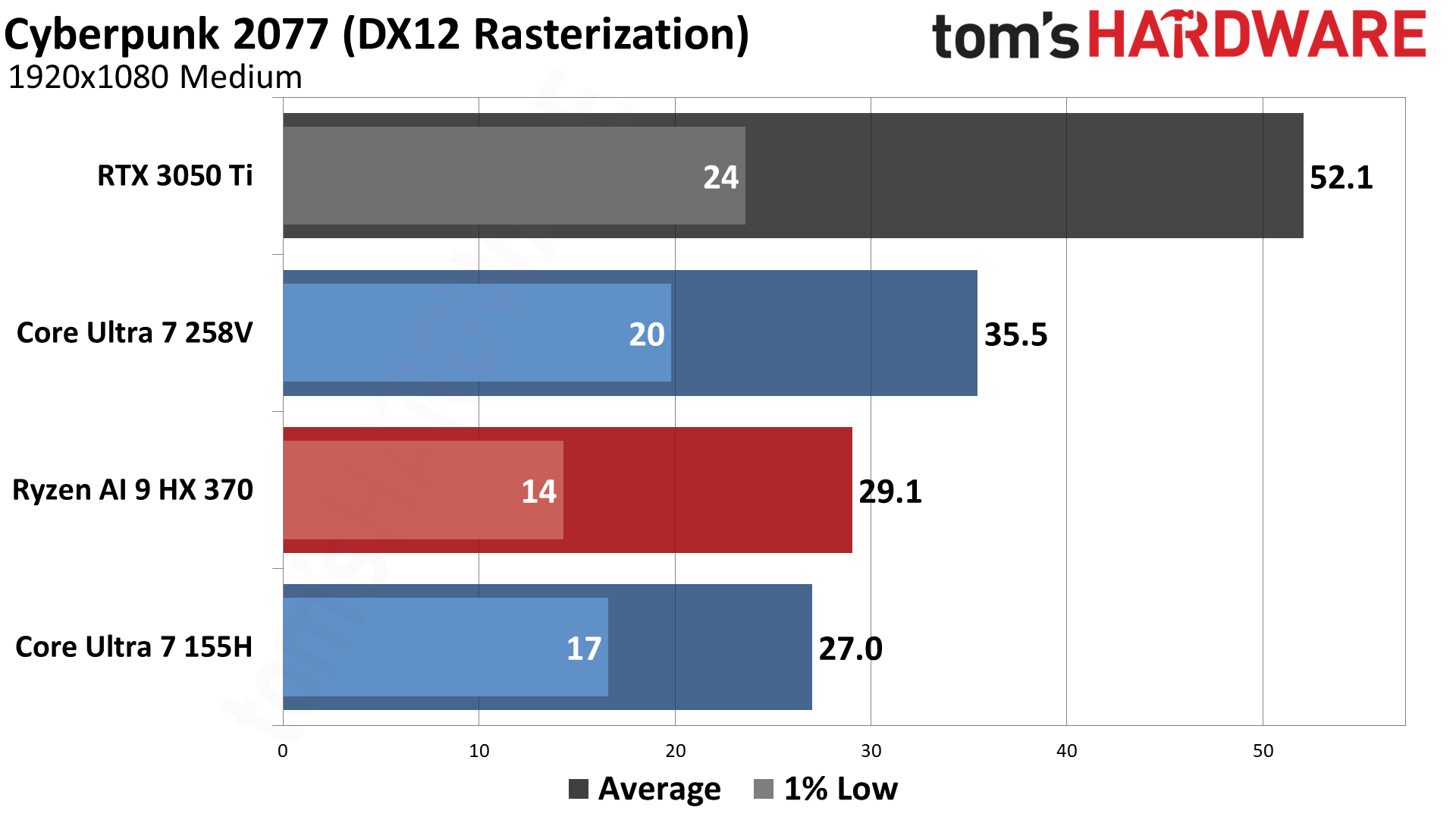

We also enabled ray tracing in Cyberpunk 2077, which really didn't go so well for any of the iGPUs. Using traditional rasterization rendering, Lunar Lake delivers a solid 50 fps at 720p with medium settings, and 36 fps at 1080p, beating Strix Point by 3% and 22%, respectively. LNL is also 29% and 31% faster than MTL.

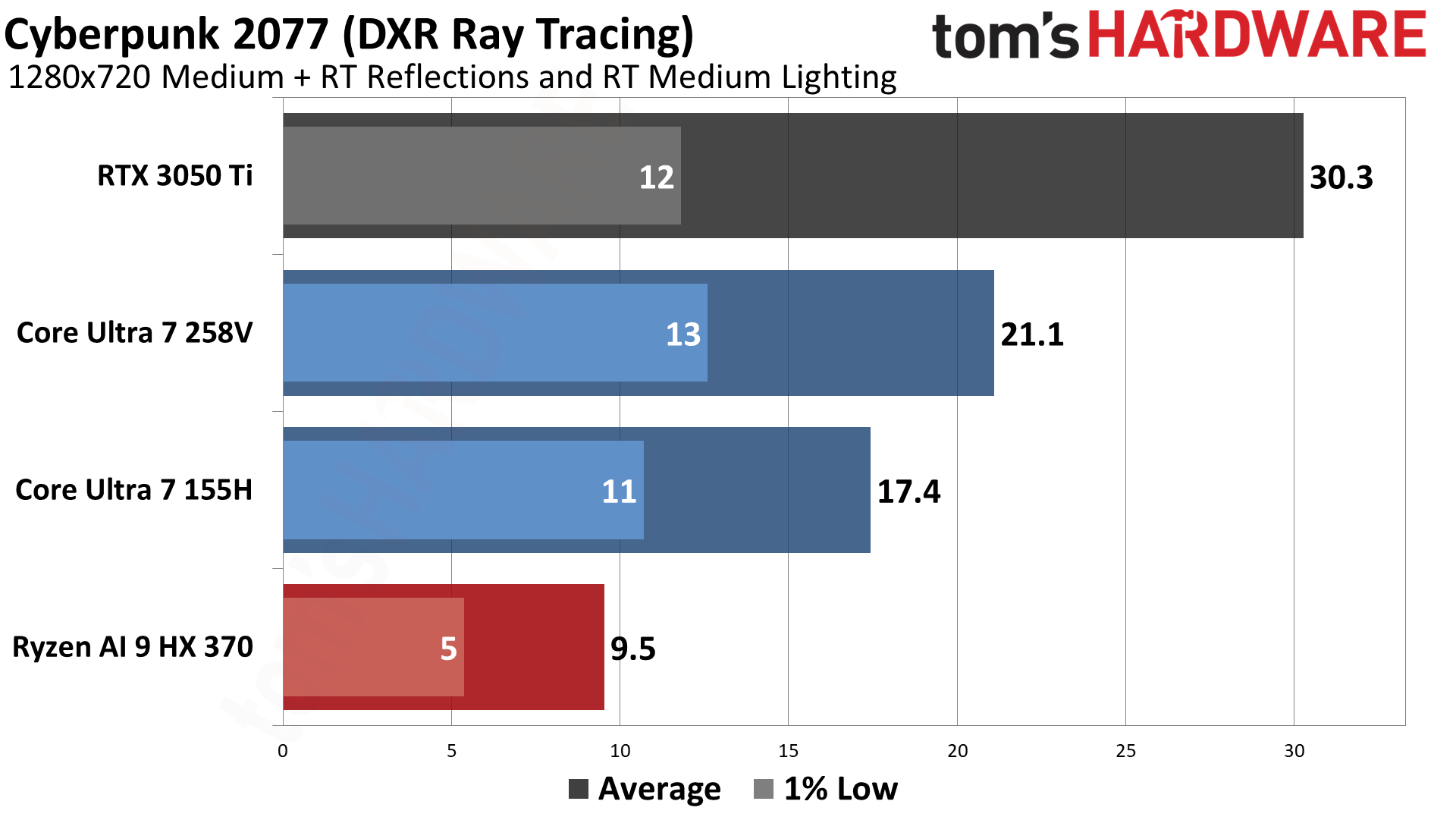

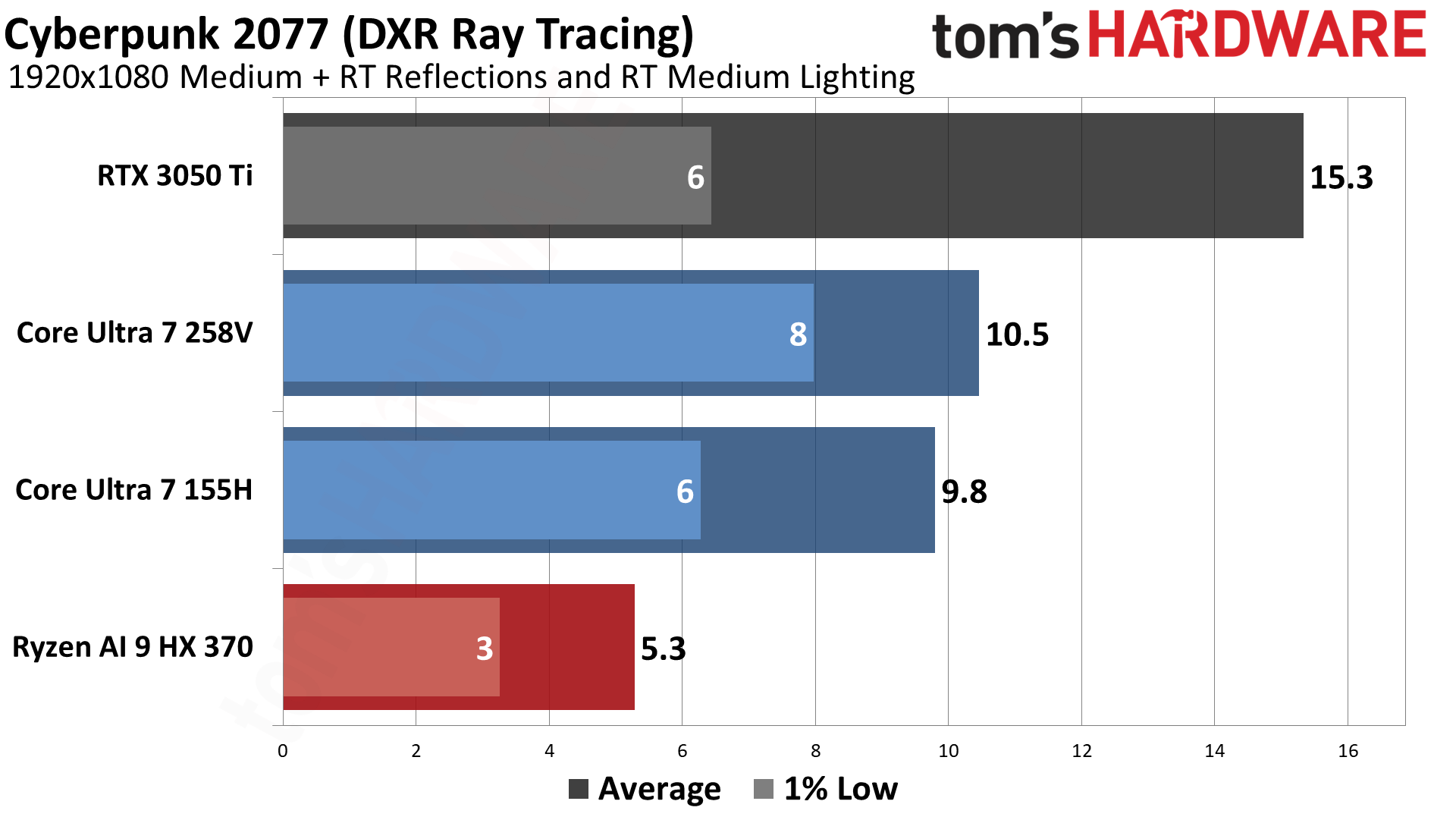

With ray tracing enabled, Arc 140V ends up as the fastest of the three iGPUs, but only with 21 FPS at 720p and 10.5 FPS at 1080p. AMD's 890M choked badly in our testing, failing to get into the double digits. It's one of the few cases (Control and Minecraft with DXR enabled being the others) where AMD's newer chip performed worse than Intel's Meteor Lake.

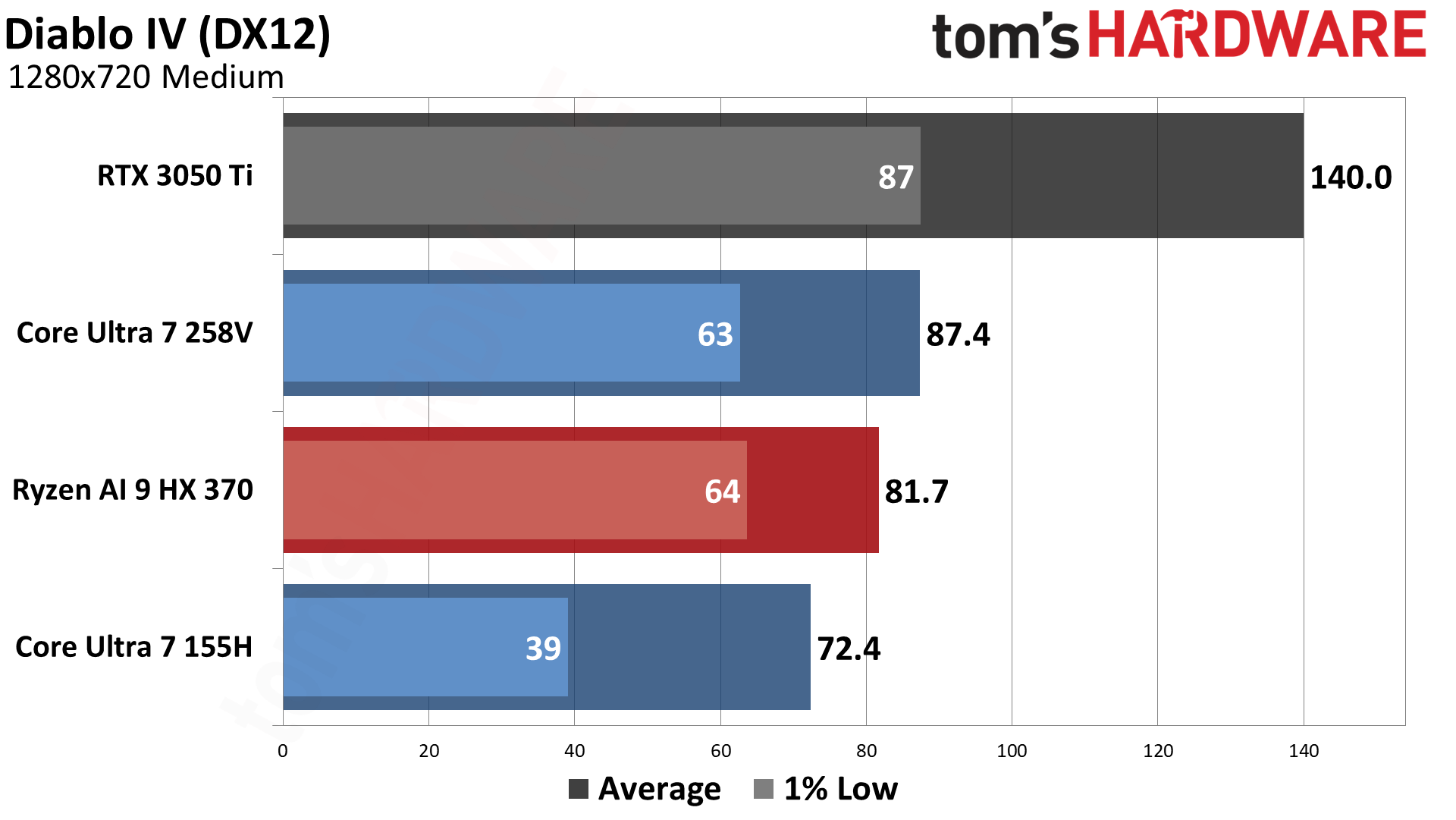

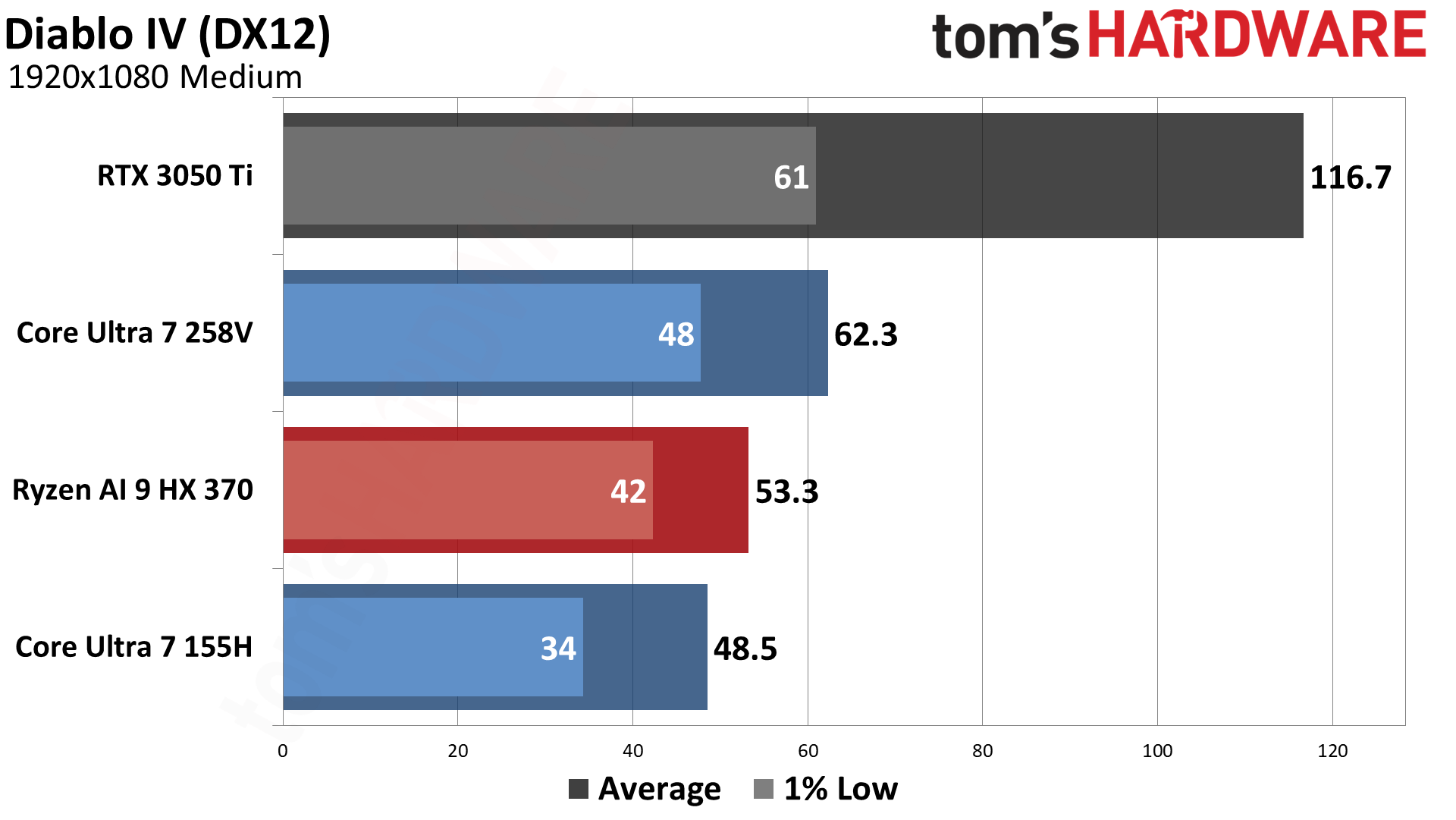

Diablo IV isn't remotely demanding, as evidenced by the three iGPUs all clearing 60 fps at 720p, and even managing 48 FPS or higher at 1080p medium. There are some stutters, however, as evidenced by the 1% lows. But the game is easily playable on any of the three iGPUs. Intel LNL gets another 7%/17% lead over AMD at 720p/1080p, and a 21%/28% lead over MTL.

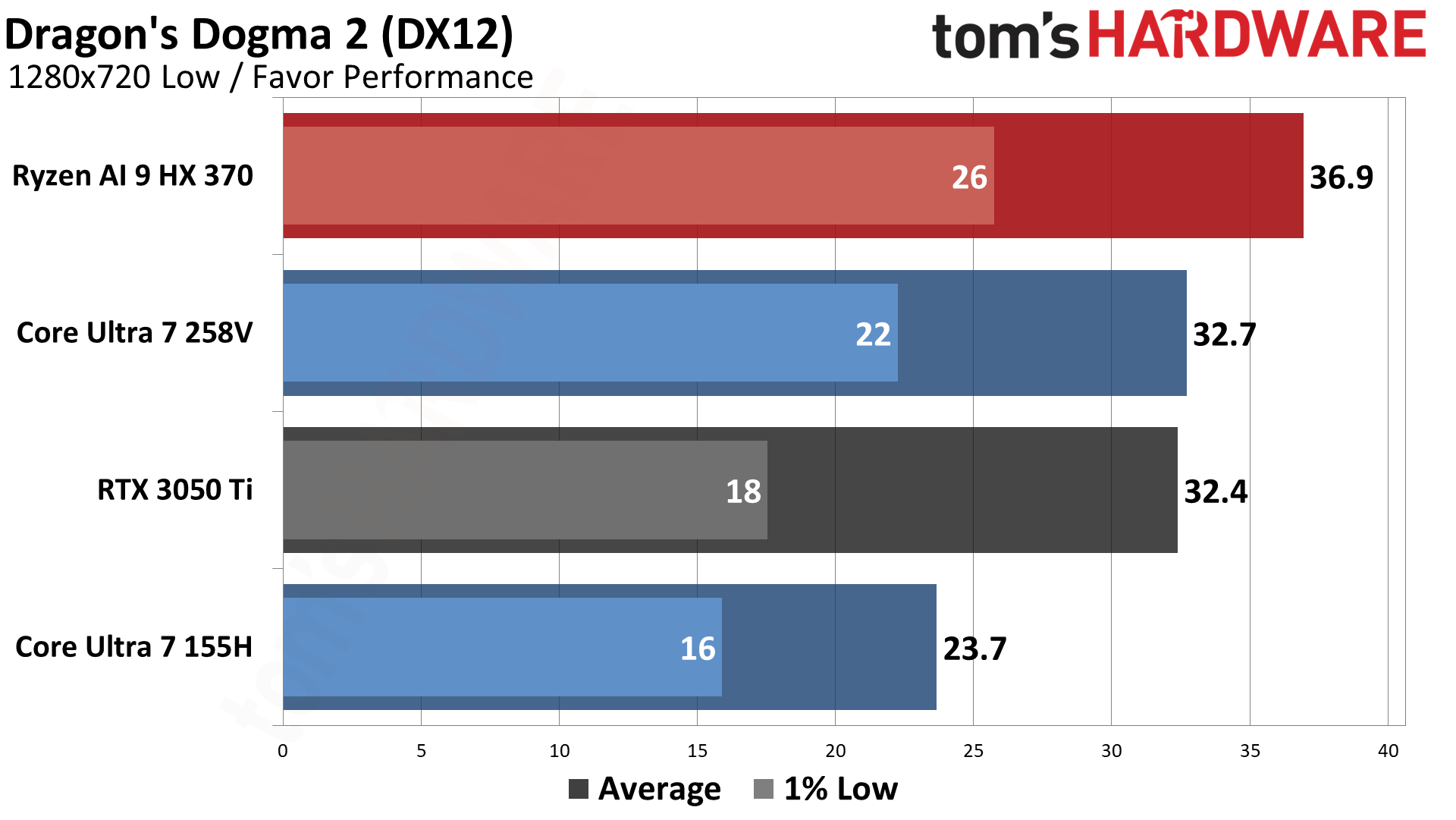

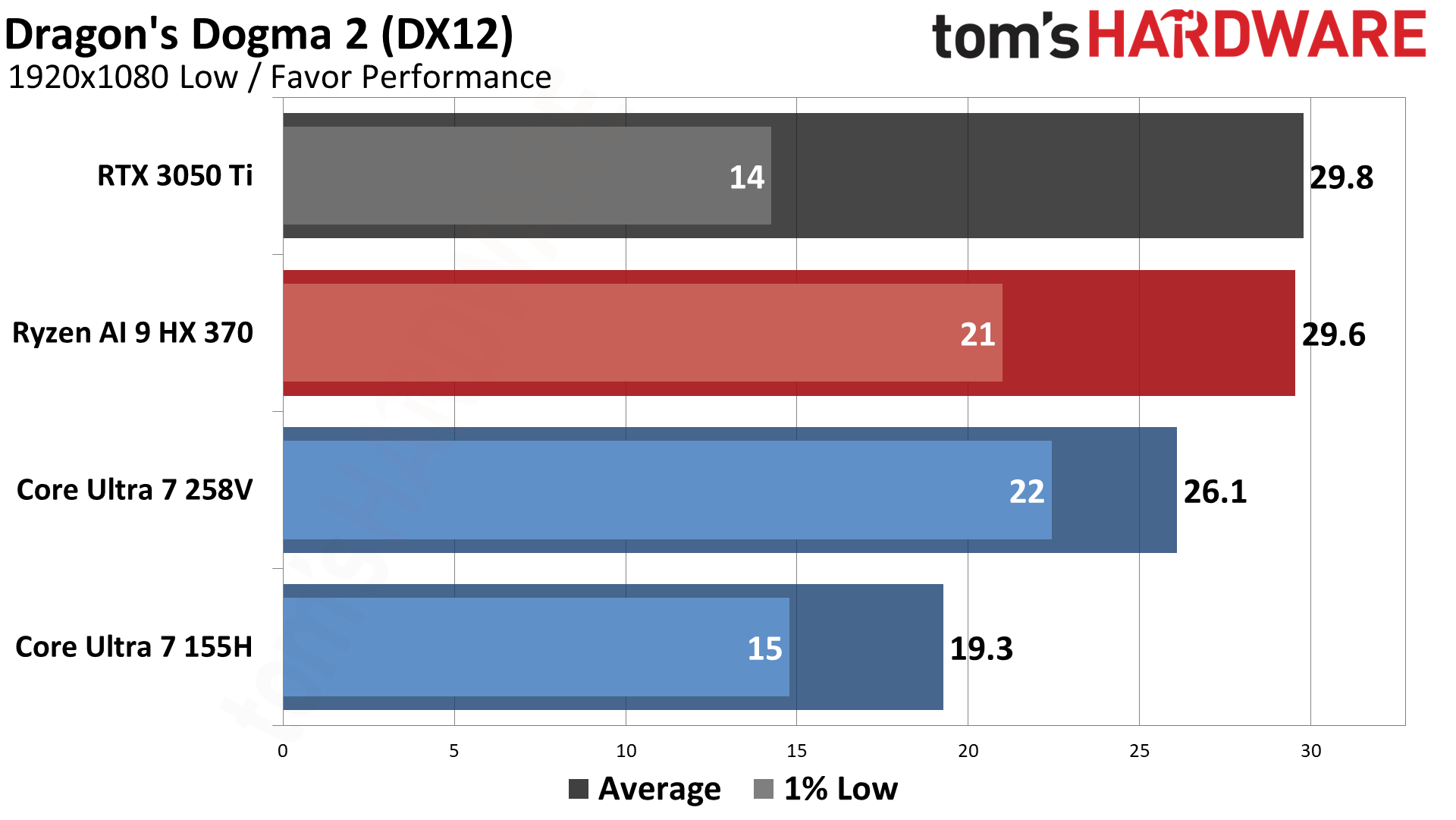

Dragon's Dogma 2 feels rather sluggish compared to many other games, and it doesn't even look that great. We ran around the city of Vernworth for our testing, and every GPU felt stuttery — including the RTX 3050 Ti. We can only assume that the game wants more than 4GB of VRAM, but in general it just feels pretty poorly optimized. AMD gets the win this time, with a 13% lead over Lunar Lake, which in turn beats Meteor Lake by 38%/35% (720p/1080p).

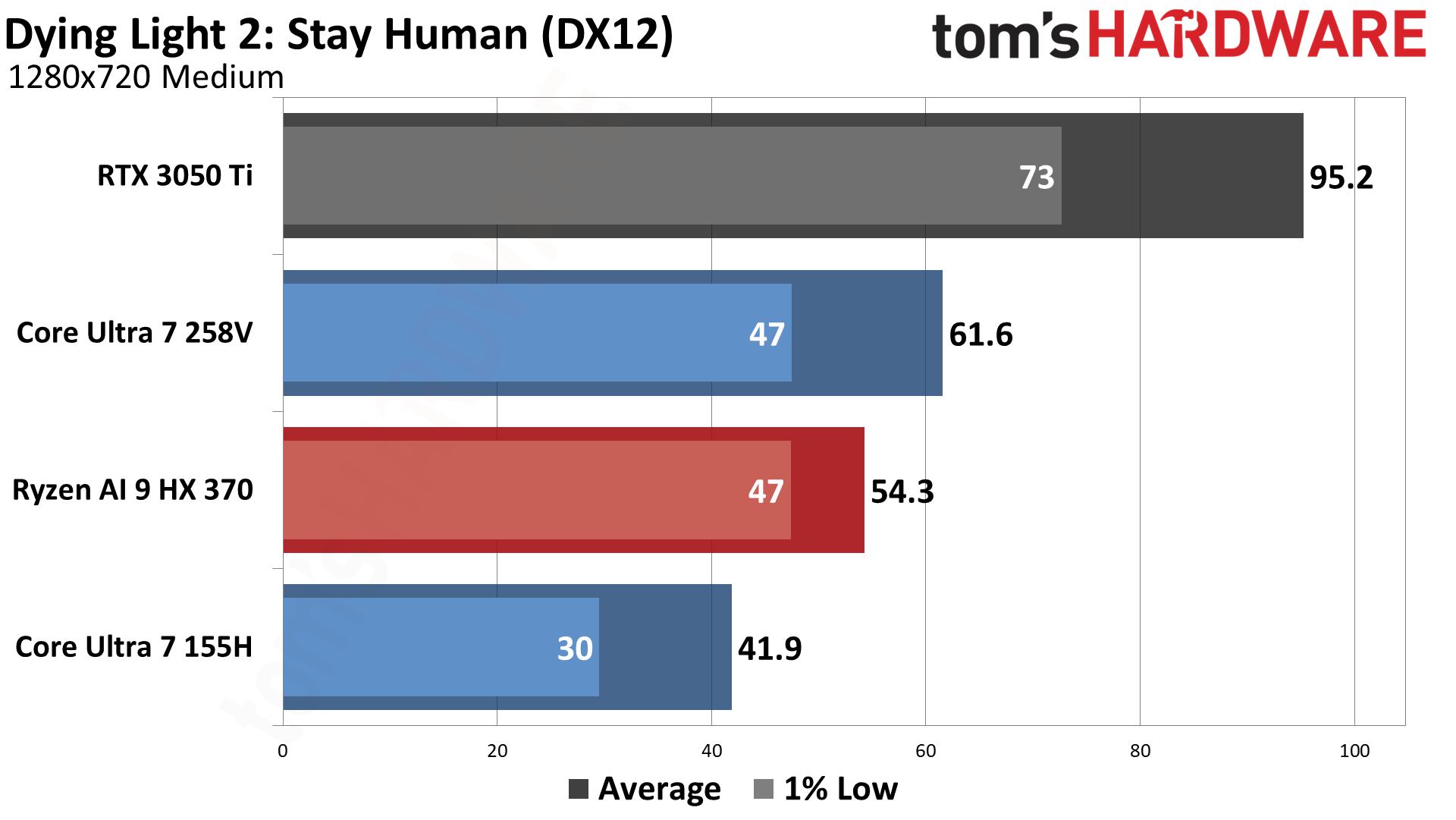

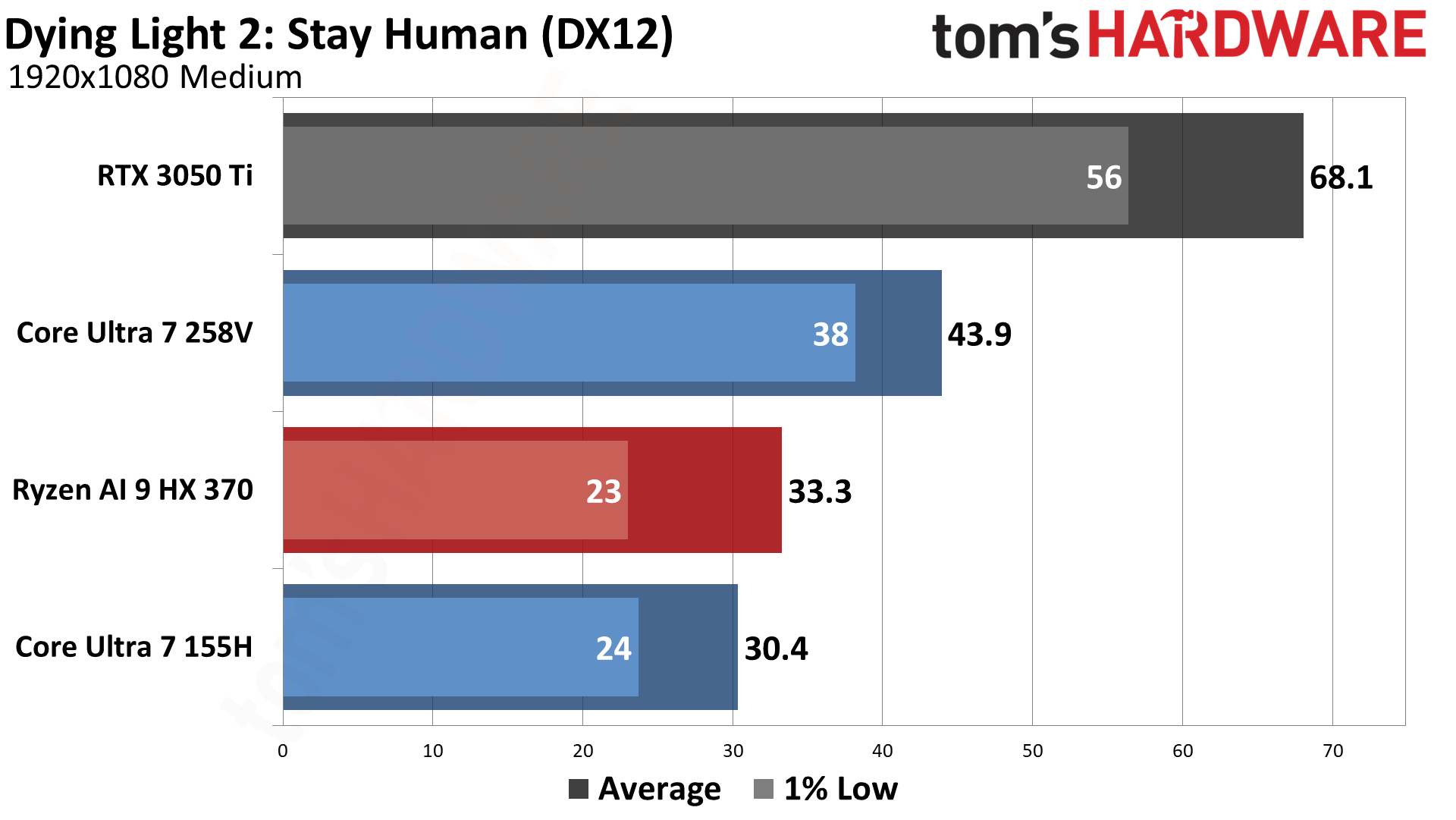

Dying Light 2 runs quite nicely on the three iGPUs and was playable even at 1080p medium on Lunar Lake — Meteor Lake was more borderline at that resolution. Intel gets the win again, leading by 14%/32% over AMD and 47%/45% over MTL.

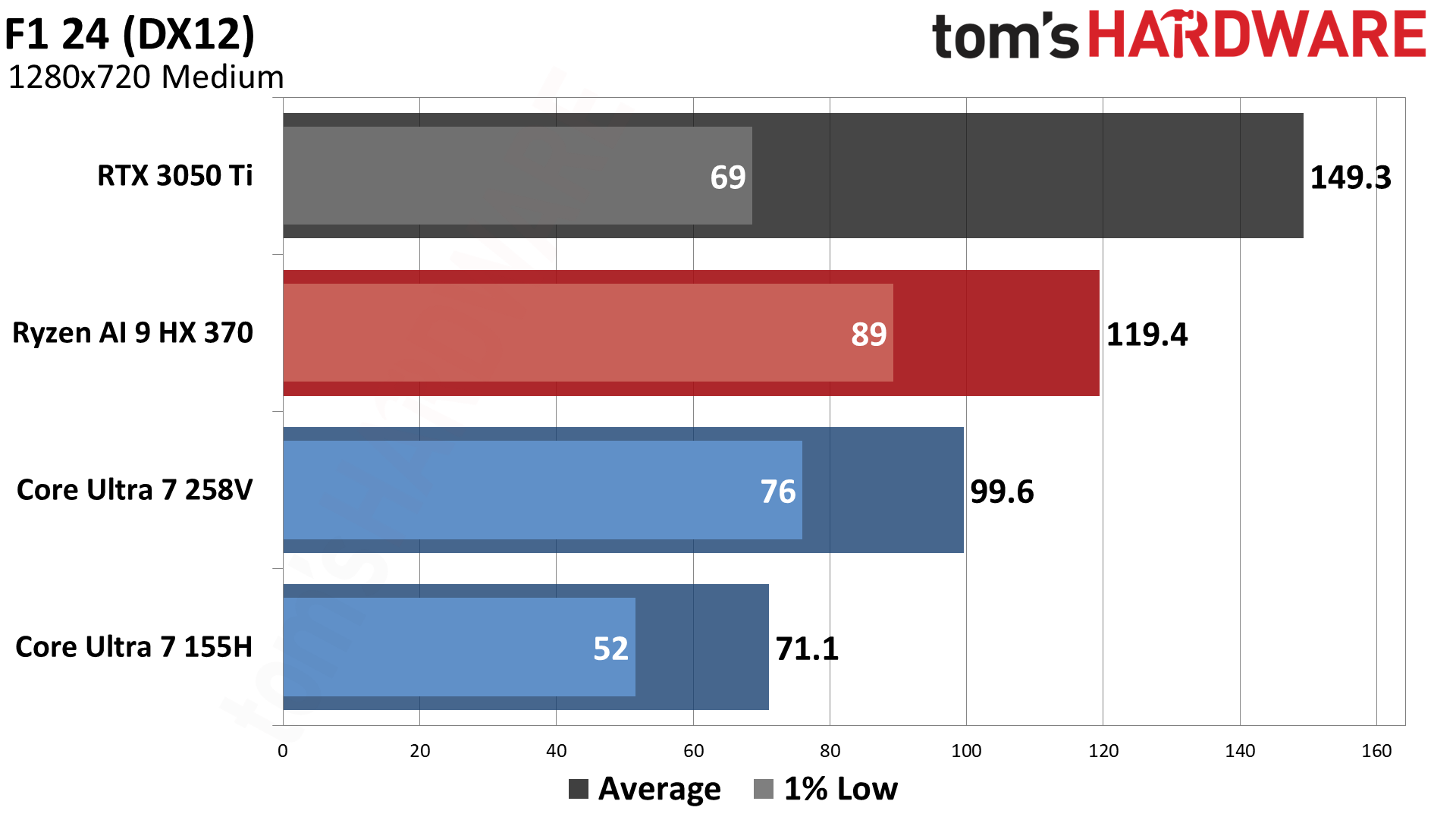

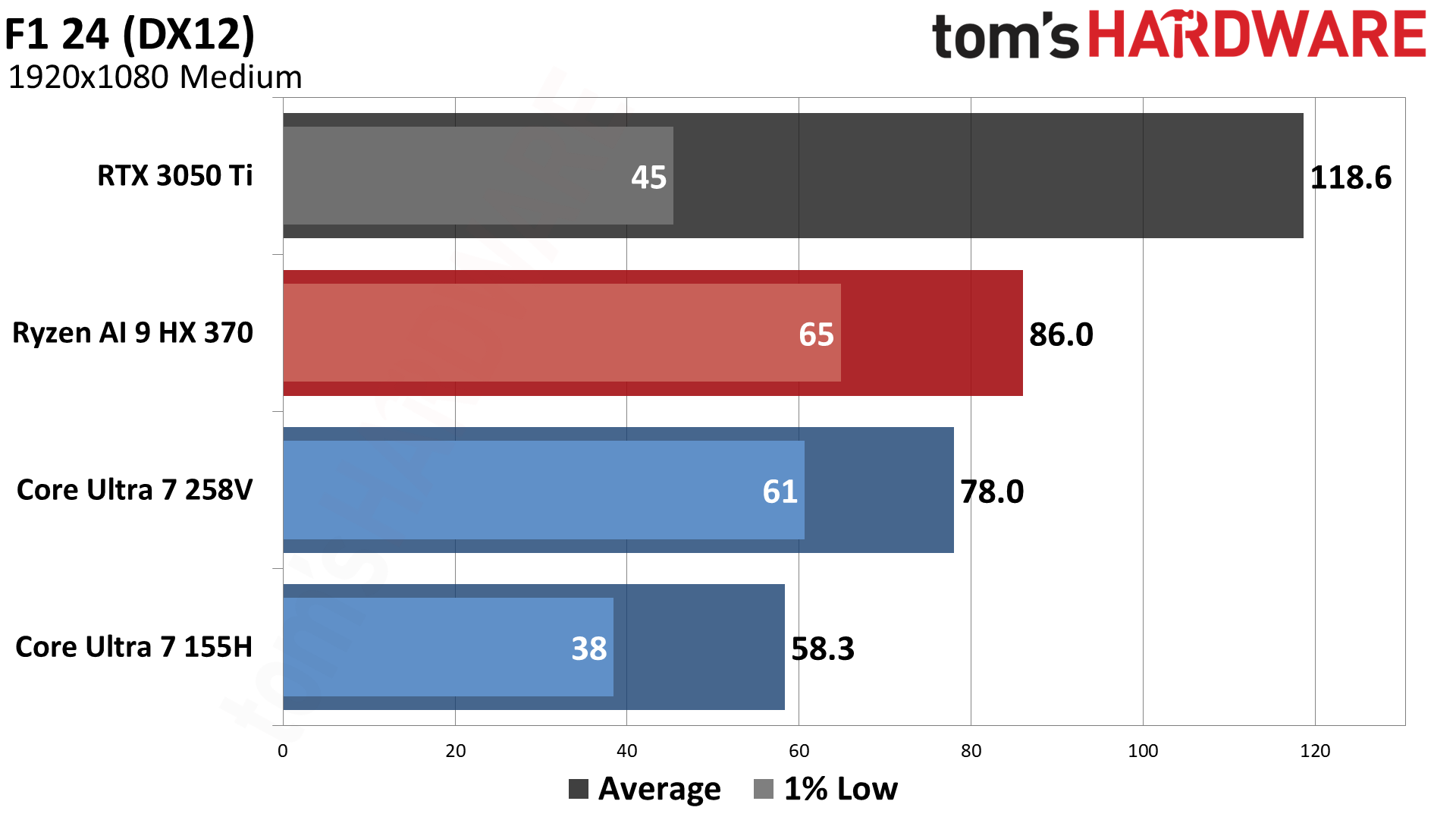

AMD takes the pole position with F1 24, beating Lunar Lake's Arc 140V by 20%/10%. Intel's newer iGPU is still 40%/34% faster than Meteor Lake, however.

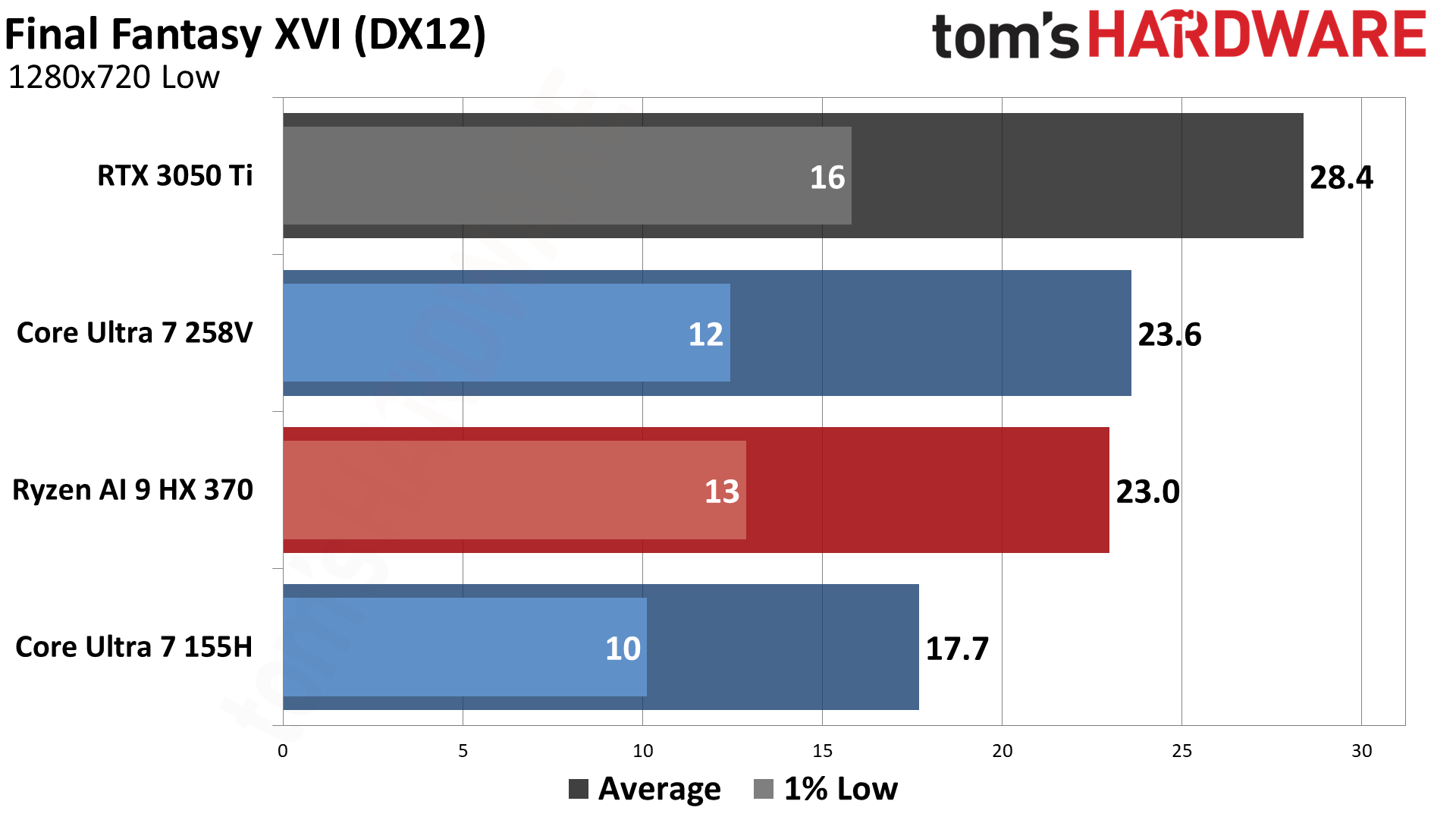

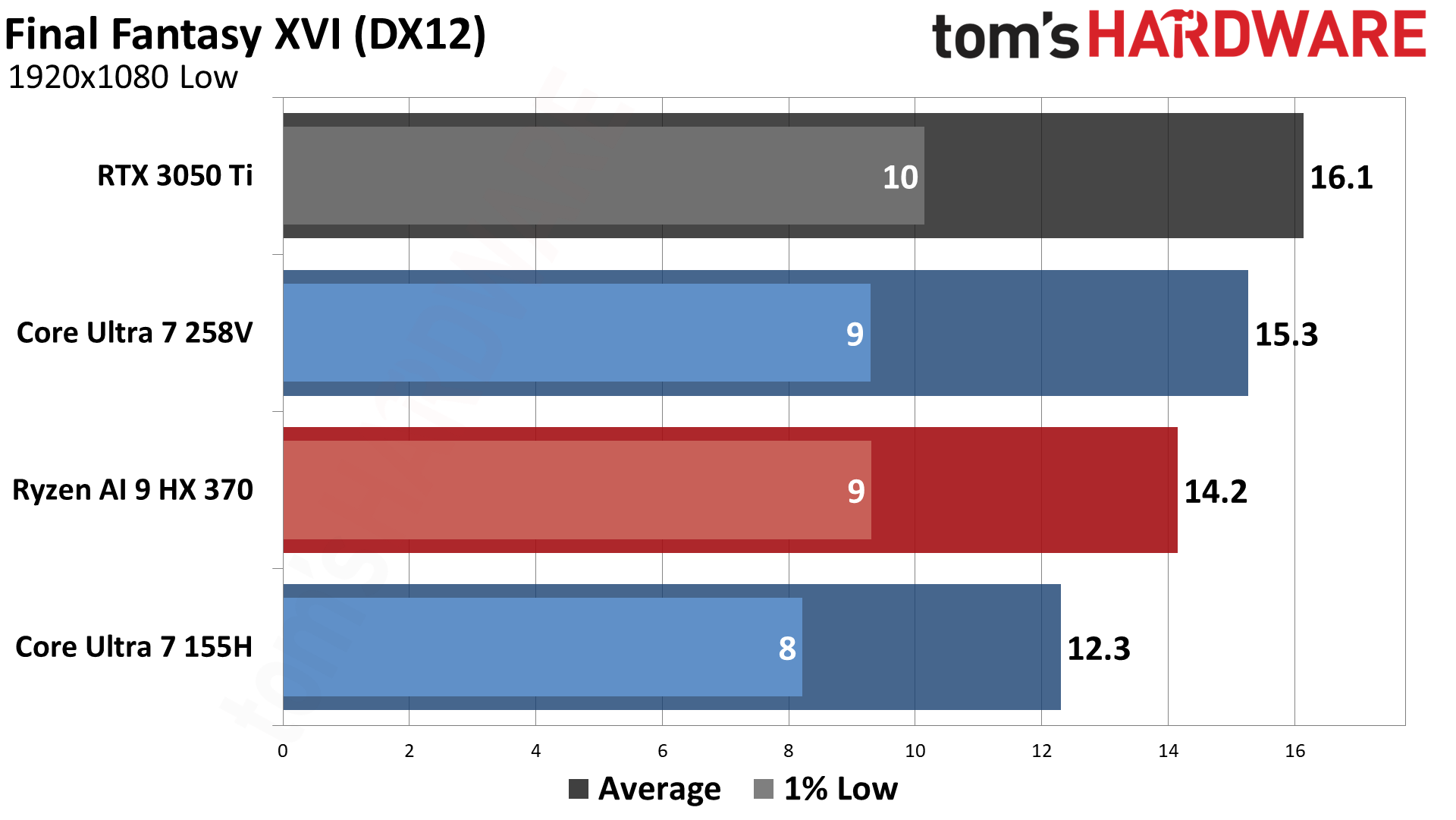

None of the iGPUs manages to run Final Fantasy XVI, using the default low preset, at anything approaching a playable level. Even the RTX 3050 Ti struggles, with minimums regularly falling below 20 fps at 720p. At 1080p, the fastest of the four laptops (Dell G15) only gets 16 FPS, while the slowest (MTL) gets 12 FPS. As we noted in our desktop testing of Final Fantasy XVI, the game feels extremely poorly optimized.

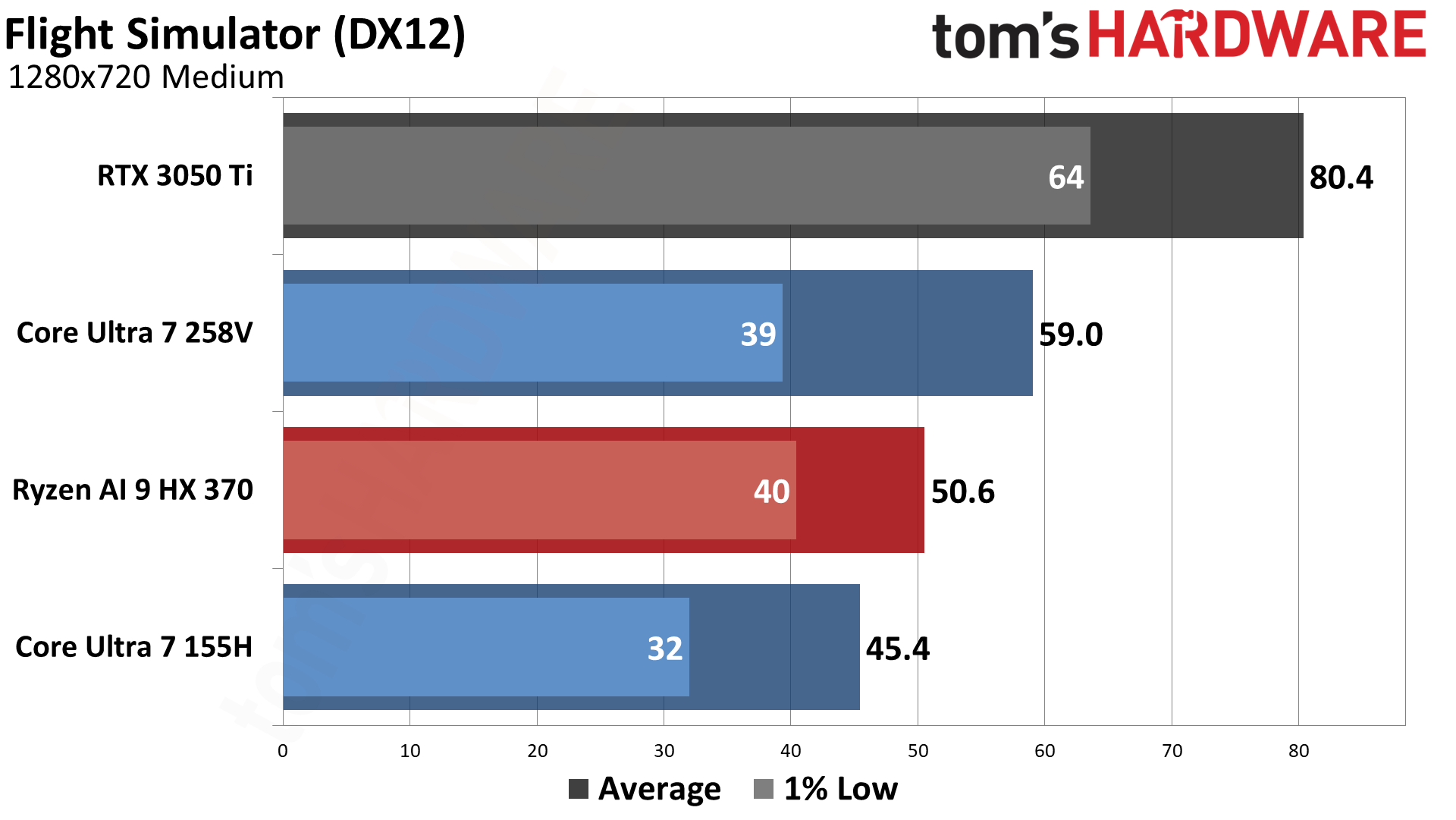

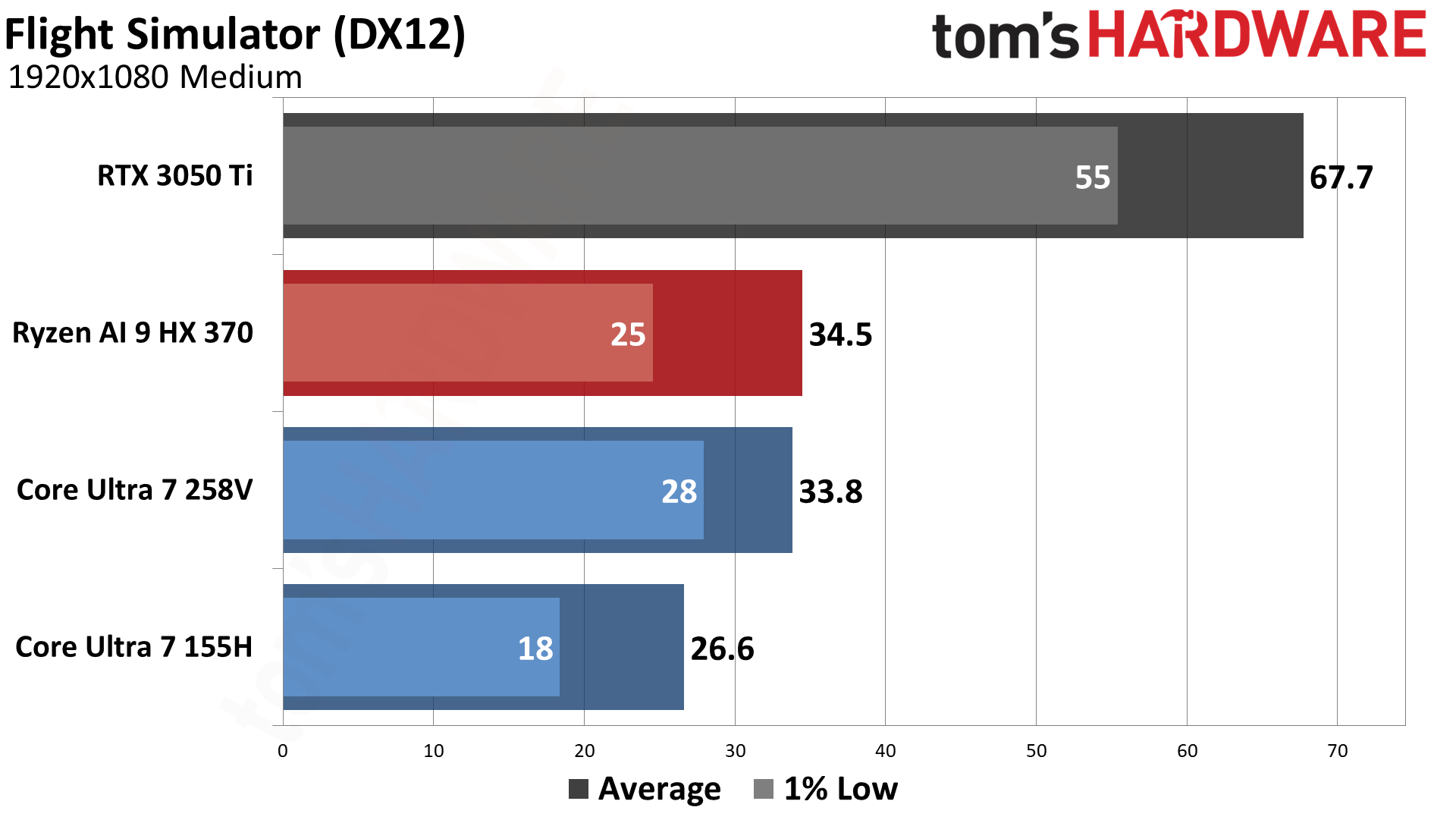

Microsoft Flight Simulator 2020 benefits from both high CPU as well as higher GPU performance, and despite only having eight cores, Lunar Lake delivers good performance overall. It's 17% faster than the Radeon 890M at 720p, but 2% slower at 1080p. Gen over gen, Lunar Lake beats Meteor Lake by 30% / 27%.

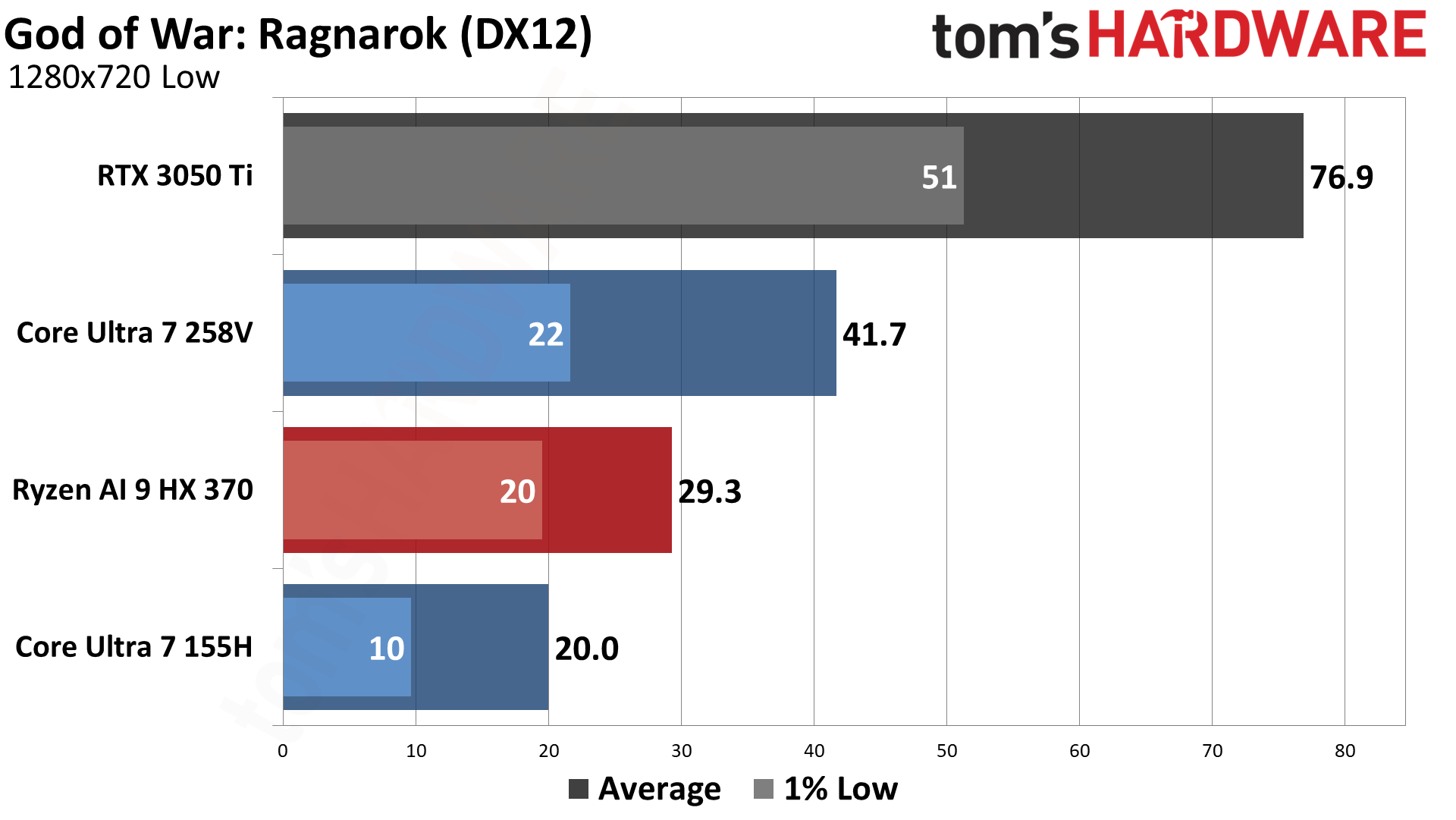

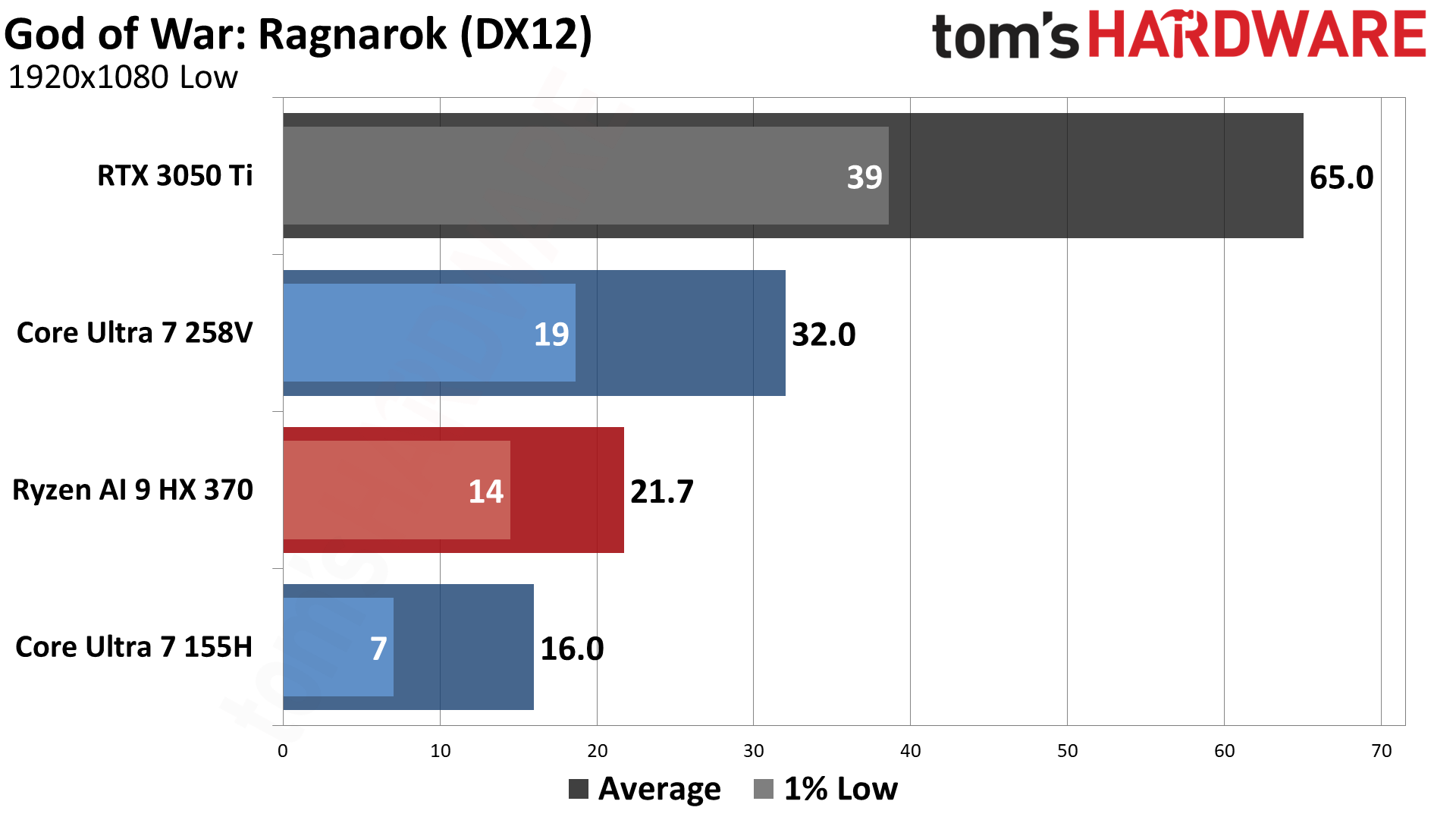

God of War: Ragnarok is another game where all three iGPUs have a difficult time, even at 720p using minimum (low) settings. The Arc 140V averages 42 FPS, but minimums fall into the low 20s, and it feels quite stuttery. Still, Intel gets the win over AMD by a relatively large 42% / 47% — we'd wager AMD needs to do some driver tuning here. Looking at the previous generation, Intel sees just over double the performance.

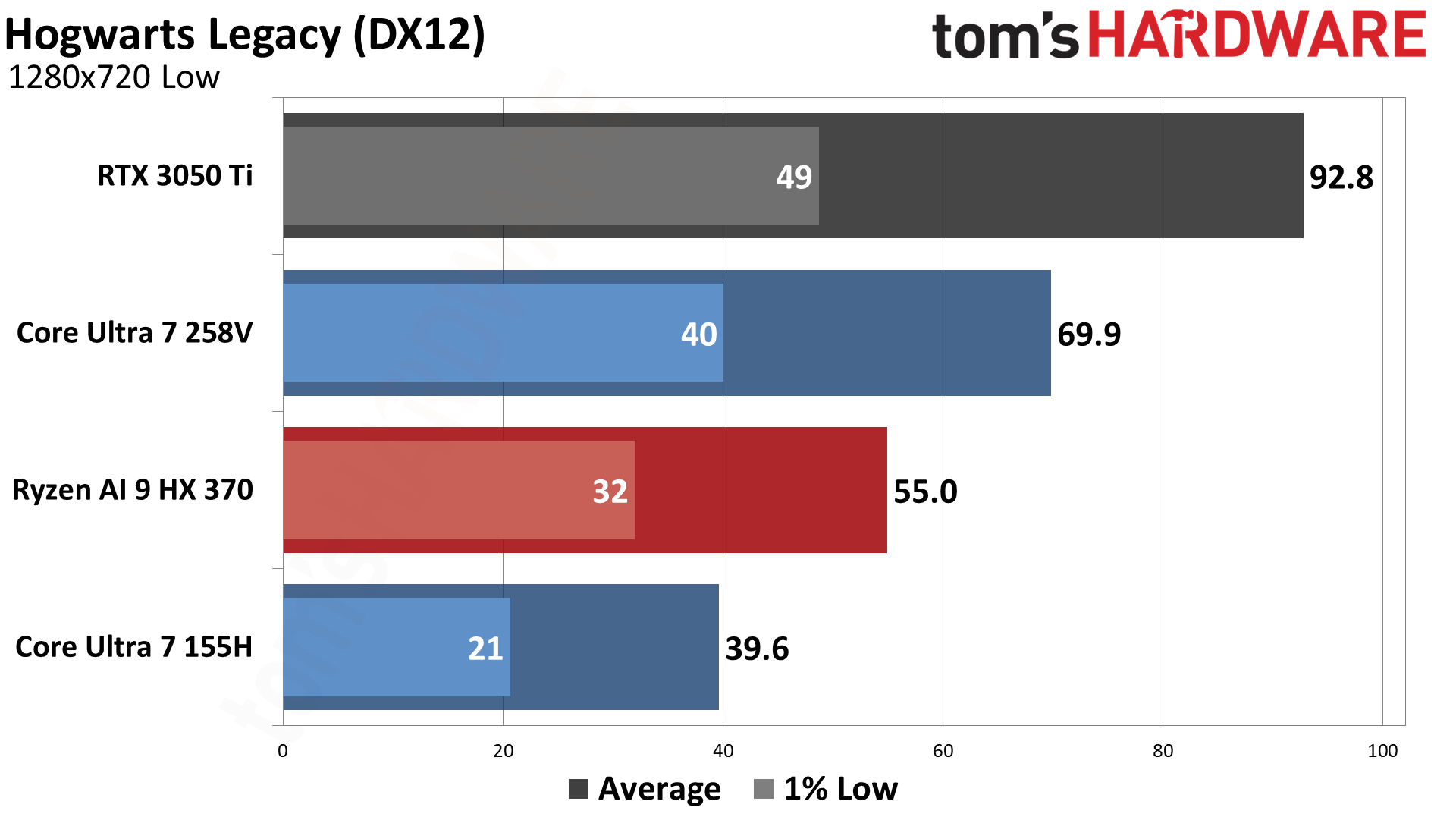

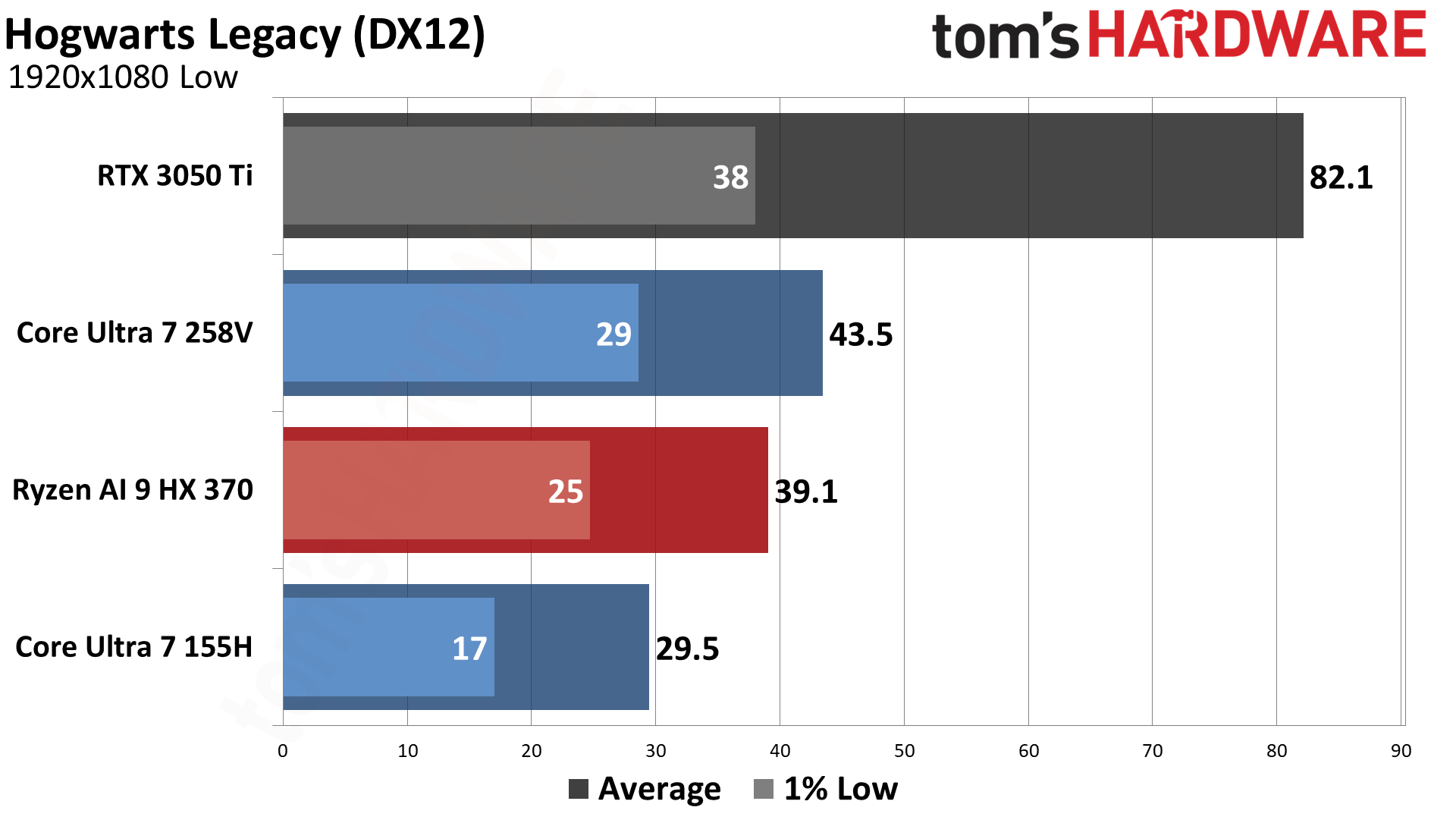

Hogwarts Legacy also has the occasional stutter, though this seems more endemic to the game engine than the GPU. Each system would 'stall' noticeably at a certain point in our run through the town of Hogsmeade, and we've seen the same thing with desktop GPUs. Intel's new mobile CPU gets top honors again, though, with a 27% / 11% lead over AMD's finest, and a 76% / 47% lead over the prior gen 155H.

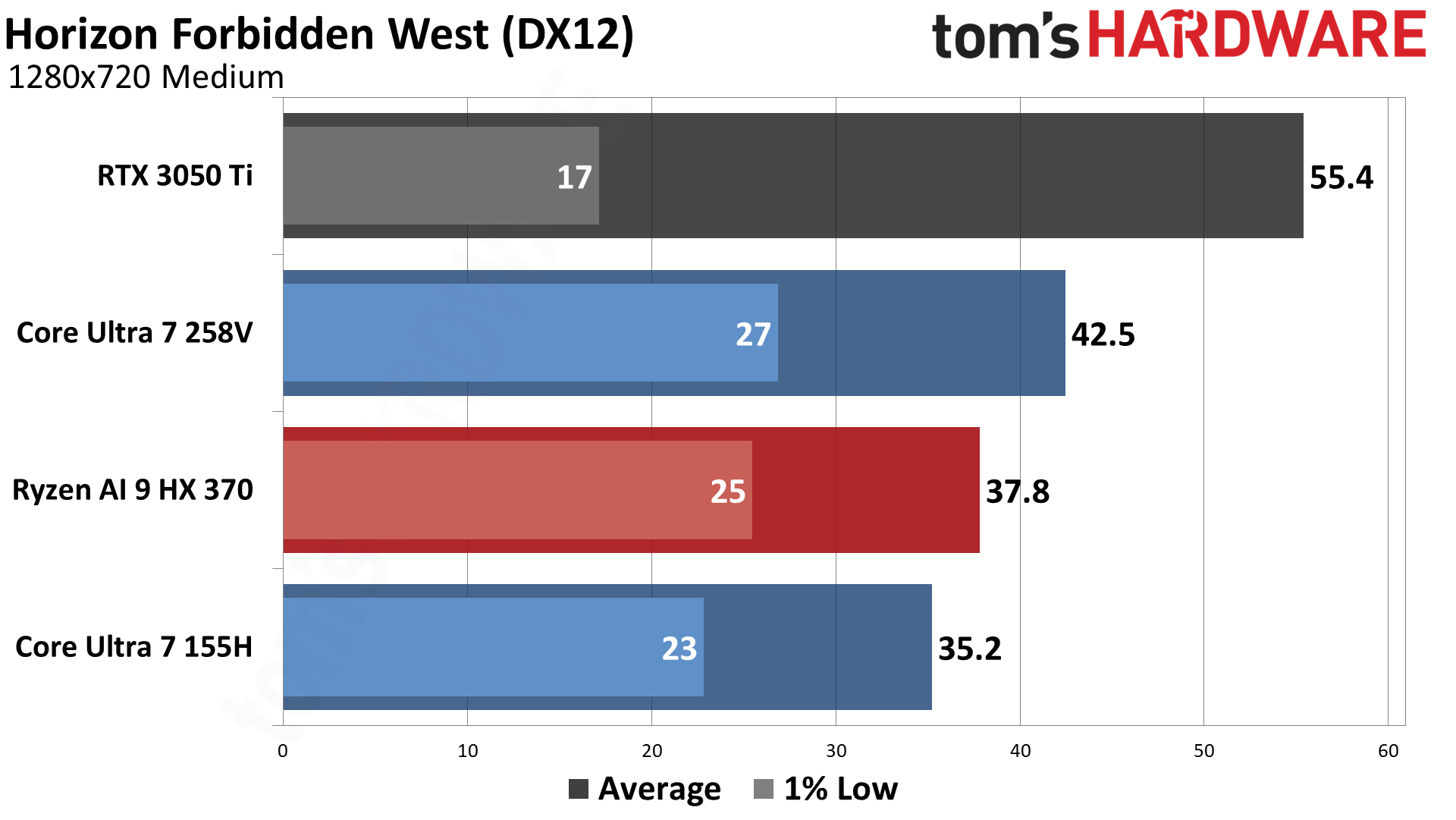

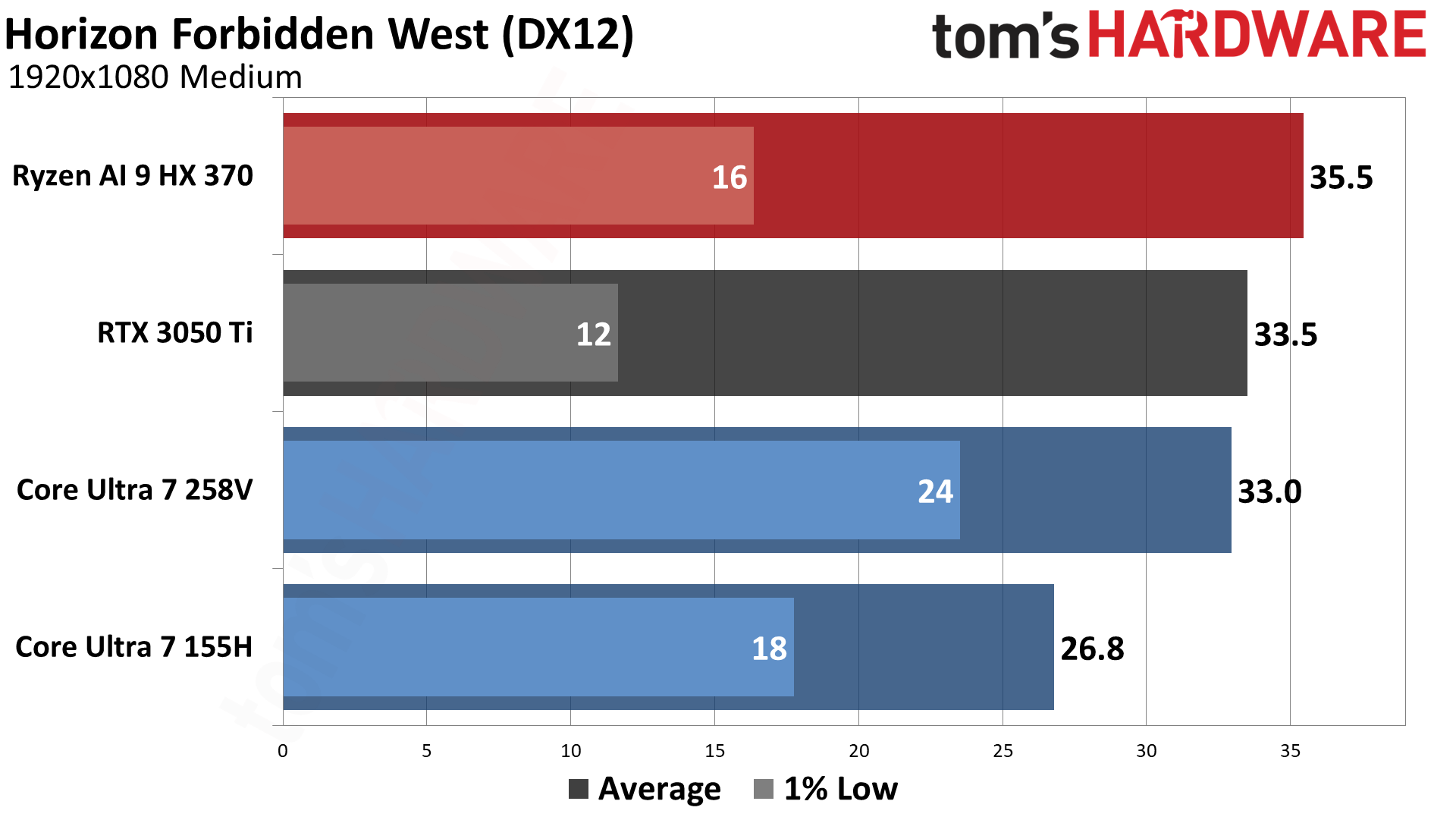

Whether or not you think Horizon Forbidden West looks better than its predecessor, it's pretty demanding of the GPU, with minimums on all of our test laptops falling below 30 FPS. Arc Graphics 140V in the 258V comes out in first among iGPUs at 720p, leading AMD by 12%, but then it falls behind by 7% at 1080p. Gen on gen, Intel sees a 21% / 23% lead over Meteor Lake.

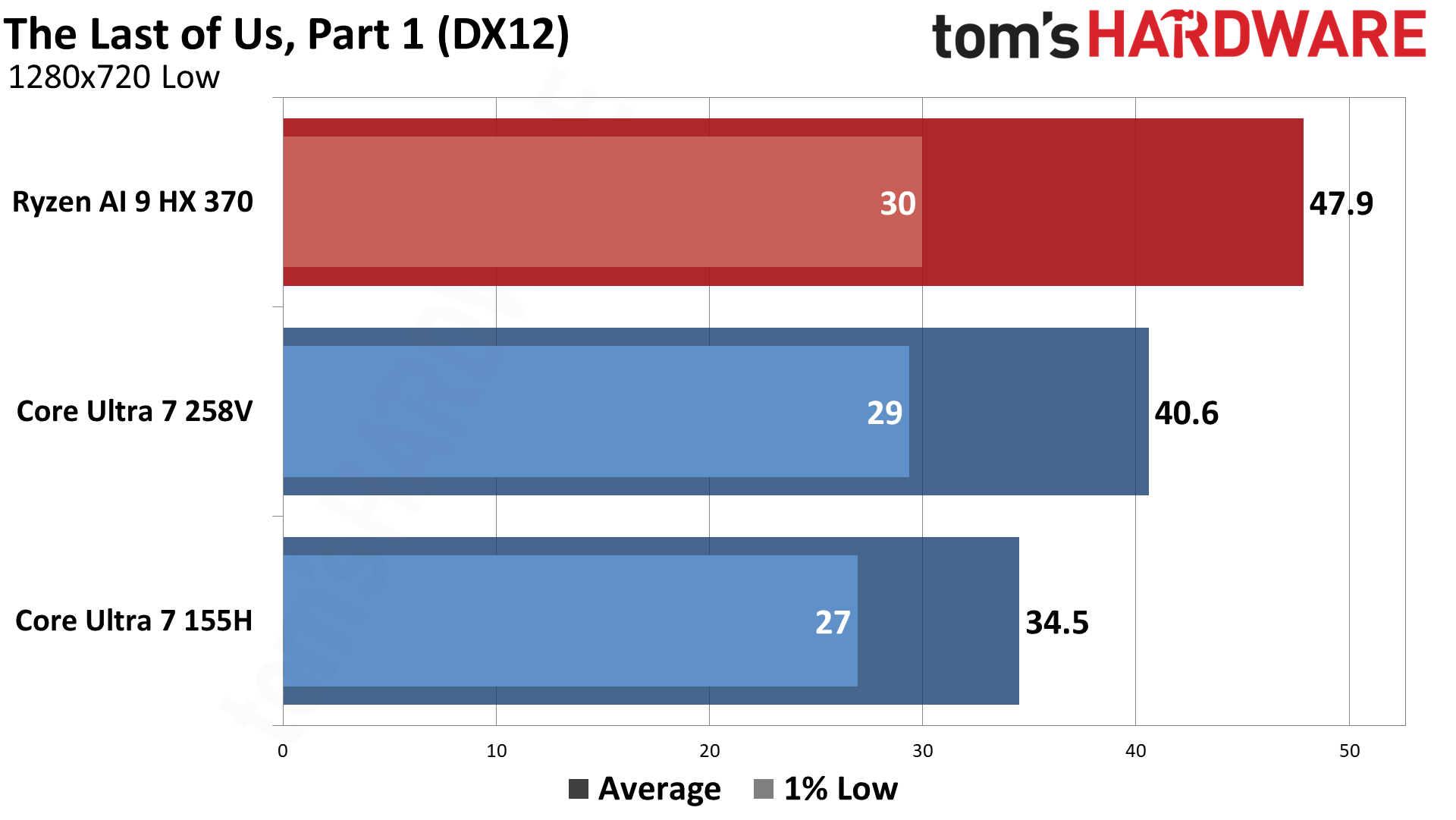

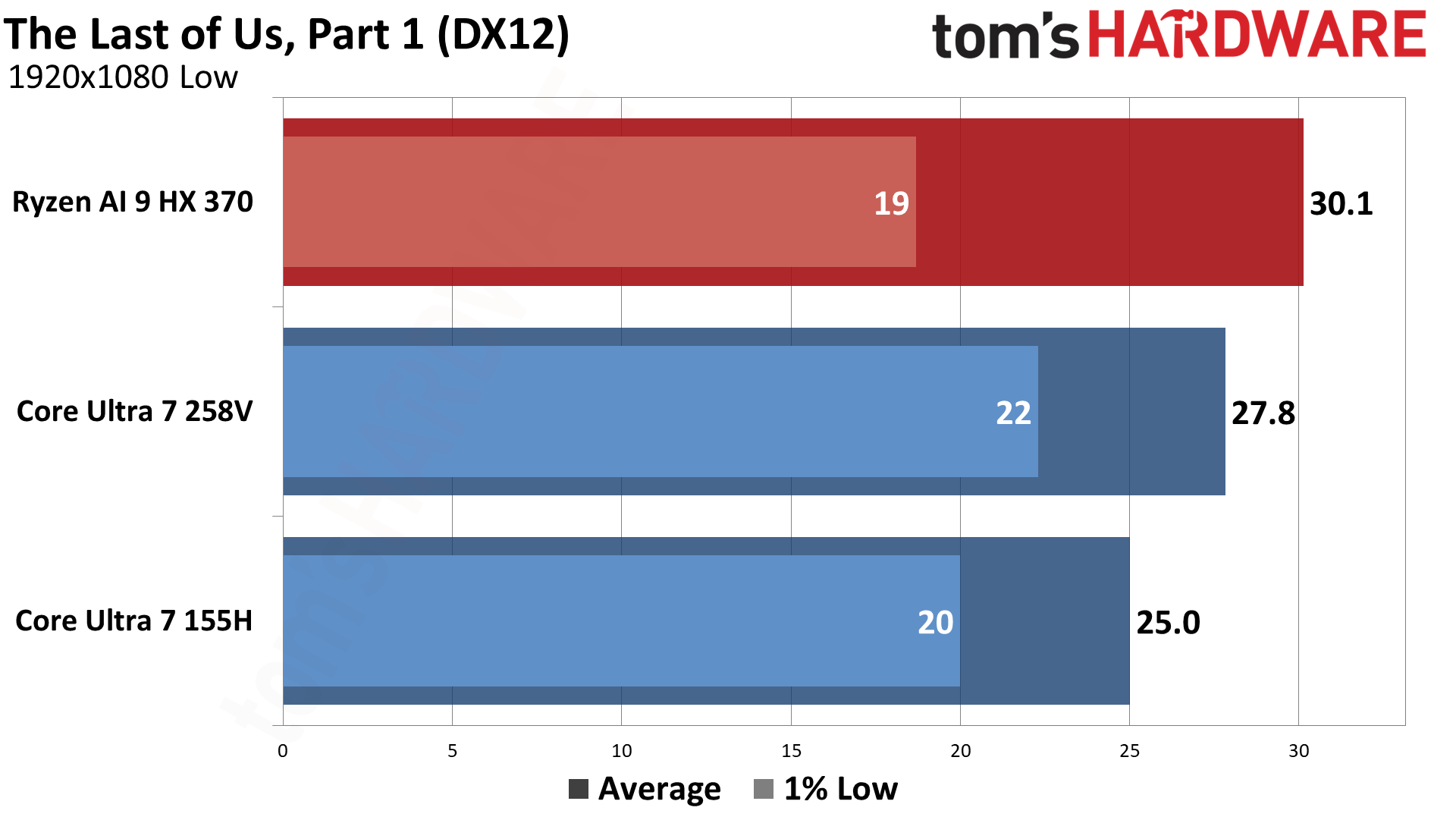

The Last of Us, Part 1, refused to run on the RTX 3050 Ti laptop, showing an "insufficient memory" error. We're not sure if that's purely from the 4GB of VRAM, or if it's the combination of VRAM and system RAM. As an AMD promoted game, it's no surprise to see the 890M leading Intel's iGPU by 18% / 8%. Intel only sees an 18%/11% lead over its prior generation part as well, one of the smaller margins.

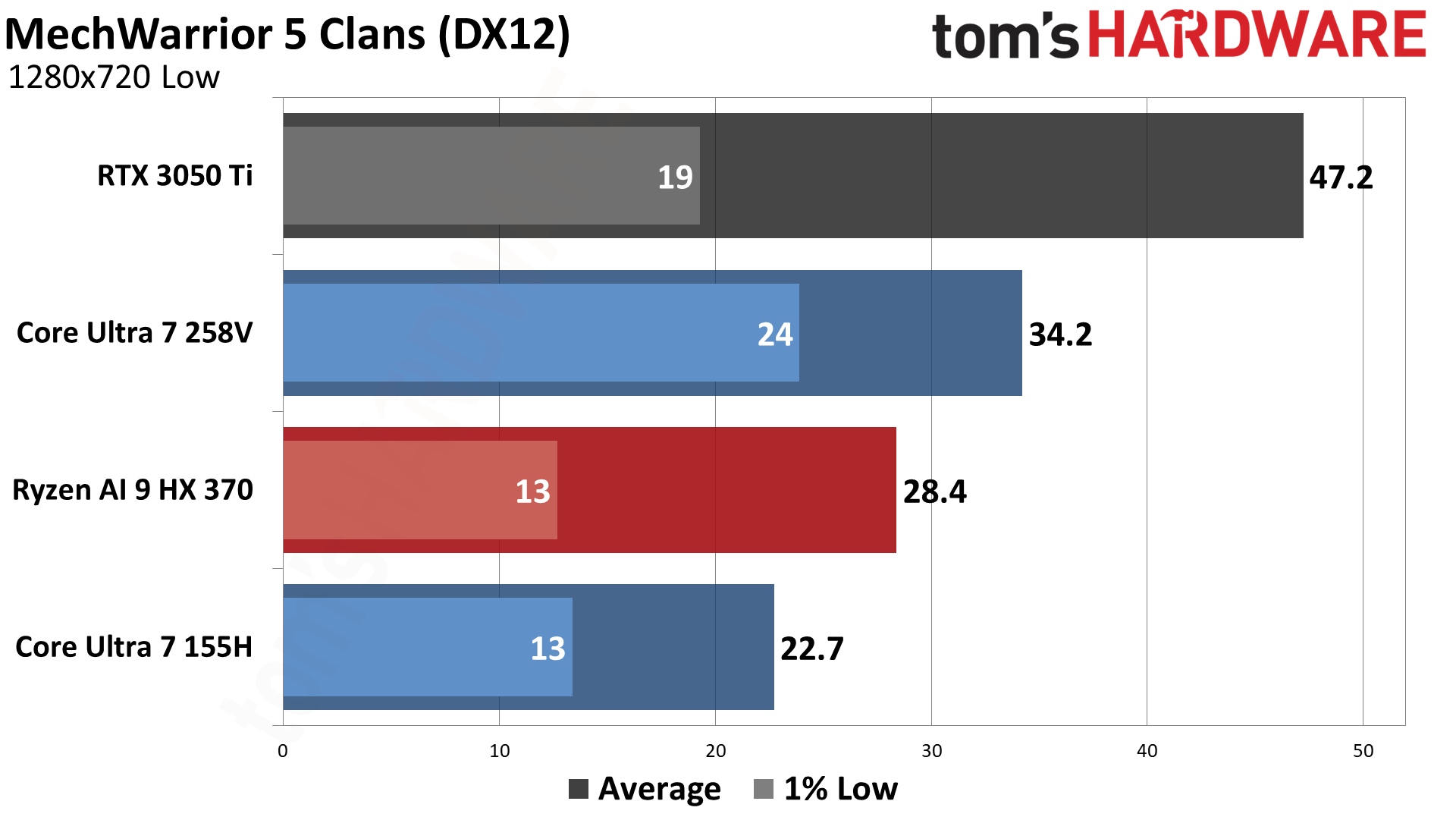

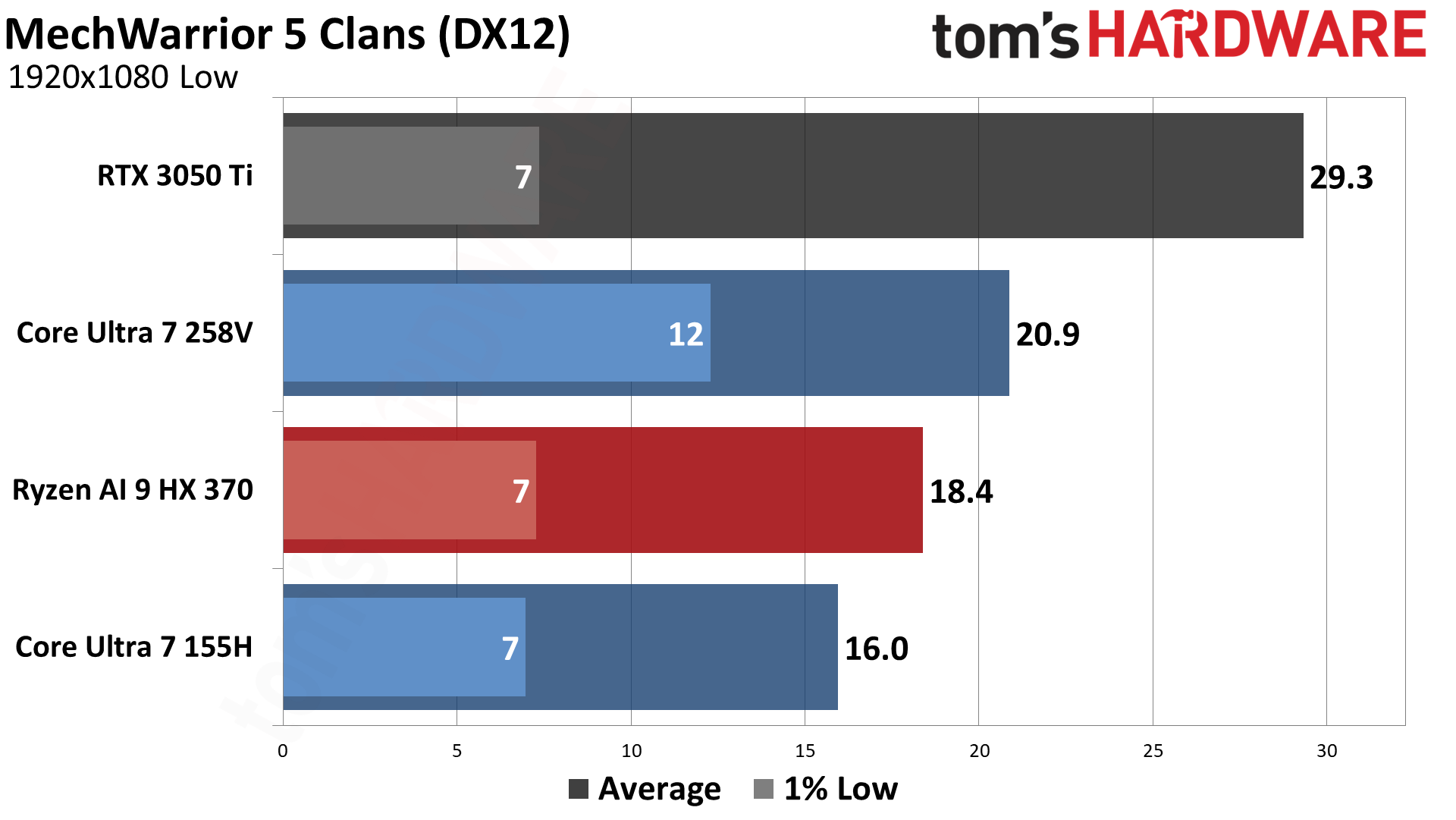

MechWarrior 5 Clans uses Unreal Engine 5, and even at minimum settings, it's rough on lower tier GPUs. The RTX 3050 Ti has decent average FPS of 47, but minimums fall below 20 FPS with clear hitching and stuttering present. The 258V is manageable at 720p, beating AMD's part by 20% and the 155H by 51%. Moving up to 1080p, none of the laptops were really playable, though Lunar Lake still shows a 14% advantage over AMD and a 31% lead over Meteor Lake.

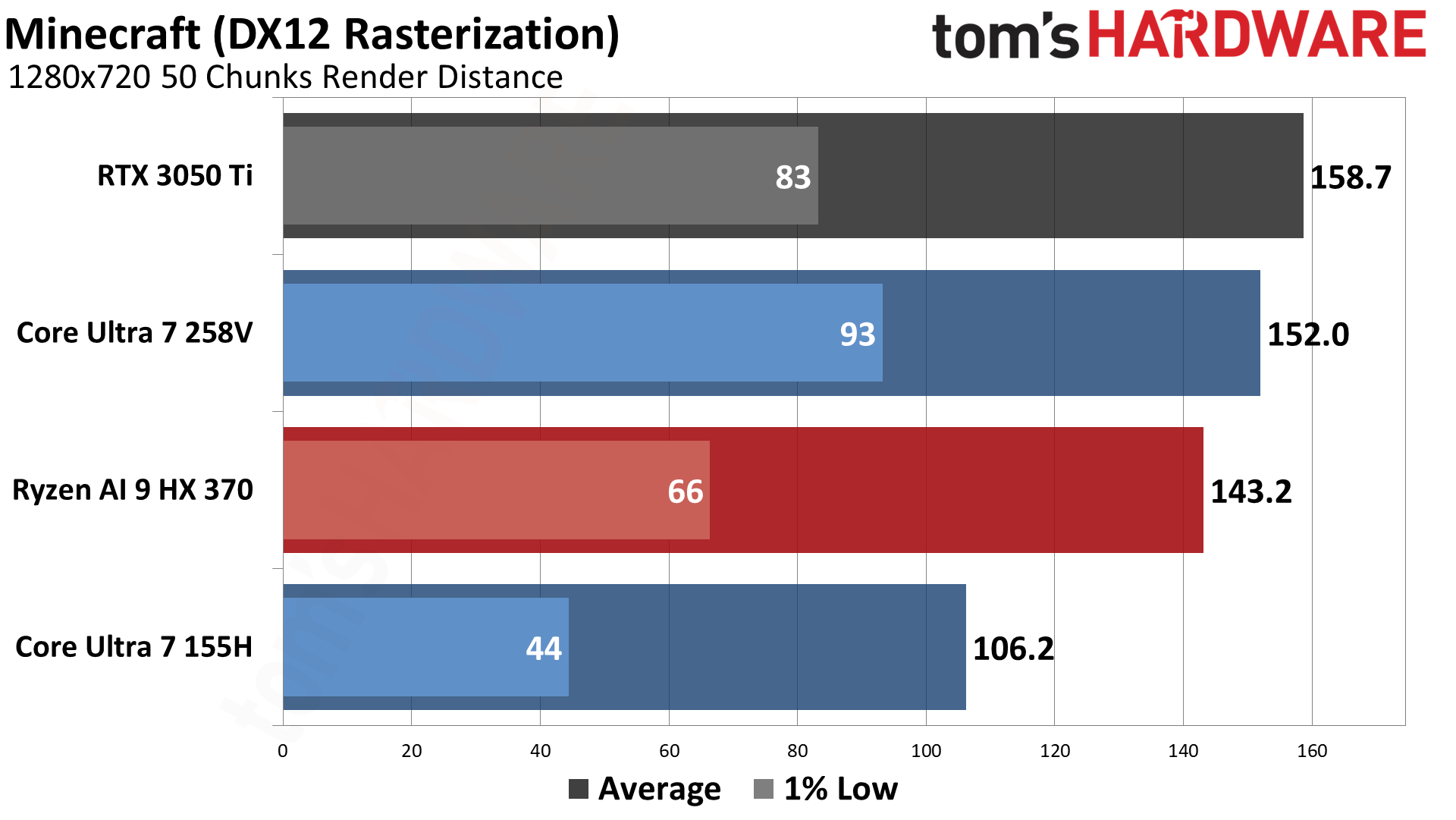

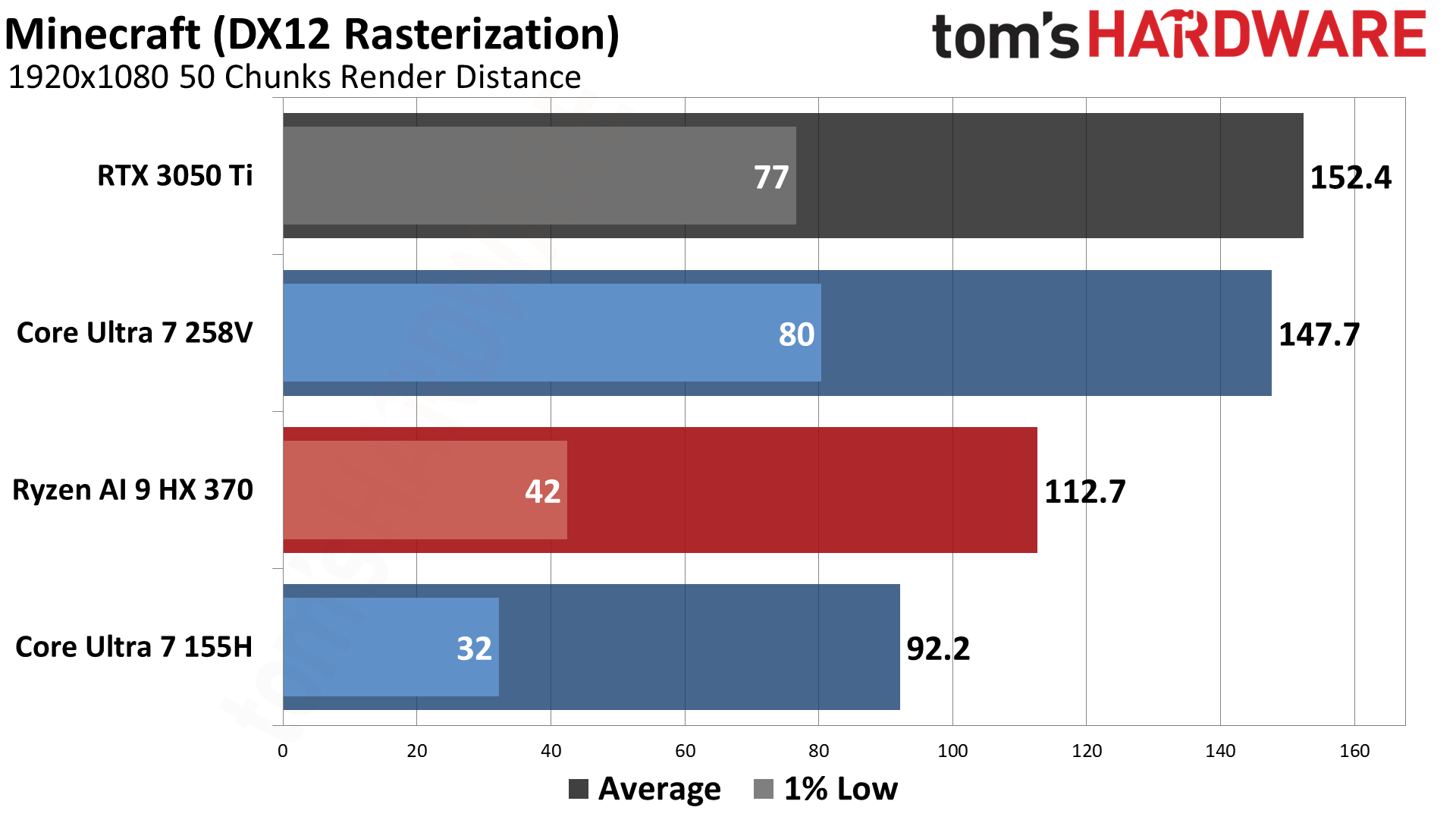

Without ray tracing turned on, Minecraft can run on pretty much anything and everything. It ends up CPU limited with the RTX 3050 Ti only drawing around 22W and with similar 720p and 1080p performance. It seems to be more single-threaded overall, which is why Lunar Lake ends up being the fastest iGPU at 1080p, with 31% higher FPS than Strix Point and a 60% gen-on-gen improvement over MTL.

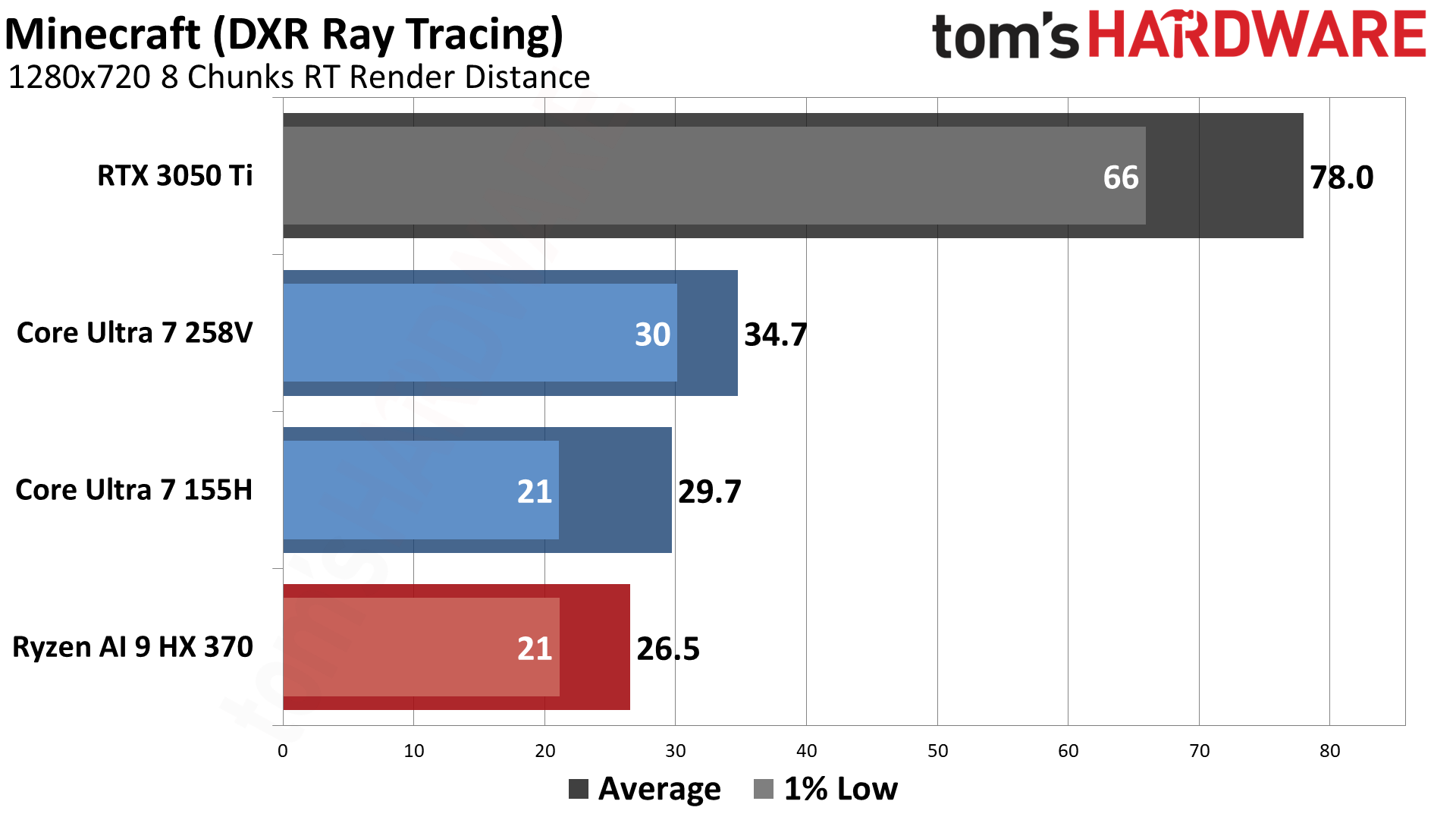

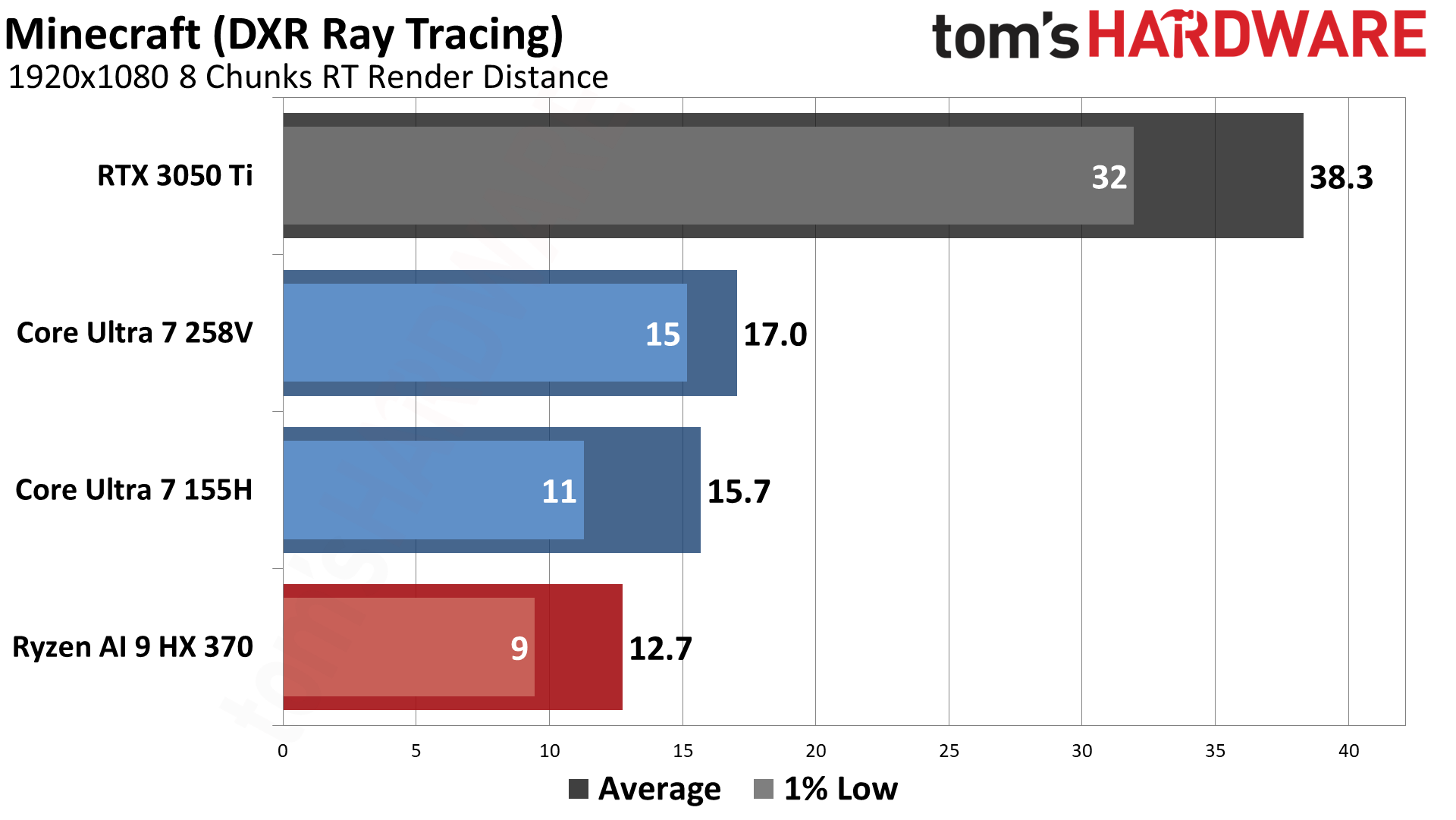

Turning on ray tracing causes a massive hit to performance, and the 3050 Ti power use jumps to 87W. LNL ends up 31% / 34% faster than AMD's Strix Point, and 17% / 9% faster than MTL. It almost looks like Minecraft with DXR enabled on the Intel iGPUs ends up largely limited by memory bandwidth.

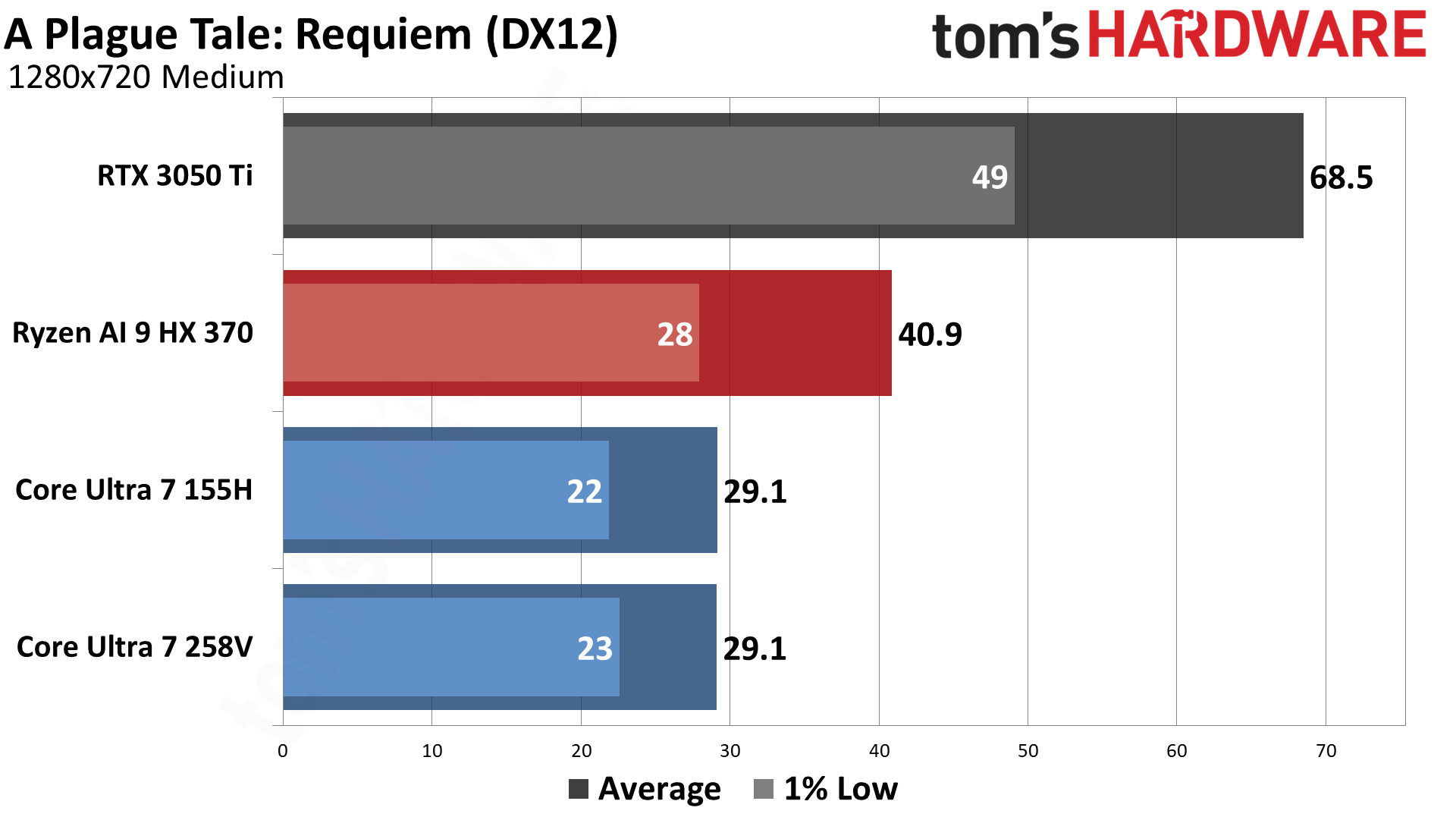

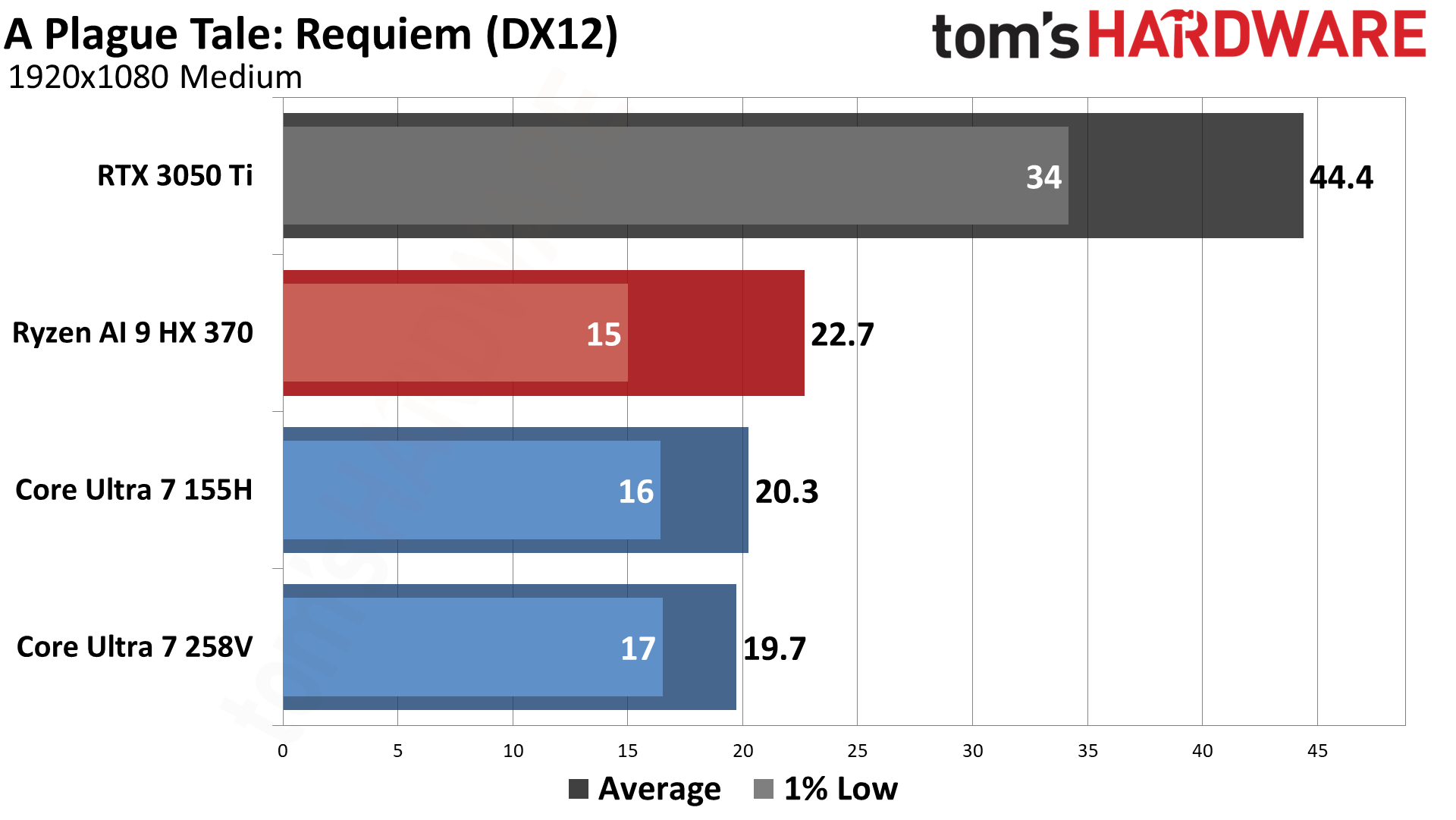

A Plague Tale: Requiem is one of the outliers as far as Lunar Lake goes, with performance basically unchanged compared to Meteor Lake. It looks like Intel could work on its drivers for this one, while AMD's 890M delivers a 40%/15% gutpunch to the Intel GPUs.

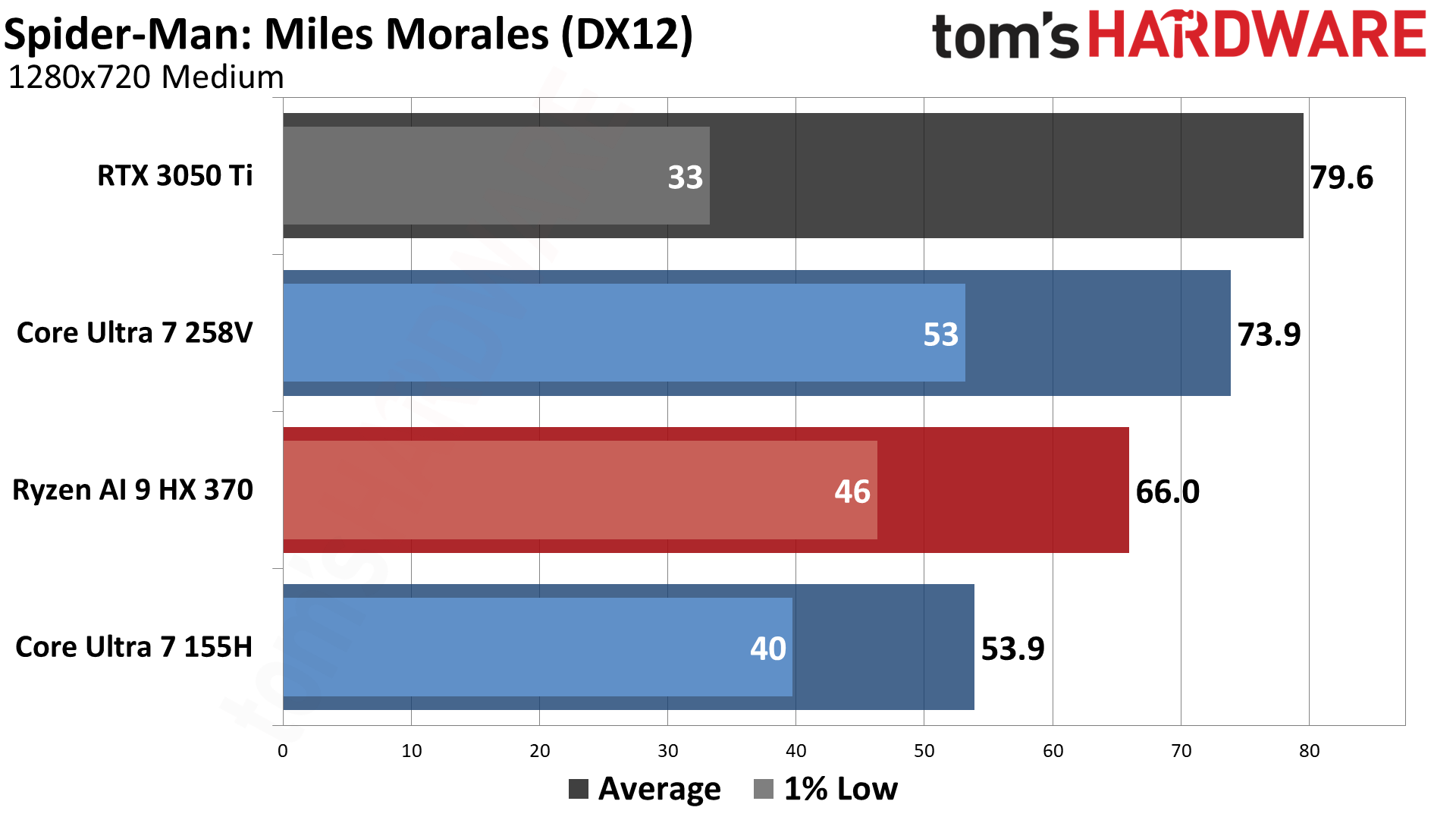

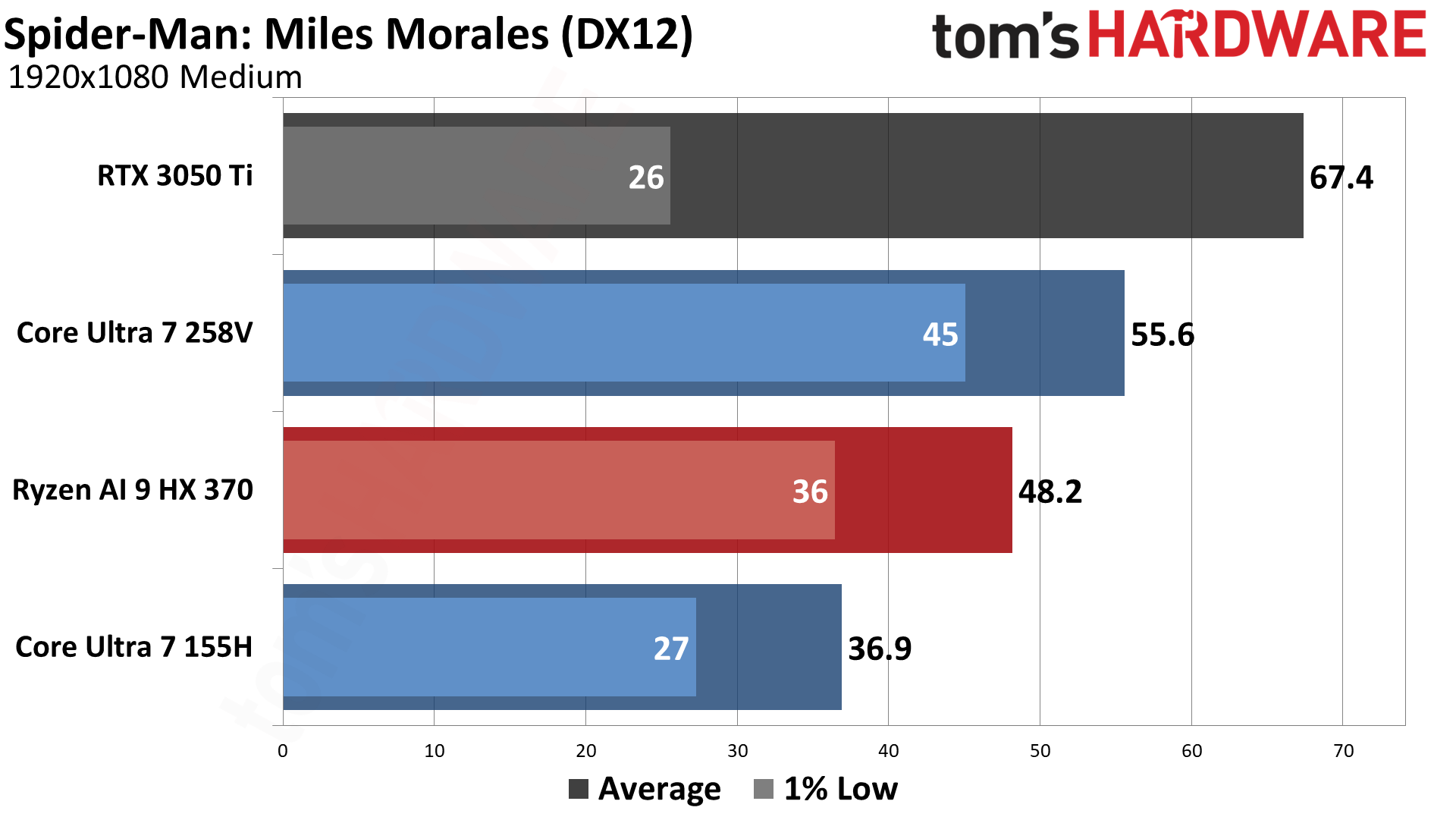

Spider-Man: Miles Morales is another game that likes both CPU performance as well as VRAM. The RTX 3050 Ti gets held back on both fronts with its older i5-12500H processor, barely outpacing the 258V at 720p though the gap grows at 1080p. Lunar Lake ranks again as the top iGPU with 12% / 15% better performance than AMD's Strix Point, and 37%/51% higher gen-on-gen performance.

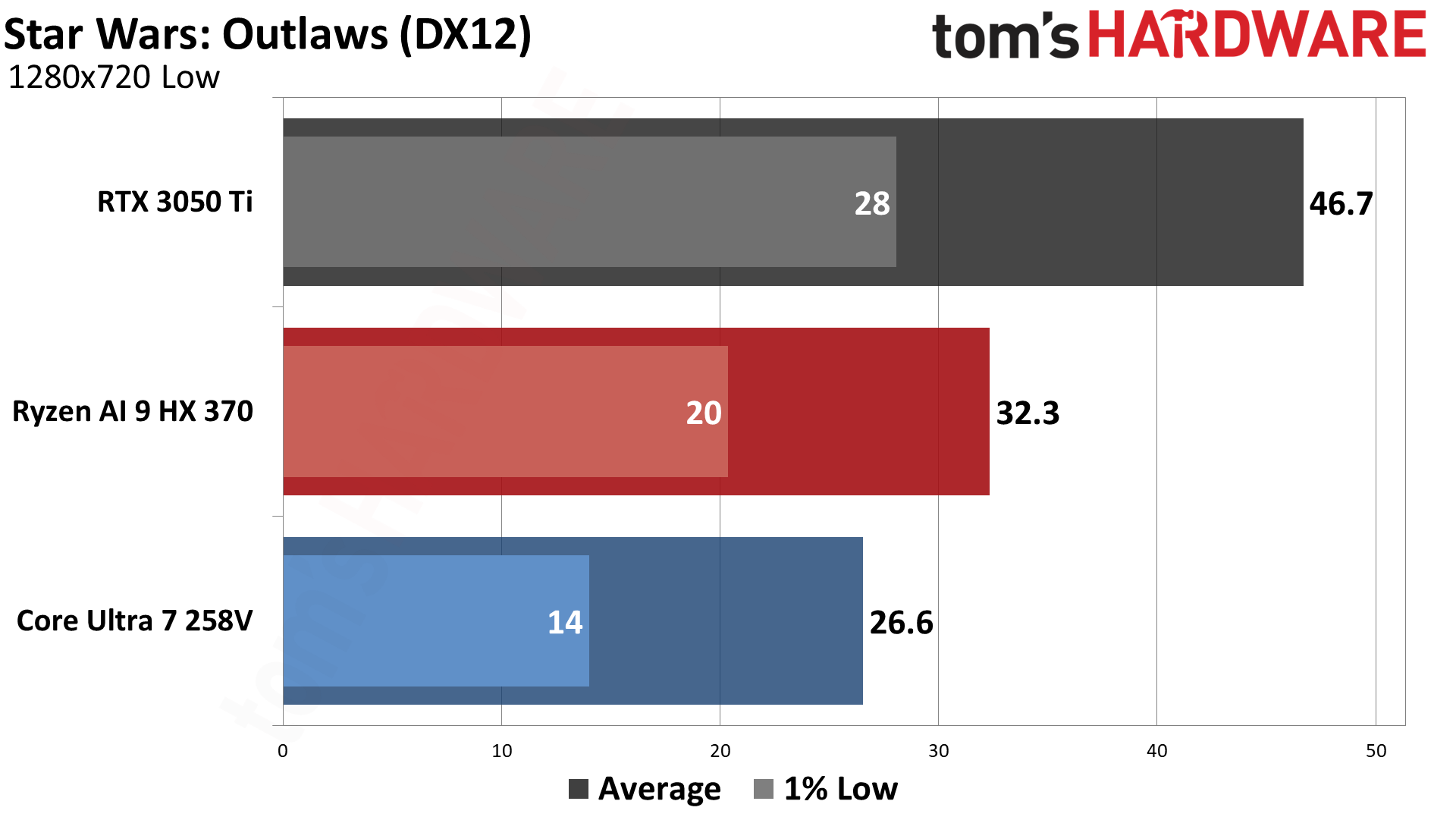

Star Wars Outlaws is the sole game in our 24-game test suite that refused to run on Meteor Lake, and it also kept crashing on Lunar Lake. We got a result at 720p, though we wouldn't put too much stock in it until Intel fixes the drivers. There were also rendering errors on Lunar Lake, and Intel says it's aware of the problems. AMD's 890M didn't have any such trouble, delivering 22% higher performance, without crashing, compared to Intel's Arc 140V GPU.

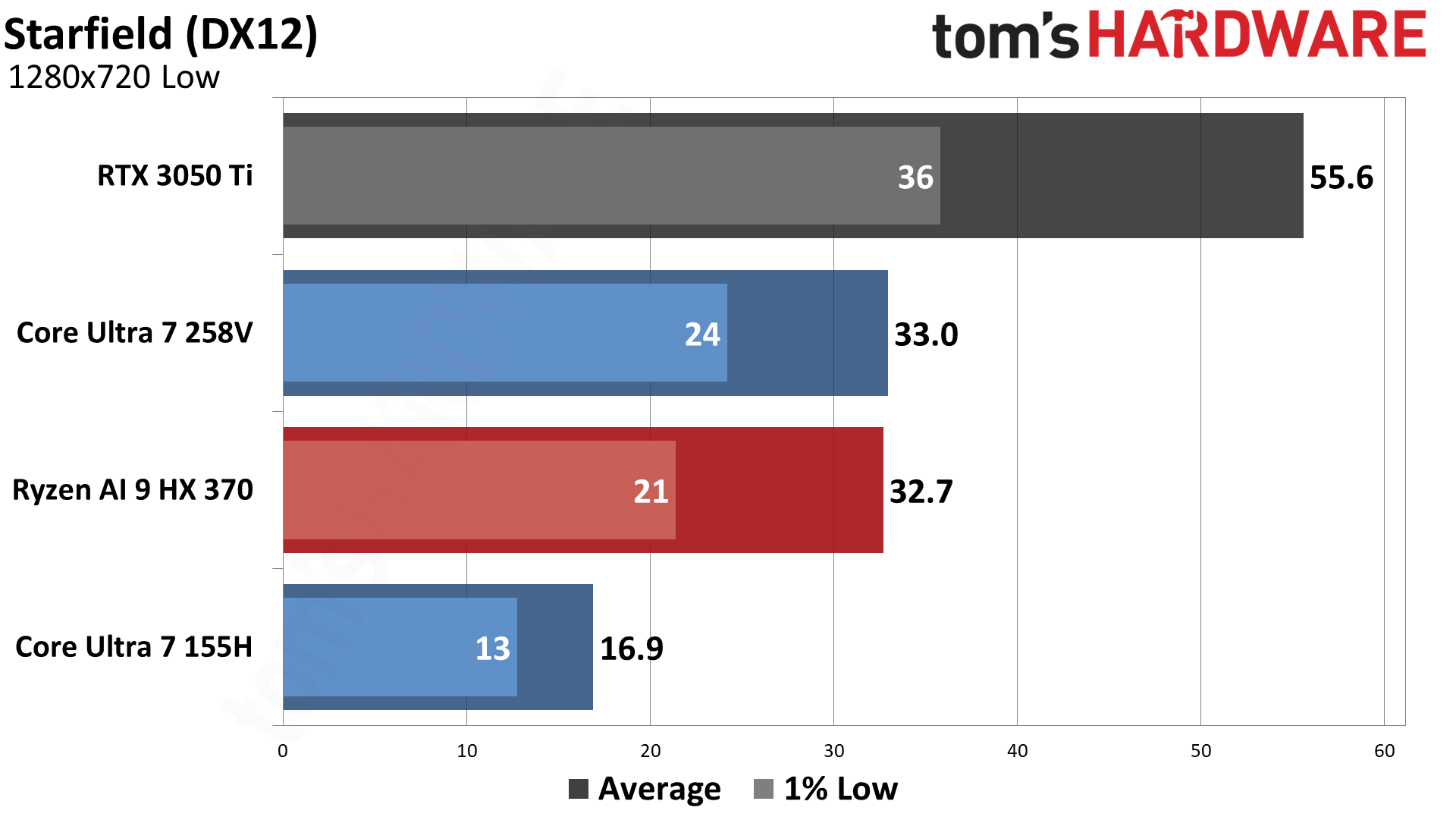

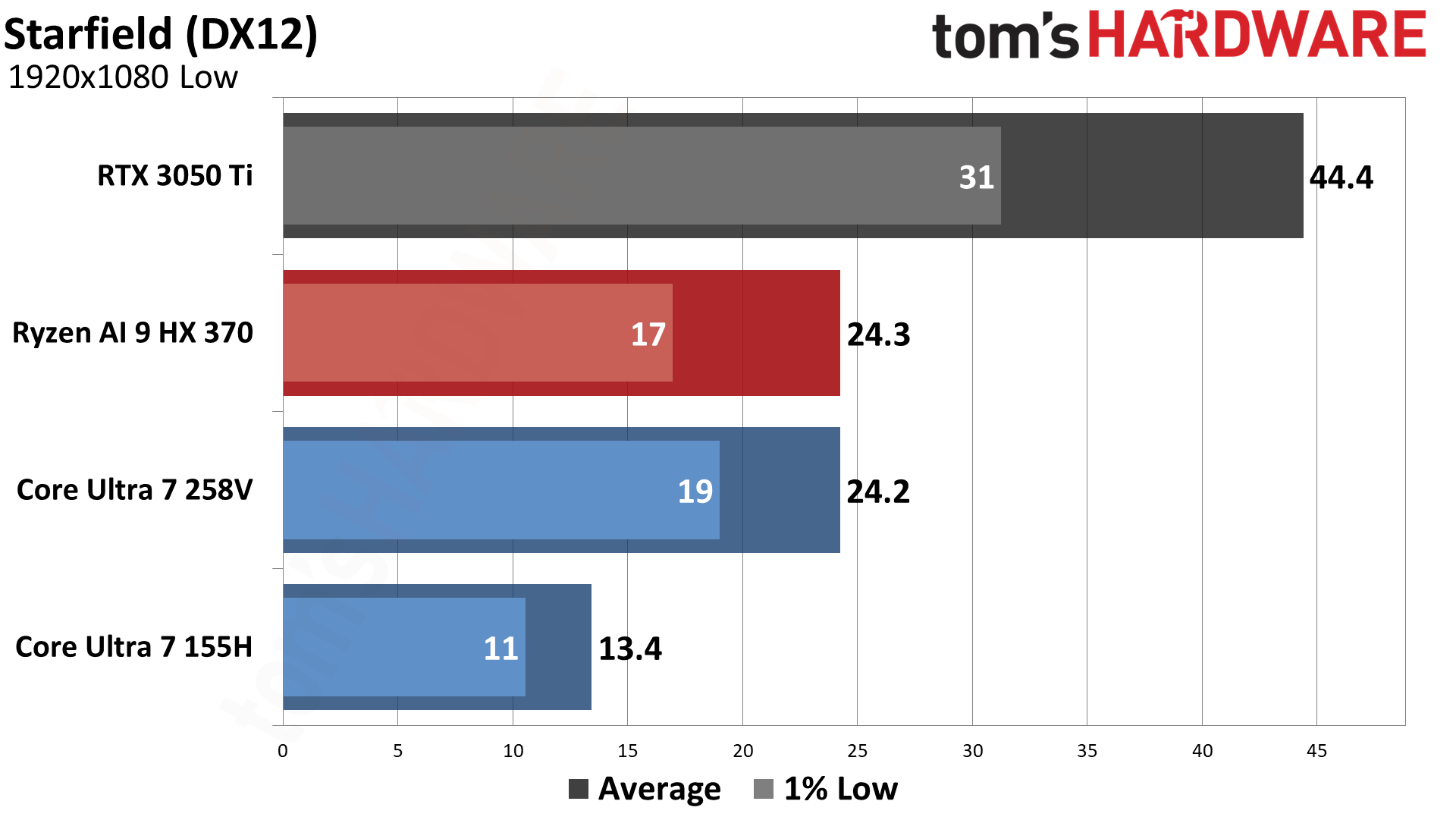

Starfield has AMD and Intel on equal footing, though neither provides a particularly great experience with average performance in our test sequence (on Akila) of just 33 FPS at 720p, and 24 FPS at 1080p. Intel does have slightly higher minimum FPS in both cases. LNL beats MTL by 95% / 80%, one of the larger differences between the old and new Intel GPUs.

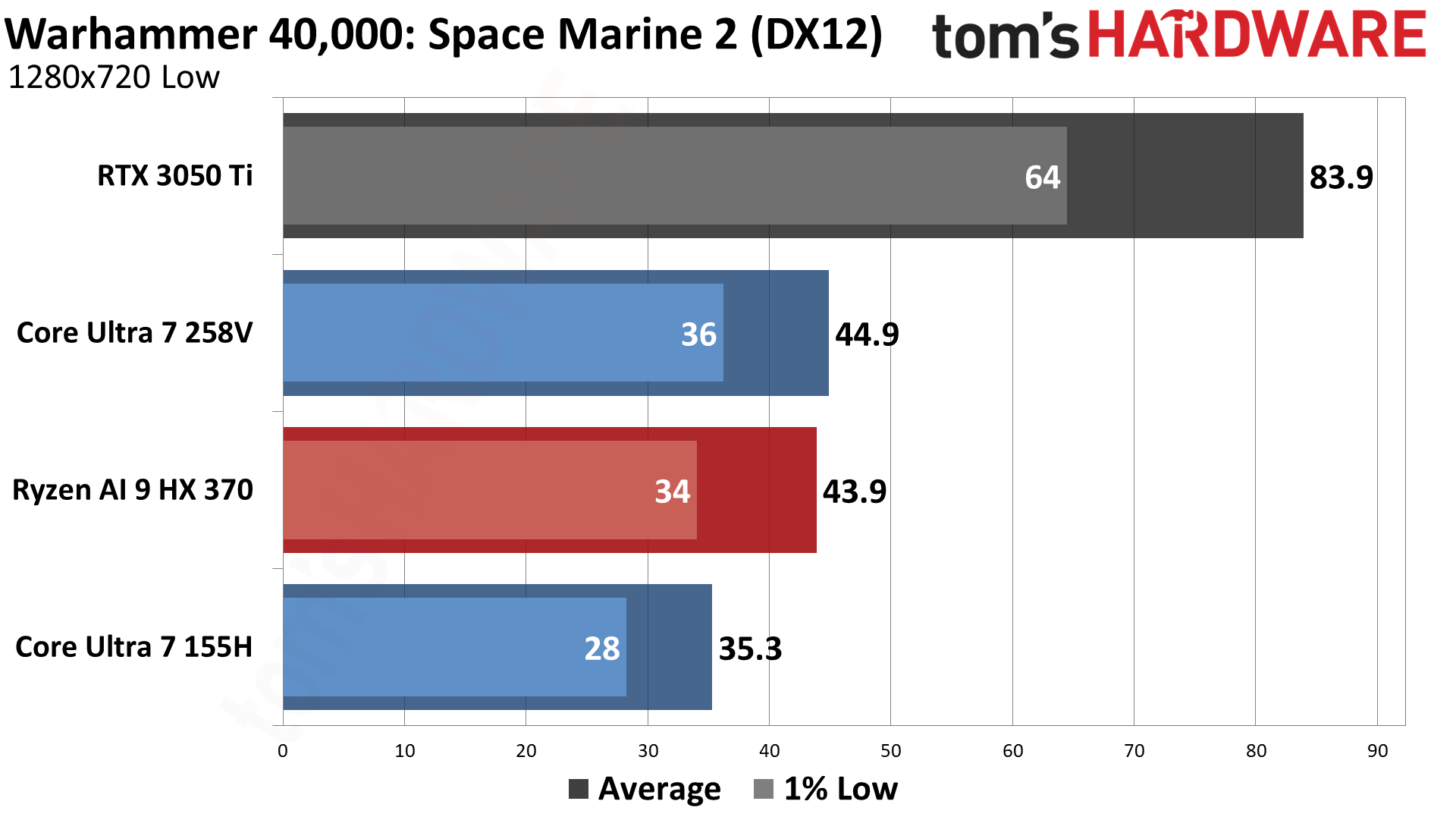

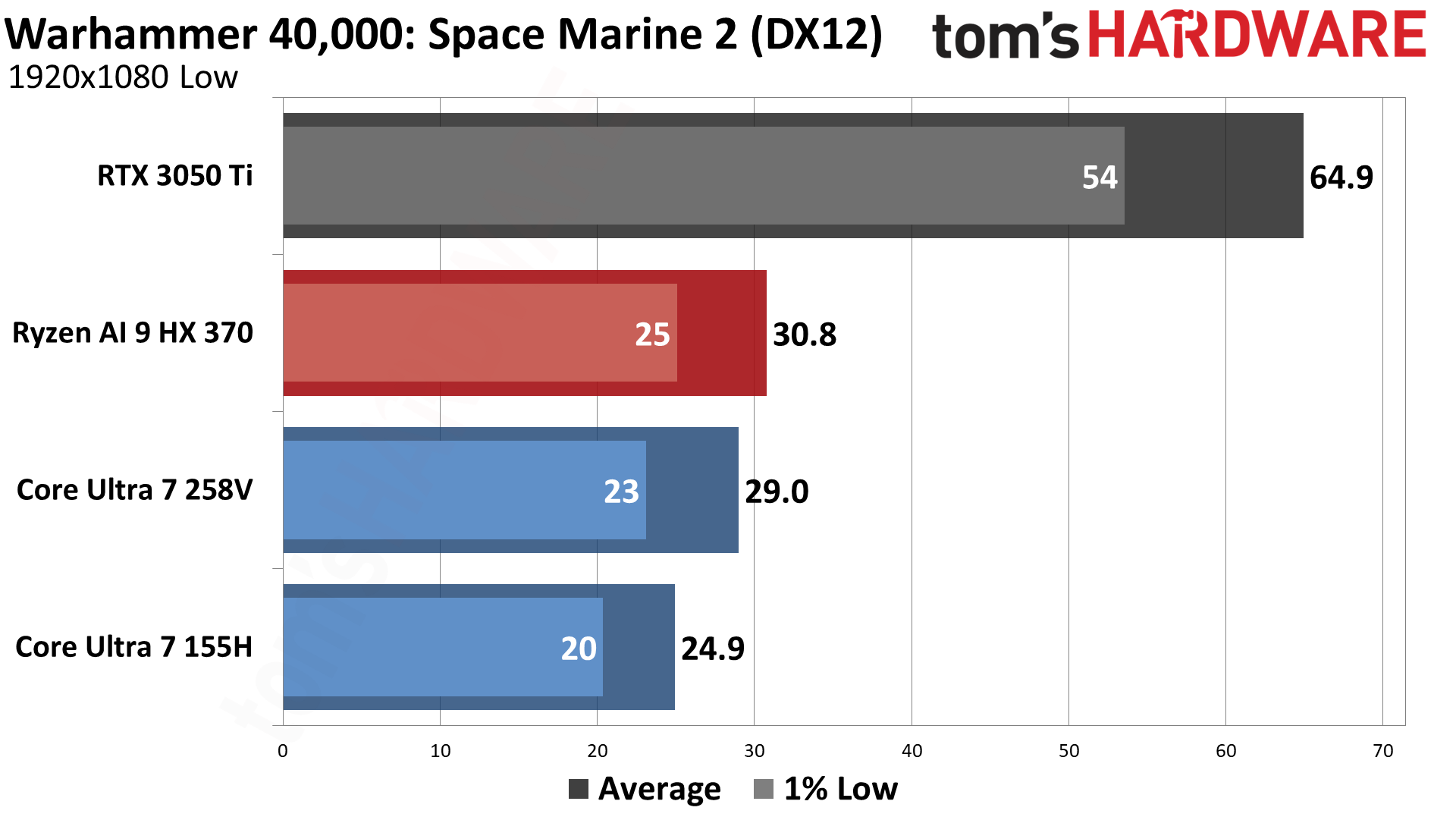

Another recent release, and an AMD promoted game as well, Warhammer 40,000: Space Marine 2 pretty much has Lunar Lake and Strix Point at parity, with AMD having a slight 6% lead at 1080p. LNL is 27%/16% faster than the previous gen MTL, however.

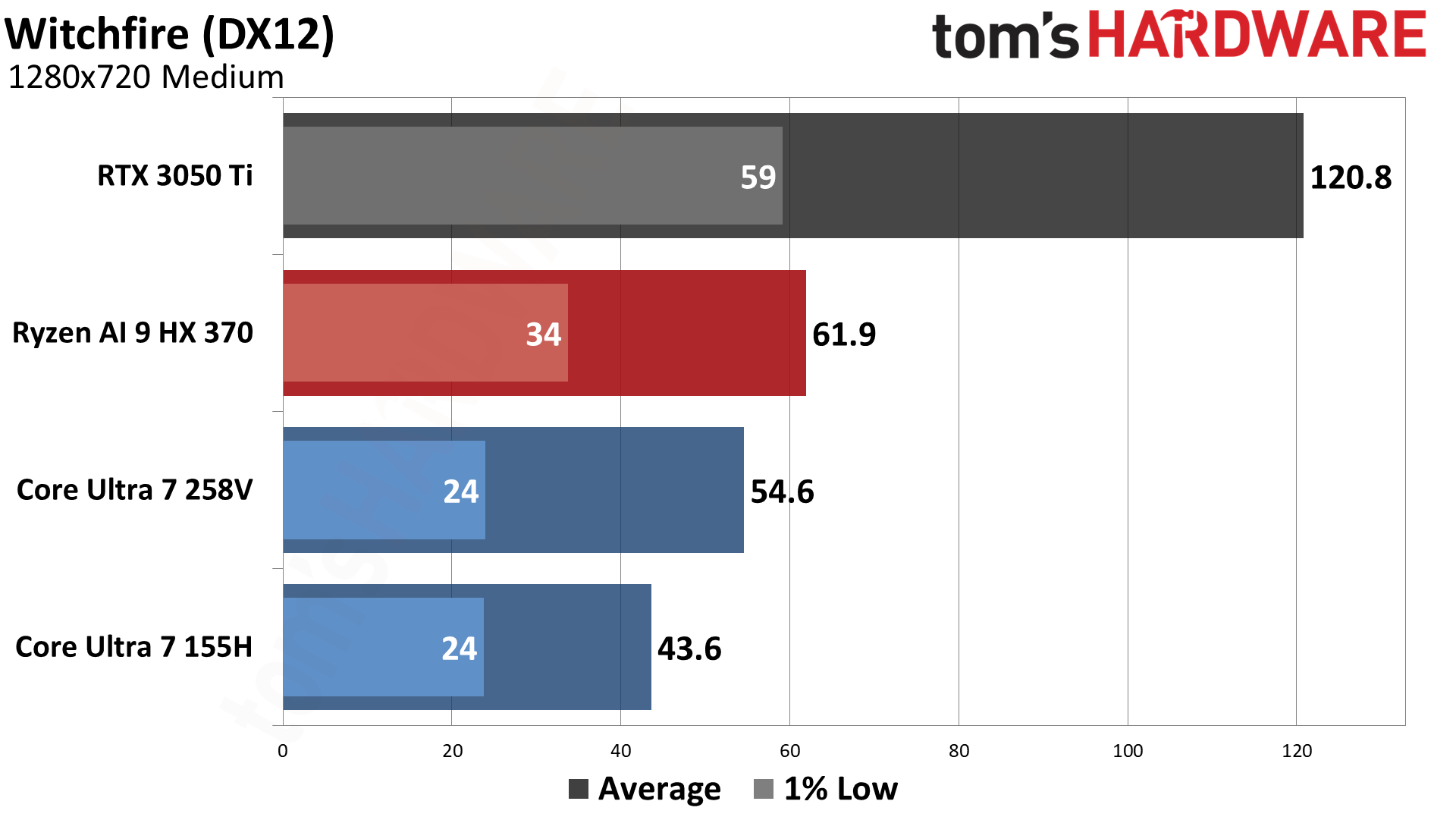

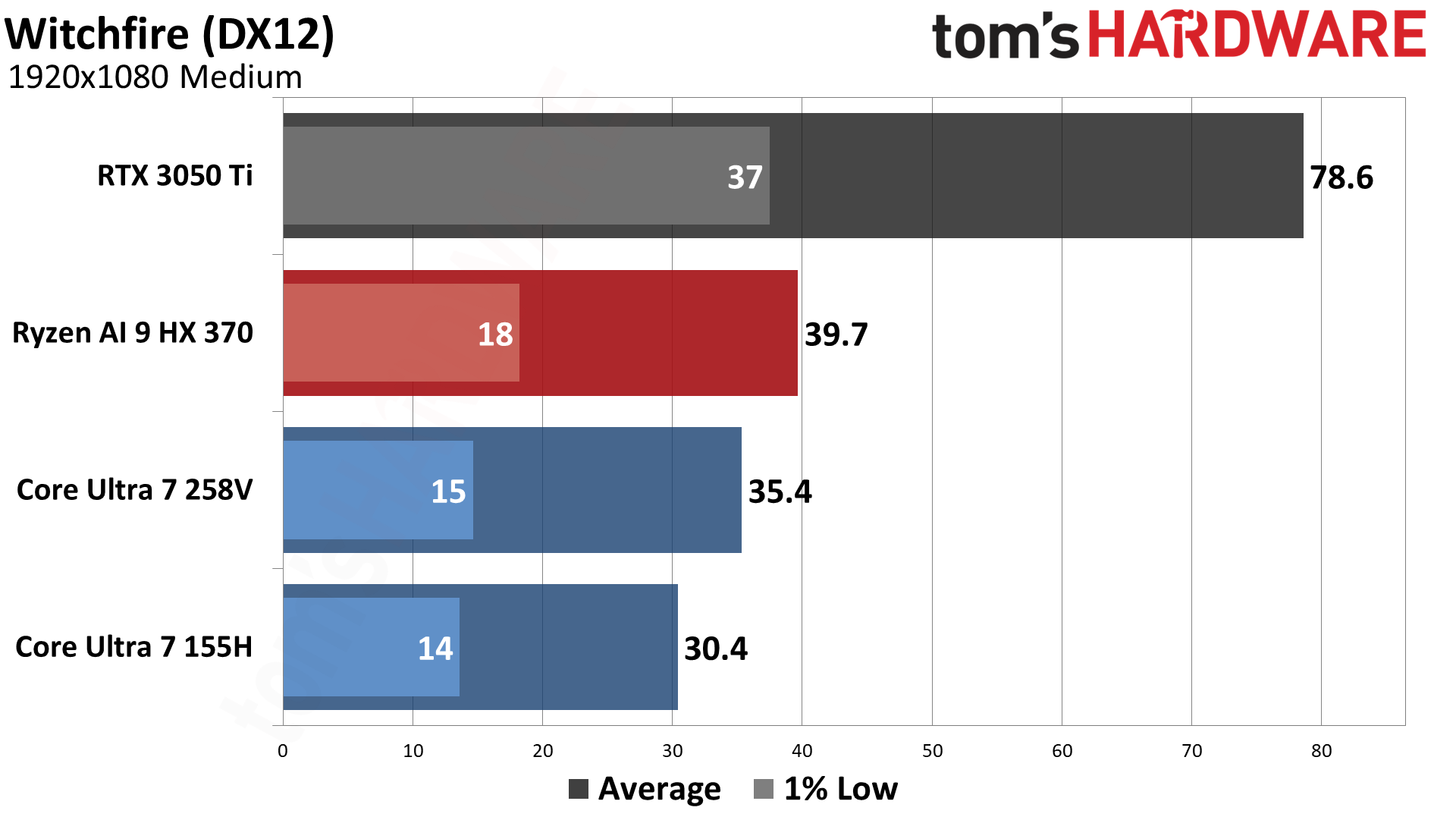

Last but not least, we have Witchfire, an indie game from some of the people that made Painkiller back in the aughts. Two of the studio's heads left People Can Fly to form The Astronauts in 2012, around the time Epic acquired a stake in People Can Fly. The game uses Unreal Engine 4, so performance tends to be better than in UE5 games. AMD's Radeon 890M takes the top spot at both 720p and 1080p, beating Lunar Lake's 140V by 12%–13%. LNL in turn leads the previous generation Arc Graphics by 25% / 16%.

Total power use and GPU clocks

That takes care of the gaming performance, but let's also quickly discuss power use and GPU clocks, as the data is interesting. First up is power.

We mentioned how the laptops vary in power limits, and here you can see that the settings we used result in very similar power use from all three of the Zenbooks with integrated graphics. This data is from FrameView's total package power for the CPU, and includes around 1.5W extra for RAM on Lunar Lake, but otherwise across all 26–27 tests, the power use is very similar.

And then look at the gaming laptop, which uses 39.2W on average for the CPU, and 77.3W on average for the RTX 3050 Ti. We suspect that with a slightly different and newer graphics solution, like the RTX 4050 laptop GPU, performance would be improved thanks to the additional VRAM, and the CPU and system RAM might also hold back the 3050 Ti. But it's still interesting to see just how big the gap is between the iGPU solutions and the dedicated GPU.

Nvidia GPUs can also run in lower power modes, though we did some limited testing with a 30W power limit and found that performance dropped substantially in that case, with the 3050 Ti often falling below the fastest iGPU solution. There's a good reason we never liked the RTX 3050 or 3050 Ti laptop GPUs. Beating a newer iGPU doesn't require much performance, really.

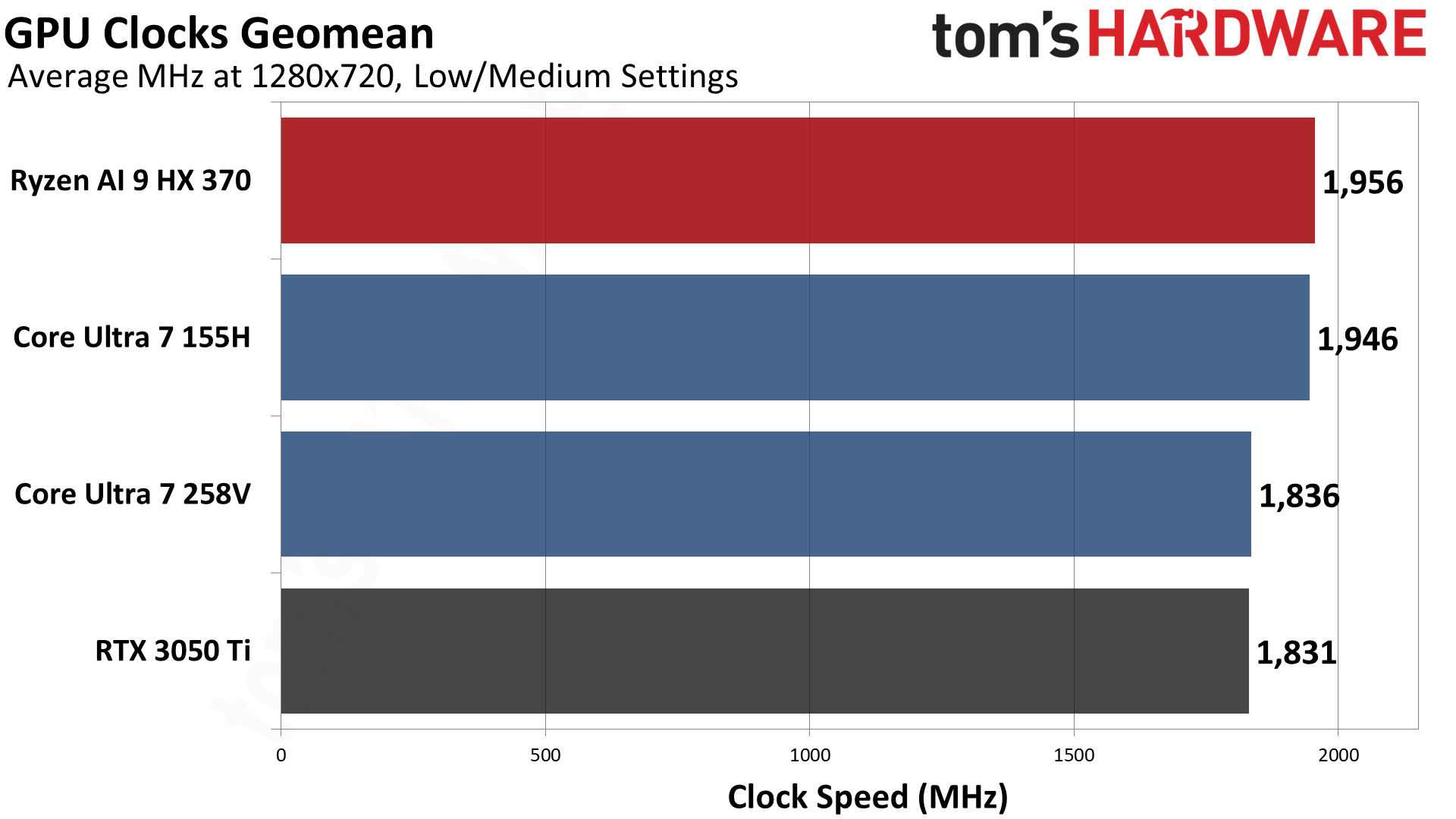

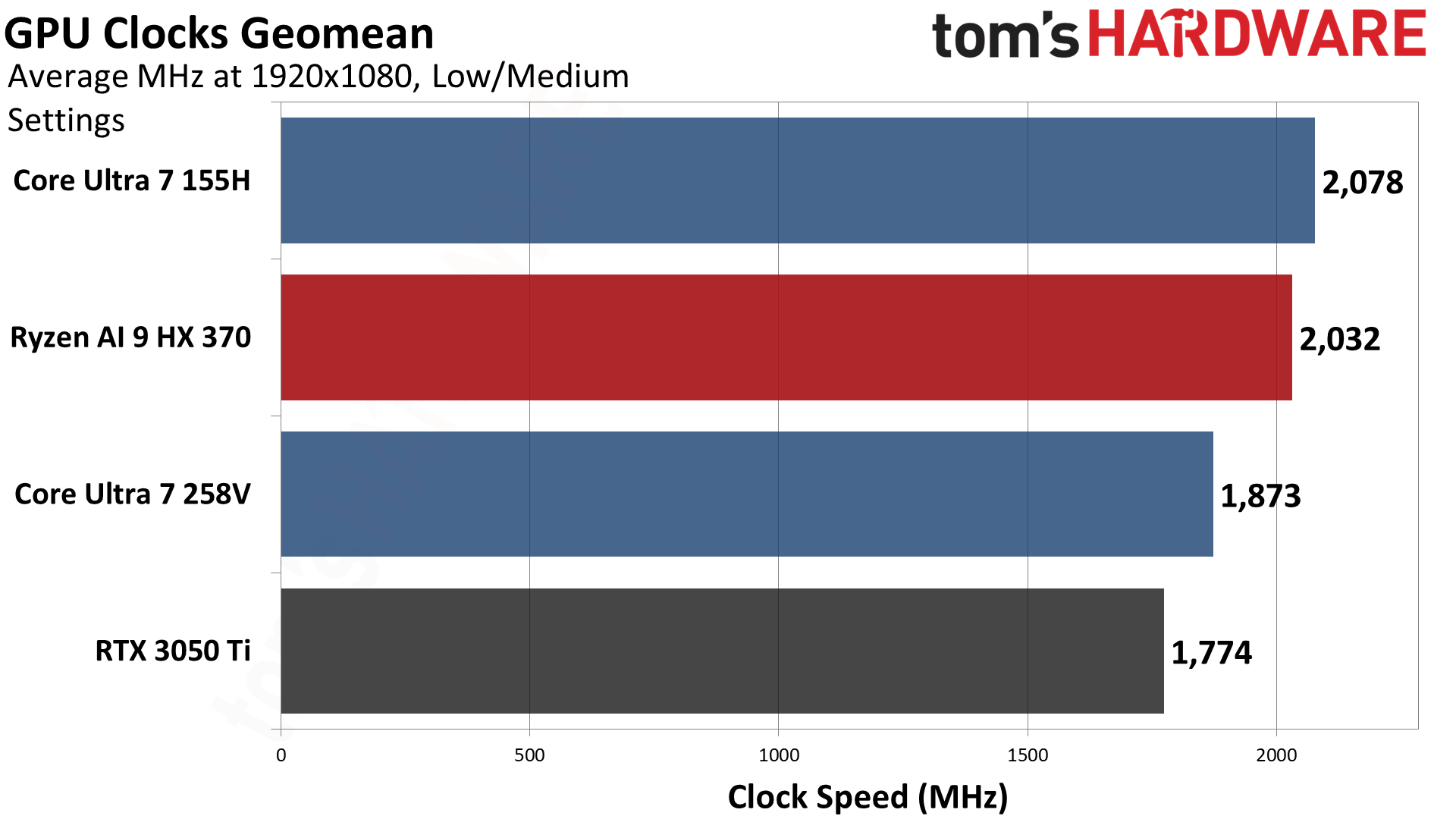

And finally, here are the GPU clock speeds as reported by FrameView. What's interesting here is that, despite having a maximum 2900 MHz boost clock, AMD's Radeon 890M typically runs at speeds closer to 2000 MHz. That's the same speed, roughly, as Intel's Meteor Lake Arc Graphics.

Lunar Lake on the other hand clocks even lower, which emphasizes the architectural improvements that the Battlemage Xe2 architecture brings to the table. It averaged around 1850 MHz across our test suite, only 100 MHz below its maximum boost clock, and many of the games showed a 1950 MHz average clock speed.

Put another way, at real-world clocks, Lunar Lake's Arc 140V GPU has a theoretical 3.8 teraflops of graphics compute, Strix Point's Radeon 890M has a theoretical 4.1 teraflops, and Meteor Lake's Arc Graphics also offers a theoretical 4.1 teraflops. Looking at the actual resulting performance (and, for simplicity's sake, ignoring the difference in memory speeds), that means on a clock for clock basis, Battlemage as an iGPU currently delivers better overall efficiency in terms of gaming performance per teraflops.

We crunched the numbers, and we end up with Lunar Lake offering 10.33 FPS/TFLOPS across our test suite, Strix Point gives 9.05 FPS/TFLOPS, and Meteor Lake only offers 6.90 FPS/TFLOPS. That means, for our independent testing, Intel delivered almost exactly 50 percent higher performance per teraflops compared to its prior generation GPU.
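The relative standings fall out of simple ratios between those FPS/TFLOPS figures. Here's a minimal sketch using only the numbers quoted above. The dictionary and helper names are illustrative, and the real-world teraflops line assumes the same ALUs × 2 × clock arithmetic with Lunar Lake's roughly 1850 MHz average clock.

```python
# FPS per teraflops across the test suite, as quoted in the article
fps_per_tflops = {
    "Lunar Lake": 10.33,
    "Strix Point": 9.05,
    "Meteor Lake": 6.90,
}


def efficiency_lead(gpu: str, baseline: str) -> float:
    """Fractional advantage in FPS/TFLOPS of one GPU over another."""
    return fps_per_tflops[gpu] / fps_per_tflops[baseline] - 1


print(f"Lunar Lake vs. Meteor Lake: {efficiency_lead('Lunar Lake', 'Meteor Lake'):.1%}")
print(f"Lunar Lake vs. Strix Point: {efficiency_lead('Lunar Lake', 'Strix Point'):.1%}")
print(f"Strix Point vs. Meteor Lake: {efficiency_lead('Strix Point', 'Meteor Lake'):.1%}")

# Real-world teraflops at the ~1850 MHz average clock: 1,024 ALUs x 2 FLOPs x clock
print(f"Arc 140V at 1850 MHz: {1024 * 2 * 1850e6 / 1e12:.1f} TFLOPS")
```

Keep in mind this metric ignores the difference in memory speeds, so treat it as a rough efficiency indicator rather than a pure architectural comparison.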

Thoughts on integrated graphics in 2024

For a lot of people, integrated graphics will be the only thing on offer with many laptops. It's possible to do dedicated GPUs, but that results in thicker, heavier systems that often offer much worse battery life, make more noise, run hotter, and cost a lot more. Given those factors, I'd make the argument that if you're only intending on modest gaming on a laptop, and you have good internet (with no data cap), you'd be better off with a thin and light laptop and an Nvidia GeForce Now subscription.

Do the math: RTX 2080/3080 performance at 1440p60 for $10 per month, or an "RTX 4080 in the cloud" with up to 4K120 performance for $20 per month. Image fidelity via streaming isn't as good as locally rendered graphics, but on a laptop I'd be more than happy to get a virtual 4080 equivalent that can actually deliver solid performance at 1440p ultra settings.

Of course, now even paid subscribers are limited to 100 hours per month, but if that's enough for your needs, and like I said you have good internet? I've used GeForce Now enough to know that it's better than what you get with just about any laptop, for a fraction of the cost. But don't try using it on most hotel WiFi networks, or be prepared for disappointment.

Or if you just play lighter and/or older games, look at the Diablo IV results here for a sample of what that can be like: Modern integrated graphics solutions can absolutely handle lighter games, even at 1080p. Plenty of games exist where you don't need a gaming laptop at all, and the games shown here are decidedly more of the "heavy" variety, with only a few exceptions.

Secondarily, there's the question of where integrated graphics are going. Intel, AMD, Qualcomm, and Apple all offer baseline iGPUs with 1024 or 1280 shader ALUs right now. Apple is more than happy to double or quadruple that figure, with 2X to 4X the memory bandwidth as well, for a hefty price increase. Apple MacBook Pro users are willing to fork out for such a configuration, but Windows users have so far been less enamored with faster iGPUs.

But there are a lot of rumblings about AMD Strix Point Halo, which could double the memory interface to 256 bits and increase the CU count from 16 in Strix Point to 40 CUs. It would be, roughly speaking, AMD's equivalent of the Apple M4 Pro, with more compute but similar bandwidth. It would also be at least relatively similar to the PlayStation 5 GPU, but with less bandwidth. Is there a market for such a monster iGPU, running Windows? We'll find out, if or when AMD begins shipping Strix Point Halo.

As for Intel, Lunar Lake takes the podium for 'pure' Windows integrated graphics right now. It's not by a huge margin, and there are still a few driver issues that we encountered, but things are much better today than they were four years ago as far as gaming compatibility and performance are concerned. And as noted at the start, our testing was done without enabling any performance enhancing software, which mostly applies to AMD's GPU.

AMD rightly notes that FSR support is more widespread than XeSS. (DLSS is also more widespread than FSR and XeSS, but that's another story.) The reality is that using things like AFMF2, FSR, or various other approaches that change the rendered resolution or insert 'generated' frames represent a different workload. You can try all of those out on whatever laptop or desktop GPU you buy, and some can deliver a better gaming experience — because no matter how you slice it, 1080p medium at 20 fps isn't good.

Driver-side adjustments in particular fundamentally skew the workloads and open the door for all sorts of shenanigans, so at least for our primary performance testing, we're sticking to native rendering. We may however choose to revisit all of our testing with various performance enhancers, which will be more of an experiential look at performance rather than discussing which GPU actually has more apparent horsepower.

Because, let's be frank: AMD still has better graphics drivers and software. Intel's hardware may have the performance advantage, but even though it was only one game that failed to run, there were other games (like Baldur's Gate 3) that also delivered a poor experience. We're more confident now than a few years back that Intel will continue to work on such driver issues, but we notified them of the Star Wars Outlaws and Baldur's Gate 3 issues a few weeks ago, and a fix still hasn't appeared.

Set aside the drivers and software, and Intel's latest iGPU looks good from the hardware standpoint. It shows some excellent efficiency and performance improvements over the previous Arc Alchemist GPUs, which is great to see and gives us hope for Intel Battlemage.

Except, there are rumors and leaks that suggest Battlemage desktop cards may only offer up to 24 Xe-cores. That means, with a 2.5 GHz clock as an estimate, such a GPU would offer 15.4 teraflops of compute. If correct, that would be roughly at the level of an RTX 4060 Ti, or a bit lower performance than an AMD RX 7700 XT. That's just a ballpark estimate and the final results remain to be seen, but we really hope Intel has something more in store with Battlemage than a 24 Xe-core solution.
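For the curious, that ballpark figure can be reproduced as follows. This is a sketch that assumes a rumored desktop part keeps the same 128 shader ALUs per Xe-core as Lunar Lake's iGPU (1,024 ALUs across eight Xe-cores); the 2.5 GHz clock is purely our estimate.

```python
ALUS_PER_XE_CORE = 1024 // 8  # Lunar Lake: 1,024 shader ALUs across eight Xe-cores


def xe_fp32_tflops(xe_cores: int, clock_ghz: float) -> float:
    """Peak FP32 teraflops, assuming 2 FLOPs (one fused multiply-add) per ALU per clock."""
    return xe_cores * ALUS_PER_XE_CORE * 2 * clock_ghz / 1000


# Rumored 24 Xe-core desktop configuration at an estimated 2.5 GHz
print(f"{xe_fp32_tflops(24, 2.5):.2f} TFLOPS")
```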

Beyond that, we have Panther Lake coming next year, which could have an Intel Arc Celestial (Xe3) GPU. We're certain AMD will have more integrated RDNA 3.5 and RDNA 4 GPUs launching in the future as well. But for now, Lunar Lake claims the crown for being the fastest Windows PC integrated GPU.