One of the holy grails of generative AI storytelling is being able to create consistency across characters in images and video. Now MidJourney has made that a little easier.

The new character consistency feature works much like using a reference image for style and scenes, but it focuses on specific character traits found in the source image.

One of the primary use cases could be creating graphic novels. Another is turning MidJourney-generated images into short video clips and using something like Pika Labs lip sync to animate the lips, allowing a variety of scenes with the same character.

How does MidJourney character consistency work?

Like all MidJourney features, character consistency works by appending a parameter to the end of the prompt: in this case --cref followed by the URL of the image.

It works best using MidJourney images as a source rather than a photograph or drawing made outside of the AI image generator, although you can still use other images.

You can also add the --cw parameter after the --cref, followed by a number from 0 to 100 (the default is 100), to set how closely MidJourney should stick to the source image.

You can also add multiple characters to a scene by using a second or third --cref, each with the URL of its own source image. If you set --cw very high, it will copy everything, including clothes, face and hair. If you set it low, it will concentrate on copying the face, so you can change outfits.
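To make the parameter order concrete, here is a minimal sketch of a hypothetical Python helper, `build_cref_prompt`, that assembles the prompt text described above. It does not talk to MidJourney; it only builds the string you would paste in. It assumes one --cref per reference image, as described in this article, and a --cw range of 0 to 100. The URL is a placeholder.

```python
from __future__ import annotations


def build_cref_prompt(description: str, cref_urls: list[str], cw: int | None = None) -> str:
    """Hypothetical helper: append one --cref per reference image, then an optional --cw."""
    if not cref_urls:
        raise ValueError("at least one character reference URL is required")
    parts = [description.strip()]
    # One --cref per character, following the article's description of multi-character scenes.
    for url in cref_urls:
        parts.append(f"--cref {url}")
    # --cw comes after the --cref; assumed valid range is 0 to 100.
    if cw is not None:
        if not 0 <= cw <= 100:
            raise ValueError("--cw must be between 0 and 100")
        parts.append(f"--cw {cw}")
    return " ".join(parts)


if __name__ == "__main__":
    # Placeholder URL: substitute the link to your own source image.
    prompt = build_cref_prompt(
        "old-school private detective at his office desk, film noir",
        ["https://example.com/jack.png"],
        cw=40,
    )
    print(prompt)
```

A low value such as 40 corresponds to the outfit-change test described below, where the face carries over but the clothing is allowed to vary.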

How well does MidJourney character consistency work?

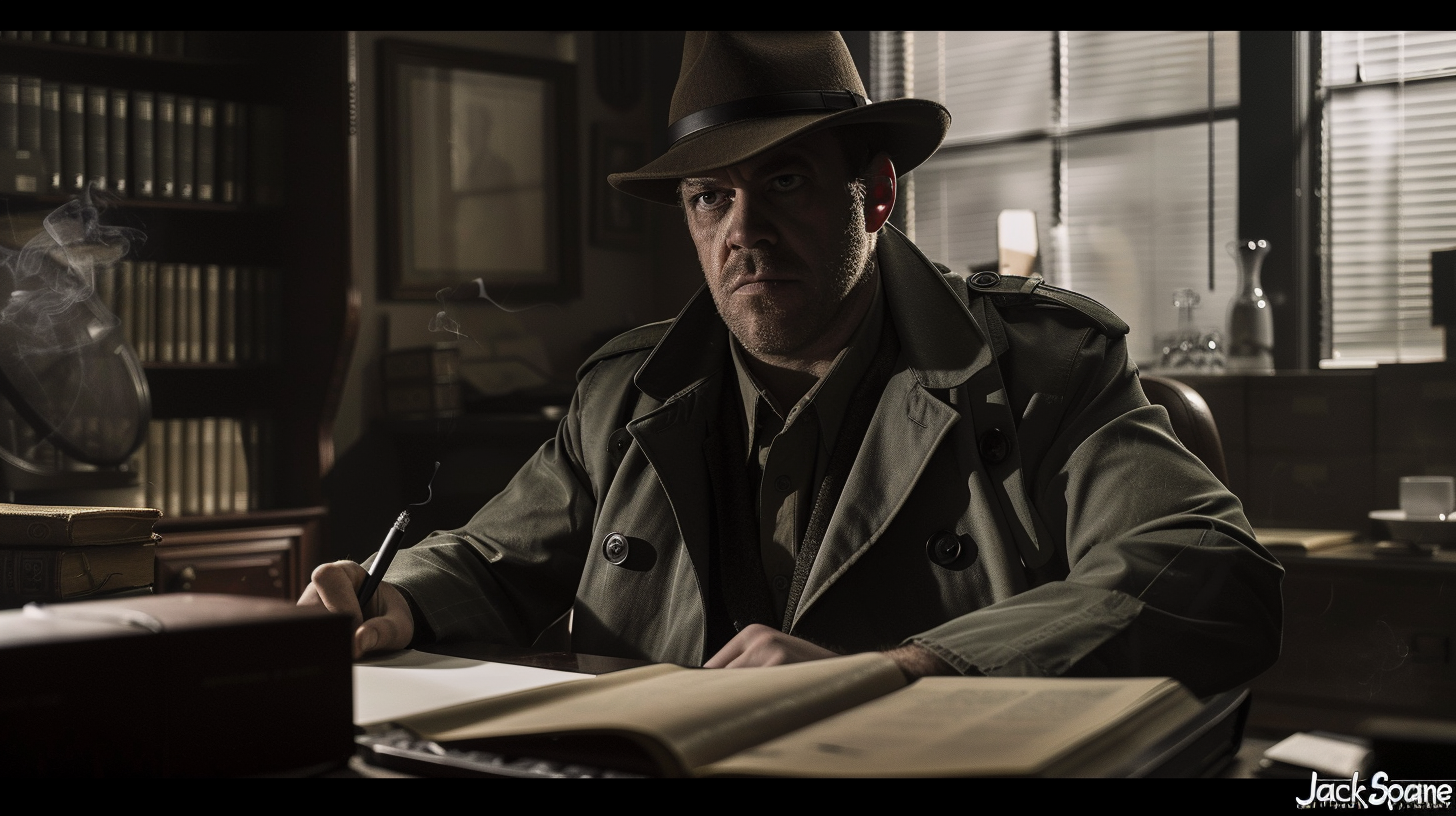

I decided to try it out on Jack Sloane, a character I created for an earlier AI sound effects project: an old-school private detective in his mid-40s.

For the most part it kept the consistency, carrying his scowl and weathered face across each generation, even keeping his outfit with hat and coat.

For the first few tests I kept him at the desk in his office, changing only some subtle elements. I then lowered the --cw value to 40 and changed the prompt to put him in a slightly different outfit.

It removed his jacket but kept the hat and trademark scowl. It worked well enough that it could comfortably be used for a new frame in a graphic novel or short film.

Finally, I put him in a corridor with a second character, Lydia Carver, a woman looking for her missing husband. It did okay, but not well: two of the four images showed two Jacks and no Lydia, one got the dress wrong and one was about right.

What are the main use cases?

Overall, MidJourney did a good job with character consistency. It requires careful prompting and the right source image, but it does work.

It also brings the era of AI-generated storytelling that much closer, allowing for a new type of content creation across a variety of mediums.

A brilliant writer can now visualize their creations more easily than ever, a filmmaker could create storyboards to help pitch to investors, and others can turn a random idea into reality.
