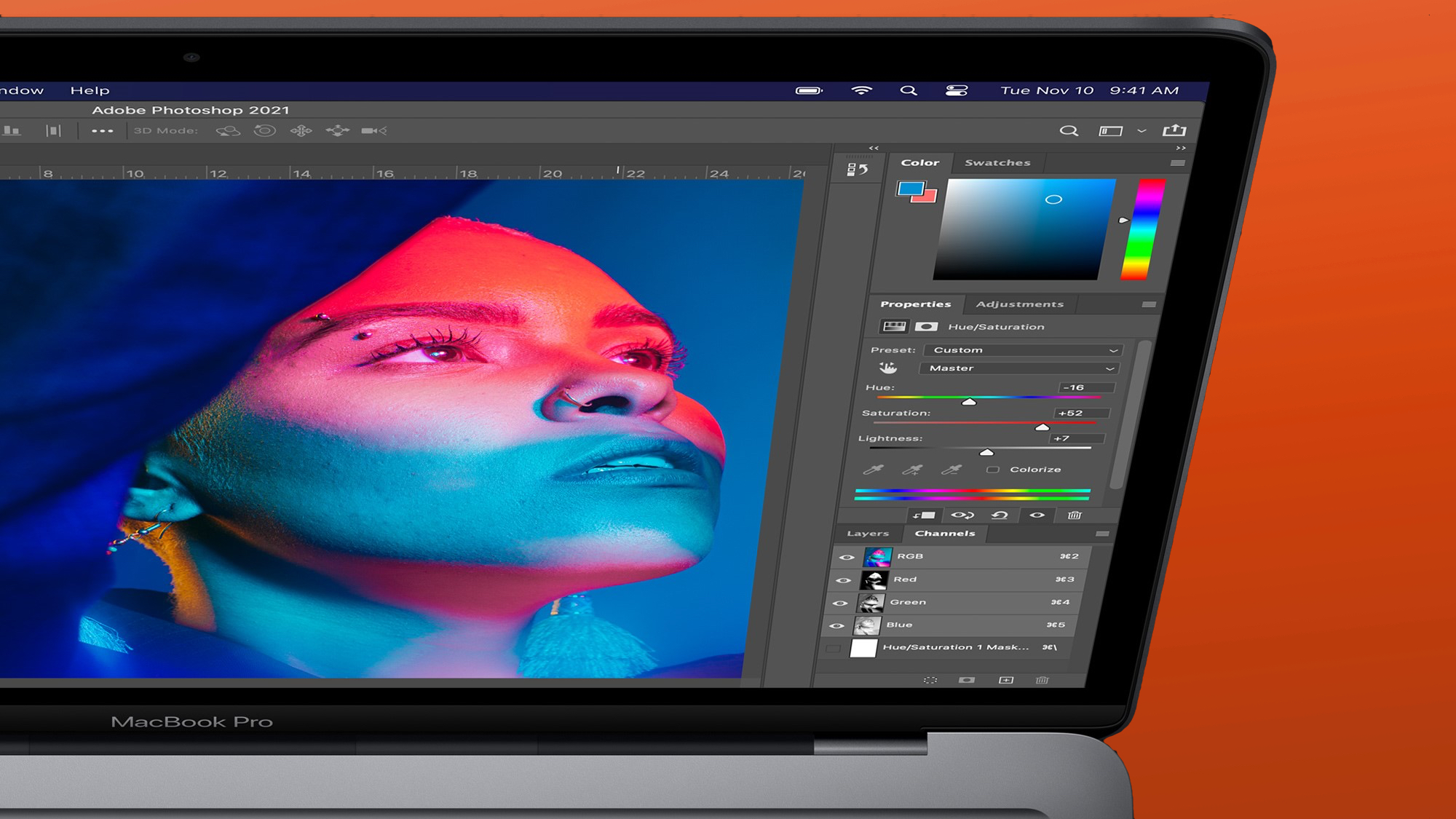

Adobe's new "Project Hi-Fi" lets designers turn a simple sketch into a fully rendered digital image using artificial intelligence. Currently a prototype, it could one day become a core Photoshop feature, and I've been given an advance look at it.

The company gave me a preview of Hi-Fi ahead of its MAX creativity conference running this week. The feature isn't entirely new; you can use AI to enhance sketches on most popular AI platforms including Leonardo and Freepik — but this is a first for Photoshop.

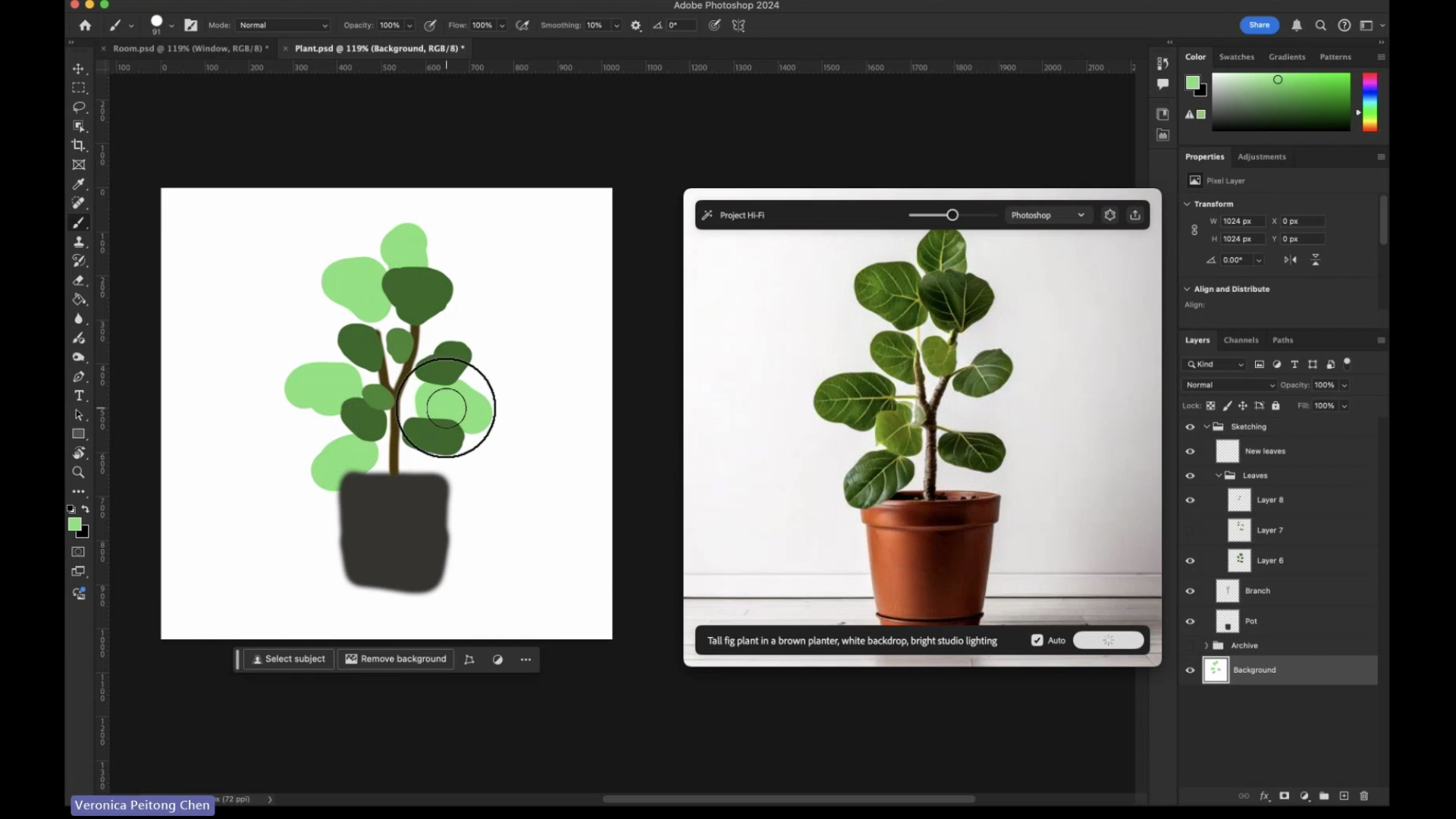

This approach stands out, though, because it can work from photographs and layers, not just a quick drawing on a canvas. In the demo, Adobe's designer took a photograph of a boring, empty room and gradually doodled on top to create a render.

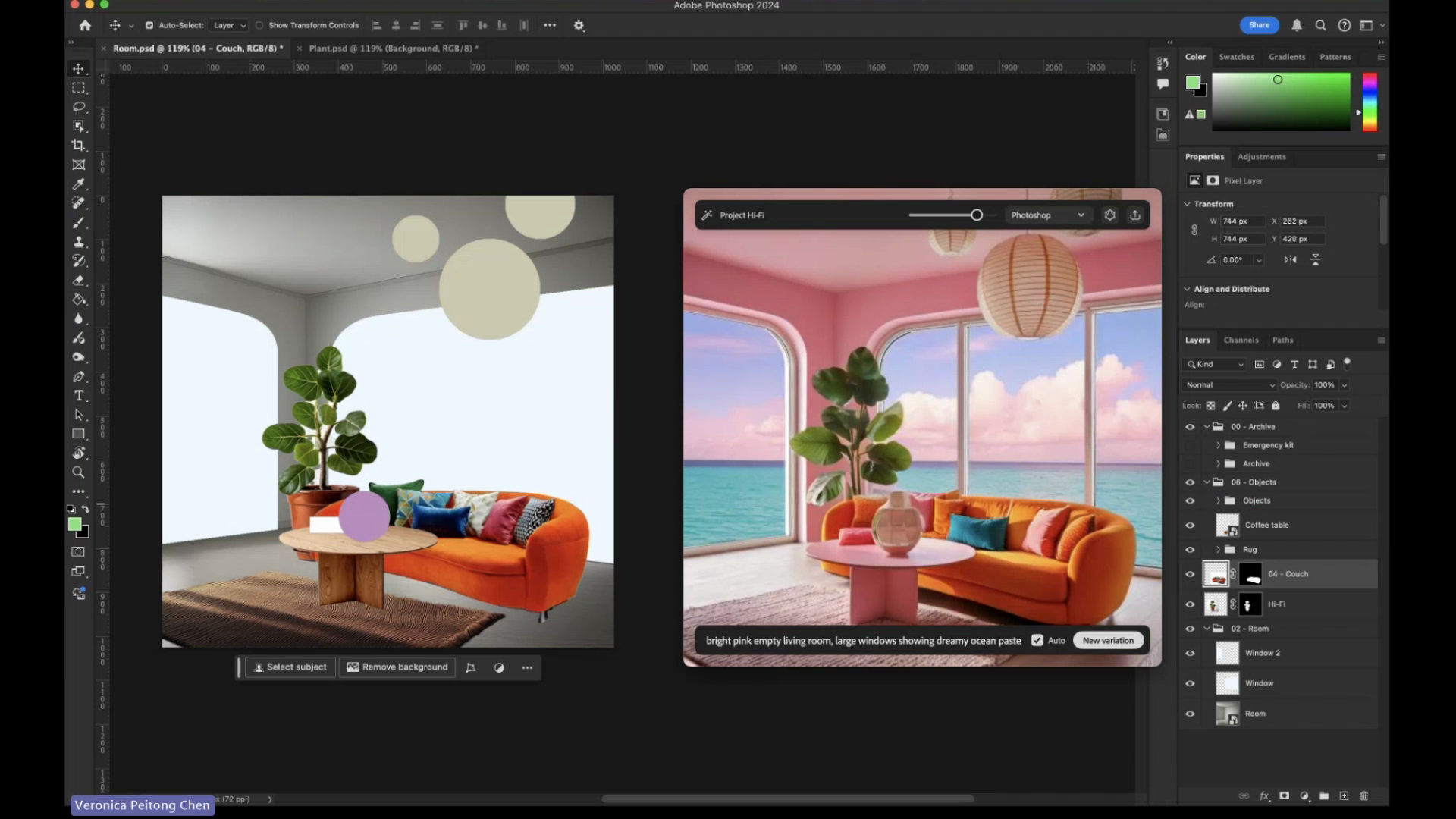

The final output from the example I was shown was a beautifully designed, if overly pink, living room. This included a custom-designed sofa, an image of a lamp from a website, and a prompt to direct how the AI should render the final project.

This is just the latest in a long line of Adobe "Sneak Peek" projects that have quickly found their way into the beta stage. One example is Project Neo from 2023, which lets you generate 3D objects more easily.

What is Adobe Project Hi-Fi?

Project Hi-Fi (High Fidelity) takes an image from anywhere on your computer, combines it with a text prompt, and renders the result. In the plugin, you simply select the application it should take the image from, type in how the image should change, and click Create. The render happens almost automatically, and as you change the prompt the image changes too.

It uses the same Adobe Firefly model to generate the resulting images as Adobe already uses in Photoshop to extend an image or generate infill content. I think sketch-to-image is a better use case for Firefly in Photoshop than generative fill.

According to Adobe: "You can capture any part of your screen and use it as a guide to quickly create high-quality images with AI," adding that "these images can then be easily brought into Adobe Photoshop for further editing."

The demo I saw also seemed to let you create a multi-layer project in Photoshop and send the composite to Lens for rendering, so you can turn layers on or off and see the result re-rendered in high resolution in near real time.

Adobe designer Veronica Peitong Chen loaded a photograph of the corner of an all-white room, sketched a couple of floor-to-ceiling windows and asked Hi-Fi to create a "bright pink empty living room, large windows on the back wall." It rendered the result immediately.

A spokesperson told me that "by leveraging advanced models, Adobe Lens boosts both productivity and creativity, turning your screen content into detailed visuals with real-time AI technology. It’s a seamless way to transform your design concepts into polished images effortlessly."

This is going to be ground-breaking for graphic designers wanting to quickly show their vision to a prospective client, but it goes beyond that: it could be used to mock up an idea for a flyer you want to send out for a house party or to give a builder a sketch for a BBQ pit.

What else came out of MAX 2024?

As well as its usual Sneak Peeks, Adobe revealed new details of its Firefly Video model, which creates videos from text or image prompts. This will be built into a future version of Premiere Pro for clip extensions, better transitions and more.

There are also updates to the vector AI model to improve illustrations, new capabilities in Photoshop to remove distractions from images such as poles and wires, as well as improvements to generative fill, expand and background.

"The usage of Firefly within our creative applications has seen massive adoption, and it's been inspiring to see how the creative community has used it to push the boundaries of what's possible," explained Adobe CTO Ely Greenfield.

Adobe's generative AI models aren't necessarily the best in terms of photorealism or capabilities, but their deep integration with Photoshop, Illustrator and Premiere is what gives them an edge over Midjourney or Runway. They are also "ethically trained" on data licensed by Adobe, which makes them easier for companies to justify.

I think we're about to see a revolution in how we pick out furniture, design our living spaces and create content as Adobe brings these new sketch and AI features to more mainstream products. If Project Hi-Fi finds its way into Adobe Express, expect to see pink wallpaper and furniture everywhere in your Facebook feed or For You Page.