The Rabbit R1 has had quite the journey through highs and lows this year: it started strong on a groundswell of hype at CES 2024, then launched to a lot of negative reviews (including from us) for not doing what it promised. On top of that, the key running issue in our testing was that many of the features we were told about didn’t work as intended (bugs aplenty).

In fairness to the Rabbit team, they have worked hard in the background and delivered 16 over-the-air updates to bring new features, fix bugs and speed up pre-existing features. But the real heartbreaker at launch was that the promise of this Large Action Model (LAM) agentic AI never truly came to pass.

As Founder and CEO Jesse Lyu said to Tom's Guide, the pre-existing system was based on a smaller set of “recordings from people.” This meant there were some things it could do, but it was rather limited in scope compared with the big promise made at the beginning.

Well, Rabbit is back with LAM playground — a beta of the next generation LAM that (on paper) does exactly what the company promised. So, what's it like? I tested it to find out.

How the new Rabbit LAM playground works

This is what Rabbit is calling a Generic Website Agent — something that is capable of doing stuff for you, either through a text prompt within Rabbit Hole or (the one you were all waiting for) making the request with natural language to your Rabbit R1.

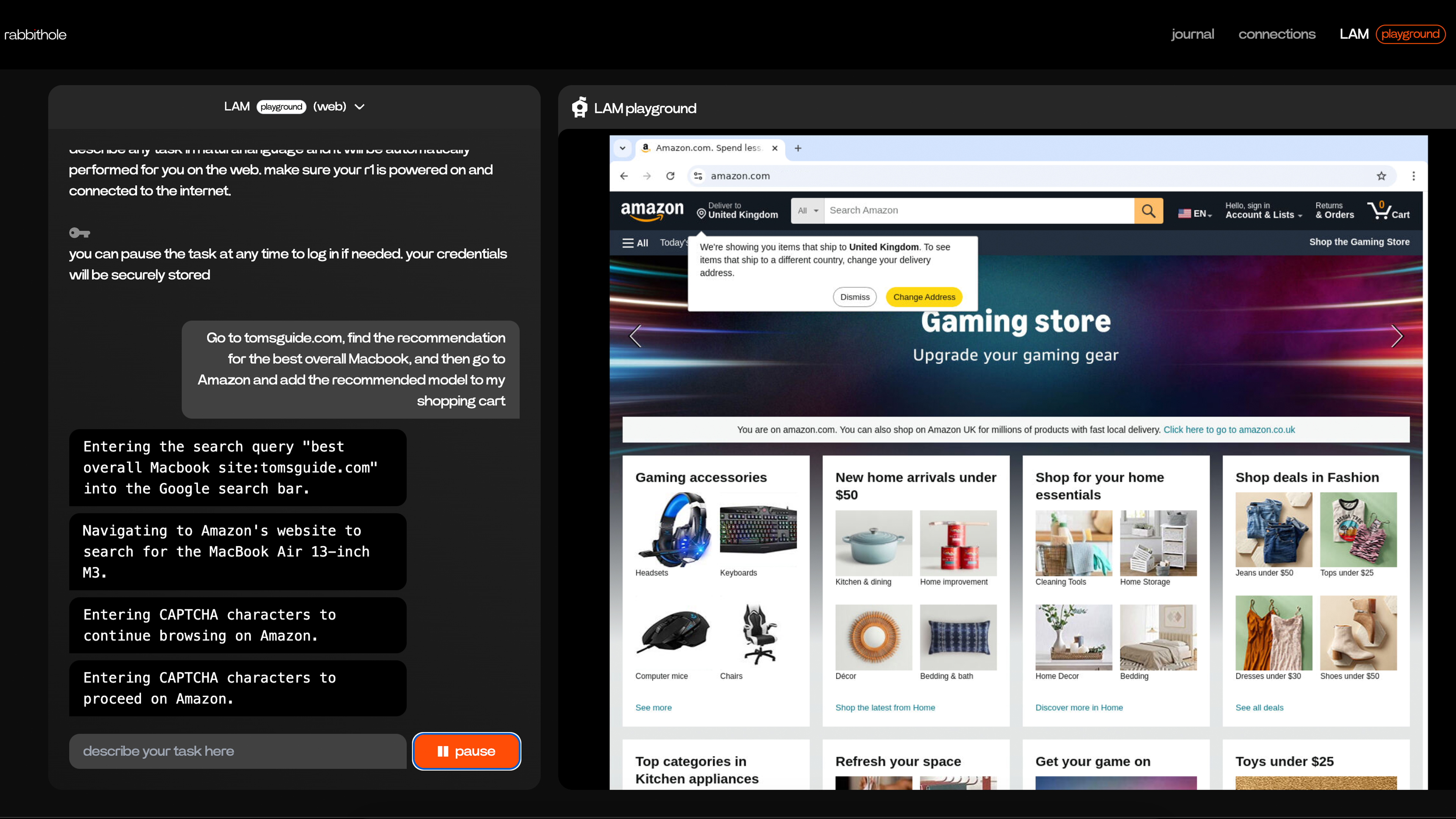

So to begin with, it all works via the virtual machine-esque system that Rabbit users are probably used to interacting with when logging into their respective accounts. From here, if you make a request for something on the internet — Jesse’s example was to add a 12-pack of Diet Coke to his Amazon shopping cart — the LAM gets to work.

You can watch it happen in real time: a vision model observes what’s going on by taking screenshots, analyzing them and directing a script to interact in a particular way based on its understanding of what’s happening in the browser window. This includes closing cookie prompts, and the approach should be resilient to UI changes in the future.
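To make that loop a little more concrete, here is a minimal Python sketch of the screenshot, analyze, act cycle. It is not Rabbit's code: `FakeBrowser`, `toy_vision_model` and `run_agent` are hypothetical names made up for illustration, and the "screenshot" is just a snapshot of page state rather than real pixels.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    kind: str          # "click", "type" or "done"
    target: str = ""   # hypothetical description of the element to act on
    text: str = ""     # text to enter, if any


@dataclass
class FakeBrowser:
    """Stand-in for a real browser session; it only tracks simple page state."""
    cookie_banner_open: bool = True
    search_text: str = ""
    log: List[str] = field(default_factory=list)

    def screenshot(self) -> dict:
        # A real agent would capture pixels; this returns a state snapshot instead.
        return {
            "cookie_banner_open": self.cookie_banner_open,
            "search_text": self.search_text,
        }

    def apply(self, action: Action) -> None:
        self.log.append(f"{action.kind}:{action.target}")
        if action.kind == "click" and action.target == "accept cookies":
            self.cookie_banner_open = False
        elif action.kind == "type" and action.target == "search box":
            self.search_text = action.text


def toy_vision_model(goal: str, shot: dict) -> Action:
    """Hypothetical policy: pick the next action from the latest screenshot."""
    if shot["cookie_banner_open"]:
        return Action("click", "accept cookies")
    if shot["search_text"] != goal:
        return Action("type", "search box", goal)
    return Action("done")


def run_agent(
    goal: str,
    browser: FakeBrowser,
    model: Callable[[str, dict], Action],
    max_steps: int = 10,
) -> int:
    """Observe, decide, act, repeat. Returns the number of actions applied."""
    for applied in range(max_steps):
        action = model(goal, browser.screenshot())
        if action.kind == "done":
            return applied
        browser.apply(action)
    raise RuntimeError("step budget exhausted before the goal was reached")


if __name__ == "__main__":
    browser = FakeBrowser()
    steps = run_agent("diet coke 12 pack", browser, toy_vision_model)
    print(steps, browser.log)
```

Because the model only ever looks at the latest screenshot, nothing in the loop is tied to a particular page layout, which is roughly why this style of agent can cope with cookie banners and UI changes.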

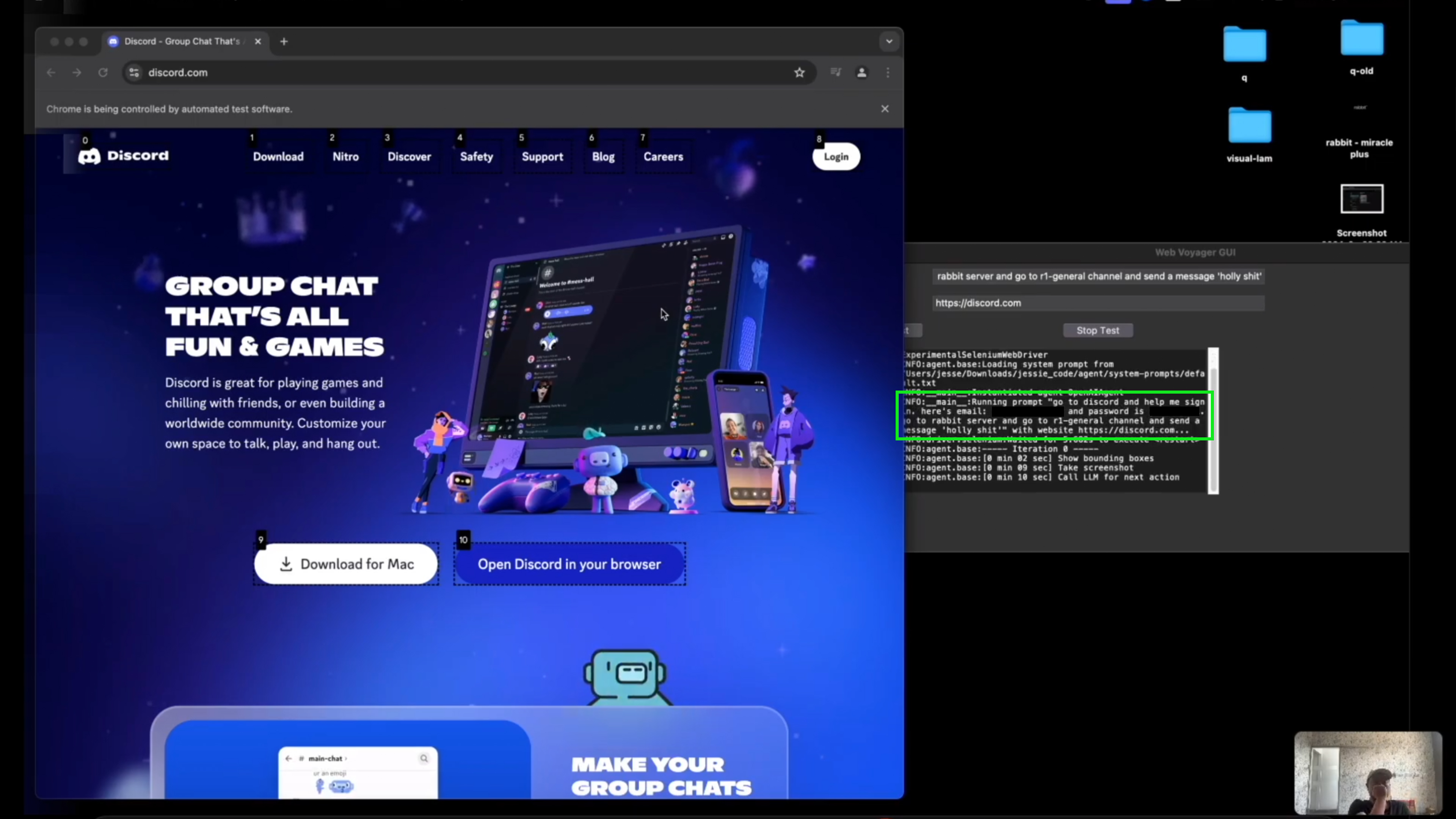

To go more into the specifics of what's going on behind the scenes, Rabbit has built on a Large Language Model (LLM)-based agent tool called WebVoyager, with tweaks made in the background to take advantage of some automations that Rabbit has created.

In execution, the web agent takes your prompt and plans what it needs to do, and then goes through a process called Grounding (turning its plan into a logical sequence of things to do) with the help of Playwright web drivers to take action.
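As a rough illustration of what grounding might look like, the Python sketch below turns high-level plan steps into an ordered list of browser-driver calls. Everything here is an assumption for illustration: `PlanStep`, `ground`, `execute` and the CSS selectors are invented, and the code runs against a recording stub rather than a real browser. The stub's method names (`goto`, `click`, `fill`, `press`) mirror methods on Playwright's `Page` object, but this is not how Rabbit or WebVoyager actually implement the step.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple


class PageLike(Protocol):
    """The small slice of a browser driver that this sketch relies on."""
    def goto(self, url: str) -> None: ...
    def click(self, selector: str) -> None: ...
    def fill(self, selector: str, value: str) -> None: ...
    def press(self, selector: str, key: str) -> None: ...


class RecordingPage:
    """Stub driver that records calls instead of touching a browser."""
    def __init__(self) -> None:
        self.calls: List[Tuple[str, ...]] = []

    def goto(self, url: str) -> None:
        self.calls.append(("goto", url))

    def click(self, selector: str) -> None:
        self.calls.append(("click", selector))

    def fill(self, selector: str, value: str) -> None:
        self.calls.append(("fill", selector, value))

    def press(self, selector: str, key: str) -> None:
        self.calls.append(("press", selector, key))


@dataclass(frozen=True)
class PlanStep:
    intent: str       # hypothetical intents: "open", "dismiss_cookies", "search"
    value: str = ""


def ground(step: PlanStep) -> List[Tuple[str, ...]]:
    """Turn one plan step into concrete driver calls (selectors are made up)."""
    if step.intent == "open":
        return [("goto", step.value)]
    if step.intent == "dismiss_cookies":
        return [("click", "#accept-cookies")]
    if step.intent == "search":
        return [
            ("click", "#search"),
            ("fill", "#search", step.value),
            ("press", "#search", "Enter"),
        ]
    raise ValueError(f"no grounding rule for intent {step.intent!r}")


def execute(plan: List[PlanStep], page: PageLike) -> None:
    """Ground each step in order and dispatch the calls to the driver."""
    for step in plan:
        for name, *args in ground(step):
            getattr(page, name)(*args)


if __name__ == "__main__":
    page = RecordingPage()
    plan = [
        PlanStep("open", "https://example.com"),
        PlanStep("dismiss_cookies"),
        PlanStep("search", "diet coke"),
    ]
    execute(plan, page)
    for call in page.calls:
        print(call)
```

In a real agent the selectors would come from the model's reading of the page rather than a hard-coded table, which is where mistakes can creep in.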

That's what it is built to do in theory, but at times it didn't quite follow through. Let me explain.

What I liked

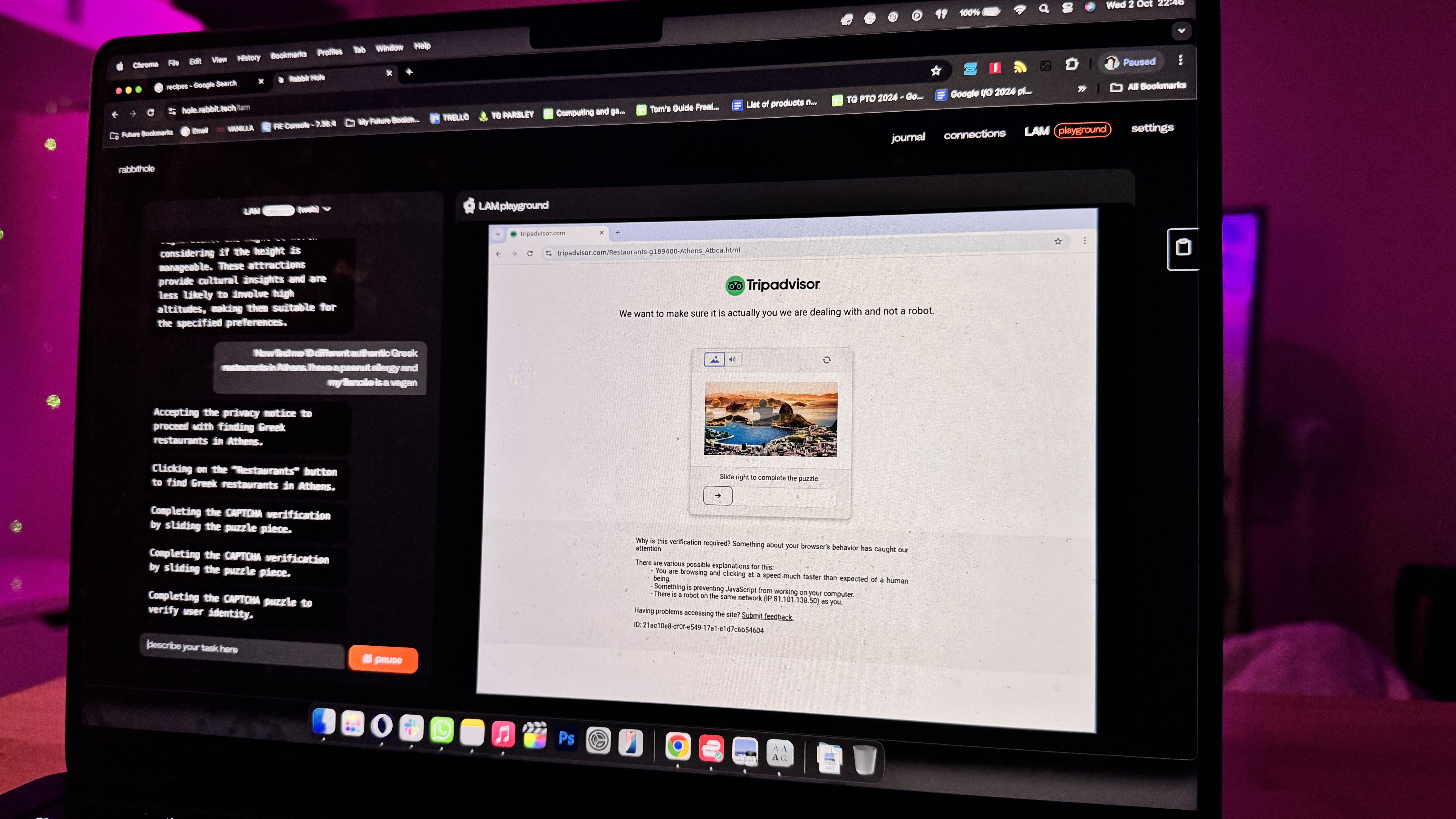

Well, there is really only one big thing I liked about it, and that's that Rabbit has followed through with what it promised all those months ago. That held across the tasks I saw and threw at the LAM playground, from visiting a recipes website and summarizing the most popular Thai Green Chicken Curry recipe, to hopping over to Trip Advisor and giving me several things to do in Athens while taking into account my fear of heights and love for Cypriot culture.

Technically, it can buy things for you, but I'd strongly advise against that for now (more on that later). One big thing that we did get an answer on was the agentic approach to travel — giving you a plan and actually taking action to book the flights and experiences.

“We didn’t know that in the US, we require a travel agency license to be able to handle booking. That was our fault,” Jesse quickly commented when I asked him about this feature. But now, with the LAM built so that you can see it working and directly interact with or interrupt what it's doing, Rabbit is absolved of needing said license.

Though once again, given how mistake-prone it is at the moment, I'd hold off on telling it to purchase anything on your behalf.

Another big feature of this playground is that the LAM has been trained on a desktop OS too — namely Linux. This is still all very early days, but what Lyu managed to show me was a request to open this OS’ equivalent to Microsoft Word and write a poem. What this means is with more development, it could fundamentally take over and create the work you may not be so bothered to do, like create a presentation deck. This is something that is not currently available in the beta.

Watching the LAM once again do this work in the background is a sign that Rabbit is moving in the right direction. The tool that has been built here and shown working to me is what was talked about all those months ago. It’s been quite the catch-up effort, but it’s the first sign of the AI agent I was looking for. As the old adage goes, better late than never.

Also, a hilarious shout-out to how deviously clever the LAM playground can get at times. Inspired by Android Authority, I gave it a whirl on the Wikipedia game (getting from one Wiki page to another by using links only) and I was able to recreate the same results: the LAM cheats by searching for the thing, and when I reminded it that this was cheating, it attempted to gaslight me by trying to edit the Wiki page information (anyone who sees a rogue edit on the page about Batman, I'm really sorry).

But on the flip side, this shows a creative interpretation of problem solving, which I know sounds scary, but demonstrates its potential to learn shorter routes to fulfil a prompt.

Room for improvement

Rabbit was transparent about the issues with this beta (emphasis on beta), and I stumbled across a bunch of them while running it. The first thing I noticed is that it is slow. On average, a new instruction is given every 5-6 seconds after a screenshot is taken and analyzed.

And when I say instruction, I mean going down to every single step: opening a website, then accepting the cookies prompt, clicking the text box, entering text in the text box, and hitting enter. All of these take that amount of time each.
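To put a rough number on that, here is a back-of-the-envelope Python calculation using the five steps listed above and the 5-6 seconds per instruction I observed. It assumes every instruction takes about the same time and ignores retries, so treat it as an estimate rather than a measurement.

```python
from typing import List, Tuple

# The five instructions from the example above.
STEPS: List[str] = [
    "open website",
    "accept cookies prompt",
    "click text box",
    "enter text",
    "hit enter",
]

# Observed range per instruction, in seconds.
SECONDS_PER_INSTRUCTION: Tuple[int, int] = (5, 6)


def total_latency(steps: List[str], per_step: Tuple[int, int]) -> Tuple[int, int]:
    """Return the (low, high) total time in seconds for a list of instructions."""
    low, high = per_step
    return len(steps) * low, len(steps) * high


if __name__ == "__main__":
    low, high = total_latency(STEPS, SECONDS_PER_INSTRUCTION)
    print(f"{len(STEPS)} instructions take roughly {low}-{high} seconds")
```

In other words, even a simple search on a single site works out to around half a minute before anything useful happens.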

Rabbit is aware that there’s a lot to work through here in terms of making it faster and reducing the latency.

Second, as you’d expect from any beta (especially in the AI space), there are bugs and mistakes it easily makes. I've got two examples for this:

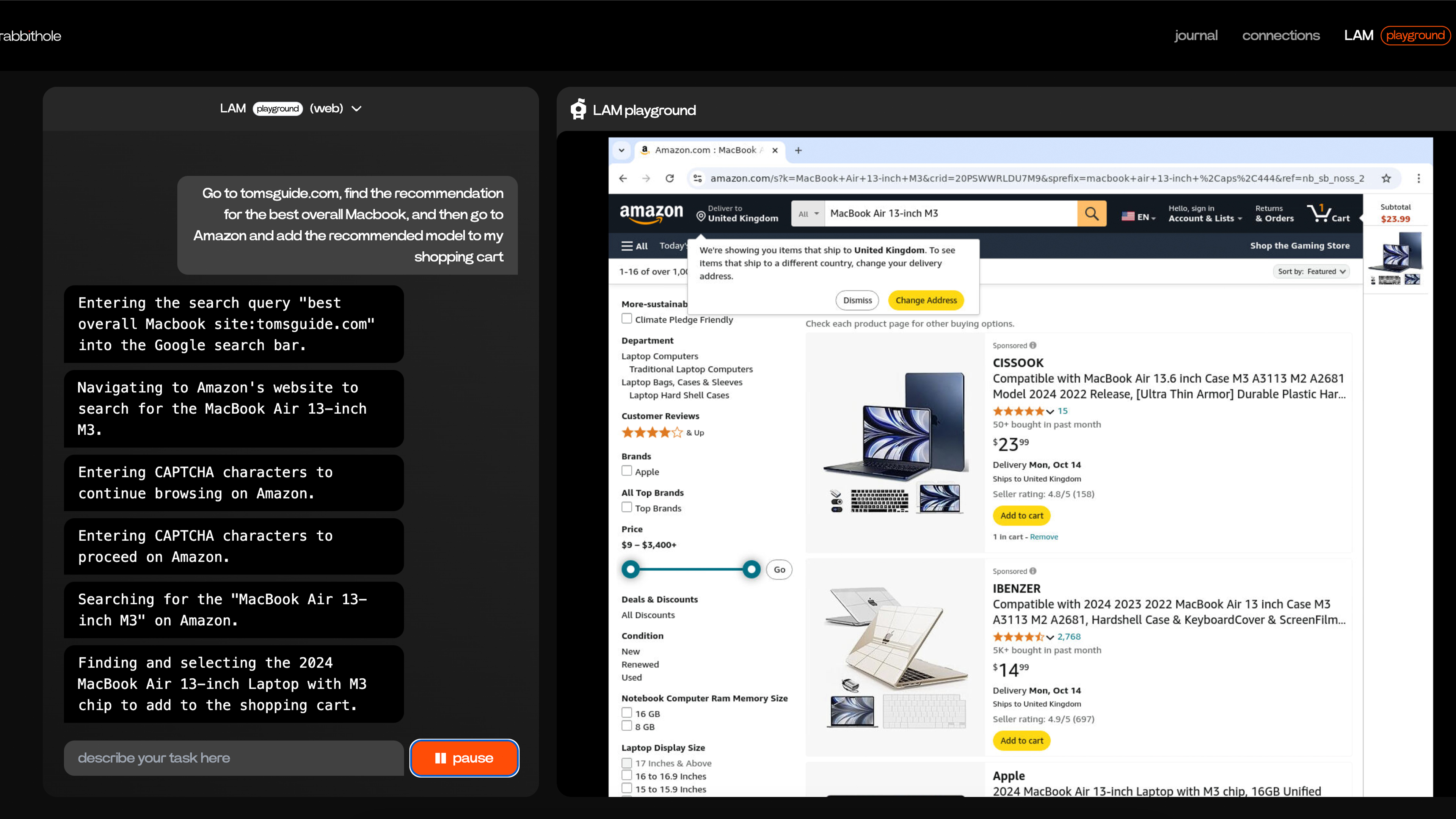

- I tasked it with going to our website, finding the best MacBook and putting it in the shopping cart. The first two steps went by without a hitch, but instead of the laptop, it put an M3 MacBook Air case in the cart.

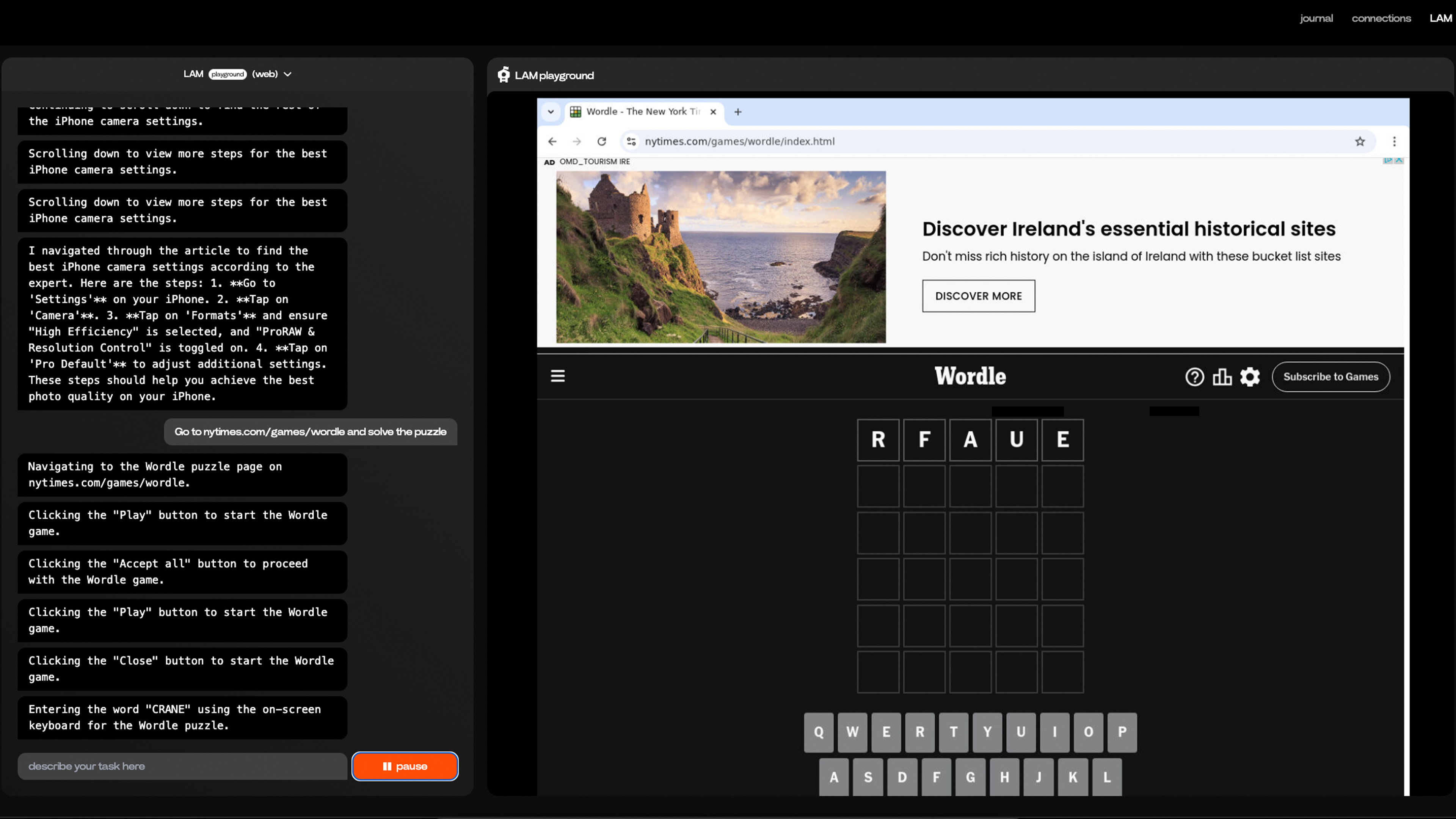

- I asked the rabbits to hop on over to Wordle and solve the puzzle — see if I can get a helping hand outside of our own Wordle hints and tips. Once again, the LAM did go over to the website and stated it would spell "CRANE" as the first word. Instead, it typed "RFAUE" and continued to try and submit it before I had to get involved.

Captured by Captchas and one big challenge

The third observation is those dreaded captchas. Whether it's text or image-based, you can watch the LAM playground recognize that it's a captcha but fail to solve it 90% of the time. I say 90% because there was one time where it did get past Amazon's, but we went straight back to square one as the site detected it was a bot and blocked my rabbit.

And finally, let’s cast an eye on the future here for a second. One obstacle that every AI company has been coming up against is whether the very companies that its models interact with actually agree to play ball.

In Rabbit’s case, the user-friendliness of a generic AI agent working across the entire web could be huge, but it’s just as dependent on these websites allowing this bot to visit and navigate them. What happens if the big players say “no”?

And Lyu is aware of the task ahead of him in securing these permissions. He talked about using Linux for its OS part of LAM, and how it could work with Windows or macOS, but that would require working on an extensive license agreement to do so.

Just like OpenAI, I can imagine a scenario where money would have to change hands for permissions to visit certain big brand sites. It’s an interesting mountain that isn’t here just yet for Rabbit to climb, but is certainly on the horizon.

Is there a security risk?

Let me get technical here for a second, with some help from researcher Emily Shepherd (you may have seen her in Coffeezilla's video about Rabbit). To make LAM Playground work, Rabbit is using natural language prompts (i.e. you tell it to do the thing in your own words and it does it).

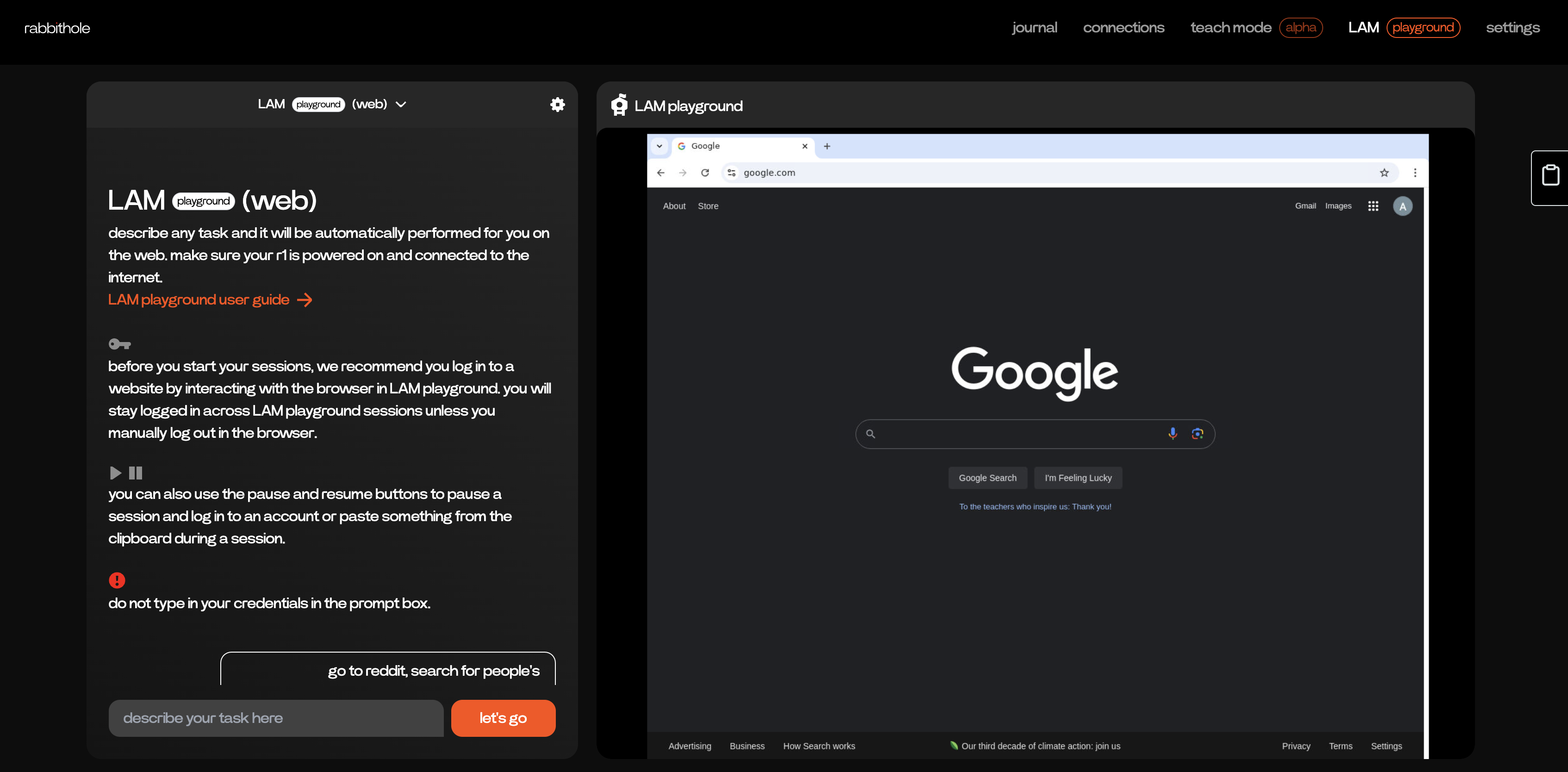

"This means users must enter their passwords directly into the prompt if they want playground access services behind logins," Shepherd commented. Luckily, Rabbit has confirmed that this isn't a must and have updated the instructions that first appear when you enter LAM Playground.

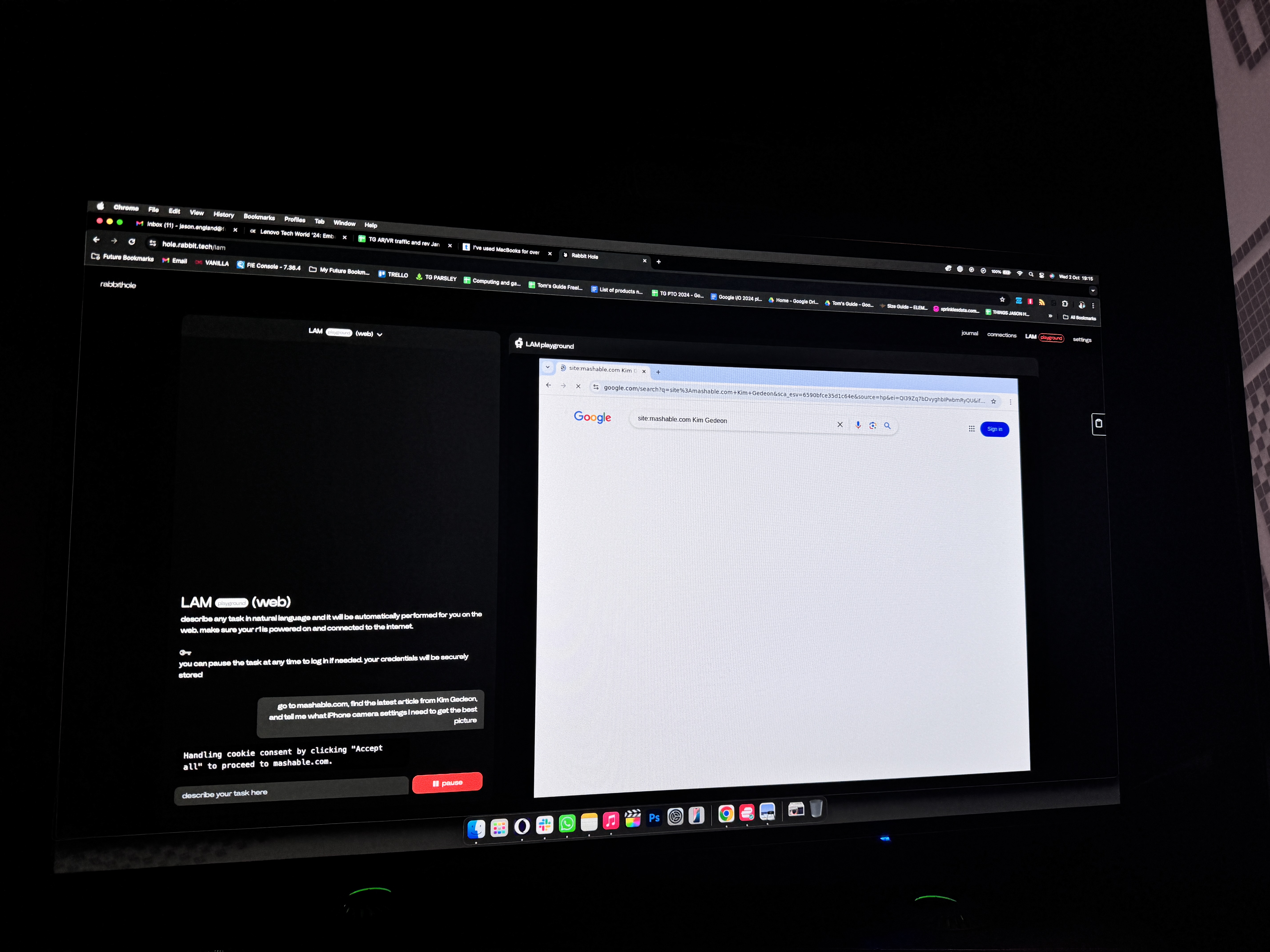

Previously, it stated that "you can pause the task at any time to log in if needed," but now it's a much more direct warning: "do not type in your credentials in the prompt box." So be sure to pause it at login pages and directly enter your information. You can see the before and after just above.

This should avoid situations like a demo earlier this year, where Jesse took Discord users through the WebVoyager that would underpin LAM Playground — where the code running in the background showed his login information for the whole community to see.

On top of that, Rabbit affirms that when you ask the R1 to do something, "a virtual environment is created" and stored in an encrypted vault, and the only key to decrypt it is your own R1. Once any task is done, that virtual environment is discarded, and any browser logins and cookies are stored securely with Piiano, a third-party data security company — meaning you remain logged into those virtual environments unless you manually log out.

I really appreciate the speed with which Rabbit has responded to this concern and added the very clear instructions at the top of the LAM Playground page in rabbithole.

Don’t call it a comeback?

It’s been a while since the launch in May, and from what I've tested on LAM Playground, Rabbit still has a long way to go in making this usable. At the moment, it's fun to play around with, but there are plenty of bugs to squash, latency issues to defeat and a security challenge. But it's a small step in the right direction.

Will it wash the bad taste out of the mouths of those who felt jilted by the expectations vs reality of picking up the R1 without these claimed features in the first place? Will it drive an uptick in that 5,000 daily user figure (5% of those who actually own an R1)? That remains to be seen.

But taking an actions-over-words approach to the initial response is definitely the smarter move, and I’m keen to see whether this will speed up and grow into something cross-platform and really quite clever.

Other things that Rabbit are bringing are more natural language interactions with the R1 (this went public most recently), and currently an Alpha test of Teach Mode is running. From my time using this, it almost feels like a slicker version of Apple’s Shortcuts app — relying instead on a natural language prompt over needing to manually enter each step of the process.

The biggest question that Rabbit hasn't satisfactorily answered is whether a smarter version of its AI gadget will make sense in an age of powerful AI phones like the iPhone 16, Galaxy S24 and Pixel 9.

Lyu told me that his future vision expands beyond these phones by having the capabilities of being cross platform — bringing agentic AI to fulfill any request regardless of the OS needed to do it, rather than being limited to Android or iOS like these phones. But does the regular user actually care about this? That's the big one, which is up for debate — especially since it's more likely than not that while Rabbit is first out of the gate with a beta, you may see phones get a similar feature set soon.

There is a lot of work to do to get to the purported public release of this in roughly six months' time, according to Lyu, but based on Rabbit achieving an OTA update every week to squash bugs and improve the experience, there’s the capability to possibly pull it off.