You may be wondering how to spot AI images in 2024, considering how fast AI image generators have improved. Is it still possible to tell a real photo from an AI-generated invention, or human-made art from AI art produced by a machine model? It's becoming more difficult, but there are still ways to spot AI art if you look closely, even in some of the examples that almost fooled us.

Recent developments have seen Instagram finally add a 'Made with AI' tag for AI-generated images, but its implementation hasn't been without controversy. There's also been a surge of interest in the Cara app, a social media platform with an anti-AI policy. Cara uses an automated tool to detect AI art, but can the human eye also do the job? Below, we suggest several ways to tell if an image was generated by AI.

How to spot an AI image

Some AI images are more obvious than others, often because of the model they were made with. Some of the best AI art generators are capable of creating more photorealistic output than others. Not all AI images will have all of the telltale signs that we mention below, but our aim is to highlight some of the things to look out for. We've also included some suggestions for further investigation that can help if an image appears genuine to the eye.

01. Strange human anatomy

Most humans have five fingers on each hand, five toes on each foot, two arms and two legs. We know that, but AI image generators can get confused by all these limbs and digits. The latest iterations of Firefly AI, Midjourney, DALL-E, Stable Diffusion and Google Imagen have become much better at this, and the days of obviously wrong hands are largely gone, but look closely if somebody is holding something. Remember that AI photo of the Pope in a puffer jacket? He appears to be holding a can with his fist closed.

Even if the main subject of an image looks anatomically correct, people in the background can be a giveaway, particularly in scenes with crowds. One of the AI images that fooled the most people recently was uploaded by Katy Perry, showing the singer apparently attending the Met Gala.

There are some telltale signs in the rendering of Katy herself (the direction of her eyes seems off, for example), but it's fairly convincing. Zooming in on the photographers in the background, however, reveals a jumble of weird fingers and cameras, or camera-like objects, pointing in all directions.

Hair is something else that AI models struggle to render realistically. Is the subject's hair blowing in the wind in an unnatural way? Do the strands of hair appear unnaturally sharp or start and finish abruptly?

02. Strange artifacts and inconsistent details

There are other anomalies and artifacts that tend to crop up in AI images, including objects in illogical places and distortions in complex patterns or lines that should be symmetrical. AI doesn't know human logic, so it sometimes puts windows, doors or other objects in places where a human would never put them, or it interrupts and changes patterns in wallpaper, carpets and clothing. Accessories like jewelry can often appear warped, and reflections and shadows may fall in contradictory places.

"Making pictures of Trump getting arrested while waiting for Trump's arrest." pic.twitter.com/4D2QQfUpLZ (March 20, 2023)

In one of these AI-generated images of Trump getting arrested, the now convicted felon appears to be wearing a police truncheon on his own belt. In the other, there are strange textile folds around where his left arm should be.

Also look for inconsistencies in the depth of field of the image: does an object in the background look sharper than it should, or an object in the foreground softer?

03. An unnatural smoothness or exaggerated sharpening

The overall finish can also give away AI-generated images, which often have an unnatural smoothness or hardness, or an inconsistent combination of the two. This can sometimes require a more trained eye since retouching in photo editing software like Photoshop, image compression and use of a lens with a shallow depth of field can also create certain effects in images, from soft backgrounds to smoothed skin or overly harsh sharpening.

Take @agswaffle, for example, an Instagram account dedicated solely to AI images of Ariana Grande. In this image, even ignoring the presence of a seahorse the size of the singer's head, the lighting and exaggerated airbrushed look give it away, making her look like a CGI cartoon.

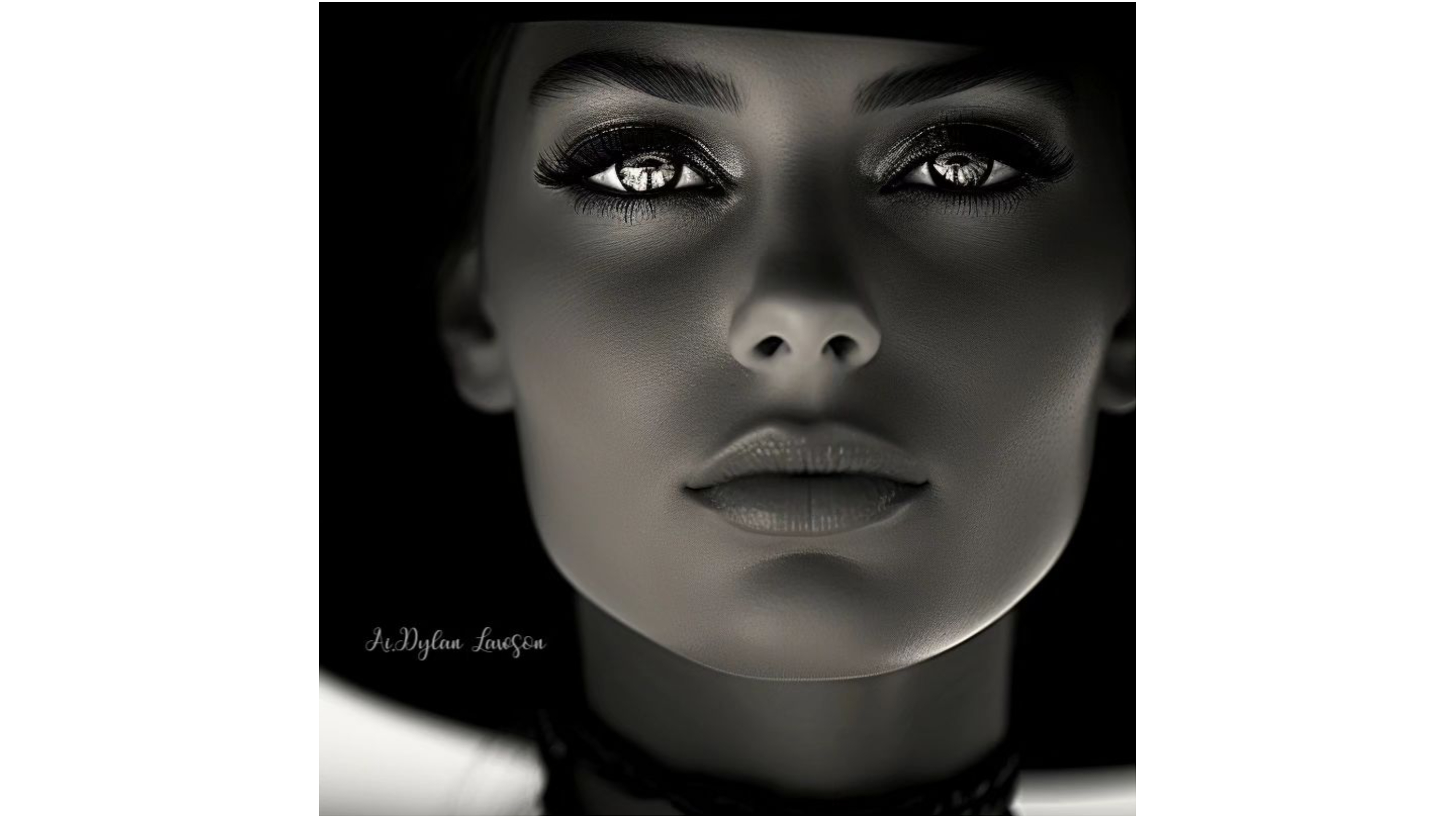

In other cases, it can be a crude, over-sharpened look that gives an 'AI photo' away, as in the eyes in the image from Dylan Lawson. But again, this can sometimes also be the result of overzealous AI-powered sharpening of a real photo. Many viewers suspected that the image below, from a Netflix documentary, was AI-generated. That would be hugely controversial, since a documentary is the last place where undeclared AI imagery should be used, but it may be the case that AI upscaling and sharpening tools were used to try to improve the clarity and resolution of a genuine photo.

04. Garbled text and signage

The rendering of text is another area where AI image generators have improved a lot, but although some can now be instructed to generate specified text in images, words, logos and emblems in backgrounds still tend to be nonsensical. Originally posted by Nik Art on Facebook, the image of Paris above fooled some people at first glance, but when we look closely, there are lots of giveaways. For one, people's faces look very strange, and the street signage is garbled. There are also garbage bags balanced in impossible locations.

Here's another example where the image may look convincing at first glance, but it soon becomes obvious that it's AI when we look at the logos.

05. How to check if an image is AI

If you're still unsure, there are a few things you can do to check whether an image is AI-generated. You can start by checking the image's metadata: download it to your computer and open Tools > Show Inspector in Preview on a Mac, or Properties > Details on a Windows PC, to see the EXIF information. EXIF data is written into an image file when a camera takes a picture and can be updated when the file is edited. However, metadata itself can be edited.
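If you'd rather script that first step, here's a minimal sketch in Python (standard library only) that checks whether a JPEG file contains an EXIF block at all. It assumes a baseline JPEG, the function names are our own hypothetical choices, and it only looks for the standard 'Exif' APP1 segment rather than reading individual tags. Treat the result as one clue among many: a missing EXIF block doesn't prove an image is AI-generated (many websites strip metadata on upload), and a present one can be faked.

```python
import struct

# Minimal sketch: walk the header segments of a baseline JPEG and report whether
# an EXIF block (an APP1 segment starting with b"Exif\0\0") is present.
# Function names are hypothetical, chosen for this example.

def jpeg_segments(data: bytes):
    """Yield (marker, payload) pairs for the header segments of a JPEG."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # unexpected structure; stop scanning
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # SOS (image data) or EOI: no more headers
            break
        # The two-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length

def has_exif(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an EXIF APP1 segment."""
    return any(
        marker == 0xE1 and payload.startswith(b"Exif\x00\x00")
        for marker, payload in jpeg_segments(data)
    )
```

To try it on a downloaded file (photo.jpg is a placeholder name), read the file in binary mode and pass the bytes in, e.g. `has_exif(open("photo.jpg", "rb").read())`.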

Adobe applies tamper-evident Content Credentials to AI images that are generated using Adobe Firefly features, including Generative Fill and Generative Recolor as well as Text to Image generation. However, there are many other AI image generators that do not. Instagram's AI detection appears to be able to identify the output from some of these.

You can also try to investigate the origin of an image by doing a reverse image search on a search engine like Google. A real image of a famous person is more likely to appear online on various sites than an AI fake. You may also be able to find the original source of the image.

Finally, there are several AI detector tools available that allow you to upload or drag and drop an image to inspect it. These include TinEye, AI or Not and Hive Moderation. Some tools give a percentage score indicating how likely they think it is that an image was AI-generated.

There are still no international regulations on the use of AI imagery. The European Union has draft regulations that will require AI images to be flagged with disclaimers, but it remains to be seen how that will be policed. In the meantime, it makes sense to be skeptical about images that show very unlikely situations or have an uncanny feel to them. For more tips, also see this artist's guide to spotting AI.