During a recent conference I was given a demonstration and overview of the Chinese phone manufacturer Honor’s approach to artificial intelligence and it made perfect sense — eventually.

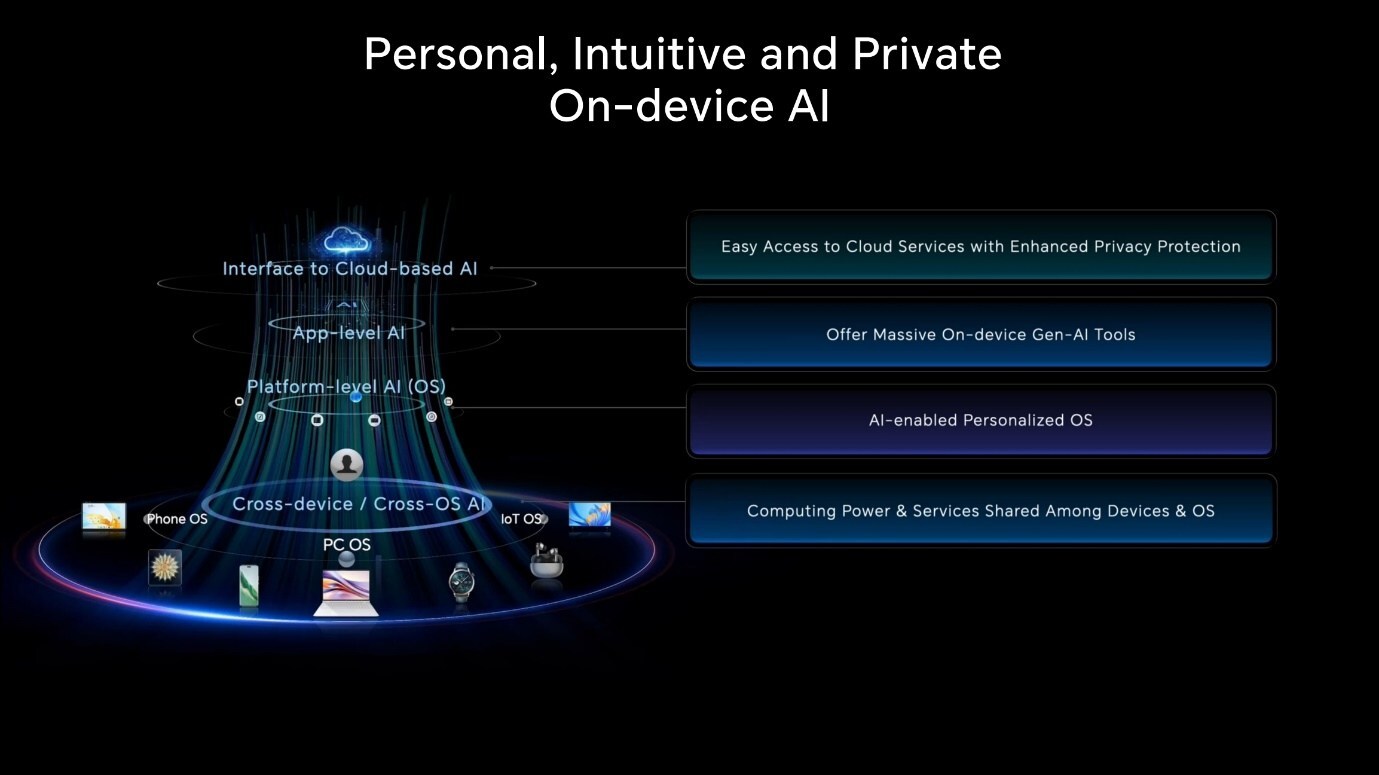

The company spoke about four layers of AI, with each layer representing a different part of the system. Diving into this with CEO George Zhao during a press conference, it was clear the plan is to create a sustainable approach to AI for a whole device ecosystem.

It puts local AI, which is fast and secure but not as powerful, at the heart of the system to ensure data is secure, then turns to a range of cloud offerings to handle compute-hungry services. In addition, this would allow other devices such as earbuds, watches or smart glasses to share that secure, local data processing.

This is very similar to the approach I suspect we’ll see from Apple, given the rumors and research papers published over the past year. We won’t know for certain until WWDC, but I suspect Tim Cook will unveil on-device AI for personal data and cloud AI for complex tasks.

How does this approach to AI work?

The idea is that anything that can be done on the device should be done on the device. Then, anything that can’t be done locally should first have data secured by on-device models.

Applications make use of a range of locally running AI models, including ones for specific tasks like image editing, data analysis and transcription. Rumors suggest Siri 2.0 will act as the hub for Apple in all things AI, even able to dive into apps and perform tasks automatically.

This level of deep integration, which Honor announced at Viva Tech in France as its 4-layer system, allows for flexibility in terms of which models or providers to use and gives developers more choice over how to integrate AI into apps.

Honor says its system will also allow its MagicOS to adapt to the user, presenting the right apps at the right time and adapting functionality depending on frequency of use.

What are Honor’s four layers?

Zhao says the approach Honor has taken to AI is designed to be a beacon to the wider industry and that the company will be open to partnerships. For example, at the cloud level, the partnership is with Google Cloud as a whole rather than with Gemini specifically. This means Honor can use any AI product available as part of Google Cloud, including Anthropic’s Claude 3.

The main focus, though, is on ensuring data doesn’t leave the device unless absolutely necessary. A series of small language models built by Honor manage personal information and perform actions on the device first, including securing the data.

The CEO gave the example of taking a financial report and asking AI to provide analysis. Some of this can be performed on the device; an abstracted version of the data, minus the sensitive information, is then sent to the cloud for more complex calculations and analysis.
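To make the local-first idea concrete, here is a minimal Python sketch of that flow. It is purely illustrative and not Honor’s implementation: the task names, the `route` and `redact` functions and the regular expressions are all assumptions, with simple patterns standing in for the on-device models that would decide what counts as sensitive.

```python
import re

# Hypothetical stand-ins for what an on-device model would flag as sensitive.
SENSITIVE_PATTERNS = {
    "ACCOUNT": re.compile(r"\b\d{8,16}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

# Hypothetical set of tasks assumed to be light enough to run on the device.
LOCAL_TASKS = {"summarize", "transcribe"}


def redact(text):
    """Replace each sensitive match with a placeholder label."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def route(task, text):
    """Keep local tasks on the device; redact everything sent to the cloud."""
    if task in LOCAL_TASKS:
        return ("local", text)
    return ("cloud", redact(text))


if __name__ == "__main__":
    report = "Account 1234567890 owned by jo@example.com grew 12% in Q1"
    print(route("summarize", report))  # stays on the device, untouched
    print(route("analyze", report))    # redacted before leaving the device
```

In this sketch the cloud only ever sees the placeholders, while the figures needed for the analysis (the 12% growth, the quarter) pass through unchanged.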

The four layers sound like something Apple would make, focusing on security and privacy at a local level and working with a range of partners for the cloud. I think Apple is also likely to have its own models for set tasks. We’ve seen some of this coming out from Apple Research already.

We won’t know what Apple will do around AI until WWDC or how far the split between local and cloud services will go — especially given the partnership with OpenAI — but I think Honor has given us some indication of what we can expect.