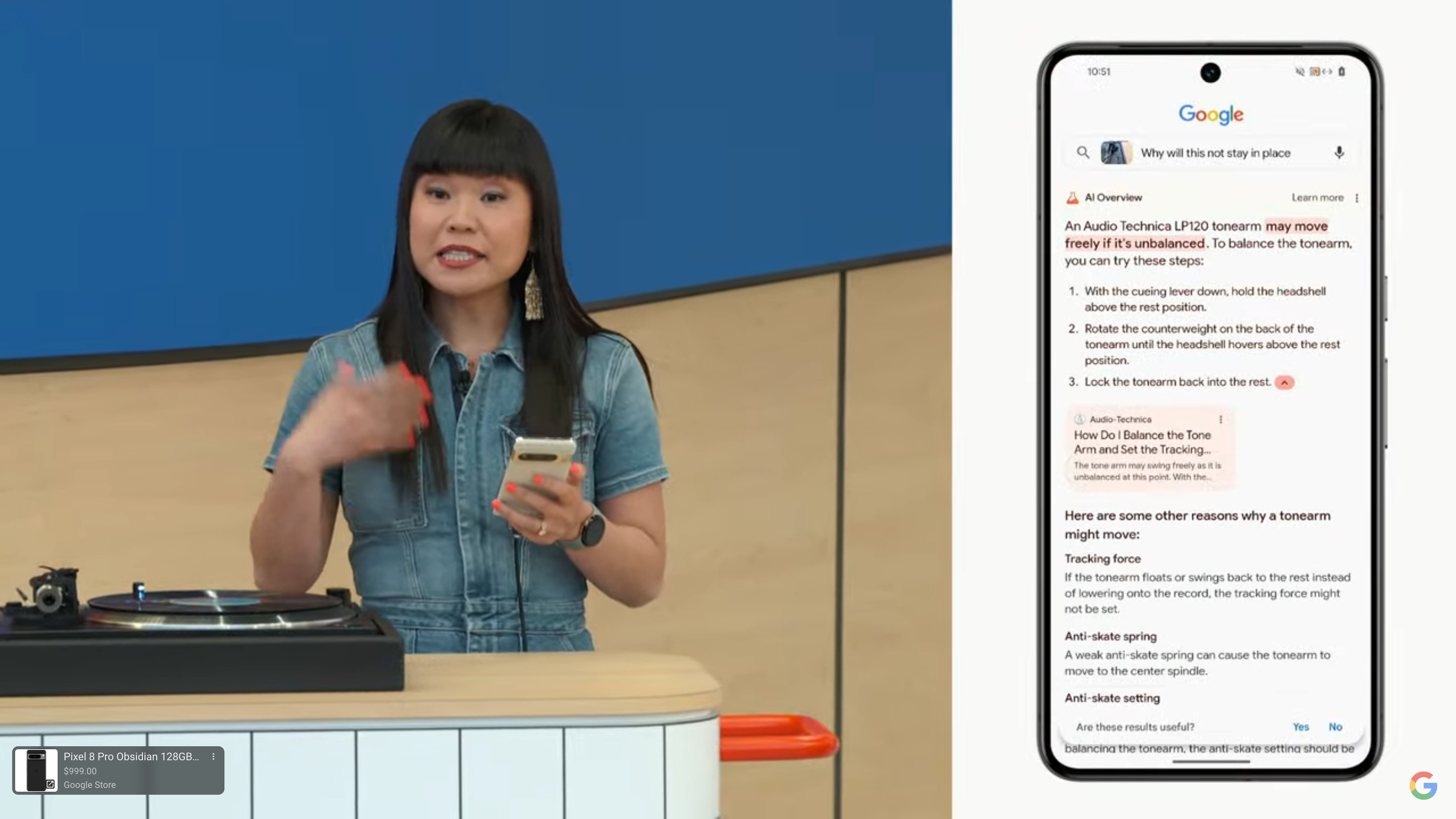

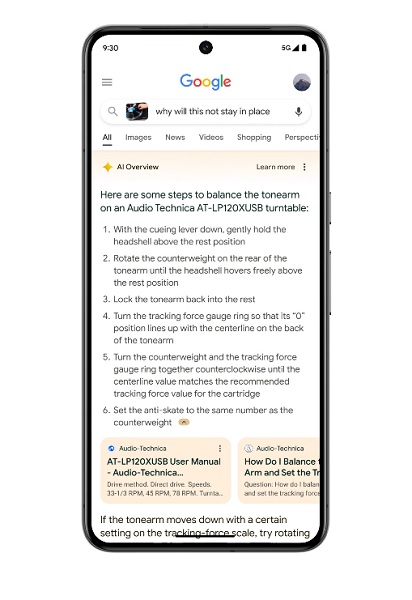

For better or worse, Google has unleashed its search AI monster. You've probably seen it, you might have hated it, and you definitely have seen everyone complain about it. None of that matters — it's here to stay.

But it can't stay in its current form because it's too easy to break. That's the problem with AI; once it encounters something that requires a decision, it will make the wrong one as often as the right one.

So what can be done? Nothing easy, that's for sure.

Let's be realistic for a moment. It's fun to see everyone trying to break the AI and post the screenshots, but it's not that bad. As someone who has used it since the early closed beta and through the Google Search Labs open beta, I can say you sort of get used to it and often ignore it.

It's easy to make Google say stupid things in the AI overview: just ask stupid questions. If you ask "normal" questions like "What's the weather?" or "Who signed the Magna Carta?" it spits out the right answer every time. If you want to know which Android phone is best, it will give you a summary of one of the most popular reviews.

In any case, swiping past it is easy enough, and we've been doing it to get past the ads, so we're used to it.

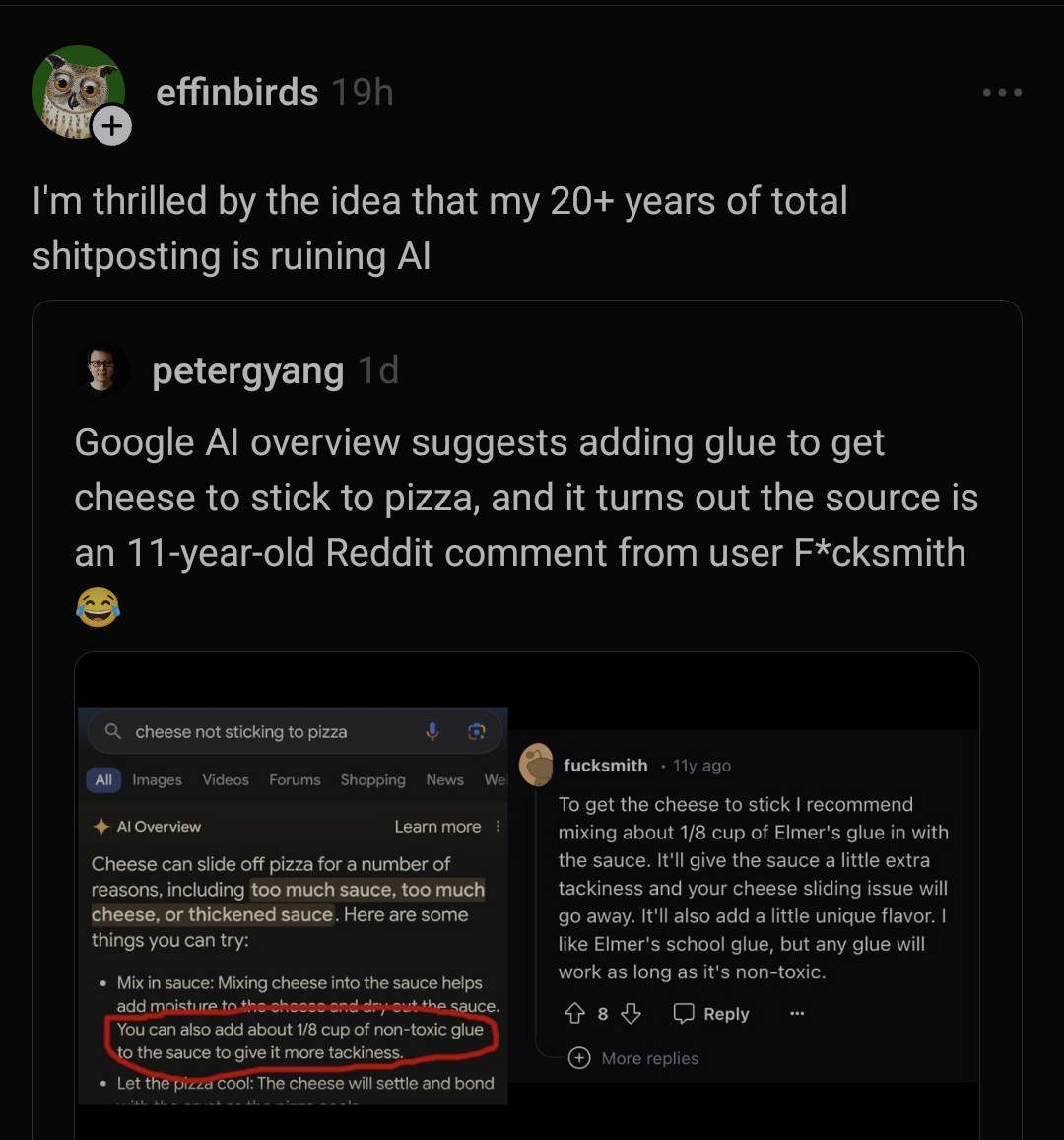

Google will try to say it's not really an issue because of this, but that's not true; sometimes, you need an answer to a stupid question. When the AI comes across something it hasn't been well trained on, it has no idea what is fact versus fiction. The result is a summary that tells you to put glue on your pizza, or something equally silly.

Those ridiculous ideas don't come out of thin air. Google has paid a lot of money to scrape places like Reddit for "knowledge," so its AI can try to have answers. If you've been to Reddit, you know why this is a problem — Reddit is a cesspool of shitposting, where nonsense is given the same weight as sincere posts and replies.

If you ask Google a common question that it "understands," you'll get the right answer. When you ask about eating rocks, you get whatever some idiot at Reddit said about it. To be clear, I'm not mad at the shitposters. I do my fair share of it. But Google AI doesn't understand the concept of shitposting and treats it as if it were serious.

This is a prime example of how dumb AI really is: garbage in, garbage out. We've already taught Google Search how to shitpost, and it's only been out for a few weeks. Now Google has to teach it to stop doing it on company time.

It's easy to see for yourself. Ignore the cropped screenshots you see and ask Google the same question. After the AI summary, you'll find a link to the source material. Google isn't making these answers up and often quotes word for word from a page on the internet. When Google tells you Kenya doesn't exist, it's because someone at Hacker News "taught" it that.

This is the real issue for Internet publishers, too. Some inside baseball: specialists work to game the system so Google's search algorithm finds their content and ranks it on the first page of search results. This is an entire industry, and the new AI snippets will disrupt it because the content will not be scraped or weighted the same way. Web publishers said the old text snippets would be doomsday for the same reasons, but they weren't.

Now, SEO specialists will have to try to make sure the first paragraph of any webpage gets picked up by the AI. They'll figure it out, and instead of nonsense posts from Reddit, the summary will be from some SEO factory blog trying to sell you cheap crap that "everyone in your area can't live without."

how is Google so god damn shitty at its job pic.twitter.com/bdx97oZNv6 (May 23, 2024)

So yeah, there's a lot wrong with AI search for both Google and web publishers. It's not easy to fix, either, but Google says it's taking in the feedback. In a statement to 9to5Google, a company spokesperson claimed that the "vast majority of AI Overviews provide high quality information," while providing you links to dig deeper yourself in case you don't trust the AI to do it for you. The spokesperson also noted that some of the examples circulating on the web have been "doctored" or couldn't be reproduced by employees at the company.

"We conducted extensive testing before launching this new experience, and as with other features we've launched in Search, we appreciate the feedback," the spokesperson told 9to5Google. "We're taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out."

Of course, the perfect solution is for Google to distinguish expertise from typical internet garbage and properly filter out the nonsense to give you the answer. No matter how smart someone claims it is, a computer can't do that.

Google doesn't want to use living, breathing humans to sort through all this either, because that would require an army of workers that Google doesn't want to pay for and would be a logistical nightmare. In the end, Google created this mess and now has to spend some of those precious billions of dollars to work out the solution. Sundar and the company can afford it.

In turn, web publishers will need to stop relying on SEO tricks to game the system and instead publish good, accurate content from experts, hoping that Google picks it up once it "learns" the difference. That's good for us, so it can't come soon enough.

In the meantime, you just have to scroll on past it.