Google is updating search with some new food-focused skills and Google Maps with a new augmented reality view, the company announced Thursday.

Both updates are part of Google’s ongoing reinvention of how it displays its gigantic database of information in search, Maps, and more. The goal is to reposition Google as a sort of information discovery tool, rather than just a place you turn to for questions and answers. The search giant seemingly wants to get everyone spending more time in its services and in front of ads.

Finding the dish you’re looking for

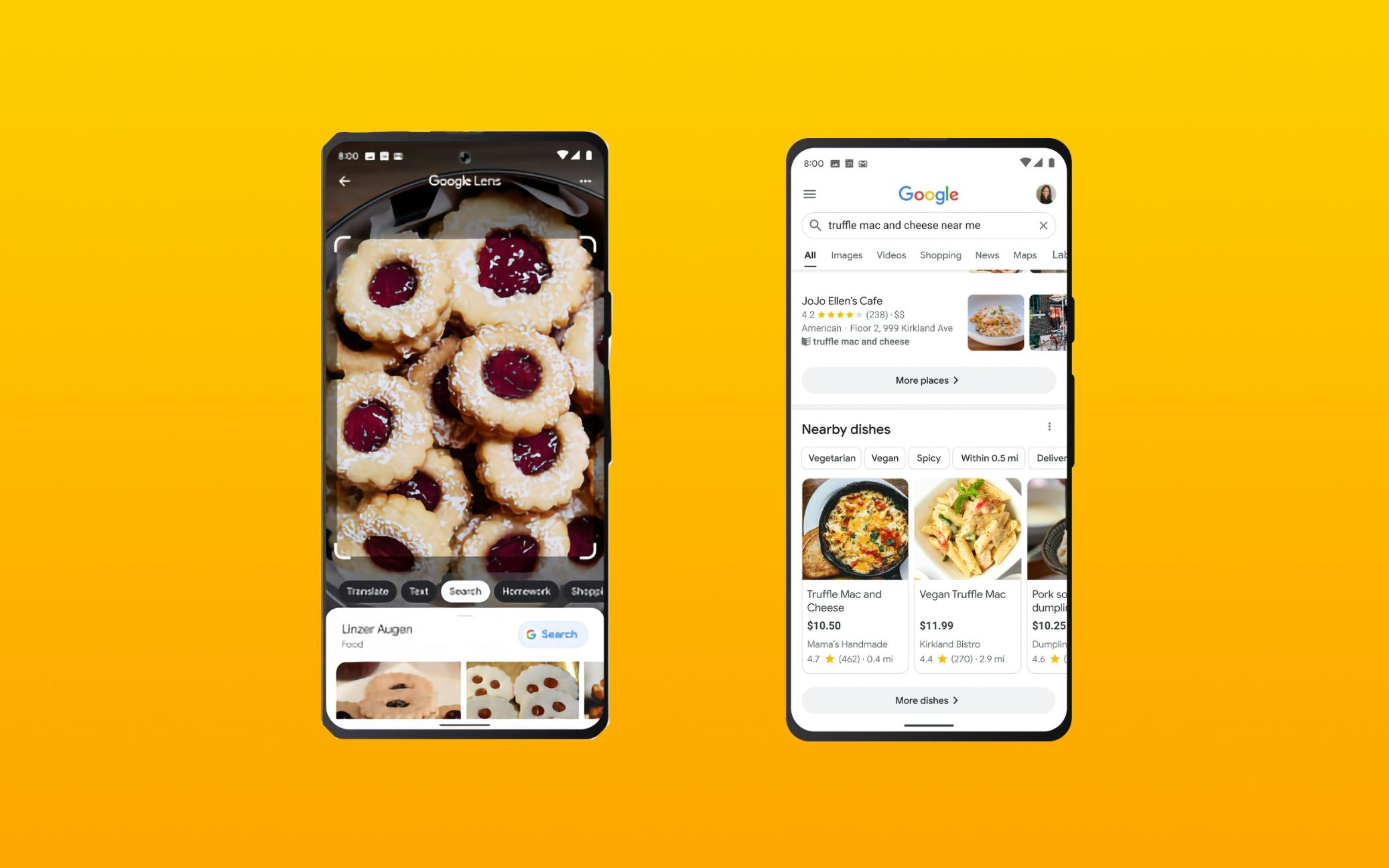

The first change Google’s making is to its “multisearch” feature — searches that combine images and text for more nuanced results — so it can now find food near you. In Google’s example, you can open up the Google app, snap a photo or upload a screenshot of a specific dish, type in “near me,” and then see every restaurant or store that sells the dish nearby.

The idea is that searching that way should be faster than trying to find the name of the dish or business that makes it (imagine having to look through a bunch of menus) while also hopefully being more accurate. Google previously applied multisearch to fashion, where identifying something seems equally complex. In an arena like clothing, you might want a variation on the thing you’re photographing rather than an exact replica (a photograph of a blue button-down plus the text “green,” for example).

That doesn’t mean traditional text-based searches aren’t getting improved as well. Google says typing in a search for a specific dish will also pull up nearby restaurants that serve it. If you want truffle mac and cheese, you can find truffle mac and cheese, one way or another.

Searching for locations in the world around you

If you used to be an avid Yelp user, the concept behind Google Maps’ Live View may feel familiar. Yelp introduced Monocle in 2009 as a rudimentary software feature that layered Yelp results onto the world around you. (Yes, people were obsessing over AR in the late 2000s, too.) Google Maps Live View is basically that concept, but leveled up.

If you’re in a new city (the feature is rolling out in London, Los Angeles, New York, Paris, San Francisco, and Tokyo) you can pull out your phone, open up Google Maps, hop into Live View, and get common Maps searches (nearby restaurants, ATMs, gas stations, etc.) overlaid onto the world around you. Once you find what you’re looking for and tap on it, Maps can immediately jump into AR directions to get you the rest of the way there.

Changing search behaviors

There’s a good chance that adding features like these to search or Google Maps could be more meaningful to the average person than anything Google Assistant can do. But they do require you to think in a different way. Speaking personally, my first instinct is to never pull out my phone’s camera when I’m looking for information.

Some of that is generational. I remember when a Google search only returned text, and video wasn’t the default way to learn something. Some of it, however, has to do with what using Google is like on platforms the company doesn’t control. To get cutting-edge search features I have to use the standalone Google app on iOS, something that’s naturally not as much of a hassle on Android.

I don’t think it’s a complete coincidence that the direction of Google’s software changes also encourages you to spend more time and “stumble” onto more things as you search. The company’s money lies in its advertising business (at least $147 billion per recent reports), and search is an ideal place to sell ads. Getting you to spend more time searching is also a pretty great opportunity to put more of them in front of you.