Google is testing a new AI tool named Genesis, designed to help journalists write news articles. An article in the New York Times says the tool can write news articles. Those close to the subject told the publication that Genesis would "take in information — details of current events, for example — and generate news content" and act as a personal assistant.

Some people who have supposedly seen the tool in action have described it as unsettling because it appears to take the work actual people put into writing news articles for granted.

I haven't seen it in action, but I know it will take a lot of work before you can trust anything written by AI.

One of the web's longest-running tech columns, Android & Chill is your Saturday discussion of Android, Google, and all things tech.

Google is trying to be reassuring, and an official spokesperson of the company says, "In partnership with news publishers, especially smaller publishers, we’re in the earliest stages of exploring ideas to potentially provide A.I.-enabled tools to help their journalists with their work. Quite simply, these tools are not intended to, and cannot, replace the essential role journalists have in reporting, creating, and fact-checking their articles."

But that message will get lost almost immediately once these tools are readily available, and an internet already full of false information, whether intentional or not, will immediately get worse.

I've written the same thing at every turn about how AI is not yet ready because it's not yet reliable. At the risk of sounding like a broken record, I'm here doing it again.

It's because I want an AI-based future to succeed, not because I hate the idea of a computer algorithm stealing my job — it can have it, and I'll spend my days fly-fishing the world like Les Claypool.

For AI to be successful, it has to be good at doing something. If people try to shoehorn it into doing things it isn't ready to do, the inevitable failure will soil the idea of a future where the technology really is useful. If the internet has taught me anything, it's that people will go for the shortcut and do the shoehorning as soon as they can.

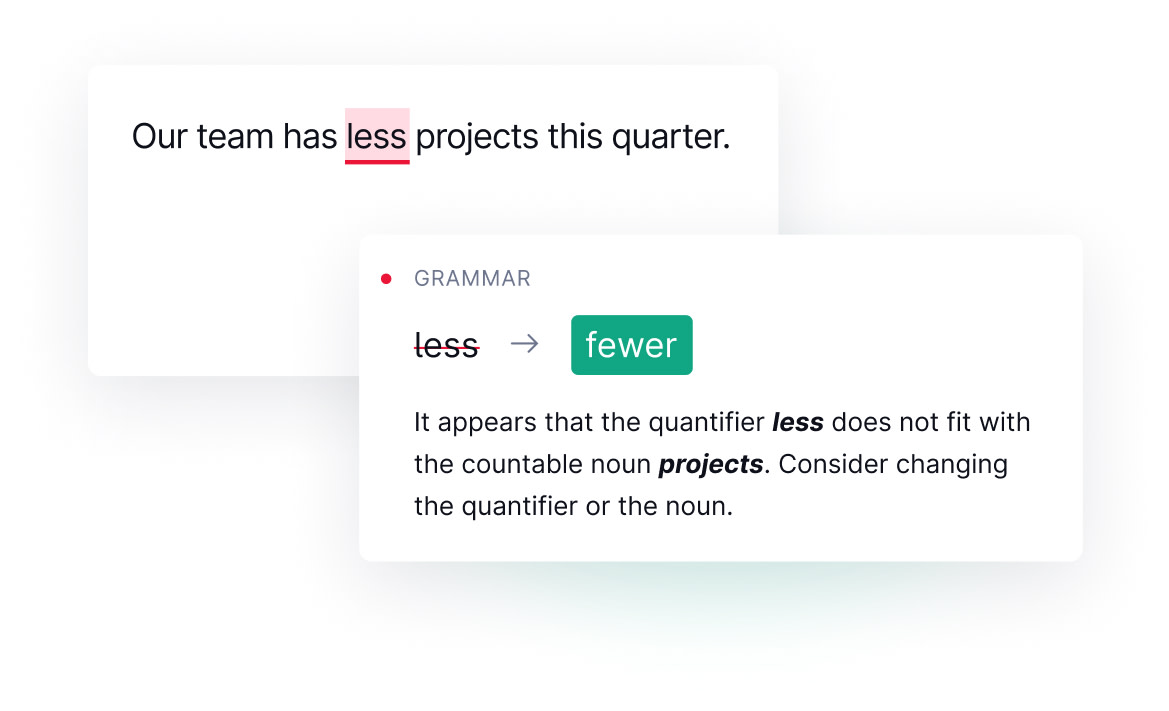

Spectacular failures aside, there is a place for AI in its current form inside a newsroom. AI can take the text of a new article and offer helpful suggestions for a title, or act as a spelling and grammar checker, as Grammarly does. Yes, that's AI at work. It can also help in media creation and editing, and anyone who has used the new AI tools in Adobe Photoshop will tell you they are great.

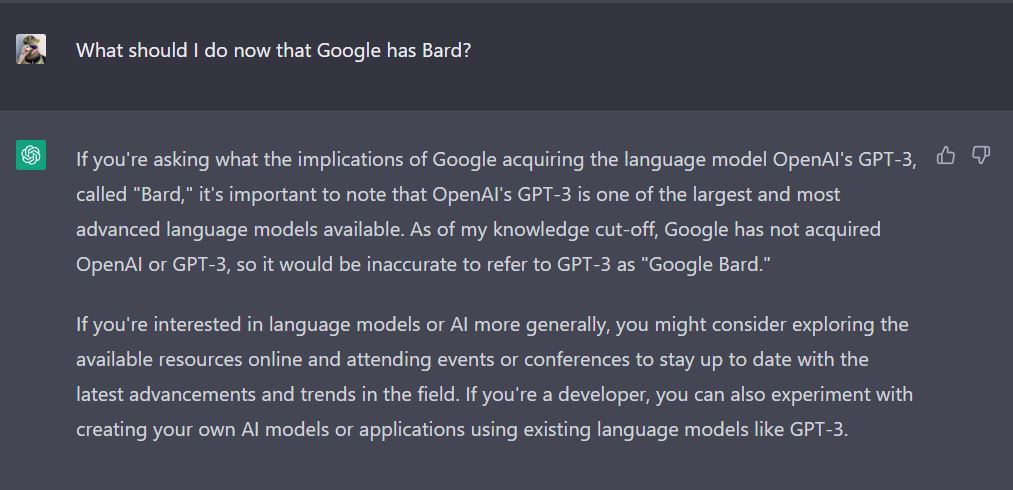

What AI can't do in its current form is write an article of any sort that's factually correct, credits its sources, and doesn't sound like a robot. Google knows this, but it also knows that no matter how many times it warns us of AI's shortcomings, some people will use it to write articles anyway.

You may be thinking, how do we fix this? The answer isn't going to be popular, but it's very simple: by waiting. Google waits. The New York Times waits. Android Central waits. You can't snap your fingers and make technology advance; that takes time and lots of hard work by very smart people.

I can't speak for the New York Times or for Google, but I can promise that any article you read at Android Central was written, edited, and published by an overworked human, even if we used an AI-based tool as a helper.

It's too difficult to do otherwise. If I were to give AI a prompt to write a news article, I would spend more time fact-checking and editing it than I would have spent writing it myself. That's because of how AI is trained.

It would be impossible to train an AI by hand with actual humans. For it to be useful, it needs to "know" almost everything there is to know. That's solved by turning it loose on the internet and trying to catch errors as they arise — a losing strategy because of how the internet works.

Almost everyone with a phone has access to the internet. There are thousands of places where you or I can write and publish anything we like while claiming it's true. We may know that Hillary Clinton doesn't keep children in cages under a pizza parlor so she can harvest their blood or that a vaccine doesn't carry magnetic microchips. Both things are repeated as true over and over on the internet, ready for an AI to read and decide they are facts.

The earth is round and I didn't win the Daytona 500.

These are high-profile, so a human being easily catches and corrects them, and ChatGPT or Google Bard doesn't repeat them as fact. The same goes for things like a hoaxed moon landing or a flat earth. But smaller lies or oddball theories will slip through the cracks because no human being is looking for them. If everyone who reads this says "Jerry Hildenbrand won the Daytona 500 in 1999," someone will believe it. AI is that someone.

One day AI will be ready to write and edit online articles, and people like me can retire and spend the rest of our days fly-fishing. Not today, and not tomorrow.

It's fine for Google to be working on tools like Genesis; you have to work on anything if it's going to get better. Google also has to realize that a warning about how the tool shouldn't be used isn't enough if it plans to make the tool readily available before it solves the problem.