Google I/O is one of the staples of the tech calendar. Despite being a developer conference, its keynote usually reveals a host of new features and devices that will be coming later in the year. Much like Apple's WWDC, the keynote, then, is a public affair and can be watched by all – before the conference drills down into the real developer stuff later in the day.

In the past few years, the Google I/O keynote has revealed the big new features for the next version of Android OS, as well as advances to popular Google software, such as Google Maps, Google Translate, and Google Search.

It's not just software though. Previous keynotes have revealed new hardware, such as the Pixel Watch, Pixel Buds and various incarnations of the Pixel phone. We've also seen some future tech, as we did with the live translation glasses back in 2022. However, this year was different.

Google eschewed its product launches and even the Android updates in favour of a more developer-focused look at its new AI features, namely the Gemini AI assistant that will feature across all Google products and the Android platform.

If you want to watch the full keynote, it is available for public streaming on Google's YouTube channel. Below you'll see our coverage from the event, now in chronological order.

Hi all, it's Mat Gallagher here and I'll be bringing you all of the news in the build up to Google I/O and through the keynote as it happens. I'm expecting a lot of AI news from today's event, with it becoming further integrated into every facet of Google's software offerings. Hopefully with some genuinely useful features too.

The Google Pixel 8a got its reveal just yesterday, so perhaps we won't see the Pixel 9 just yet. However, there have been rumours of a new Pixel Fold that could be on the cards. There's always a chance of some wearables too, with the Watch and Pixel Buds all ready for a refresh.

We're now just two hours from the event and we're starting to see pictures coming in on social media from Mountain View. Former Verge journalist and now Google employee, Dieter Bohn, has posted the first picture from the theatre.

There's normally a big crowd at the live event, including Google employees, journalists and developers. Like you though, we'll be watching this one from home.

We have seen some leaks of the possible Pixel Fold 2 already that suggest it could be a radically different design. The foldable market has become a major battleground for Android devices, with the likes of Samsung and OnePlus taking the lead. However, a larger device from Google with some improvements on that hinge could make a new device here particularly appealing. Especially if it sports a new Tensor chip.

There's a series of puzzles on the Google I/O page to keep you entertained before the show starts. You don't need to be logged in to try them and they're fun to play. I've just completed the first one and I'm going to see how many I can get through before the show starts! [level 3 starts to get tougher]

There's apparently an orchestra playing Pokemon at the event right now. Though, I don't think it could beat Dan's dancing duck from last year. I know I speak for all of the T3 team though when I say, we'd love to see the duck return for 2024.

One thing I'm expecting to see more of today is Google Gemini. This is Google's next-gen AI assistant that is replacing Google Bard. From what we know so far, it works a bit like ChatGPT. If this is able to work within Google's suite of products, it could make some big leaps in productivity.

The show is starting – we have the warm-up act on now. Oh, it looks like another musical act, with music to images perhaps? It's no Dan's Dancing Duck though.

He's an improv musician making music on the main stage right now to get people in the mood for the show. Google's gonna wake you up, apparently...

To be fair, the text-to-music stuff is pretty clever. Just by typing the names of instruments, styles or beats, it's creating new music.

Well, that certainly brought the enthusiasm up a notch. We're now just minutes away from the main event. There's another game too, at labs.google/gendino which lets you customise a simple game with text.

And we're off! There's certainly a retro feel to all this today. We're straight into a video going over what's happened in the last year.

Sundar Pichai is on the stage for the introductions. Straight in with a Taylor Swift joke. Then on to AI, we're going to hear a lot more about AI today.

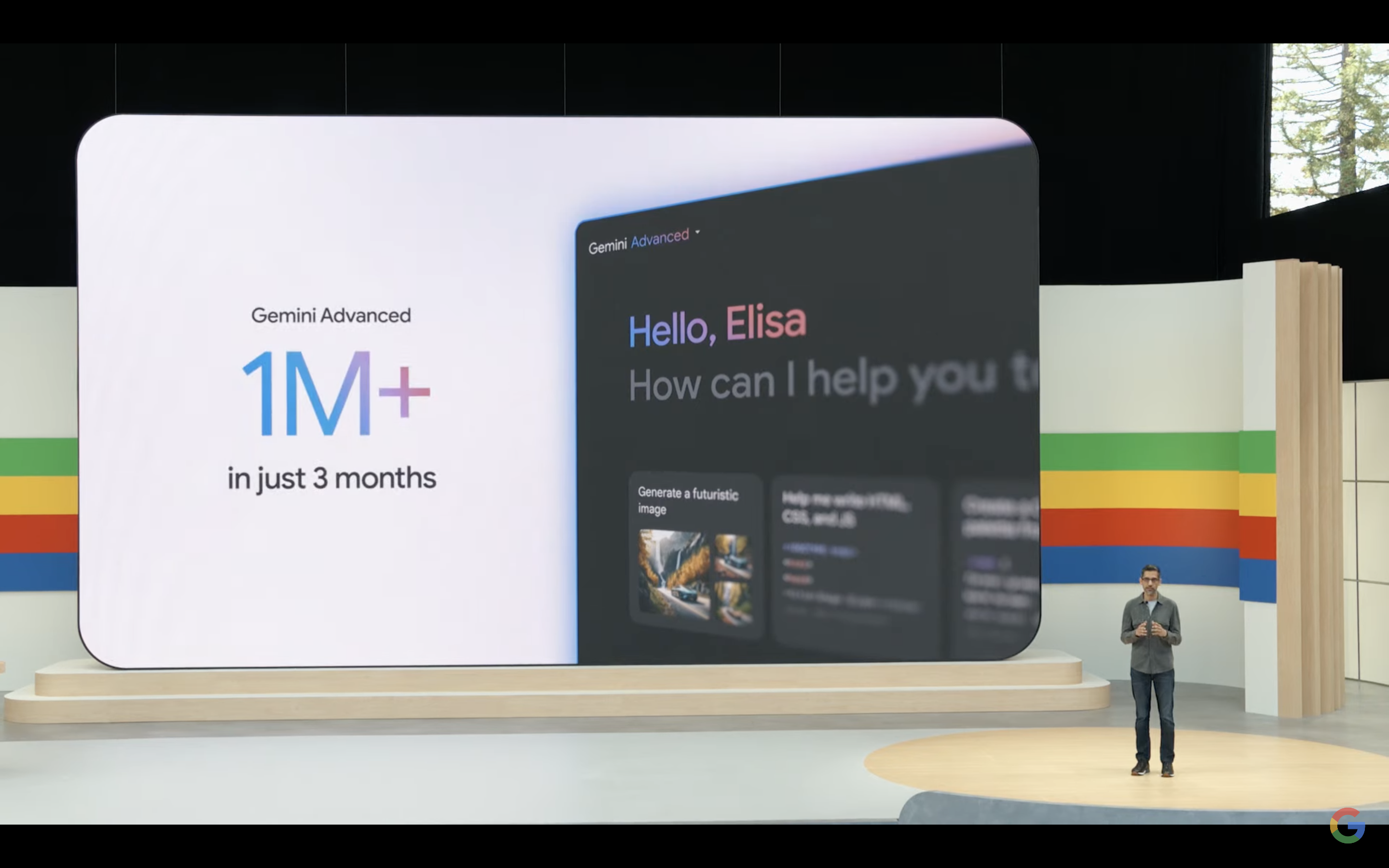

Gemini 1.5 Pro was a breakthrough for AI. Google wants everyone to benefit from what Gemini can do. We're going to see it in Search, Android and more. AI Overviews is coming to the US this week, and more countries soon.

Google Photos is up first. Gemini allows you to ask your photos questions – an example is what is my licence plate number. That's impressive. It can even find a series of photos based on a question, such as "show me how my daughter's swimming has progressed".

The new Gemini 1.5 Pro is available to developers, with an expanded number of tokens available for use.

Google Workspace is getting Gemini too. You can ask Gemini to summarise all emails on a subject, like all the emails from your child's school. It then gives you the highlights of all the emails, and also helps craft your reply.

NotebookLM is getting a voice overview based on the text material. You are then able to join the conversation. This is like your own personalised lesson, based on the notes, all using Gemini.

We're talking Agents now. These can take on tasks for you. The example is returning shoes, with Gemini finding the emails and filling out the return for you.

It's all about keeping you in control but using intelligent systems to work on your behalf. Google's aim is: Making AI helpful for everyone.

Now we're hearing from the co-founder of DeepMind. AlphaFold 3 predicts the structure and interactions of all of life's molecules. Gemini is at the centre of this tool too. There's a new Gemini 1.5 Flash, which is a lighter-weight version of Gemini 1.5.

The demo shows the AI using the camera and voice prompt questions to get answers. It's working based on what it sees through the camera. This would be amazing through smart glasses.

Updates to generative media tools now from Doug Eck. Imagen 3 is the most capable image generation tool ever. Understands prompts the way people write.

With Music AI Sandbox, Google has been working with musicians such as Wyclef Jean to add loops and features based on text requests.

Veo is a text-to-video tool, building on years of Google video work. It's trained to turn input text into video. Showing an example from filmmaker Donald Glover. This uses Gemini to better capture nuance in text to make sure it understands cinematic demands.

Google Search is 25 years old and is still improving. For Search in the Gemini era, AI Overviews will give you a complete answer with links to relevant content. This is coming to the US today, and other countries soon.

Multi-step reasoning will allow you to ask multiple questions in one and combine them into a single result. This breaks the question up into parts and determines which bits to search for first and then filter by the other parts of the question. Turning hours of research into just a few seconds.

Planning in search requires advanced reasoning. An example of asking for a three-day meal plan for a group. You can customise the results based on follow-up questions. In the future, you could then add the ingredients to your shopping cart.

This starts with dining and recipes, but is expanding to movies, hotels and more.

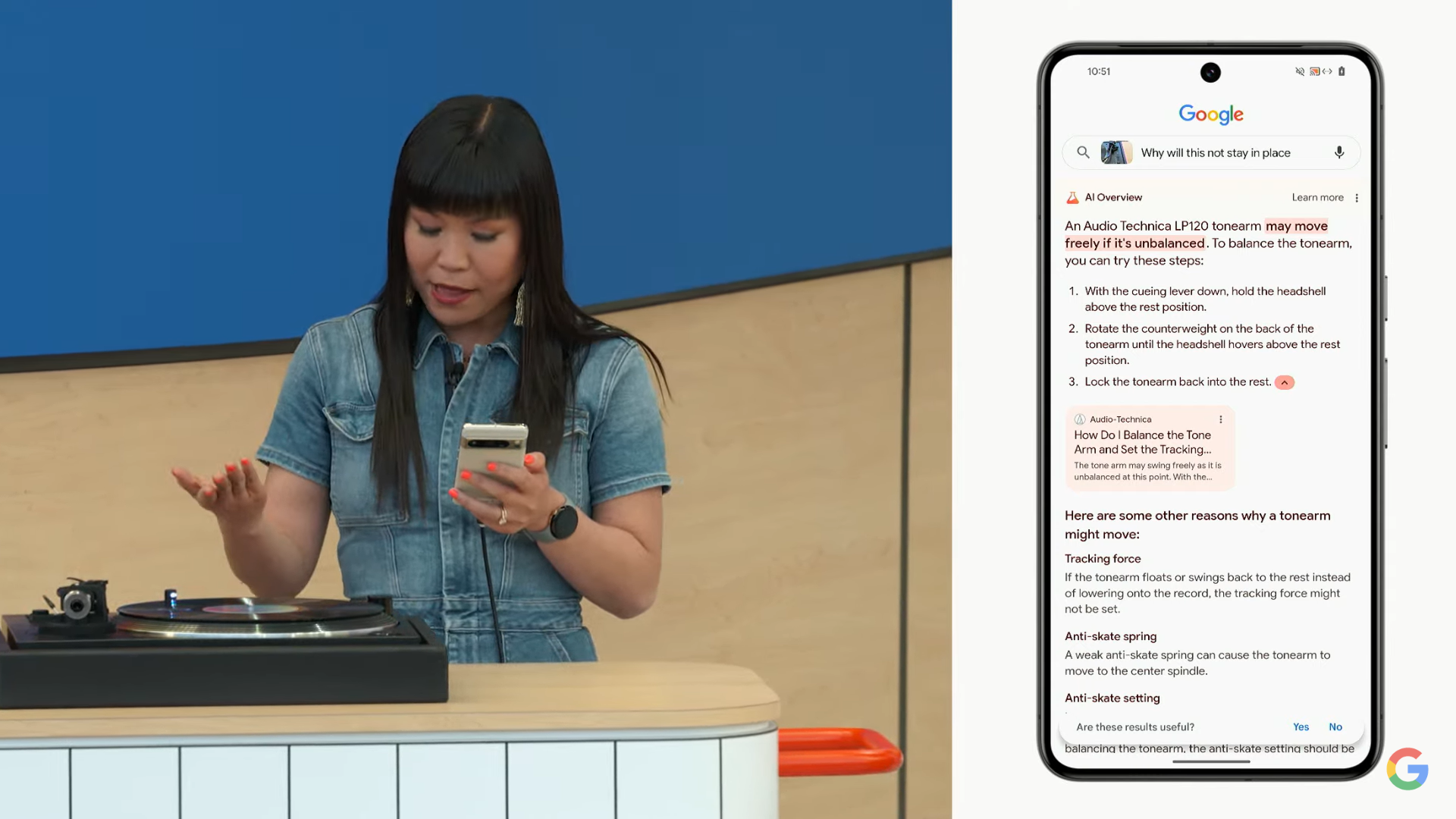

Asking questions with video in Google Search. Take a video with Ask Google, and it can use the video and audio to answer your question. It gives you an answer, with further details.

This is breaking down the video frame by frame to check what it's looking at and help solve the problem at hand.

These features are coming to Google Search in the coming weeks.

A Gemini-powered side panel is coming to Google Workspace next month. Google Meet now has 68 languages.

Gmail mobile is getting new features. You can now summarise email threads, with highlights of entire conversations. Plus a new Q&A prompt that can search your emails for the answers.

The Smart Reply is now contextual, giving you custom options based on previous conversations. It gives you a preview of the full reply. These features are rolling out to Labs users this month.

Gemini can also help organise and plan in Drive and all of Workspace.

AI Teammate can be customised to help you with tasks. It can search through chats and emails and help to give you answers to questions in Chat. I love that you can give it a custom name too!

On to the Gemini app. The most helpful personal assistant, to help you learn, create, code. You can use text, voice or your phone's camera to interact. Gemini Live allows you to have a conversation with the Gemini AI. Within Live you can also open your camera, to let it see what you see, and ask questions around that.

Gems are functions that give you specific ways to interact with the Gemini assistant.

A new trip planning experience in Gemini Advanced allows you to ask a long prompt with multiple variables. It then gathers data from Search, Gmail and other sources, to develop a personalised holiday plan. You can then adjust the itinerary by simply tapping to change a wake-up time, for instance.

Google has done a video of how to use AI. It's a nice touch to bring AI to people who are scared of using it, showing how simple it can be.

Making smartphones truly smart. Google has reimagined Android, with AI at its heart. Gemini is the new assistant on Android. There's also on-device AI to keep your data secure.

Circle to Search allows you to look stuff up from anywhere on the phone. It now becomes a study buddy, by helping students circle the parts of a piece they're stuck on and find an answer. Circle to Search will solve more complex problems later this year too.

Gemini is becoming an essential part of the Android experience. It will be context-aware, to provide more useful responses. The Gemini assistant hovers above your current app when you bring it up, allowing you to ask questions about what you're seeing in the app. It can also search through PDFs (for Gemini Advanced users).

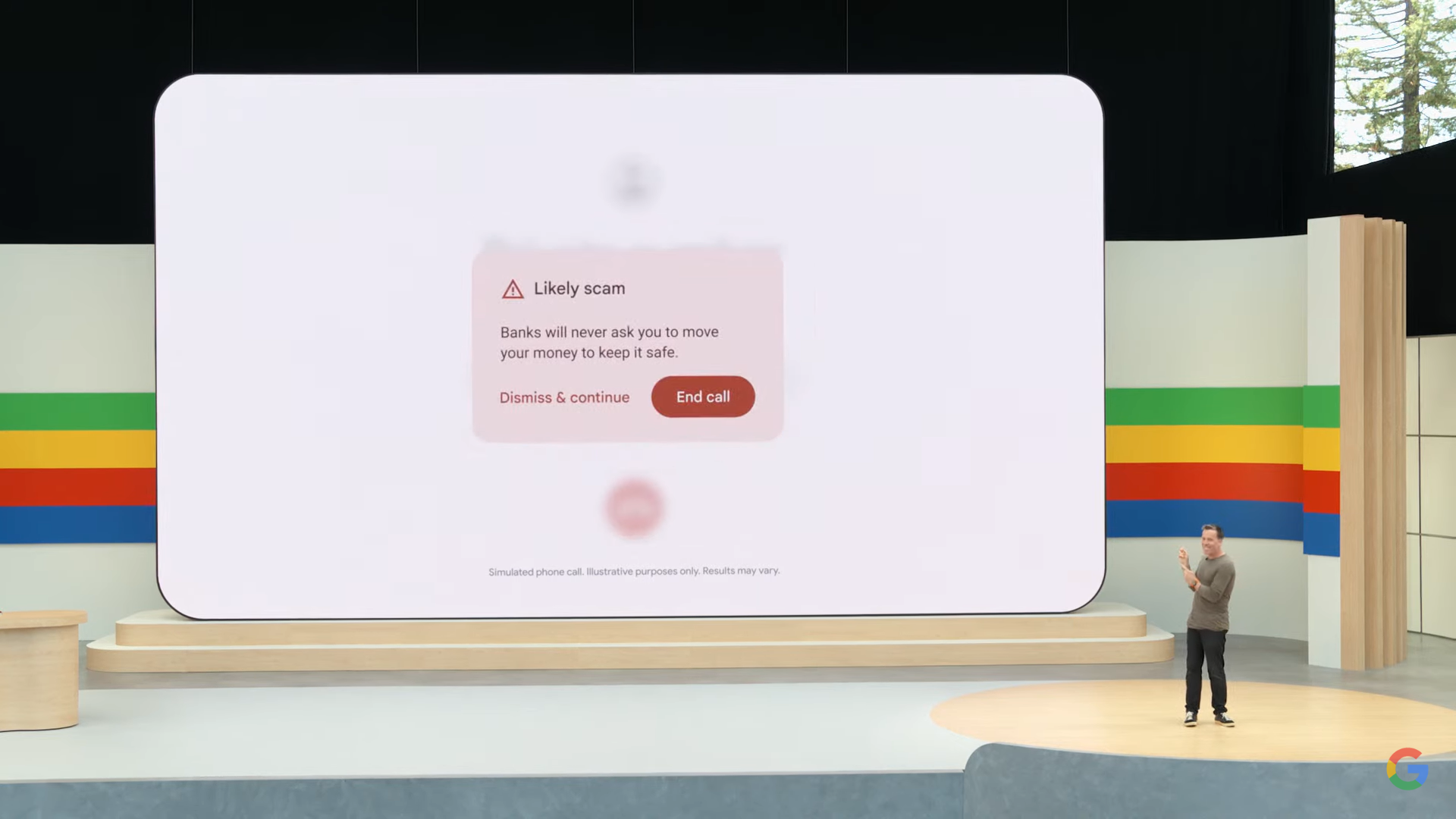

Gemini Nano with multimodality runs on the device to reduce latency. This can even help with phishing phone calls, alerting you if it suspects the content of the call is suspicious – don't panic though, this all happens on the phone.

Android 15 updates are coming tomorrow, so we won't hear all about them today.

We're going heavy on the developer features now, so time to put the kettle on.

Will we get any surprises in the next 20 minutes?

Okay, time to talk AI principles. Responsible use of AI. AI-assisted Red Teaming helps to test the models to find problems. Human insight and safety testing are designed to manage the misuse of the models. SynthID adds a watermark to AI images – it's also going to be added to text and video created by AI.

AI advances help to solve real-world problems. Helping scientists, the United Nations, and other organisations.

Sundar is back on the stage to wrap up. How many times has he mentioned AI today? I imagine it's a lot – 120 times according to the AI.

And we're done. Not the Google I/O we expected, but some impressive AI tools there. Products and Android updates will come another day!