The extreme flooding and mudslides across California in recent weeks took many drivers by surprise. Sinkholes swallowed cars, highways became fast-moving rivers, and entire neighborhoods were evacuated. At least 20 people died in the storms, several of them after becoming trapped in cars in rushing water.

As I checked the forecasts on my cellphone weather apps during the weeks of storms in early January 2023, I wondered whether people in the midst of the downpours were using similar technology as they decided whether to leave their homes and determined which routes were safest. Did they feel that it was sufficient?

I am a hydrologist who sometimes works in remote areas, so interpreting weather data and forecast uncertainty is always part of my planning. As someone who once nearly drowned while crossing a flooded river where I shouldn’t have, I am also acutely conscious of the extreme human vulnerability stemming from not knowing exactly where and when a flood will strike.

About two-thirds of flood-related fatalities in the U.S. are classified as “driving” and “in water.” If people had known the likelihood of flooding in those locations in real time – via a cellphone app or website – it’s possible some of these deaths could have been avoided.

Yet even emergency management personnel currently operate with surprisingly little information about when and where flooding is likely to strike. They know where flooding may occur, particularly along rivers. But each flood is different, and key questions, such as which roads can safely be used and which populations are exposed, still require firsthand observation.

I have been working with colleagues to develop a method that gets around the current roadblocks to that kind of forecasting. Using “probabilistic learning” – a type of machine learning – the method can create local flood hazard models that can pinpoint conditions street by street using real-time storm forecasts.

The flood forecast challenge

Computer programs that can predict what happens to rainwater after it hits the ground are the ultimate tools for predicting in real time where and when floods will strike.

However, such flood models require immense amounts of computing power. Currently, there is no way to quickly predict flooding in real time for any given location. The level of detail relevant to human decisions – representing buildings, evacuation routes or infrastructure assets – is out of reach.

A second challenge is the high uncertainty in precipitation forecasts and many other inputs of flood models.

Research over the past decades has explored solving these formidable challenges with “brute force” approaches: faster computers and more of them. Ultimately, that research suggests a need to rethink how we forecast floods.

Designing effective local flood forecasts

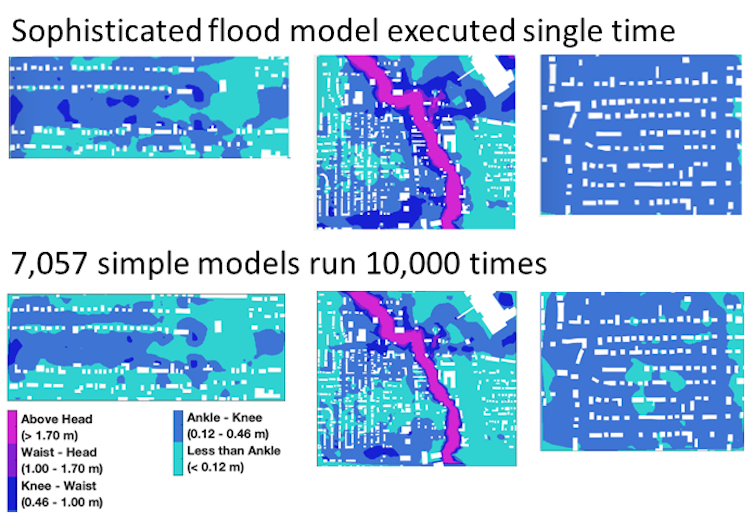

The approach we developed tackles these challenges by using a sophisticated flood model to develop and train simpler models that can then mimic flood behavior in local environments at nearly the same level of accuracy as their more powerful teacher. Importantly, we have shown in studies that even a personal computer can then use these simpler models to forecast flooding in real time. Perhaps even a cellphone.

To forecast flooding, one needs to predict how flooding starts and evolves in urban communities – with a high level of detail and an understanding of the bounds of uncertainty around the prediction.

Our approach builds on key information that many cities already have: detailed data on topography of their communities and the surrounding watershed, how land is used, the layout of buildings and roads, and the characteristics of stormwater drains and pipes, such as how much water they can carry. Pavement and outdated stormwater infrastructure in particular can affect how water flows and which areas flood in an urban environment.

We then use one of the most up-to-date, sophisticated flood models available to train simpler models.

These simpler models are faster and require far less computing power because they use extremely simple functions, and each focuses on a single variable at a given location and time, such as inundation level or water flow velocity. Thousands of them can provide a remarkably accurate picture of likely flooding when real-time weather forecast information is added.
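The idea of training many small models on the output of one slow model can be sketched in a few lines. This is only an illustration under loud assumptions: `detailed_flood_model` is a made-up stand-in for a physics-based simulation, the street names and sensitivities are invented, and plain linear interpolation is swapped in for the probabilistic learning method described in this article.

```python
from bisect import bisect_right


def detailed_flood_model(rain_mm_per_hr, sensitivity):
    """Hypothetical stand-in for a slow, detailed flood simulation.

    Returns water depth in meters at one location. The quadratic form
    is an assumption chosen only to make the example concrete.
    """
    return sensitivity * rain_mm_per_hr ** 2


def train_surrogate(sensitivity, training_rain):
    """Run the slow model ahead of time and keep (rain, depth) pairs."""
    xs = sorted(training_rain)
    ys = [detailed_flood_model(x, sensitivity) for x in xs]
    return xs, ys


def predict_depth(surrogate, rain_mm_per_hr):
    """Fast prediction: interpolate linearly between stored training runs."""
    xs, ys = surrogate
    # Clamp to the trained range instead of extrapolating beyond it.
    x = min(max(rain_mm_per_hr, xs[0]), xs[-1])
    # Index of the right-hand neighbor, kept inside the table.
    i = max(1, min(bisect_right(xs, x), len(xs) - 1))
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Invented locations, each with its own made-up sensitivity to rainfall.
street_sensitivity = {"Elm St": 0.0002, "Oak Ave": 0.00005}

# "Off time" step: one small model per location, trained on 11 slow runs.
training_rain = [10.0 * k for k in range(11)]  # 0 to 100 mm per hour
surrogates = {
    name: train_surrogate(s, training_rain)
    for name, s in street_sensitivity.items()
}

# Real-time step: a cheap lookup per location for the forecast rainfall.
forecast_rain = 37.0
depths = {name: predict_depth(m, forecast_rain) for name, m in surrogates.items()}
```

Each entry in `surrogates` covers a single variable (depth) at a single location, so the real-time step is just a handful of arithmetic operations per street, no matter how long the training runs took.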

The crucial point is that these simpler models are developed in the “off time” – well before the storms. These analyses can be performed using a freely available toolkit developed by the Department of Energy.

This approach replaces the nearly impossible burden of computation in real time with the easier task of using previously trained, simple models of the local community.
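Because a trained simple model is so cheap to evaluate, it can be run thousands of times to account for uncertainty in the rainfall forecast. The sketch below is a hedged illustration, not the method from our studies: `surrogate_depth` is a hypothetical pre-trained model for one street, and the forecast uncertainty is assumed to be Gaussian purely for convenience.

```python
import random


def surrogate_depth(rain_mm_per_hr):
    """Hypothetical pre-trained simple model for one street (depth in meters)."""
    return 0.0002 * rain_mm_per_hr ** 2


def exceedance_probability(forecast_mean, forecast_sd, threshold_m,
                           n_samples=10_000, seed=0):
    """Estimate the chance that water depth exceeds a threshold.

    Draws rainfall values around the forecast (Gaussian spread is an
    assumption), pushes each through the fast model, and counts how
    often the predicted depth is above the threshold.
    """
    rng = random.Random(seed)  # fixed seed keeps the estimate repeatable
    hits = 0
    for _ in range(n_samples):
        # Rainfall cannot be negative, so clip the sampled value at zero.
        rain = max(0.0, rng.gauss(forecast_mean, forecast_sd))
        if surrogate_depth(rain) > threshold_m:
            hits += 1
    return hits / n_samples


# Chance that this street is under more than 0.3 m of water for a
# forecast of 40 mm per hour, give or take 10 mm per hour.
p_flooded = exceedance_probability(40.0, 10.0, threshold_m=0.3)
```

A result such as `p_flooded` is the kind of number that could sit behind a street-level warning, expressing how likely flooding is instead of giving a single yes-or-no answer.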

We demonstrated the approach in a study using the 2017 flooding in Houston from Hurricane Harvey. The results showed that a computational problem that would otherwise take years to run on a typical computer can be executed in just a few seconds with a comparable level of accuracy.

Looking ahead

As global warming increases rainfall intensity and populations grow, flood risks will rise. Another increasingly recognized problem is that the standards of how communities manage stormwater are based on the climate of the past. Existing storm drains and pipes simply cannot handle the additional water, increasing the costs of flood damage.

While further work is needed to put this into wide use, we believe the method opens avenues for making flood forecasting more relevant, detailed and accurate. It also offers a means of assessing the adequacy of existing stormwater infrastructure and can be adapted to assess the impact of other hard-to-quantify natural hazards, such as landslides and fires.

Cities with high home values are likely to see more flood-forecasting services from the private sector. However, government agencies could use this kind of novel modeling to expand their forecasting mission to assist everyone. One could envision counties, with the right skills and resources, providing local flood forecasting. Who, if not the local communities, is most invested in effective flood response management and mitigation?

Valeriy Ivanov has received prior funding from the National Science Foundation and the “Catalyst Program” of the Michigan Institute for Computational Discovery and Engineering at the University of Michigan.

This article was originally published on The Conversation.