In the last ten years, some 20,000 academic papers have been published on the neuroscience of sex and gender. Perhaps you have read the media coverage of such papers, suggesting there is finally proof that stereotypical abilities, such as men being good at reading maps or women excelling at nurturing, can be pinpointed in the brain.

Given the sheer quantity of output in this area, how can you tell what is genuinely groundbreaking research and what is merely hype?

Misleading spin is often blamed on university PR teams, non-specialist science writers in mainstream newspapers, or social media. But the source of deceptive impressions may sometimes be the research papers themselves.

For example, researchers may hyper-focus on a limited set of findings. They may fail to report that many of the differences they were looking for didn’t make the statistical cut. Or they may be less than cautious in discussing the impact of their findings.

Researchers need to be as careful about the impressions they create when communicating their research as they are about choosing the best methodology and the most powerful statistics. And if they aren't, the interested but non-expert reader may need help to spot it.

Magic: spotting the spin

My colleagues and I recently published a set of guidelines that offer just such assistance, identifying five sources of potential misrepresentation to look out for. Their initials helpfully form the acronym “Magic”, which stands for magnitude, accuracy, generalisability, inflation and credibility.

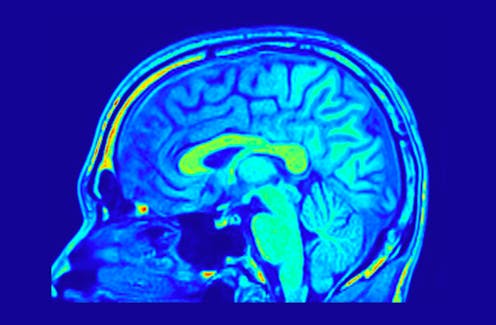

For magnitude, the question is: is the extent of any differences clearly and accurately described? Take a 2015 study on sex differences in the human brain. It reported on 34,716 different patterns of functional brain connectivity, and found statistically significant differences between females and males in 178 of them.

Yet given that only about 0.5% (178 of 34,716) of all the possible differences they were measuring actually turned out to be statistically significant, they wouldn't really be justified in reporting sex differences as prominent. In this study, they weren't.
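As a quick check on magnitude, the study's own figures give the share of connectivity patterns that showed a significant sex difference:

```latex
\frac{178}{34\,716} \approx 0.0051 \approx 0.5\%
```

Put the other way round, roughly 99.5% of the patterns measured showed no statistically significant difference between females and males.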

The next question is to do with accuracy. Are techniques and variables clearly defined and carefully used in the interpretation of results? It should be really clear how the study was run, what measures were taken, and why.

For example, a recent paper suggesting that the Covid lockdowns had a more pronounced effect on adolescent girls’ brain structure than on boys’ fell at this hurdle. The abstract referred to “longitudinal measures”, and much of the narrative was couched in longitudinal “pre- and post-Covid” terms. Longitudinal studies, which follow the same group of people over time, are valuable because they can reveal crucial changes within those individuals.

But if you peer closely at the paper, it emerges that the pre- and post-Covid lockdown comparisons appear to be between two different samples – admittedly selected from an ongoing longitudinal study. Nonetheless, it is not clear that like was compared with like.

The third question has to do with generalisability. Are authors cautious about how widely the results might be applied? Here we encounter the problem that many scientific studies are carried out on carefully selected and screened groups of participants, sometimes just the researchers’ own students.

Care should be taken to make this clear to the reader, who shouldn’t be left with the impression that one or more sets of participants can be taken to be fully representative of (say) all females or all males. If all study participants are drawn from a single community, then referring to “hundreds of millions of people” when interpreting the relevance of the results is something of an overstatement.

The fourth category, inflation, is to do with whether the authors avoid language that overstates the importance of their results. Terms such as “profound” and “fundamental” may be misplaced, for instance. Remember, James Watson and Francis Crick merely described their discovery of DNA’s double helix structure as of “considerable biological interest”.

Finally, we should consider credibility: are authors careful to acknowledge how their findings do or do not fit with existing research? Authors should be up front about alternative explanations for their findings, and suggest other factors that might need to be investigated in further studies.

Suppose, for example, they are looking at the allegedly robust sex differences in visuospatial skills, which include things like visual perception and spatial awareness. Have the authors acknowledged research showing that the amount of time people spend practising these skills, such as when playing video games, matters more than biological sex in determining such differences?

If gamers are more likely to be boys, that doesn’t necessarily mean boys’ brains are wired for gaming. It could equally reflect gendered pressures that make such games a popular, culturally comfortable pastime among boys.

The focus of these guidelines is on sex/gender brain imaging studies, but they could well be applied to other areas of research.

Post-lockdown surveys have suggested that the public has greater trust in what scientists are saying than it did before the pandemic. Scientists need to be careful to retain that trust by ensuring that what they report is unambiguous and free from hype.

Hopefully the Magic guidelines will help them and their editors achieve this; if they don’t, then eagle-eyed readers, Magic-ally armed, will be on their guard.

Gina Rippon does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article was originally published on The Conversation.