Text-to-video is the next big thing in AI. We saw a couple of weeks ago how awesome (and a bit creepy) the AI-generated Pepperoni Hugspot commercial was. Pizza Later, the person who developed that video, told us that they used a tool called Runway Gen-2 to create the moving images in that project. With its text-to-video engine, they were able to give simple prompts such as "a happy man/woman/family eating a slice of pizza in a restaurant, tv commercial," and get photo-realistic content.

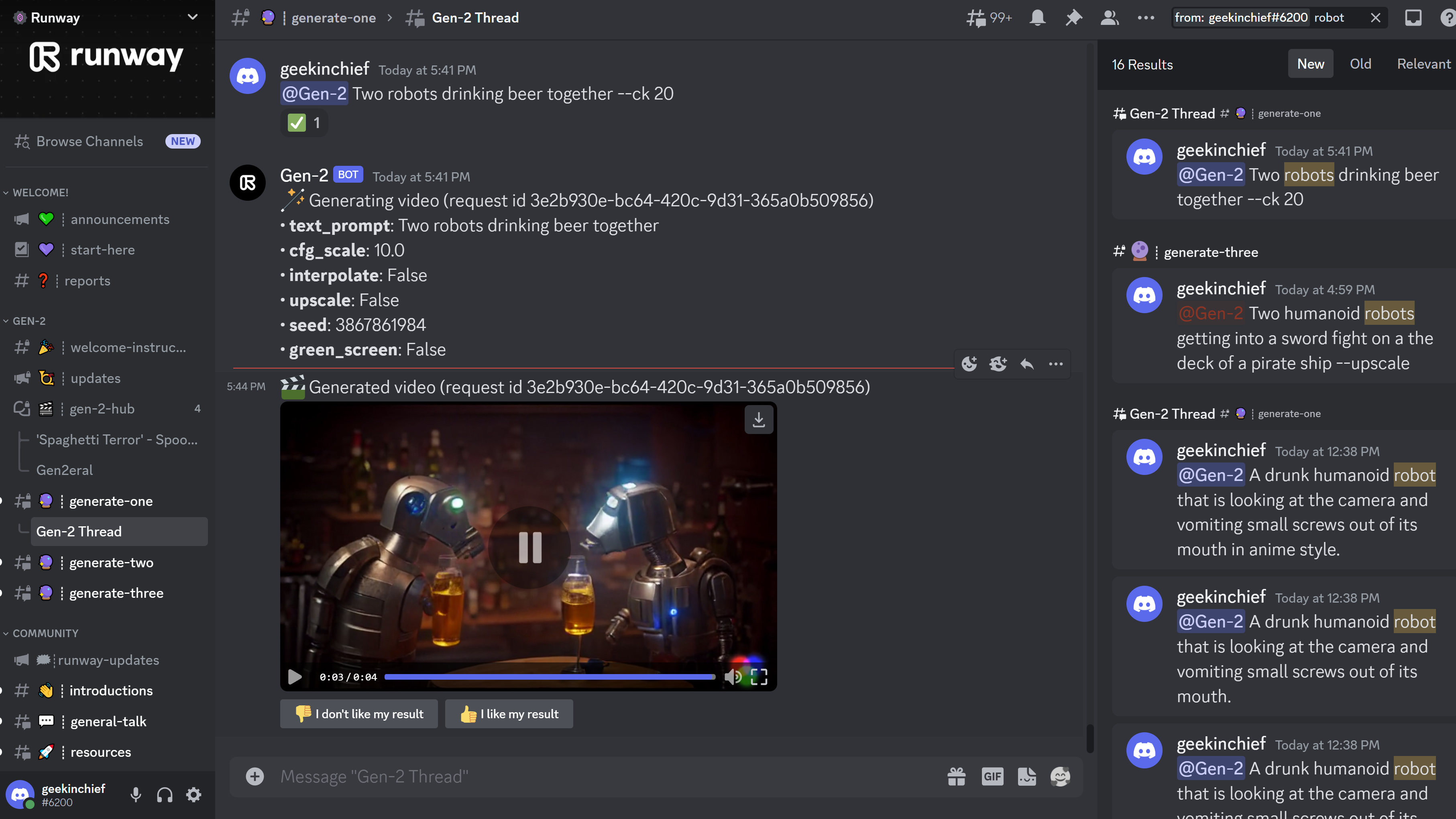

I've just gotten access to the Runway Gen-2 beta and I'm really impressed by how realistic its output is. The videos are short, at just four seconds each, but the image quality is high, and it all works by sending short requests to a bot on Runway ML's Discord server.

By sending a few words of text to the @Gen-2 bot, I was able to get short, photo-realistic (or cartoon style) clips of everything from a family enjoying a sushi dinner to a robot with a serious drinking problem. The output was frequently not exactly what I asked for, but it was always interesting and was superior to the NeuralInternet Text-to-Video Playground I wrote about last week.

While anyone can join the server, you'll only see the list of Gen-2 chatrooms once you gain access to the beta program (which many are on the waitlist for). There are some rooms where you can chat and share projects with other users and then there are three rooms named Generate One, Generate Two and Generate Three where you can go to send prompts directly to the @Gen-2 bot. The moderators encourage you to keep issuing prompts to the same thread so as not to make a mess of each chatroom.

Prompting Runway Gen-2

A Runway Gen-2 prompt might look something like "@Gen-2 A drunk humanoid robot that is looking at the camera and vomiting small screws out of its mouth." The bot will immediately respond with your prompt and some parameters it's using (ex: "upscaling"), which you can change by issuing a new prompt (more on that later). Then, a couple of minutes later, you'll get a 4-second video based on your prompt.

Here's what my drunk robot looked like. All of the videos are playable from within Discord and you can download them as MP4 files. I have separately converted all the video samples shown in this article into animated GIFs so we can view them more easily (and without pre-roll ads).

You'll notice that the above clip wasn't exactly what I asked for. The robot is not vomiting up screws as I had intended. Instead, it is just looking menacingly at a cup of beer. My other attempts at this prompt were not exactly what I wanted either. When I left the word "drunk" out, I got a robot opening its mouth but not spitting anything out.

Using Pictures With Runway Gen-2 Prompts

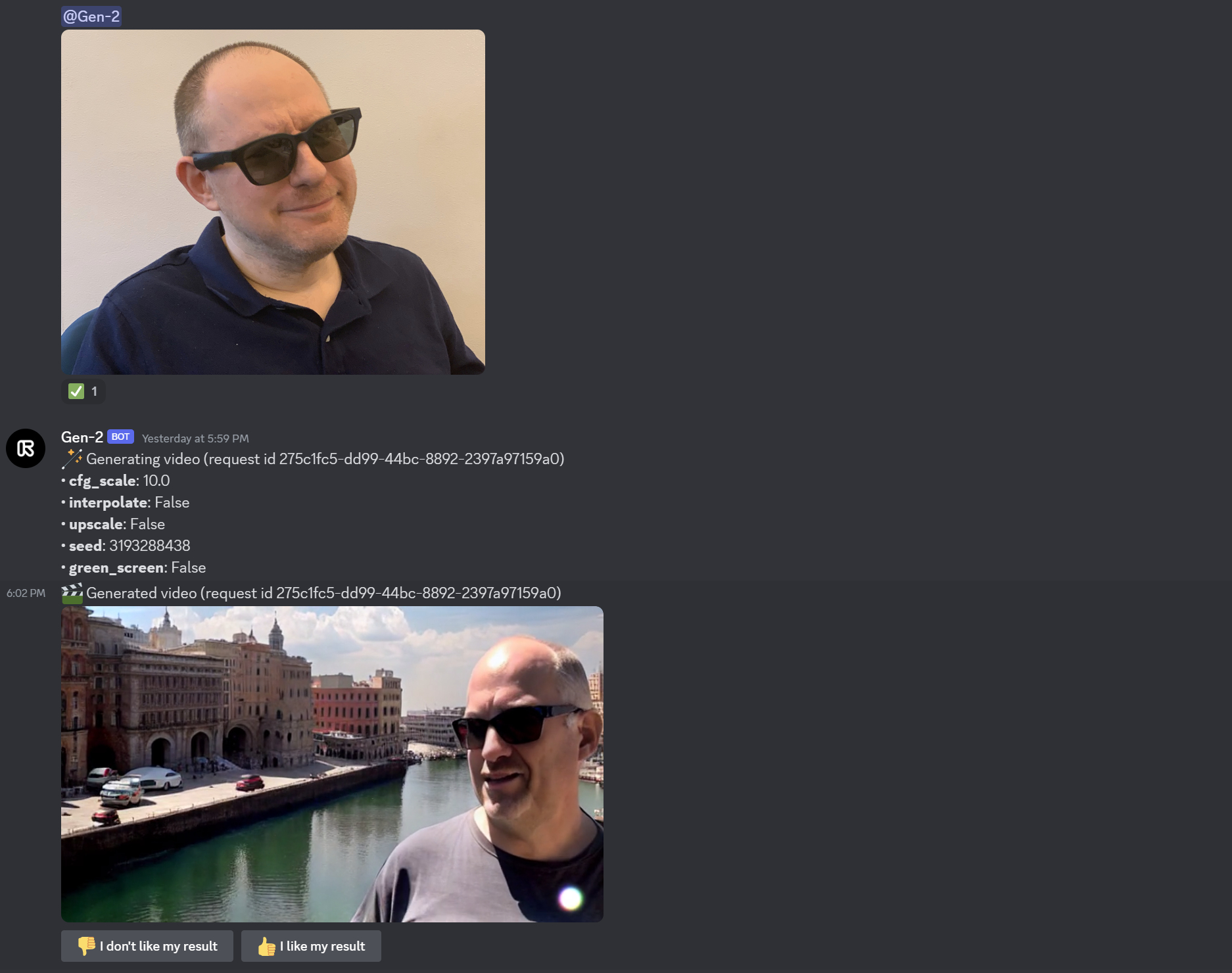

You can also feed images to the bot by either copying and pasting them into Discord along with the text prompt or putting the URL of the image into the prompt. Runway Gen-2 will not actually use the image you uploaded, however. It will only draw inspiration from the image in creating its own video. I uploaded images of myself many times and it gave me videos of people that looked a little like me, but were definitely not me.

For example, when I uploaded a picture of myself and gave it no further information, it showed a balding, middle-aged man with sunglasses who was not me, standing next to a river and some buildings. His mouth moved and the water moved.

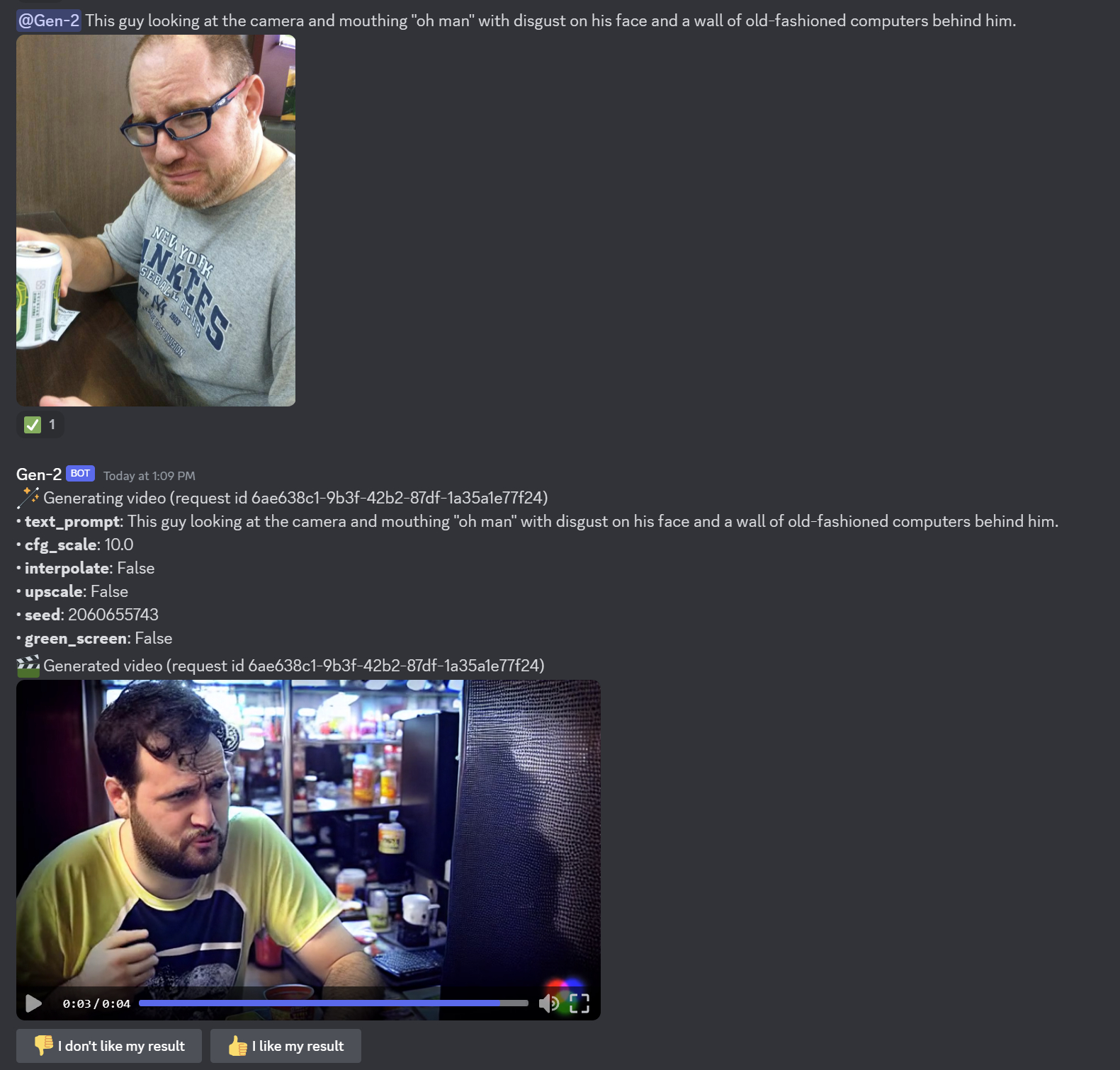

The Runway Gen-2 bot is better at copying the emotion or topic of an image you provide. I showed it an image of myself having a look of disgust on my face and asked for "this guy looking at the camera and mouthing 'oh man'."

A lot of the users on the Discord server say that they achieved great results by generating a still image with another AI tool such as Midjourney or Stable Diffusion, then feeding that image to CLIP Interrogator 2.1 on Hugging Face, a tool which looks at an image and then gives you prompts that it thinks refer to that image.

I tried that process, asking Stable Diffusion to make me an image of a boy on a sidewalk playing with toy robots in the 1980s. I then took the image into CLIP Interrogator and got some sample prompts for it, which were pretty obvious, such as "boy standing next to robot." Still, feeding the same image into a prompt didn't quite give me what I wanted. I got a boy with two robots standing in front of a street, but it wasn't the same street or boy.

To Move or Not to Move

The time limitation itself usually means that there's not a lot of time for movement in each clip. But, on top of that, I found that many clips had very little movement in them. Often, it was just someone's head bobbing or some liquid flowing or smoke rising from a fire.

A good way to get more movement is to write a prompt that requests a time-lapse or panning of some kind. When I asked for a time-lapse of an Icelandic volcano or a pan shot of a New York subway, I got some pretty nice results. When I prompted for a panning view of the Taipei skyline, however, I got clouds moving but no panning, and the city was definitely not Taipei.

Asking for running, chasing or riding may or may not get the job done. When I prompted for a "tortoise skateboarding," I got some kind of weird tortoise-like animal rolling down the street at rapid speed. But when I asked for Intel and AMD boxers fighting each other, I got a picture of two boxers that didn't move at all (and neither had Intel or AMD logos).

What Runway Gen-2 is Good and Bad At

Like other AI image generators, Runway Gen-2 doesn't do a great job of reproducing very specific, branded characters, products or places. When I asked it for Mario and Luigi boxing, I got two characters that look like knock-offs of Nintendo's characters. I asked many times for videos of Godzilla and got some giant lizards that not even the most casual fan would confuse with the King of Monsters.

It was a little better with Minecraft references. When I asked for a creeper and an enderman eating pizza and again for a creeper eating at McDonald's, I got decent-looking creepers but an inaccurate enderman. Asking for a family of creepers eating pizza gave me a family of humanoids who look like they came from Minecraft. Anyone who has played Minecraft knows that creepers are green monsters with black spots.

The tool is terrible with logos. I gave it the Tom's Hardware logo and asked it to use the logo in a commercial, and it gave me back this bizarre thing.

When I asked it for an AMD Ryzen CPU on fire, I got something that looked vaguely like a CPU with a logo that you just have to see for yourself (below).

What Runway Gen-2 does really well is give you generic images of people and families doing things like eating. You may or may not get them to eat exactly what you want. When I asked for a family eating live worms, I got a family that looked more like it was eating salad. A family eating sushi in a 1970s pizza restaurant looked particularly realistic.

I feel compelled to point out that I almost always got white people when I asked for a person without specifying their ethnicity. The only time I got a non-white family (or person) without specifically asking for one is when I asked for the family eating sushi. This is a well-known problem with training data on many generative AI models.

Special Parameters

There are a handful of parameters you can add to the end of your prompt in Runway Gen-2 in order to change the output a bit. I didn't fool around with these a lot.

- --upscale delivers higher resolution

- --interpolate makes the video smoother

- --cfg [number] controls how creative the AI becomes. Higher values keep the output closer to what you asked for, while lower values give the AI more freedom.

- --green_screen outputs the video with a green screen area you can use in editing

- --seed is a number that helps determine the outcome. By default, it's a random number every time, but if you use the same number again, you should get a similar result.
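
To make the parameter list above concrete, here is a minimal Python sketch that assembles a prompt string with those flags appended to the end. The flag names come from the list; the function name `build_gen2_prompt`, its arguments, and the flag ordering are hypothetical, made up for illustration, and the sketch does not validate the range of `--cfg` values because I don't know what the bot accepts. It only builds the text you would paste into Discord; it does not talk to the bot.

```python
from typing import Optional


def build_gen2_prompt(
    text: str,
    upscale: bool = False,
    interpolate: bool = False,
    green_screen: bool = False,
    cfg: Optional[int] = None,
    seed: Optional[int] = None,
) -> str:
    """Assemble a message for the @Gen-2 bot: mention, prompt text, then flags.

    Hypothetical helper: the flags are the ones listed in the article,
    but the order they are emitted in is an arbitrary choice.
    """
    parts = ["@Gen-2", text.strip()]
    if upscale:
        parts.append("--upscale")
    if interpolate:
        parts.append("--interpolate")
    if green_screen:
        parts.append("--green_screen")
    if cfg is not None:
        # Valid range is not documented here, so the value is passed through as-is.
        parts.append(f"--cfg {cfg}")
    if seed is not None:
        # Reusing the same seed should give a similar result on a later run.
        parts.append(f"--seed {seed}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_gen2_prompt("A time-lapse of an Icelandic volcano", upscale=True, seed=1234))
```

Keeping the seed in a variable like this makes it easy to resend the same prompt with one flag changed and compare the two clips.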

Stitching It All Together

If you search the Internet for examples of Runway Gen-2 videos, you may notice a lot of videos that are longer than 4 seconds and have sound. People make these videos by stitching together many different 4-second clips in a video editor and adding sound and music that they've gotten elsewhere.
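
If you'd rather script the stitching step than use a video editor, one possible approach (not necessarily what any of these creators did) is ffmpeg's concat demuxer. The Python sketch below writes the list file that ffmpeg reads and builds the matching command. The helper names and file names are hypothetical, and it assumes ffmpeg is installed and that every clip shares the same codec and resolution, which is what lets `-c copy` join them without re-encoding. Adding sound or music is a separate step not shown here.

```python
import shlex
import tempfile
from pathlib import Path
from typing import List, Sequence


def write_concat_list(clips: Sequence[str], list_path: str) -> str:
    """Write the text file that ffmpeg's concat demuxer reads, one clip per line."""
    lines = []
    for clip in clips:
        # A single quote inside a path is written as '\'' in the concat file format.
        escaped = clip.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    Path(list_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return list_path


def concat_command(list_path: str, output: str) -> List[str]:
    """Build the ffmpeg call that joins the listed clips without re-encoding."""
    return [
        "ffmpeg",
        "-f", "concat",  # read the input as a concat list file
        "-safe", "0",    # accept arbitrary paths in the list file
        "-i", list_path,
        "-c", "copy",    # stream copy; assumes matching codec and resolution
        output,
    ]


if __name__ == "__main__":
    # Hypothetical clip names; replace with the MP4 files downloaded from Discord.
    clips = ["robot.mp4", "sushi.mp4", "volcano.mp4"]
    list_file = str(Path(tempfile.mkdtemp()) / "clips.txt")
    write_concat_list(clips, list_file)
    print(shlex.join(concat_command(list_file, "stitched.mp4")))
```

To actually run the join, pass the returned list to `subprocess.run(cmd, check=True)`. Note that relative clip paths in the list file are resolved relative to the list file's own location, so absolute paths are the safer choice.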

One of the most famous of these Runway Gen-2 videos is the Pepperoni Hugspot pizza commercial which I mentioned above. But, in the Runway ML Discord, I see a lot of people posting YouTube links to their creations. One of my favorites is "Spaghetti Terror" which was posted to Twitter by Andy McNamara. And Pizza Later's new lawyer commercial is a hoot.

Bottom Line

Runway Gen-2 is in private beta as I write this, but the company has said it intends to make it available to everyone soon, as it already has with its Gen-1 product. As a technology demo, it's truly impressive and I can see someone using its short clips in lieu of stock video or stock animated GIFs.

Even if the time were extended to 60 seconds, it seems unlikely that this tool could replace professionally (or even amateurly) shot video anytime soon. Its inability to accurately reproduce very specific places and people is a huge downer, but it's also a limitation I've seen in every image-generating AI thus far. However, the technology is right there and, as the training data scales up, this could be even more impressive.