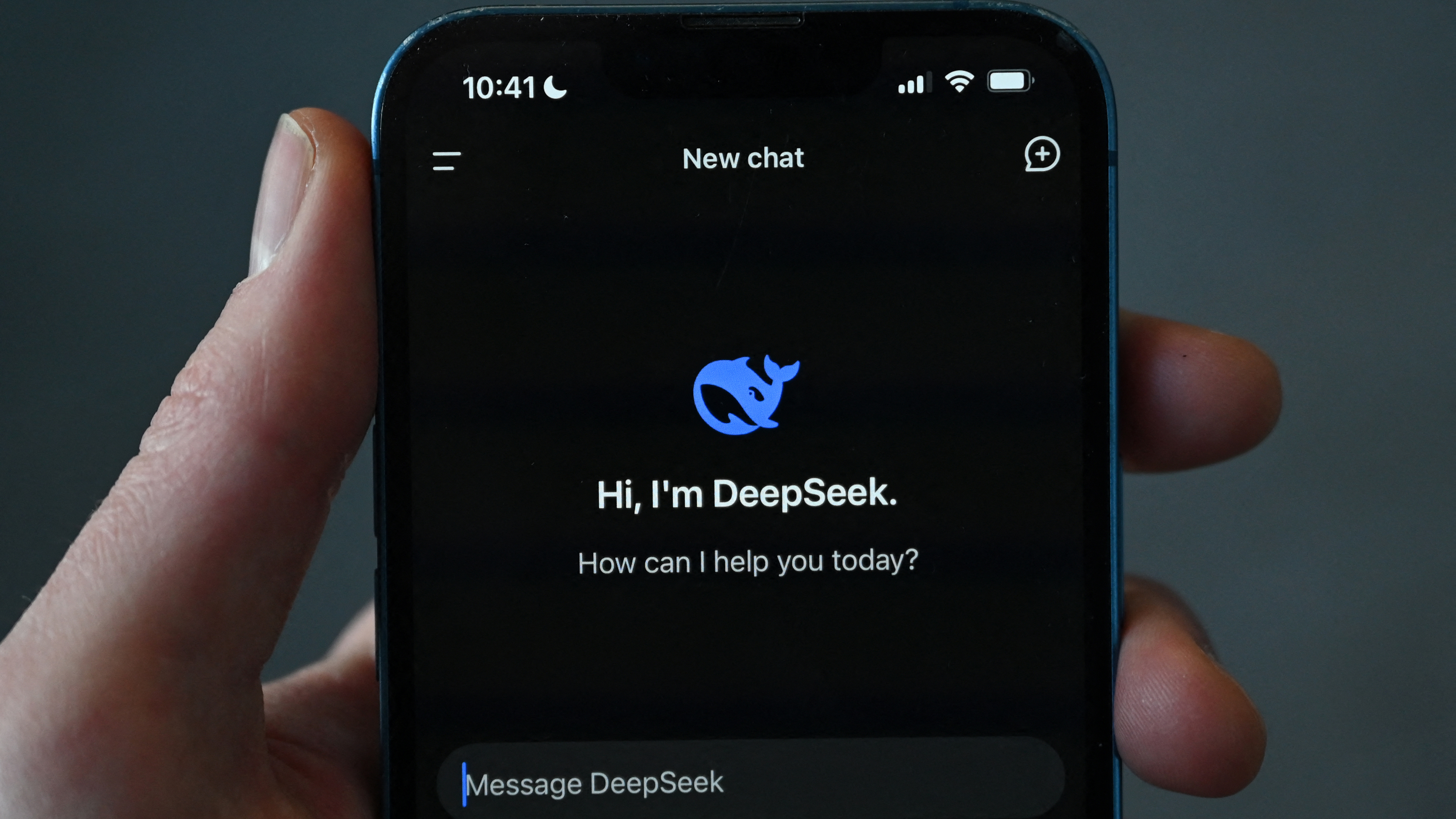

DeepSeek, the China-based AI company whose chatbot disrupted US tech stocks and App Store rankings, has released an AI image generator just days after that chatbot went viral. Janus-Pro is a multimodal model that DeepSeek says both understands and generates images, and that performs better than the company’s earlier models.

Shared on Hugging Face this week, Janus-Pro can generate images from text prompts or low-resolution image uploads. DeepSeek shared the download alongside a chart demonstrating more realistic results than the company’s earlier attempts. While Janus-Pro isn’t yet available for consumers, it is now available for developers.

DeepSeek’s AI chatbot went viral over the weekend for overtaking US-based rivals like ChatGPT in App Store rankings, in part because the AI is both more cost-effective and doesn’t need the latest processors to run. However, the launch came as the company shut down new signups due to a cyberattack, while some experts found that the AI had weaker security safeguards, allowing it to help code malware and steal credit card data.

According to DeepSeek, Janus-Pro “decouples” the image generation process, which means it separates some of the steps involved in analyzing the input and creating the image as an output. The company says that the “simplicity, high flexibility, and effectiveness of Janus-Pro make it a strong candidate for next-generation unified multimodal models.”

On the Hugging Face page for Janus-Pro, DeepSeek shares several generated images that show improvement over the company’s earlier models, including one illustration that shows the AI generating an image with text, a notoriously hard task for AI.

But will the risks that researchers found with DeepSeek’s chatbot carry over into the company’s image generator as well? (I can’t help but hear the words “Janus-Pro” in the voice of Janice from the sitcom Friends, complete with her nasal, obnoxious laugh.)

“We should be alarmed,” Ross Burley, the co-founder of the Centre for Information Resilience, said in a statement on the large language model. “Chinese AI technology integrating further into the UK and Western society is not just a bad idea, it’s a reckless one. We’ve seen time and again how Beijing weaponises its tech dominance for surveillance, control, and coercion, both domestically and abroad. Whether it’s through spyware-laden devices, state-sponsored cyber campaigns, or the misuse of AI to suppress dissent, China’s track record demonstrates that its technology is an extension of its geopolitical strategy. This might appear to be a benign Large Language Model, but we’ve already seen that the AI is suppressing information critical of the Chinese government.”

TechRadar questions whether the app can survive in the US, warning readers not to get too attached. The launch also comes at a time of heightened national security concerns among US politicians over China-owned tech like TikTok and DJI.
