AI burns through a lot of resources. And thanks to a paradox first identified way back in the 1860s, even a more energy-efficient AI is likely to simply mean more energy is used in the long run.

For most users, “large language models” such as OpenAI’s ChatGPT work like intuitive search engines. But unlike regular web searches, which find and retrieve existing data from anywhere along a global network of servers, AI models return data they’ve generated from scratch. Like powering up a nuclear reactor to use a calculator, this tailored process is very inefficient.

One study suggests the AI industry will be consuming somewhere between 85 and 134 terawatt-hours (TWh) of electricity each year by 2027. That’s about as much electricity as the Netherlands consumes annually. One prominent researcher predicts that by 2030, over 20% of all electricity produced in the US will be feeding AI data centres (huge warehouses filled with computers).

Big tech firms have always claimed to be heavy investors in wind and solar energy. But AI’s appetite for 24/7 power means most are developing their own nuclear options. Microsoft even plans to revive the infamous Three Mile Island power plant, scene of America’s worst ever civil nuclear accident.

Despite Google’s ambitious target of being carbon neutral by 2030, the company’s AI developments mean its emissions have climbed 48% in the past few years. And the computing power needed to train these models increases tenfold each year.

However, Chinese start-up DeepSeek claims to have created a fix: a model that matches the performance of established US rivals like OpenAI, but at a fraction of the cost and carbon footprint.

An environmental game changer?

DeepSeek has created a powerful open-source, relatively energy-lite model. The company claims it spent just US$6 million renting the hardware needed to train its new R1 model, compared with over US$60 million for Meta’s Llama, which used 11 times the computing resources.

DeepSeek uses a “mixture-of-experts” architecture, a machine-learning method in which only a subset of the model’s specialised sub-networks (the “experts”) is activated for each input, so the computation used scales with the prompt rather than with the full size of the model. The company claims its model can also store more data and be trained without the need for huge amounts of expensive processor chips.
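To make the mixture-of-experts idea concrete, here is a minimal sketch of "top-k" routing, one common way such architectures are built. It is an illustration only and not DeepSeek's implementation: the toy experts, the fixed `gate_scores` and the function names are all assumptions, and in a real model the gate scores would come from a learned router rather than a hard-coded list.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the chosen experts and blend their outputs."""
    chosen = route_top_k(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]


# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical gate scores for a single input (normally produced by a router).
gate_scores = [0.1, 2.0, 0.3, 1.5]

output, used = moe_forward(10.0, experts, gate_scores, k=2)
active_fraction = len(used) / len(experts)
print(f"experts used: {used}, share of experts active: {active_fraction:.0%}")
```

In this toy case only two of the four experts do any work for the input, which is the source of the claimed savings: the model can be large overall while each prompt pays for only part of it.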

In reaction, US chip manufacturing and energy stocks plummeted following investor concerns that AI companies would rethink their energy-intensive data centre developments. Nvidia, the world’s largest supplier of specialist AI processors, saw its market value fall by US$589 billion, the biggest one-day loss in Wall Street history.

Paradoxically, as well as upsetting the performance of US tech stocks, improving the energy efficiency of AI platforms could actually worsen the industry’s environmental performance as a whole.

With tech stocks crashing, Microsoft CEO Satya Nadella tried to bring a longer-term perspective: “Jevons paradox strikes again!” he posted on X. “As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can’t get enough of.”

The Jevons paradox

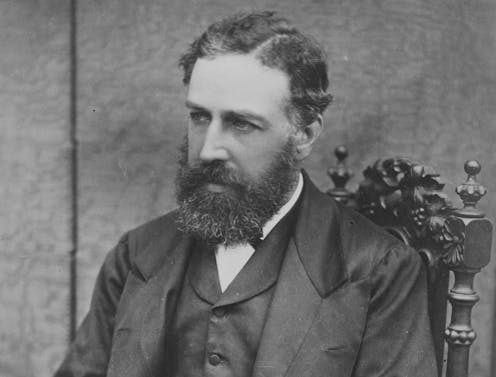

The idea that energy efficiency isn’t always a good thing for Earth’s resources has been around for well over a century. In 1865, a young Englishman named William Stanley Jevons wrote “The Coal Question”, a book in which he suggested that Britain’s place as an industrial superpower might soon come to an end, due to its rapidly depleting coal reserves.

But to Jevons, frugality was not the solution. He argued: “It is wholly a confusion of ideas to suppose that the economical use of fuel is equivalent to a diminished consumption. The very contrary is the truth.”

According to Jevons, any increase in resource efficiency generates an increase in long-term resource consumption, rather than a decrease. Because greater energy efficiency has the effect of reducing energy’s implicit price, it increases the rate of return – and demand.

Jevons offered the example of the British iron industry. If technological advancements helped a blast furnace produce iron with less coal, profits would rise and new investment in iron production would be attracted. At the same time, falling prices would stimulate additional demand. He concluded: “The greater number of furnaces will more than make up for the diminished [coal] consumption of each.”
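Jevons’s reasoning can be sketched with a toy demand model. The version below is a simplification and not Jevons’s own formulation: it assumes demand for the useful output (iron, light, AI queries) follows a constant price elasticity, and that an efficiency gain lowers the effective price of that output in proportion. The function name, the baseline of 100 units and the elasticity values are all illustrative assumptions.

```python
def energy_use(efficiency_gain, demand_elasticity, baseline_energy=100.0):
    """Energy consumed after an efficiency gain, under constant-elasticity demand.

    efficiency_gain: 2.0 means each unit of energy now yields twice the output.
    demand_elasticity: how strongly demand responds to the lower effective price.
    """
    # Output becomes cheaper by the efficiency factor, so demand for it grows.
    service_demand = efficiency_gain ** demand_elasticity
    # Each unit of output now needs less energy than before.
    return baseline_energy * service_demand / efficiency_gain


# Doubling efficiency under weak, neutral and strong demand responses.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(energy_use(2.0, elasticity), 1))
# 0.5 70.7
# 1.0 100.0
# 1.5 141.4
```

Under these assumptions, total energy use falls only when demand responds weakly (elasticity below 1). Once the response is strong enough, doubling efficiency raises total consumption, which is the outcome Jevons described for coal.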

More recently, the economist William Nordhaus applied this idea to the efficiency of lighting since the dawn of human civilisation. In a paper published in 1998, he concluded that in ancient Babylon, the average labourer might need to work more than 40 hours to purchase enough fuel to produce the equivalent amount of light emitted by a modern lightbulb for one hour. But by 1992, an average American would need to work for less than half a second to produce the same.
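The scale of that change is easier to grasp as a ratio. The short calculation below takes the two figures quoted above at face value, treating “more than 40 hours” and “less than half a second” as bounds, so the result is a lower bound on the improvement. The variable names are illustrative.

```python
# Labour needed for the same amount of light, using the figures quoted above.
babylon_seconds = 40 * 60 * 60   # "more than 40 hours", in seconds
modern_seconds = 0.5             # "less than half a second"

# How many times less labour the same light cost in 1992.
improvement = babylon_seconds / modern_seconds
print(f"At least {improvement:,.0f} times less labour")
# At least 288,000 times less labour
```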

Over time, these efficiency gains haven’t reduced the energy we expend on lighting or shrunk our energy consumption. On the contrary, we now generate so much electric light that areas without it have become tourist attractions.

Warming and lighting our homes efficiently, driving our cars, mining Bitcoin and, indeed, building AI models are all subject to the same so-called rebound effects identified in the Jevons paradox. And this is why it will be impossible to ensure a more efficient AI industry actually leads to an overall reduction in energy use.

A Sputnik moment

In 1957, the US was horrified when the Soviets launched Sputnik, the first artificial satellite. The emergence of a more efficient rival caused America to allocate more resources to the space race, not less.

DeepSeek is Silicon Valley’s Sputnik moment. More efficient AI will probably mean more distributed and powerful models, in an arms race that is no longer made up only of US tech giants. AI offers superpower status, and the floodgates may now be fully open for the UK and other global competitors, as well as China.

What’s for certain is that in the long term, the AI industry’s appetite for energy and other resources is only going to increase.

Peter Howson has received research funding from the British Academy.

This article was originally published on The Conversation. Read the original article.