In late September 2023, Michal Šimečka, leader of the liberal Progressive Slovakia party, lost Slovakia’s parliamentary election to his pro-Kremlin rival, Robert Fico, after a fake audio clip emerged online days before the ballot. In it, a likeness of his voice suggests to a journalist that he would consider buying votes to ensure victory. A few weeks later, Argentina elected the libertarian Javier Milei as president after supporters of both Milei and his establishment opponent, Sergio Massa, harnessed generative artificial intelligence to exaggerate their own candidate’s strengths and smear his rival. Milei fabricated images of himself as a lovable lion protective of the nation, a far cry from his public persona as a chainsaw-wielding firebrand. Massa’s backers transformed the fifty-one-year-old then economy minister into the heroes of various Hollywood blockbusters and cast Milei as a series of reviled villains. Massa’s campaign even posted a fake video on Instagram, since taken down, in which Milei appears to call for a legal market in human organs.

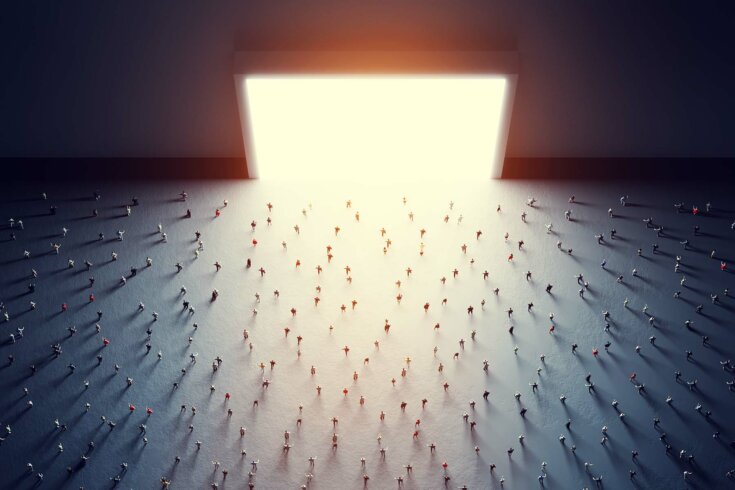

The era of the deepfake campaign has already begun. And as generative AI grows more ubiquitous and sophisticated, the fraying of social cohesion throughout the West in recent years may soon feel quaint by comparison. Rather than stoke outrage, tribalism, and conspiratorial thinking among voters, these new digital tools might soon breed something arguably much worse: apathy. Put off by a civic life awash with misinformation and deepfakes, an even larger portion of the electorate may eventually choose to avoid politics altogether. For them, the time, stress, and confusion involved in discerning fact from fiction won’t be worth it, not when generative AI programs can deliver instant, endless escapism on demand, whether real or fake.

Anti-democratic actors are no doubt already banking on this outcome. “The Democrats don’t matter,” Steve Bannon reportedly said in 2018, before articulating a political strategy that is a mainstay of authoritarian populist movements everywhere. Instead, the former head of Breitbart News and architect of Donald Trump’s rise to power—and still a prolific luminary of the far right—asserted that elections can be swayed by poisoning the information space. “The real opposition is the media,” Bannon argued. “And the way to deal with them is to flood the zone with shit.”

More than seven years after Brexit and Trump’s election as US president, a new tsunami of fake content is about to hit the internet. Technology expert Nina Schick, author of Deepfakes: The Coming Infocalypse, predicts that the generative AI arms race among tech companies, sparked by the release of ChatGPT, could mean that by 2025 upwards of 90 percent of online content will be generated by AI. Whether that figure is ultimately reached remains uncertain. But it’s clear, at least, that 2024, a year in which roughly one in four people worldwide are eligible to vote, will offer a glimpse of what AI-tinged electoral cycles may look like in the not-too-distant future.

While there’s no way to verify whether the deepfake of Šimečka influenced the outcome in Slovakia, the episode underscores the growing appeal of such tactics within democracies. Last June, supporters of Florida governor Ron DeSantis’s now-defunct Republican presidential campaign shared synthetic photos showing Trump kissing Dr. Anthony Fauci, a figure reviled by many American conservatives as the face of COVID-19 lockdown measures. Before that, in April, the Republican National Committee released a slick computer-generated video suggesting that a second Biden term in the White House would turn America into a dystopian hellscape and encourage China to invade Taiwan. In Britain, a recording that surfaced online on the eve of the opposition Labour Party’s annual conference last October, and that has since been debunked, purported to capture party leader Keir Starmer verbally abusing staffers. The context: heading into an election year, Labour was polling well ahead of the ruling Conservatives. In Indonesia, the country’s late former dictator Suharto was digitally resurrected in early January in a video posted on social media by the current deputy chair of the political party Suharto founded. The clip attracted millions of views in less than a week.

Yet, for the West in particular, an even deeper governance issue may be playing out in the background. In liberal democracies—where citizens tend to be more secular and self-oriented and have smaller family networks than in other societies—AI may also accelerate a decades-long erosion of civic engagement and social capital.

A core tenet and strength of liberal democracies is their emphasis on individualism. Yet the rise of personalized echo chambers online has turned this principle into a liability. Across the Western world, the fragmentation of shared reality, among individuals and identity groups alike, is fuelling political extremism and dysfunction. This fragmentation stems in part from the business models of social media companies, whose lucrative market valuations rest on algorithms that cultivate and monetize polarization among users.

Pandemic lockdowns in 2020 underscored just how socially adrift a significant number of people in industrialized nations have become. This trend was first diagnosed in the United States a quarter century earlier by political scientist Robert D. Putnam. In his book Bowling Alone: The Collapse and Revival of American Community, Putnam explains how the rapid social, economic, and demographic changes within the US since the 1960s brought enormous benefits. But they also triggered a steady decay of collective life, both within social groups and among them.

Similar dynamics are evident across nearly all advanced democracies, and they were turbocharged by the strict health measures meant to keep rising COVID-19 case numbers from overwhelming health systems. For countless people, children and youths included, the internet and digital life became their only connection to the outside world. A 2022 systematic review and meta-analysis of eighty-nine studies found a 67 percent jump in screen time among children from early 2020 to mid-2022 and a 51 percent increase among adults over the same period. For adults, this meant screens consumed a large majority of their waking hours on an average day.

Yet even now that life has more or less returned to normal, dating is on the decline, the quality of friendships is withering, school absenteeism is rising, and in-person workplaces haven’t recovered, revealing how our hyperconnected world seemingly deprioritizes actual human connection. The problem is serious enough that in November 2023 the World Health Organization declared loneliness a “global public health concern” and launched a two-year Commission on Social Connection to address the damaging effects of isolation on the well-being of individuals, communities, and societies.

Looking ahead, generative AI risks hardening digitally siloed forms of existence. Speaking in January at the World Economic Forum in Davos, Switzerland, OpenAI CEO Sam Altman suggested that future AI products will feature “quite a lot of individual customization.” Such customization may matter most in programs that are beginning to function as stand-ins for genuine interpersonal relationships, a topic explored in director Spike Jonze’s 2013 film Her, which follows a despondent writer’s romance with an AI operating system. Chatbots deployed in marketing and advertising are already communicating with one another, and they continually surprise observers with how accurately they mimic human patterns of behaviour. For nefarious actors, this creates an opportunity to encourage lonely, isolated people to outsource their social needs (and their destructive, anti-social urges) to chatbots.

Launched in early January, OpenAI’s new GPT Store, which offers custom versions of ChatGPT created by third-party developers, is emulating the toxic engagement-based revenue structure of legacy social media platforms. Despite OpenAI’s stated policy against GPTs “dedicated to fostering romantic companionship,” the store has been overrun by chatbots promising to serve as AI girlfriends. Elsewhere, the California-based software company behind Replika, the original AI companion bot, promotes it as “The AI companion who cares.” Deepfakes are also emerging as an illicit industry. One platform allows users to post bounties soliciting deepfakes of ordinary people for use in personal revenge schemes or identity theft, or to create non-consensual pornography.

Advancements in virtual and augmented reality are underpinning more intensely immersive video gaming—an activity already labelled by the WHO as potentially addictive. And despite corporations’ evident struggles in laying the groundwork for the metaverse, many experts predict that, by 2040, it could be used on a daily basis by half a billion people or more.

On a more granular scale, two Harvard University academics, Albert Fox Cahn and Bruce Schneier, have written that continual engagement with chatbots might reshape users’ approach to other humans in insidious ways. Over time, they argue, this could produce “a world where our conversations with [chatbots] result in our treating our fellow human beings with the apathy, disrespect, and incivility we more typically show machines.”

Amid such a cacophony of computer-generated noise, trickery, and displacement of human social bonds, everyday citizens will struggle to trust anything they can see or hear.

This is likely to push liberal democracies toward one of two outcomes. Voters may become ever more nostalgic for a simpler past, feeding a desire for a strongman to take control of it all. Or citizens may increasingly withdraw from collective pursuits altogether, because those pursuits will seem too fractious and bewildering to navigate. Either outcome would be a boon for authoritarians.

Communities across the developed world are already becoming radically unmoored from a common narrative. Generative AI will lead to even greater social splintering by allowing more and more individuals to bypass the messy and complex human exchanges and contests of ideas that form the bedrock of democracy. Why put in the uncomfortable effort to develop empathy for others and understand new points of view when generative AI can instantly produce an array of options and digital sycophants to entertain you, keep you company, and tell you only what you want to hear?

In a recent interview discussing Germany’s ascendant far-right political party, Alternative für Deutschland, Constanze Stelzenmüller, director of the Center for the United States and Europe at the Brookings Institution, highlighted the need for greater civil society pushback against anti-democratic movements within liberal democracies. Throughout the West, she says, most citizens “have been consumers of prosperity, stability and security and sort of quite unquestioningly handed off the business of politics to our representatives.” But this must change. “I think we’re now living in a time when it’s become very clear that we can no longer just be consumers,” argues Stelzenmüller. “We also have a role and, dare I say, a responsibility as citizens to preserve representative democracy.”

That responsibility risks becoming increasingly starved of time and attention. In 2008, Pixar’s animated movie Wall-E illustrated a future in which humans voluntarily forfeit their agency to machines. Fifteen years later, Pixar’s parent company, Walt Disney, created a secretive task force to study how generative AI could be applied across the conglomerate’s media endeavours, theme parks, and vast advertising systems.

This dark bit of irony encapsulates a general risk of generative AI going forward: at a time when the most pressing local, national, and international challenges require more input and engagement from citizens, the technology promises to create ever more avenues for individuals to opt out of civic life.

Reprinted, with permission, from the Centre for International Governance Innovation.