A new approach to computing could double the processing speed of devices like phones or laptops without needing to replace any of the existing components.

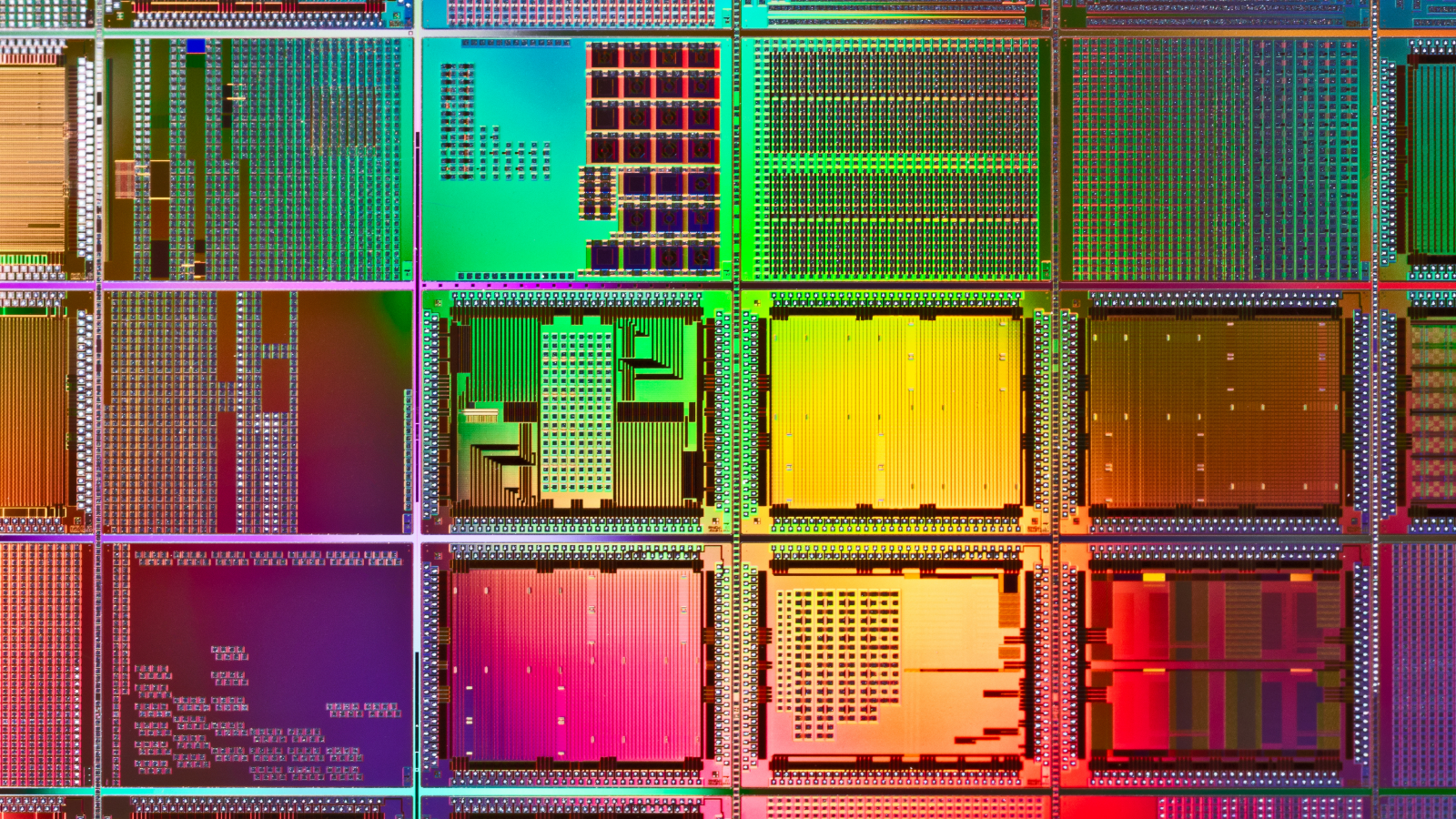

Modern devices are fitted with different chips that handle various types of processing. Alongside the central processing unit (CPU), devices have graphics processing units (GPUs), hardware accelerators for artificial intelligence (AI) workloads and digital signal processing units to process audio signals.

Because of conventional program execution models, however, these components process data from a single program separately and in sequence, which slows processing down.

Information moves from one unit to the next depending on which is most efficient at handling a particular region of code in a program. This creates a bottleneck, as one processor needs to finish its job before handing over a new task to the next processor in line.
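To make the bottleneck concrete, here is a toy Python model of that conventional approach. The three units, the three code regions and their timings are made-up numbers for illustration only. Each region goes to whichever unit is fastest at it, and the next region cannot start until the previous one has finished.

```python
# Toy model of the conventional execution model: each code region runs on the
# one unit judged best for it, and regions run strictly one after another.
# Unit names and timings are hypothetical illustrative numbers, not measurements.

# Hypothetical time (ms) each unit would need for each region of one program.
REGION_TIMES = [
    {"CPU": 4.0, "GPU": 2.0, "TPU": 3.0},  # region 0
    {"CPU": 6.0, "GPU": 3.0, "TPU": 2.0},  # region 1
    {"CPU": 2.0, "GPU": 5.0, "TPU": 4.0},  # region 2
]


def run_sequentially(region_times):
    """Assign each region to its fastest unit and run regions back to back.

    Returns the total elapsed time and how long each unit sat idle.
    """
    units = list(region_times[0])
    busy = {unit: 0.0 for unit in units}
    total = 0.0
    for times in region_times:
        best = min(times, key=times.get)  # the "most efficient" unit for this region
        total += times[best]              # the next region waits for this one to finish
        busy[best] += times[best]
    idle = {unit: total - busy[unit] for unit in units}
    return total, idle


total, idle = run_sequentially(REGION_TIMES)
print(f"total time: {total} ms")
print("idle time per unit:", idle)
```

In this example every unit does 2 ms of useful work but sits idle for 4 of the 6 ms the program takes.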


To solve this, scientists have devised a new framework for program execution in which the processing units work in parallel. The team outlined the new approach, dubbed "simultaneous and heterogeneous multithreading (SHMT)," in a paper published in December 2023 to the preprint server arXiv.

SHMT puts several processing units to work on the same code region at the same time, rather than having them handle different regions of the code in sequence, with each region assigned to whichever component is best suited to that workload.
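As a rough illustration of several units sharing one code region, the Python sketch below splits a region's work items across three units in proportion to how fast each one is. The unit names, the throughput figures and the simple static proportional split are assumptions made for illustration; this is not the scheduling method used in the paper.

```python
# Toy sketch of the SHMT idea: one code region's work is divided among several
# units that run at the same time. The throughputs and the static proportional
# split are illustrative assumptions, not the paper's actual scheduler.

# Hypothetical throughput (work items per ms) of each unit on this region.
THROUGHPUT = {"CPU": 25.0, "GPU": 50.0, "TPU": 25.0}


def split_region(n_items, throughput):
    """Give each unit a share of n_items proportional to its throughput."""
    total_rate = sum(throughput.values())
    shares = {u: int(n_items * r / total_rate) for u, r in throughput.items()}
    # Hand any items lost to rounding down to the fastest unit.
    fastest = max(throughput, key=throughput.get)
    shares[fastest] += n_items - sum(shares.values())
    return shares


def elapsed(shares, throughput):
    """Units work in parallel, so the region ends when the slowest share ends."""
    return max(shares[u] / throughput[u] for u in shares)


n = 1000
single_unit_time = n / max(THROUGHPUT.values())  # whole region on the best unit alone
shares = split_region(n, THROUGHPUT)
print("shares:", shares)
print(f"best single unit: {single_unit_time} ms, "
      f"split across all units: {elapsed(shares, THROUGHPUT)} ms")
```

With these made-up numbers the region finishes in 10 ms instead of 20 ms, because the CPU and TPU contribute work that would otherwise have waited for the GPU alone.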

Another common way to ease this bottleneck, known as "software pipelining," speeds things up by letting different components work on different tasks at the same time, rather than waiting for one processor to finish before the next one starts.

However, software pipelining can never split a single task between different components. SHMT can: it lets different processing units work on the same code region at the same time, and each unit can take on new work as soon as it has finished its share.
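The difference between the two approaches can be sketched with a toy timing model in Python. It assumes a program whose tasks each have two stages, and two units that are equally fast on both stages, so a stage can be split evenly between them; all the timings are hypothetical. Software pipelining overlaps different tasks, which helps when there are many of them, but a single task still takes as long as its stages added together.

```python
# Toy comparison of software pipelining and an SHMT-style split for a program
# whose every task has two stages. All timings are hypothetical, and the two
# units are assumed equally fast on both stages so a stage splits evenly.

STAGE_TIME = [4.0, 4.0]  # ms per stage when one unit does all of it
N_UNITS = 2              # one unit per pipeline stage in this toy model


def sequential(n_tasks):
    """One unit at a time: every stage of every task runs back to back."""
    return n_tasks * sum(STAGE_TIME)


def software_pipelined(n_tasks):
    """Stage i runs on unit i; different tasks overlap, but each task's
    stages still run one after another, each on a single unit."""
    first_task = sum(STAGE_TIME)
    return first_task + (n_tasks - 1) * max(STAGE_TIME)


def shmt_style(n_tasks):
    """Every stage of a task is split across all units working at once."""
    return n_tasks * sum(t / N_UNITS for t in STAGE_TIME)


for n in (1, 10):
    print(n, sequential(n), software_pipelined(n), shmt_style(n))
```

With one task, pipelining gives no gain (8 ms either way) while splitting each stage halves the time to 4 ms. With ten tasks, pipelining cuts the total from 80 ms to 44 ms, and the split-stage model reaches 40 ms.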

"You don't have to add new processors because you already have them," lead author Hung-Wei Tseng, associate professor of electrical and computer engineering at University of California, Riverside, said in a statement.

The scientists applied SHMT to a prototype system they built with a multi-core ARM CPU, an Nvidia GPU and a tensor processing unit (TPU) hardware accelerator. In tests, it performed tasks 1.95 times faster and consumed 51% less energy than a system that worked in the conventional way.
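To put the reported figures in perspective, this short Python snippet expresses them as fractions of the conventional system's time and energy. Only the 1.95x speedup and the 51% energy saving come from the study; the baseline values of 1.0 are normalized placeholders.

```python
# Express the reported results as fractions of the conventional baseline.
# Only SPEEDUP and ENERGY_SAVING come from the study; baselines are normalized.

SPEEDUP = 1.95        # reported: tasks ran 1.95 times faster
ENERGY_SAVING = 0.51  # reported: 51% less energy consumed

baseline_time = 1.0
baseline_energy = 1.0

shmt_time = baseline_time / SPEEDUP                  # about 0.513 of the baseline time
shmt_energy = baseline_energy * (1 - ENERGY_SAVING)  # 0.49 of the baseline energy

print(f"time:   {shmt_time:.3f} x baseline ({1 - shmt_time:.1%} shorter)")
print(f"energy: {shmt_energy:.2f} x baseline")
```

A 1.95x speedup means the same work takes roughly 51% of the original time, which is why the result is described as nearly doubling processing speed.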

SHMT is also more energy efficient, because much of the work normally handled only by more power-hungry components, such as the GPU, can be offloaded to low-power hardware accelerators.

If the software framework is applied to existing systems, it could cut both hardware costs and carbon emissions, the scientists claimed, because workloads would be finished in less time and on more energy-efficient components. If the technology is used in larger systems, it might also reduce the demand for fresh water to cool massive data centers.

However, the study was just a demonstration of a prototype system. The researchers cautioned that further work is needed to determine how such a model can be implemented in practical settings, and which use cases or applications it will benefit the most.