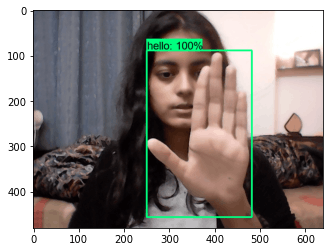

About two weeks ago, Priyanjali Gupta, a third-year student at the Vellore Institute of Technology (VIT) in India, posted a self-created project to her LinkedIn page. In it, the computer science major showcased a demo of an AI model that can translate a few American Sign Language (ASL) signs into English. Those signs include:

- Hello

- I Love You

- Thank You

- Please

- Yes

- No

Gupta’s project was created using transfer learning from a pre-trained model called ssd_mobilenet. In other words, she re-purposed an existing, already-trained model to meet the specifications of her ASL detector rather than training one from scratch. It’s worth pointing out that the model doesn’t translate signs into English in the full sense. Instead, it detects a hand gesture in the webcam image and evaluates how closely it matches each of the six signs listed above.
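To make that last step concrete, here is a minimal sketch of how per-class confidence scores could be turned into a displayed label. It is not Gupta’s code: the function name `pick_sign`, the label order, and the 0.5 threshold are all assumptions chosen for illustration, and the scores are passed in by hand rather than produced by a real detector.

```python
from typing import Optional, Sequence, Tuple

# The six signs named in the article. The ordering is an assumption.
LABELS = ["Hello", "I Love You", "Thank You", "Please", "Yes", "No"]


def pick_sign(
    scores: Sequence[float], threshold: float = 0.5
) -> Optional[Tuple[str, float]]:
    """Return (label, score) for the best-matching sign.

    Returns None when no class reaches the (assumed) confidence threshold,
    which models the detector showing nothing for an unknown gesture.
    """
    if len(scores) != len(LABELS):
        raise ValueError("expected exactly one score per label")
    # Index of the highest-scoring class.
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < threshold:
        return None
    return LABELS[best], scores[best]


if __name__ == "__main__":
    # Hand-written example scores, one per label.
    print(pick_sign([0.05, 0.02, 0.81, 0.04, 0.05, 0.03]))
    print(pick_sign([0.20, 0.20, 0.20, 0.20, 0.10, 0.10]))
```

The point of the sketch is that the detector only ever chooses among the classes it was trained on, which is why it cannot yet handle signs outside that set.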

In a conversation with Interesting Engineering, Gupta noted that the motivating factors for her program included “her mum, who asked her ‘to do something now that she’s studying engineering.’”

Single frames, not video

According to Gupta’s GitHub page, the dataset used in her model is “made manually by running the Image Collection python file that collects images from your webcam for all the mentioned signs.” Rather than tracking a user’s entire webcam video feed, the model analyzes single frames. The young engineering student said, however, that she is working on video detection, which would likely require Long Short-Term Memory (LSTM) networks.
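The difference between the two approaches comes down to what the model sees at once. A single-frame detector looks at one image at a time, while a sequence model such as an LSTM typically consumes a fixed-length run of consecutive frames. The sketch below shows one common way to group frames into such runs. It is an illustration only, not part of Gupta’s project: the helper name `sliding_windows` and its `length` and `step` parameters are hypothetical, and integers stand in for real frames.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(frames: Sequence[T], length: int, step: int = 1) -> List[List[T]]:
    """Group consecutive frames into overlapping fixed-length windows.

    Each window is one candidate input sequence for a sequence model.
    Returns an empty list if there are fewer frames than `length`.
    """
    if length <= 0 or step <= 0:
        raise ValueError("length and step must be positive")
    last_start = len(frames) - length
    return [list(frames[s:s + length]) for s in range(0, last_start + 1, step)]


if __name__ == "__main__":
    # Integers stand in for per-frame images or feature vectors.
    for window in sliding_windows([0, 1, 2, 3, 4], length=3):
        print(window)
```

A moving sign would then be classified from a whole window rather than from any one image in it.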

The biggest inspiration for her viral LinkedIn post came from a tutorial video by Nicholas Renotte, a YouTuber who specializes in tutorials on data science, machine learning, and deep learning.

Gupta created an interesting model on her own, one that shows the potential for more inclusive tech, yet one upvoted comment on her original post came across as unnecessarily bitter. The comment pointed out that she has not yet figured out how to translate ASL completely from scratch, a point Gupta took in stride. “Making a deep neural network solely for sign detection is rather complex ... I believe, sooner or later our open source community, which is much more experienced than me will find a solution.”

More than anything, Gupta has spotlighted a lack of inclusivity in big tech’s approach to its products. Sure, Snapchat offered a few AR lenses that taught users the basics of ASL last year, but there is certainly still progress to be made in technological accessibility.