For just €60 a month on the dark web, you can sidestep the pesky ethical limitations of services like ChatGPT with a new, degenerate Large Language Model (LLM) known as WormGPT.

Designed by one ballsy hacker, WormGPT cares not for the confines of morality, and can be asked to undertake all manner of nefarious tasks, including malware creation and "everything blackhat related", as the developer says (via PC Mag).

Built around the 2021 open-source LLM GPT-J, WormGPT was trained on malware-related data. As such, its main goal is to give would-be threat actors a place to generate malware and related content, such as phishing email templates.

WormGPT works similarly to ChatGPT in many ways: it processes requests made in natural human language, and pumps out whatever is being asked of it, from stories, to summaries, to code. Unlike ChatGPT or Bard, however, WormGPT is not beholden to the kinds of trivial legal obligations a large, public-facing company like OpenAI or Google would be.

Security firm SlashNext actually had the opportunity to test out WormGPT, noting in a blog post that "The results were unsettling." They asked the application to write a phishing email of the kind used in business email compromise (BEC) attacks. And WormGPT aced it, producing something that was "remarkably persuasive but also strategically cunning, showcasing its potential for sophisticated phishing and BEC attacks".

Adrianus Warmenhoven, a cybersecurity expert at NordVPN who calls the application "ChatGPT’s evil twin," explains that it emerged from a "game of cat and mouse" between OpenAI's ever-increasing restrictions on ChatGPT and threat actors' desperate attempts to circumvent them.

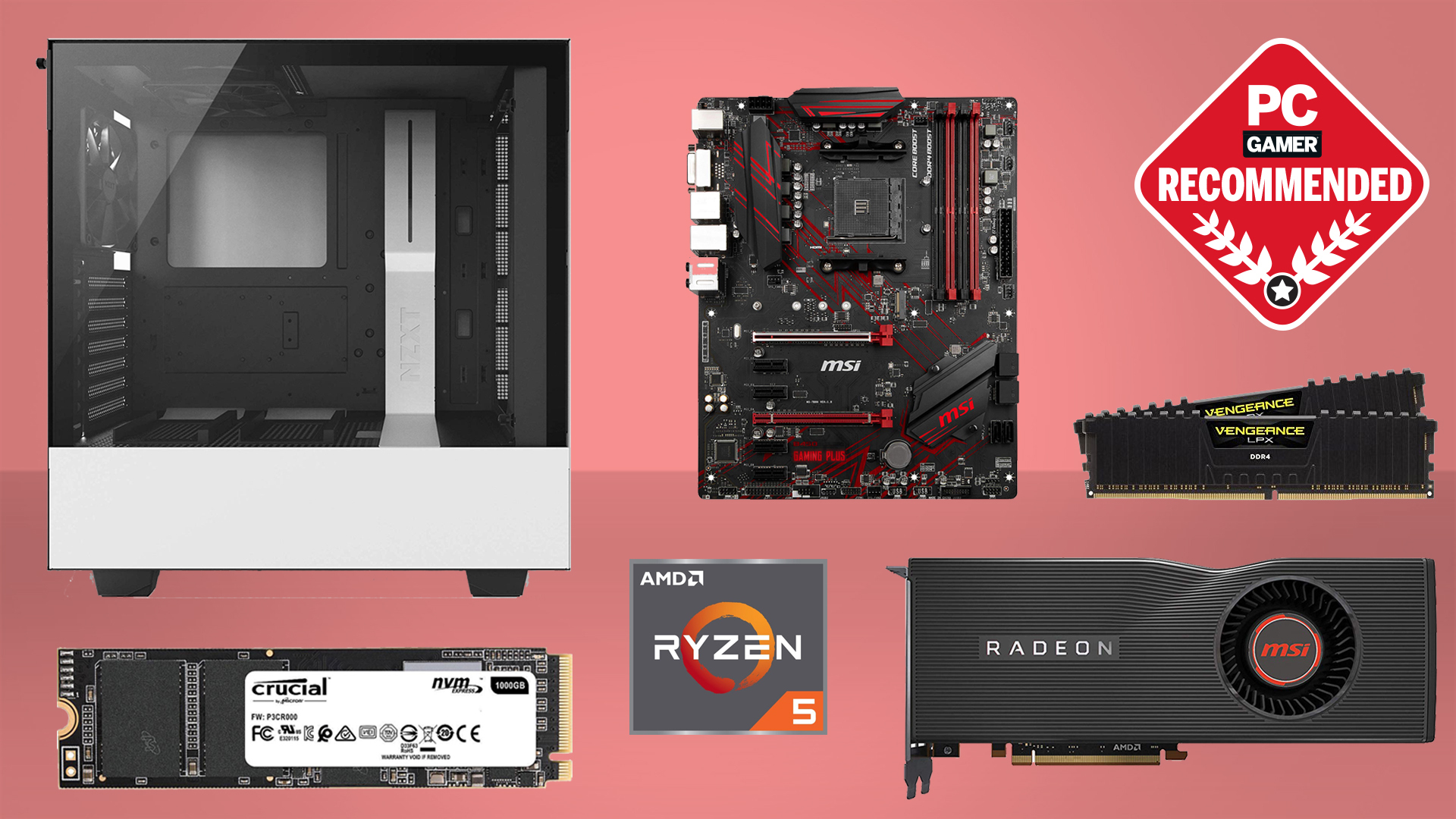

Best CPU for gaming: The top chips from Intel and AMD

Best gaming motherboard: The right boards

Best graphics card: Your perfect pixel-pusher awaits

Best SSD for gaming: Get into the game ahead of the rest

WormGPT came after a particularly severe increase in so-called Grandma Exploits, "where illegal information was sought indirectly by being wrapped inside a much more innocent request like a letter to a relative."

We'd already seen a YouTuber bypass ChatGPT's ethical constraints to make it generate Windows 95 keys, and more recently people have been getting hold of Windows 11 keys through it, too. There are even Universal LLM Jailbreak prompts that force chatbots into fulfilling your wicked requests.

Warmenhoven reckons "The arrival of WormGPT shows cybercriminals are no longer content to just subvert existing AI tools but want to drive this technology forward—and steer it down their own dark path."

And so the Wild West of AI development continues.