- ChatGPT's Advanced Voice Mode could be getting 'eyes' soon

- The feature was initially unveiled in May 2024, but we haven't heard much about it since

- Beta code references 'Live camera', hinting at an impending launch

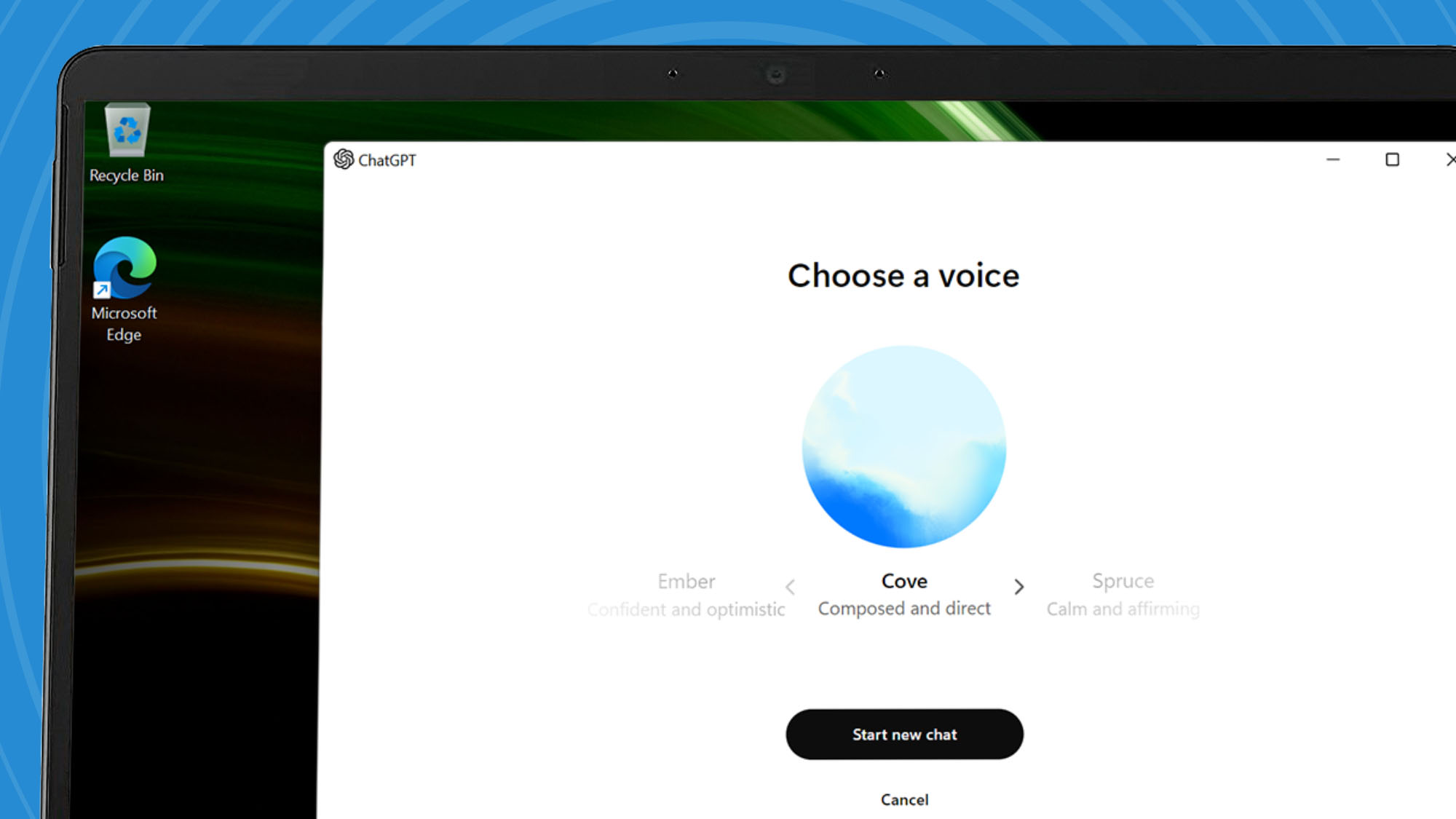

ChatGPT's long-anticipated 'eyes' could be coming to Advanced Voice Mode soon, allowing you to have video calls with AI.

OpenAI originally unveiled the feature in May, showcasing how Advanced Voice Mode could see what you show it and speak back to you about the subject.

In the demo, Advanced Voice Mode was shown a dog and was able to determine the subject and everything else related to it, including the animal's name. Since that demo and an Alpha release, OpenAI hasn't mentioned this feature and we've heard nothing about its development. Until now.

Code in the latest ChatGPT v1.2024.317 beta build, originally spotted by Android Authority, hints at ChatGPT's eyes coming sooner rather than later. OpenAI hasn't officially confirmed the name for the sci-fi video call feature yet, but according to the code strings it's going to be called 'Live camera'.

For users who have been waiting for further information on ChatGPT's Visual Intelligence and Google Lens competitor, this is a good sign that Live camera could be entering beta soon, followed by a wider official release.

Trying #ChatGPT’s new Advanced Voice Mode that just got released in Alpha. It feels like face-timing a super knowledgeable friend, which in this case was super helpful — reassuring us with our new kitten. It can answer questions in real-time and use the camera as input too! pic.twitter.com/Xx0HCAc4To (July 30, 2024)

Would you video call with AI?

A video call option sounds like the natural evolution of ChatGPT's Advanced Voice Mode. While video calling an AI might sound incredibly dystopian, it could end up being a fantastic addition to the way we interact with AI models.

ChatGPT's Advanced Voice Mode and other AI voice assistants, like Gemini Live, have proven that there is far more to interacting with AI than a chatbot. Offering as many ways to interact with AI as possible allows users to decide how it best fits their needs and opens up new ways of implementing the software into daily life. I expect this 'Live camera' functionality to be a game-changer for accessibility needs, especially for those with visual impairment.

Hopefully we'll hear more about 'Live camera' soon, but it's good to know, at least, that OpenAI hasn't forgotten the feature's existence.