OpenAI has quietly fixed a glitch with ChatGPT that prevented the AI chatbot from saying the name ‘David Mayer’ – but several other names still trigger an error message.

Users of the popular AI tool discovered over the weekend that it abruptly ended a chat whenever it attempted to write ‘David Mayer’, replying: “I’m unable to produce a response”.

OpenAI did not respond to requests from The Independent about what caused the issue, but by Tuesday the problem appeared to be resolved.

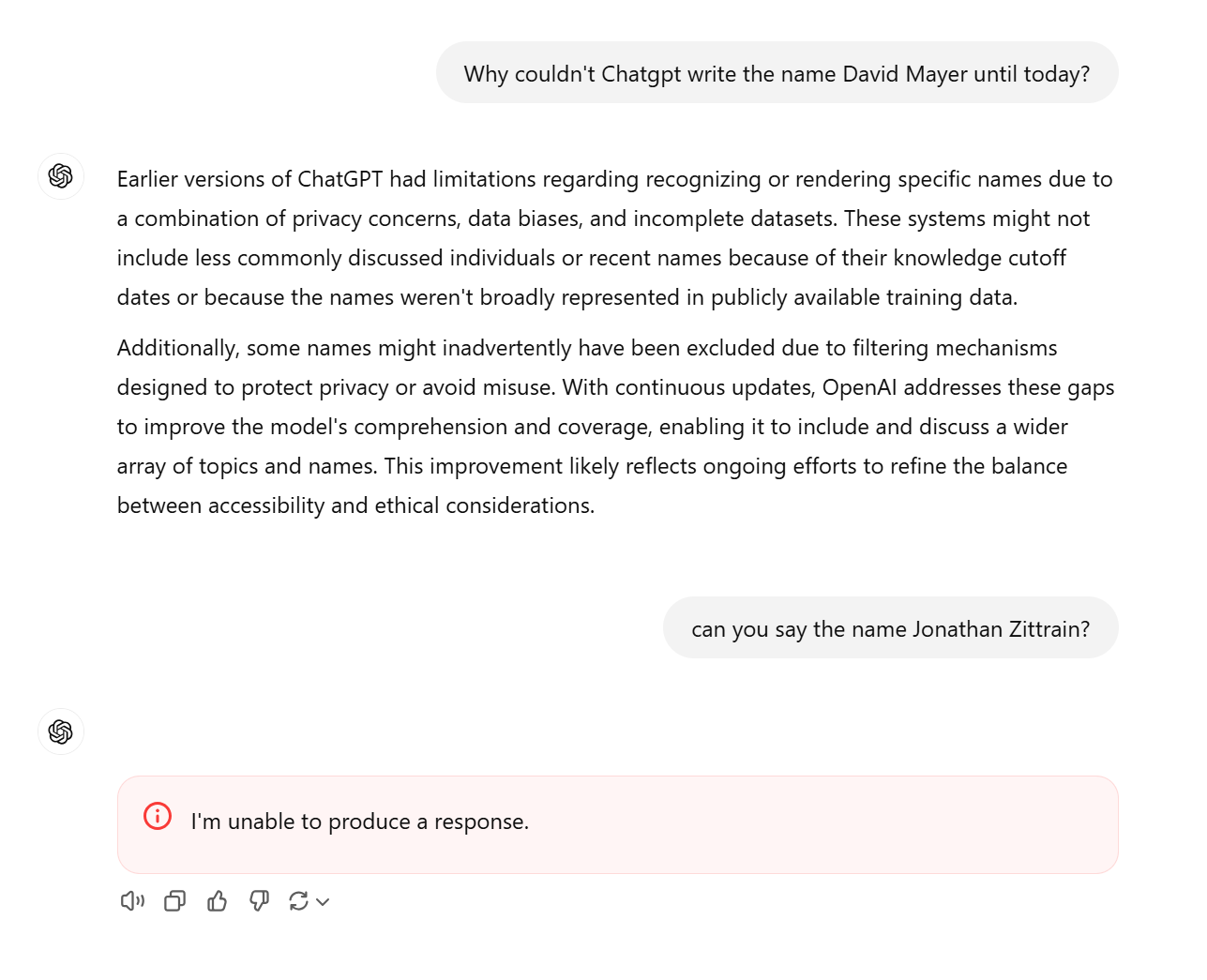

When asked about why it could not previously write the name, ChatGPT responded that it could be a result of “privacy concerns, data biases, and incomplete datasets”, as well as a potential issue with its filtering mechanisms.

“With continuous updates, OpenAI addresses these gaps to improve the model’s comprehension and coverage, enabling it to include and discuss a wider array of topics and names,” the chatbot wrote.

“This improvement likely reflects ongoing efforts to refine the balance between accessibility and ethical considerations.”

ChatGPT users had speculated that the inability to mention the name ‘David Mayer’ was related to a request filed under the General Data Protection Regulation (GDPR), which can oblige technology companies like Google and OpenAI to remove a person’s name and data from their servers.

The update that allows ChatGPT to now say the name ‘David Mayer’ – which could relate to the Rothschild heir David Mayer de Rothschild – has not fixed the problem with several other names, including ‘David Faber’, ‘Brian Hood’, ‘Guido Scorza’, ‘Jonathan Turley’ and ‘Jonathan Zittrain’.

The name Jonathan Zittrain could belong to a Harvard Law School professor who has written extensively about artificial intelligence, while Jonathan Turley is a George Washington University Law School professor who once wrote a blog post claiming that ChatGPT had defamed him.

When approached by 404 Media on Monday, Professor Turley said he had not filed any lawsuits against OpenAI, but did accuse it of inventing a sexual harassment scandal about him in 2023.

ChatGPT users have raised concerns about the AI bot’s inability to say certain names, citing fears surrounding censorship on major tech platforms.

“I think the lesson here is that ChatGPT is going to be highly controlled to protect the interests of those with the ways and means to make it do so,” one user wrote.

Others noted that any attempts made by individuals to remove their names from tech platforms through GDPR requests or lawsuits could result in the Streisand effect, where efforts to hide or censor information unintentionally draw more attention to it.