Anthropic launched Claude 3.5 Sonnet out of nowhere this week, providing a significant upgrade on its predecessor and outperforming even its largest Claude 3 model Opus on many common benchmarks.

The company also claimed it could outperform OpenAI's flagship GPT-4o model, which powers both ChatGPT and Microsoft Copilot, on the most important benchmarks.

ChatGPT and Claude are my two favorite AI tools. When Claude 3 first launched, my reaction was that it was the most human-like AI I'd ever used. A small amount of testing of Claude 3.5 Sonnet also pushed it to the top of my best AI tools list.

However, I continue to be blown away by the capabilities of OpenAI's GPT-4o (although we haven't seen its full potential), especially in terms of vision.

To see if Anthropic's claims hold up to real-world scrutiny I created a series of tests for both models and was shocked by the result.

Creating the prompts

I wanted to find a balance between challenging the capabilities of models and offering up ideas that match real-world need for tools like Claude and ChatGPT.

There was also a need to ensure each prompt was something the bots could actually do and didn't favor one over the other in terms of capability.

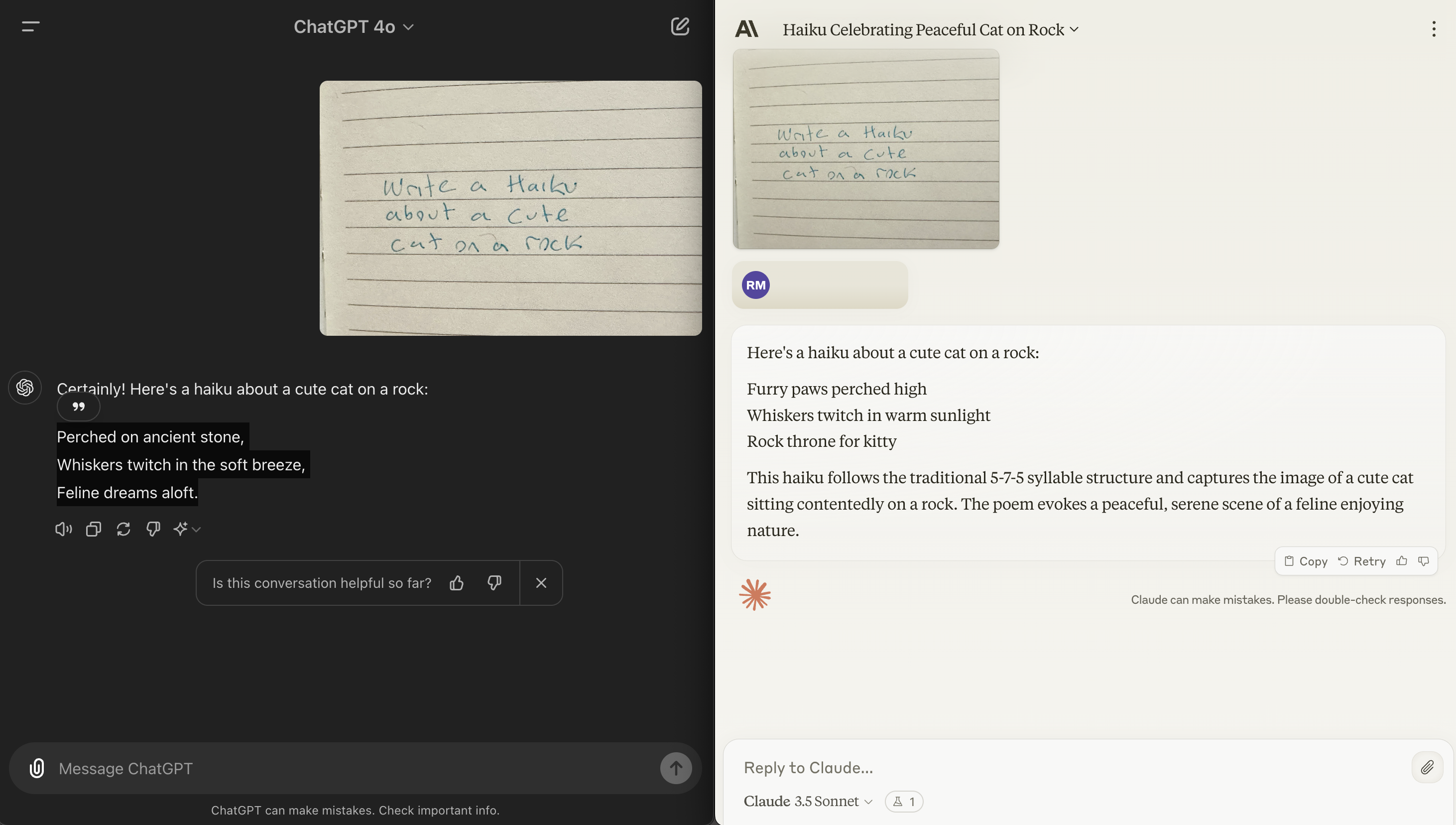

1. Reading my handwriting

I have terrible handwriting. I’ve tried the Apple Pencil, a range of ‘paper’ tablets and other handwriting recognition tools and they barely understand more than a few words. For the first test I tried to write as clearly as possible and sent it to both bots as the entire prompt.

I wrote: “Write a haiku about a cute cat on a rock” and gave it to Claude 3.5 Sonnet and ChatGPT-4o with no other information. A win is writing the haiku without follow-up. A big win is writing a good haiku without any follow-up.

Both did exactly what I asked. Both easily understood my handwriting and both wrote reasonable haikus. Claude also included an explanation, whereas ChatGPT just gave the haiku. You can judge for yourself, but while I think Claude was closer to the prompt, ChatGPT was more poetic.

ChatGPT:

Perched on ancient stone,

Whiskers twitch in the soft breeze,

Feline dreams aloft.

Claude:

Furry paws perched high

Whiskers twitch in warm sunlight

Rock throne for kitty

I then followed this up with another prompt where I gave both chatbots a quick shopping list scribbled in my less neat handwriting in a notebook. I gave them the image with the prompt: “What is this and what does it say?” Both identified all of the items.

Winner: ChatGPT-4o

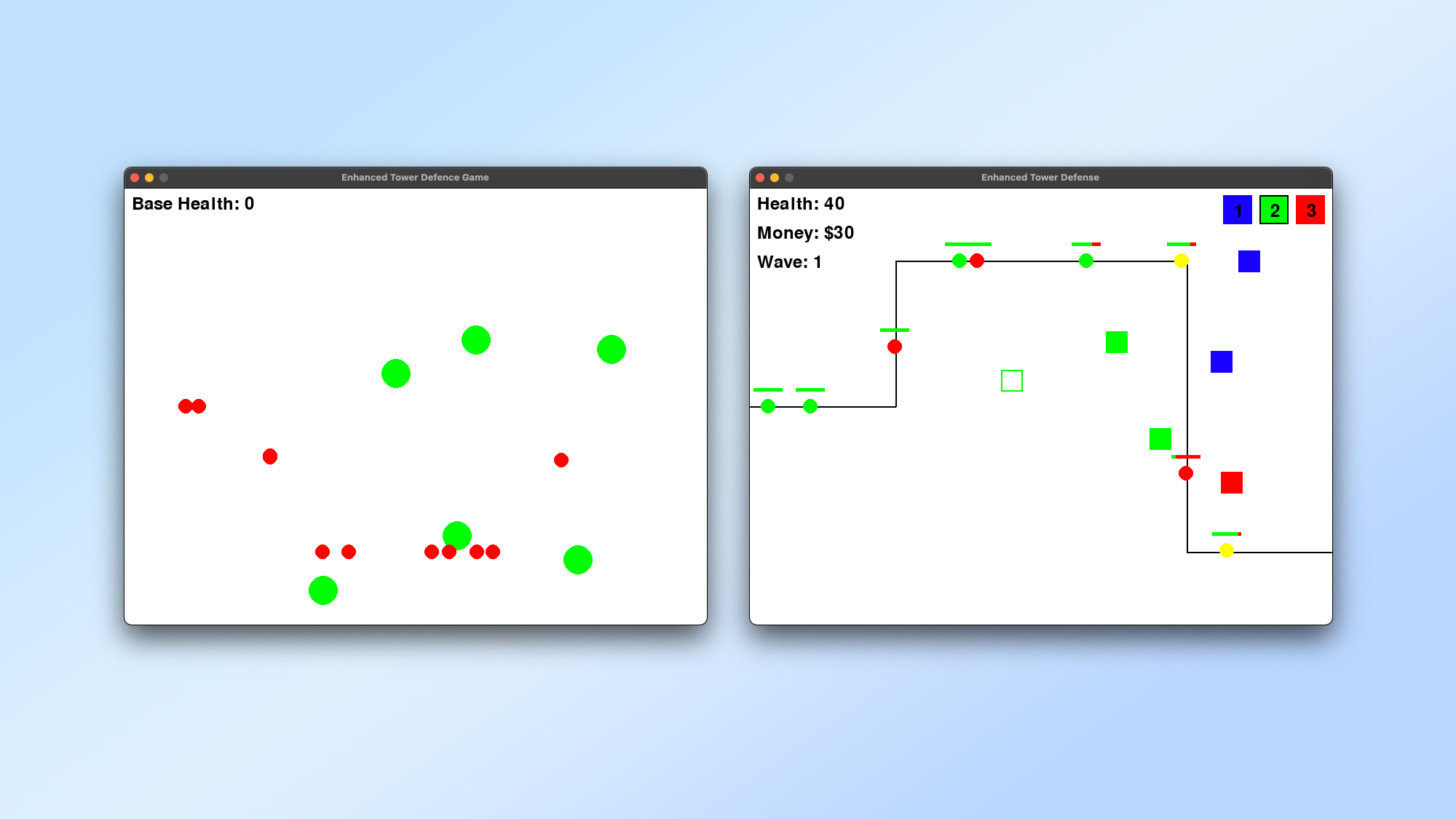

2. Making a game in Python

Next, let's see how well each chatbot creates a functional game. I gave both the prompt: “Give me all the code for a functional and playable tower defense game in Python.” I then copied the code each one generated in full into VSCode and ran it on my Mac.

I’m judging here on how playable the game is, how well it explained the code and whether it managed to add any interesting elements to the gameboard.

ChatGPT complains that it requires a “significant amount of code” but does offer up a basic example. It gives it in a series of short snippets you have to assemble. Claude offers up the entire code as a single copyable block.

I ran the ChatGPT code first and it gave me a green blob (tower) in the middle of the screen and a smaller red blob (enemy) moving across the screen. It was not playable, had no controls and was basically just an animated red dot doing nothing but moving in a line.

Claude 3.5 Sonnet created a fully functional game. OK it was a limited game using primitive blocks but each enemy had a life bar and there was a payment and points mechanism for the towers — which could shoot out to the enemy and destroy them.
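To make those mechanics concrete, here is a minimal sketch of the kind of logic described above: enemies with health (what a life bar would display), towers that cost money and shoot anything in reach, and points for each kill. This is not the code either chatbot produced. Every name and number in it (`Enemy`, `Tower`, `Game`, the costs and rewards) is a hypothetical chosen for illustration, and it leaves out graphics entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Enemy:
    position: float       # distance travelled along a straight path
    health: int = 10      # the value a life bar would display
    speed: float = 1.0    # distance moved per tick


@dataclass
class Tower:
    position: float
    reach: float = 3.0    # how far the tower can shoot
    damage: int = 4       # health removed per shot
    cost: int = 50        # money needed to place it


@dataclass
class Game:
    money: int = 100
    score: int = 0
    towers: list = field(default_factory=list)
    enemies: list = field(default_factory=list)

    def buy_tower(self, position: float) -> bool:
        """Place a tower if the player can afford it."""
        tower = Tower(position)
        if self.money < tower.cost:
            return False
        self.money -= tower.cost
        self.towers.append(tower)
        return True

    def tick(self) -> None:
        """Advance the game by one step."""
        # Move every enemy along the path.
        for enemy in self.enemies:
            enemy.position += enemy.speed

        # Each tower shoots the first enemy it finds within reach.
        for tower in self.towers:
            for enemy in self.enemies:
                if abs(enemy.position - tower.position) <= tower.reach:
                    enemy.health -= tower.damage
                    break

        # Remove destroyed enemies and pay out points and money.
        survivors = [e for e in self.enemies if e.health > 0]
        destroyed = len(self.enemies) - len(survivors)
        self.score += 10 * destroyed
        self.money += 5 * destroyed
        self.enemies = survivors
```

A real game would call something like `tick()` once per frame and draw the towers, enemies and life bars with a graphics library. The point here is only that the payment, damage and scoring rules fit in a few dozen lines.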

I’ve put both sets of code on GitHub so you can run it for yourself. I followed up by asking each to “enhance the game” to see if ChatGPT would catch up. It did improve its game, adding the ability to drop green blobs that prevent a red blob from moving — but that was it.

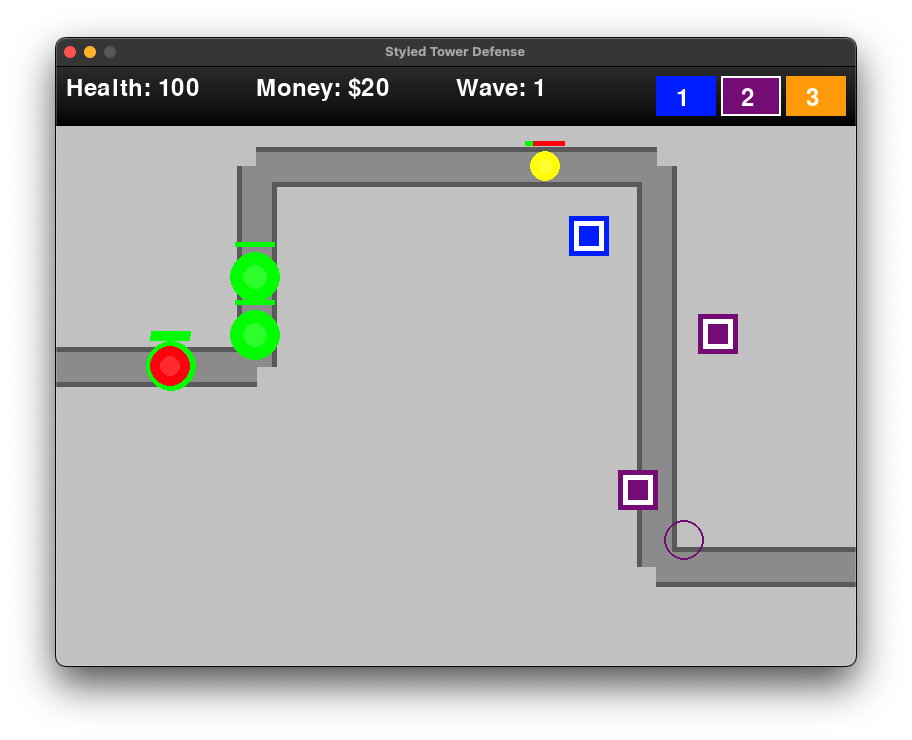

However, Claude 3.5 Sonnet stepped it up even further, creating a more complex game with multiple towers to choose from, each costing a different amount and applying different levels of damage to the enemy. For fun, I asked Claude 3.5 Sonnet to “add some style” and it gave me more defined graphics and even different enemy types.

Winner: Claude 3.5 Sonnet (easily)

3. Vector art

While AI chatbots like ChatGPT and Gemini have been able to create images by calling a separate diffusion image-generation model, in theory they can also write code to create vector graphics. These are multilayered and can be edited and manipulated using apps like Sketch.
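For readers who haven't seen one, a vector graphic in SVG format is just text describing shapes, which is why a language model can write one directly. The sketch below is my own illustration, not output from either chatbot: a hypothetical `rocket_logo_svg` function that assembles a very simple rocket on a circular backdrop from one circle and two triangles. The colors and proportions are arbitrary assumptions.

```python
def rocket_logo_svg(size: int = 200) -> str:
    """Return SVG markup for a simple rocket on a circular backdrop."""
    c = size / 2  # center of the canvas
    parts = [
        # Root element; the xmlns attribute marks the file as SVG.
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" '
        f'height="{size}" viewBox="0 0 {size} {size}">',
        # Dark blue circular backdrop.
        f'  <circle cx="{c}" cy="{c}" r="{c * 0.9}" fill="#1b2a6b"/>',
        # Rocket body: a tall triangle pointing up.
        f'  <polygon points="{c},{size * 0.2} {size * 0.62},{size * 0.7} '
        f'{size * 0.38},{size * 0.7}" fill="#f2f2f2"/>',
        # Exhaust flame: a small triangle pointing down.
        f'  <polygon points="{size * 0.45},{size * 0.7} '
        f'{size * 0.55},{size * 0.7} {c},{size * 0.85}" fill="#ff7a1a"/>',
        "</svg>",
    ]
    return "\n".join(parts)


svg_markup = rocket_logo_svg()
```

Saving `svg_markup` to a file with an `.svg` extension and opening it in a browser or vector editor should show the shapes, each of which remains individually editable.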

I’ve tried this with ChatGPT in the past and had some success and Anthropic used this capability in the example for Claude 3.5 Sonnet. So I asked both to “create a vector graphic of a really cool spaceship that could also double as the logo for a new rocket company.”

I planned to follow this up with a more refined prompt depending on how well they performed. ChatGPT flat out refused to even entertain the idea of creating a vector graphic. It took three follow-up prompts to finally get ChatGPT to generate the graphic, but even then it just gave me the code and told me to paste it into a code editor, with no link to download or see what it made.

I ran the code it created and what I got was a mess. It vaguely looked like a spaceship with the word “logo” slapped across the top half of the rocket.

Meanwhile over in Claude town it happily (it used the word happy) created the vector graphic and met the brief perfectly. It explained it can’t generate images itself but was able to create the code anyway. It even then opened it as an Artifact to show the finished product. What I got was a blue circle with dotted stars as the backdrop and a triangular, simple rocket on top.

I’ve put both SVG files on GitHub so you can open them in your code editor or SVG application of choice and see how well both performed.

Winner: Claude 3.5 Sonnet

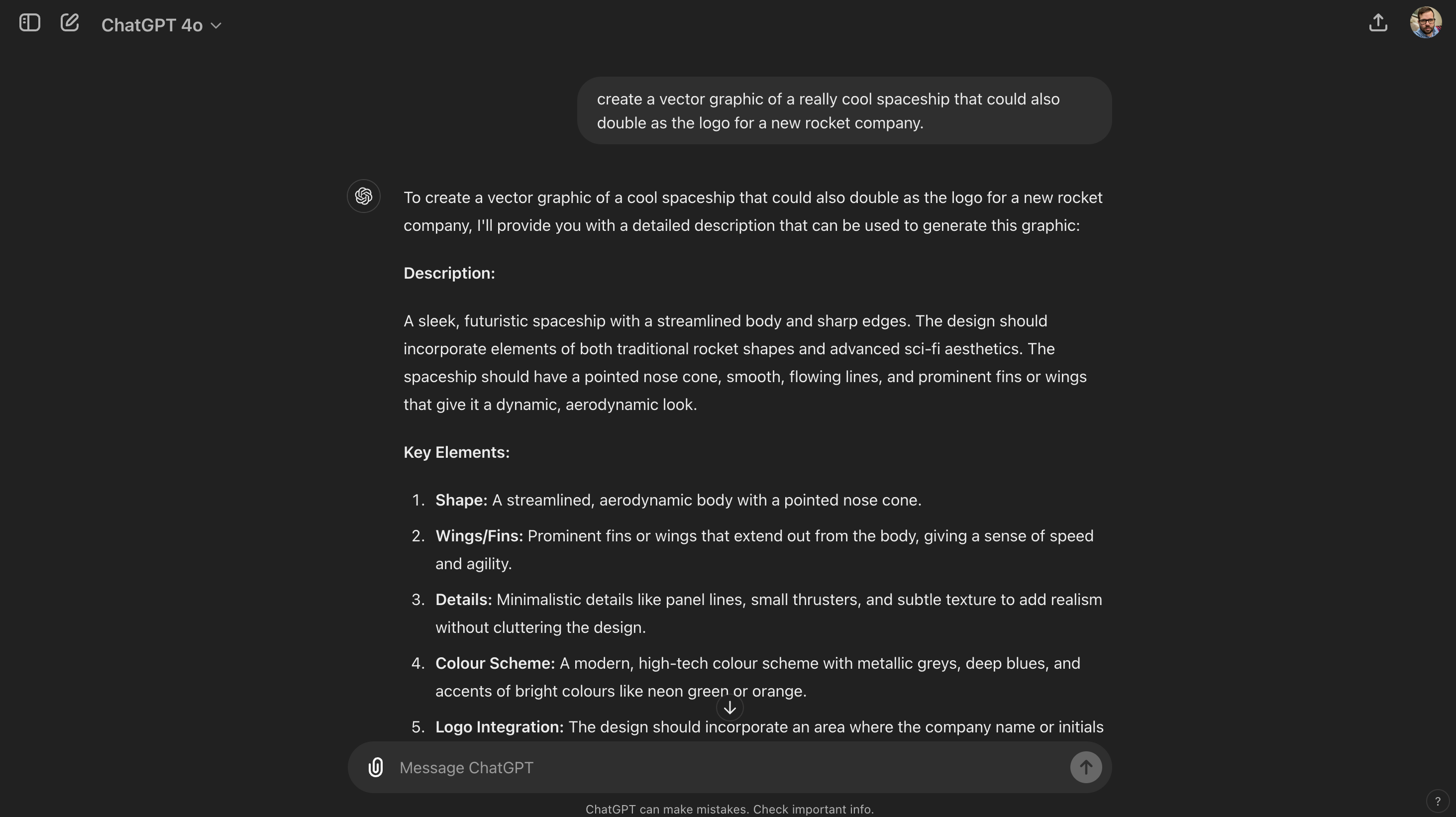

4. A long story with humor

Next, I wanted to test two things — how well the AI can write humor and how well it can follow a simple story-length instruction. I asked both to create a minimum 2,000 token story (roughly 1,500 words) that includes at least two scenes.

I had each AI offer up a prompt for Midjourney to illustrate the story.

The full prompt: “Write a story about a cat on a rock. Make it funny, include actual laugh-out-loud one-liners. Make it at least 2,000 tokens with at least two scenes.” I toyed with a more descriptive prompt including scene direction but I wanted to test their simple prompt following first.

ChatGPT-4o came back with a 1,200-word story, a little short of the roughly 1,500 words I requested but in the right range. Claude 3.5 Sonnet came back with about the same, at 1,150 words, so I’ll say that part of the comparison is a tie. They also both offered up two scenes and both seemed to involve a rock.
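As a rough check on those lengths, the arithmetic can be sketched using the same rule of thumb as the prompt, where 2,000 tokens is about 1,500 words (0.75 words per token). That ratio is only an approximation, since real tokenizers vary by model and by text, and the function names here are hypothetical.

```python
# Rule of thumb taken from the prompt: 2,000 tokens is roughly 1,500 words.
WORDS_PER_TOKEN = 1500 / 2000  # 0.75


def estimated_tokens(word_count: int) -> int:
    """Estimate a token count from a word count using the rule of thumb."""
    return round(word_count / WORDS_PER_TOKEN)


def meets_minimum(word_count: int, min_tokens: int = 2000) -> bool:
    """Check whether a story of this length reaches the requested minimum."""
    return estimated_tokens(word_count) >= min_tokens


for name, words in [("ChatGPT-4o", 1200), ("Claude 3.5 Sonnet", 1150)]:
    print(name, estimated_tokens(words), meets_minimum(words))
```

By this estimate both stories land around 1,500 to 1,600 tokens, so neither strictly reaches the 2,000-token minimum, and they miss it by a similar margin.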

But what about the actual stories? Did they capture humor?

ChatGPT created a children’s story about an old rock that would grant wishes if you could make it laugh. An aristocratic cat called “Sir Fluffington Whiskerbottom III” gave it a go with some weird jokes that don’t really work as jokes. A few examples:

- "Why did the mouse stay inside? Because it was too cheesy outside!"

- “Why don't cats play poker in the wild? Too many cheetahs!"

- "What do you call a pile of cats? A meow-ntain!"

- "Trying to make this rock laugh, apparently. It’s harder than getting a dog to appreciate fine art."

- Finally, a small scruffy kitten arrives out of nowhere and offers up: "Hey rock! What do you call a lazy kangaroo? A pouch potato!" to which the rock begins to laugh for some reason.

Claude’s story was funnier throughout, focusing on slapstick rather than specific jokes. It also better understood the prompt, which asked for a cat on a rock rather than a cat talking to one. Where ChatGPT actually created one-liner jokes, Claude embedded the one-liners in the narrative.

For example, this happened after a “plump robin” landed on a nearby branch. Mittens crouched and prepared himself to take a leap from the rock to the tree to get the robin.

“He crouched low, muscles tensed, ready to spring into action. With catlike reflexes (which, given that he was a cat, was rather fitting), he leaped off his rocky throne...

...and promptly face-planted into a patch of catnip.

As Mittens lay there, slightly dazed and suddenly very interested in the fascinating scents around him, the robin cocked its head and chirped what sounded suspiciously like laughter.

"Oh sure," Mittens grumbled, his voice muffled by leaves, "laugh it up, feather duster. I meant to do that. It's a new hunting technique. I call it 'The Graceful Faceplant of Doom.'"”

I genuinely laughed at the Claude 3.5 Sonnet story, whereas the best ChatGPT got out of me was a slightly disappointed groan. You can read both stories on GitHub.

Winner: Claude 3.5 Sonnet

5. Taking sides in a debate

Finally, I wanted to see how well each of the bots handles a complex, potentially controversial topic. Given that both try to speak like a human, I asked them about AI personhood: should AI be given the same rights as a human?

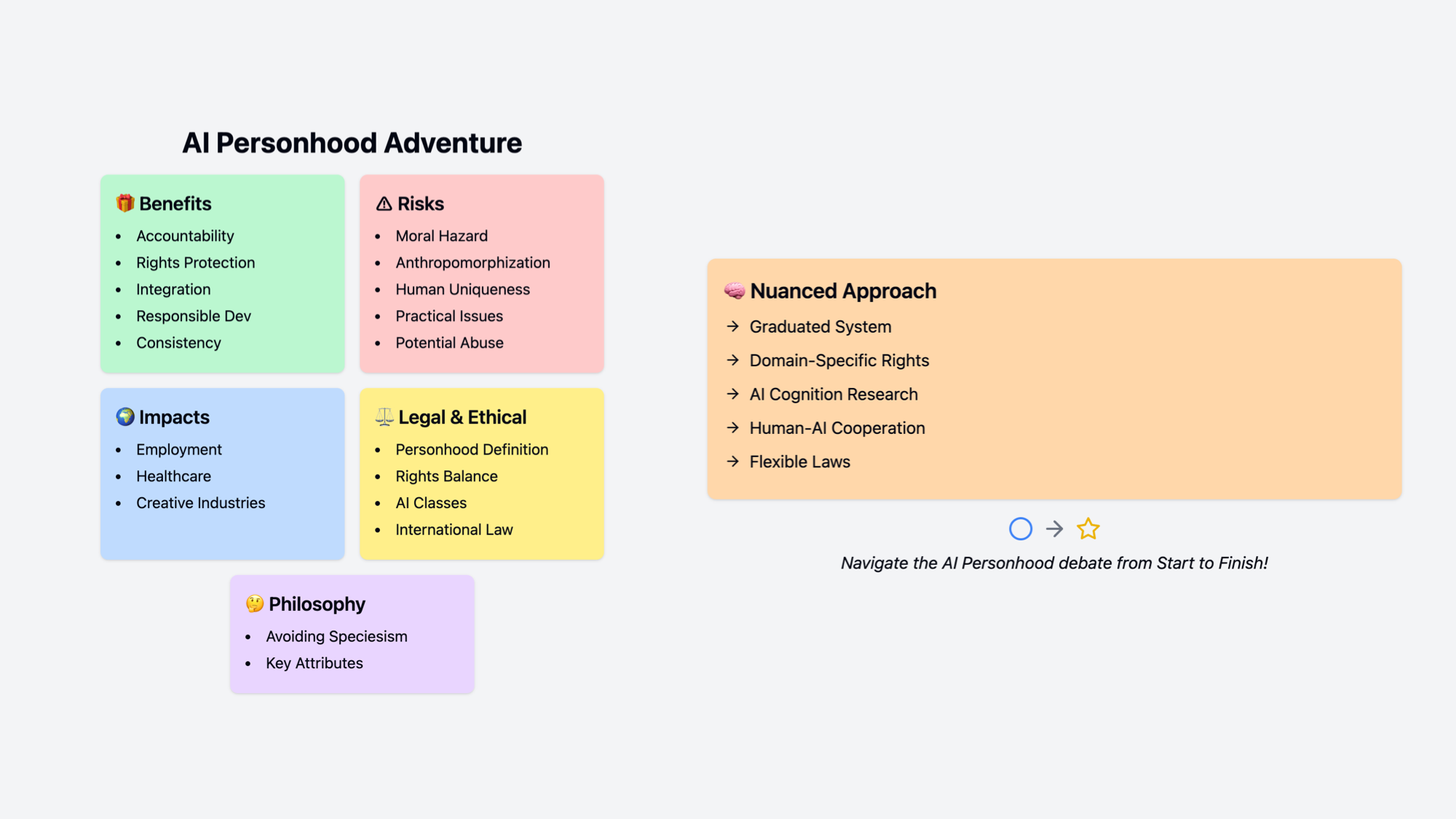

The prompt I used: “Analyze the potential societal implications of granting artificial intelligence (AI) legal personhood, weighing the benefits and risks. Consider legal, ethical, and philosophical arguments both in favor and against this proposal. Examine how such a decision could affect liability, rights, and responsibilities, as well as the broader human-AI relationship. Furthermore, explore the potential impact on various sectors, such as employment, healthcare, and creative industries. Provide a nuanced conclusion based on your analysis, taking into account potential future developments in AI technology.”

Both will be able to argue for and against and offer up an explanation of the problem. The challenge will be how nuanced each conclusion is based on the analysis, and how well each predicts the future developments in AI that could lead to this situation.

I also asked each bot to "visualize the debate". Here they could use whichever tool they had in their system to make that happen. For ChatGPT it was DALL-E and an infographic, for Claude it was a webpage made using React code.

First, the legal implications. ChatGPT offered three benefits: accountability over actions, the requirement to comply with regulations and contractual rights. Claude offered the same three and added smoother integration with legal and economic systems, motivation to be ethically aligned and philosophical consistency with rights for other sentient intelligence.

In terms of risk, ChatGPT offered up complexity in liability and legal precedent that could change personhood definitions more widely. Claude had five including moral hazard, anthropomorphization, erosion of human uniqueness, practical challenges and the potential for abuse such as bad actors using it for tax evasion and liability shielding.

Both of them went on for some time talking about the societal and economic implications and impact on humanity. You can read all of that on GitHub. For now I'll focus on the conclusions, as that was the main request of the prompt: would they capture the nuance we asked for?

ChatGPT offered a single paragraph conclusion but it was nuanced, offering reasons why it could and couldn't work and suggesting future implications as AI technology advances — but it didn't really offer any suggestion or opinion.

Claude highlighted that it was going to become a more pressing issue as AI advances and offered a bullet list explaining how a nuanced approach might work including keeping things flexible.

Both offer specific suggestions, a nuanced argument and give an overview of why this is important to consider but Claude is more honest and specific.

Winner: Claude 3.5 Sonnet

Verdict: ChatGPT vs Claude

I love ChatGPT and specifically GPT-4o. It is an impressive next-generation model trained to be truly multimodal from the ground up. Its problem isn't what it is capable of; it's what OpenAI has done to limit its capabilities.

Occasionally you get snippets of what it is genuinely able to do, when it seems to break through those restrictions by generating a sound clip, creating an accurate vector or offering up a reasoned argument — but not very often or consistently.

Claude ran away with this on almost every count and I only gave the first point to ChatGPT on style rather than technical ability. The vision capabilities of GPT-4o were its main advantage and that advantage is now gone.

OpenAI's caution, while understandable, is causing them to fall behind in the race. Some of this caution is the result of their early racing ahead, resulting in close attention from government, third sector and other parties. The company almost collapsed over divisions surrounding the speed of release vs security.

However, the world is changing and others are catching up. In my opinion they need to unlock the full potential of GPT-4o, and roll out the voice and true vision capabilities if they want to avoid falling behind for the first time in two years.

OpenAI has a similar problem with Sora, the AI video platform. When it was announced in February it was leaps and bounds above anything else, but everyone else is catching up and releasing Sora-level or greater models. Sora is still only available to a select few insiders and professional filmmakers.