ChatGPT has officially arrived on smartphones. OpenAI recently brought its artificial intelligence chatbot to the Apple App Store. The chatbot's iPhone version is already one of the most popular free apps on the App Store.

But getting too friendly could put your privacy at risk in novel, alarming ways.

ChatGPT app is free - but carries other costs

ChatGPT's new app may be free to download. But that doesn't mean there aren't hidden costs. To paraphrase pathbreaking artist Richard Serra, if something is free, then you're the product.

According to TechRadar, the ChatGPT app provides new users with an interesting warning that “Anonymized chats may be reviewed by our AI trainer to improve our systems.” Furthermore, OpenAI states that when users “use our services, we may collect personal information that is included in the input, file uploads, or feedback you provide.”

OpenAI promises that its system renders conversations anonymous before the human review stage, but that only removes your personal data from the metadata of the file. Anything personal typed into the chat box itself, such as names, locations or personal attributes, remains fully accessible to human reviewers.

So to simplify, your private questions don't live in some walled garden where only an anonymous computer algorithm ingests data and grows organically by itself. Any question you submit to the ChatGPT app about your finances, your dating troubles, your health problems and more will be sent to an OpenAI database where it could be read by a human reviewer. And once you start using the app, you have no way to opt out of this data collection regime.

An industry rife with data protection issues

Other major tech players entrusted with safeguarding user data don't have sterling track records either. Here are a few of the biggest privacy breaches in recent years:

Amazon

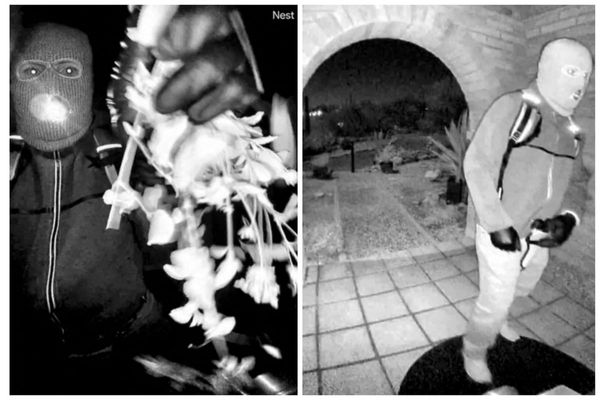

In recent years, news reports surfaced that hackers had accessed Ring home security cameras and used them to speak to and taunt their owners. Hackers even set up a forum to livestream some of the things that they were doing. It was an alarming violation of privacy, one that could have been used for future intrusions or leverage over the victims.

On top of that, Amazon's Alexa devices have recorded private conversations without warning and sent them to random phone numbers in people's contact lists.

Facebook

Facebook's dismal history of protecting users' data is highlighted by the Cambridge Analytica incident, where a political firm paid an app designer to access the personal data of 87 million Facebook users. Facebook is finalizing a whopping $725 million privacy settlement to compensate affected users.

LastPass

Beginning in August 2022, password management provider LastPass suffered a string of devastating hacks that exposed millions of users' private data, as well as company secrets. In the most recent breach, cyber intruders hacked a senior engineer’s home computer to gain access to a critical corporate vault available to only four top employees.

One hacker or hacker group now has encrypted copies of at least 30 million LastPass customers' entire password vaults, along with the company's most sensitive internal secrets and digital access credentials.

Google

Google has agreed to nearly $600 million in privacy settlements criss-crossing the U.S. between 2022 and 2023. Attorneys general from across the country brought consumer protection lawsuits against Google for violations ranging from using protected biometric data to train a photo algorithm, to persistent location tracking by Google programs that assured users they weren't being tracked.

The bottom line

The takeaways from ChatGPT's privacy questions and recent tech company data breaches are clear. Guard your privacy closely. Carefully consider which apps to download. Read the privacy policy and consult app privacy settings to increase your data safety. And as much as possible, only store things on your phone or online that you could bear to have leaked onto the wild west of the internet.

When OpenAI's CEO Sam Altman threatens to leave the EU because of impending privacy regulation, it might be a sign to proceed cautiously with ChatGPT and its cousins in the AI chatbot space.