That's a wrap on AWS re:Invent 2024!

It was a jam-packed few days at the computing giant's annual event in Las Vegas - we saw keynotes by AWS CEO Matt Garman, featuring Amazon CEO Andy Jassy, then Dr. Swami Sivasubramanian, VP, AI and Data, AWS, before the show wrapped up with a talk from Dr Werner Vogels, VP and CTO at Amazon.com.

You can follow our live blog below for all the updates as they happened at AWS re:Invent 2024 - and if you think anything was missed, check out our list of news here.

Good morning from a beautiful, sunny Las Vegas! The sun is out and preparations are underway for AWS re:Invent 2024, which officially kicks off tonight with an introductory keynote from Peter DeSantis, Senior Vice President of AWS Utility Computing, before the main action starts tomorrow.

As noted, the main program at AWS re:Invent 2024 starts tomorrow, when AWS CEO Matt Garman will take to the stage for his keynote.

There will no doubt be lots of news and updates - and we already know the identity of one special guest - his predecessor, and current Amazon President and CEO Andy Jassy.

Good morning from a bright and chilly Las Vegas!

We're all set for the day one keynote, hosted by AWS CEO Matt Garman, which is starting in just over two hours...

As always, AWS re:Invent 2024 is one of the biggest events on the tech calendar, with an estimated 60,000 in-person attendees, and many more tuning in online.

Fingers crossed we can get a seat at the keynote...

The queues to get in were absolutely mighty, but we're in and seated, nice and early, to be bombarded by some pumping techno (just what you want, first thing in the morning, hey).

After a pretty nifty laser intro and an introductory video talking about the need for innovation, security and "seeking the next impossible" - it's time for the main event.

The lights go down and Matt Garman, AWS CEO, takes to the stage.

Garman reminds us it's his first re:Invent as the head of AWS, and welcomes us all to this year's event.

"Today, the largest enterprises in the world...are running on AWS," he declares, pointing to customers ranging from enterprises to governments.

After a shout out to developers and start-ups, Garman introduces the four main themes of today's keynote.

"When you're innovating, you want to start with the customers, but then you don't just deliver what the customer wants," he adds, outlining how this approach is part of AWS' DNA.

AWS' approach is about taking a little bit from every great part of technology, and combining it into its technology, Garman adds.

These "building blocks" are still the method AWS uses today - and today there are hundreds of AWS services on offer.

Start-ups are going to play a major role in today's keynote, with Garman saying he's keen to highlight the great work they're doing with AWS tech.

First up is protein analysis firm EvolutionaryScale, which is using AWS to help decode and develop new proteins that could help tackle diseases and disorders, and disrupt the pharma industry.

Garman says there are a lot of reasons why companies choose AWS - but top of mind is security.

"We knew that security has to be the foundation of what we built this business on...you can't bolt it on, you have to do it from the beginning."

"Everything starts with security - it's one of the reasons so many companies trust AWS."

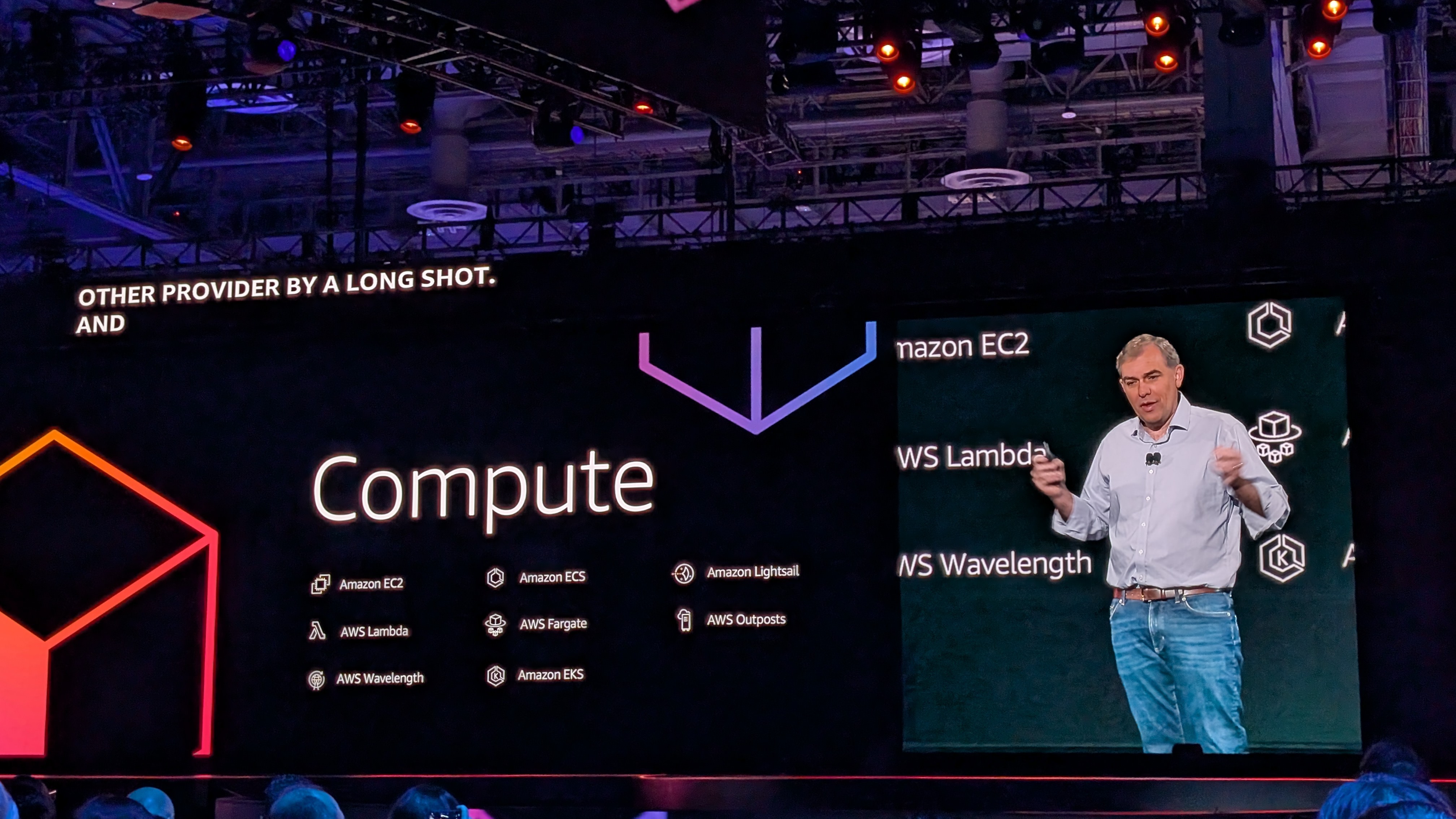

On to the first "building block" component of today's keynote - Compute.

Compute is Garman's old haunt, and at AWS it is dominated by Amazon EC2, its cloud compute powerhouse.

"AWS has the fastest and most powerful network...and we work hard to make sure you always have the latest technology," he notes.

Keeping this level of innovation is tough, but AWS Nitro helps, Garman notes - offering the chance to offload virtualization for compute and security onto its own chips, giving better flexibility and performance.

Here's our first big announcement of the day - Graviton4 - which is getting a number of new features and upgrades.

Graviton is already widely used by EC2 customers - but the next big computing challenge is evolving and growing workloads.

AI workloads, specifically generative AI, mainly run on GPUs, and AWS and Nvidia have been collaborating for years to ensure this is a smooth experience.

But now, the two have combined to announce P6 instances. Built on the latest Nvidia Blackwell GPUs, they will be available early in 2025 for customers.

Moving to AI chips themselves, Garman highlights the success of Trainium, which is saving workloads for customers across the world.

Today, AWS is announcing the general launch of Trainium2-powered Trn2 instances - offering a much more powerful experience, at better price performance.

The "next generation" of Trn instances is built entirely in-house by AWS, featuring 16 Trainium2 chips, designed for the demanding workloads of cutting-edge AI performance, giving customers more choice and customization options.

What about those models that are too large to fit on a single server? AWS is announcing the exciting-sounding EC2 Trn2 UltraServers for those giants, offering a staggering 64 Trainium2 chips across four Trn2 instances, connected with NeuronLink, giving huge amounts of possible power - up to 83.2 FP8 petaflops.
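As a quick sanity check on those figures, the arithmetic can be sketched as below. The per-chip and per-instance numbers are simply derived by dividing the quoted totals, on the assumption that peak throughput scales linearly across chips; they are not figures stated in the keynote coverage itself.

```python
# Figures quoted in the coverage above.
CHIPS_PER_INSTANCE = 16            # Trainium2 chips in one Trn2 instance
INSTANCES_PER_ULTRASERVER = 4      # Trn2 instances linked by NeuronLink
ULTRASERVER_FP8_PETAFLOPS = 83.2   # quoted peak ("up to")

# Derived values (assumption: peak throughput divides evenly across chips).
chips = CHIPS_PER_INSTANCE * INSTANCES_PER_ULTRASERVER
per_chip = ULTRASERVER_FP8_PETAFLOPS / chips
per_instance = ULTRASERVER_FP8_PETAFLOPS / INSTANCES_PER_ULTRASERVER

print(f"chips per UltraServer: {chips}")
print(f"implied peak per chip: {per_chip:.2f} FP8 petaflops")
print(f"implied peak per instance: {per_instance:.1f} FP8 petaflops")
```

The chip count works out to the 64 quoted on stage, which suggests an UltraServer is best read as four 16-chip Trn2 instances joined together rather than 64 chips plus four extra instances.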

AWS is already working with Anthropic to build Project Rainier, the world's largest compute cluster for ML training.

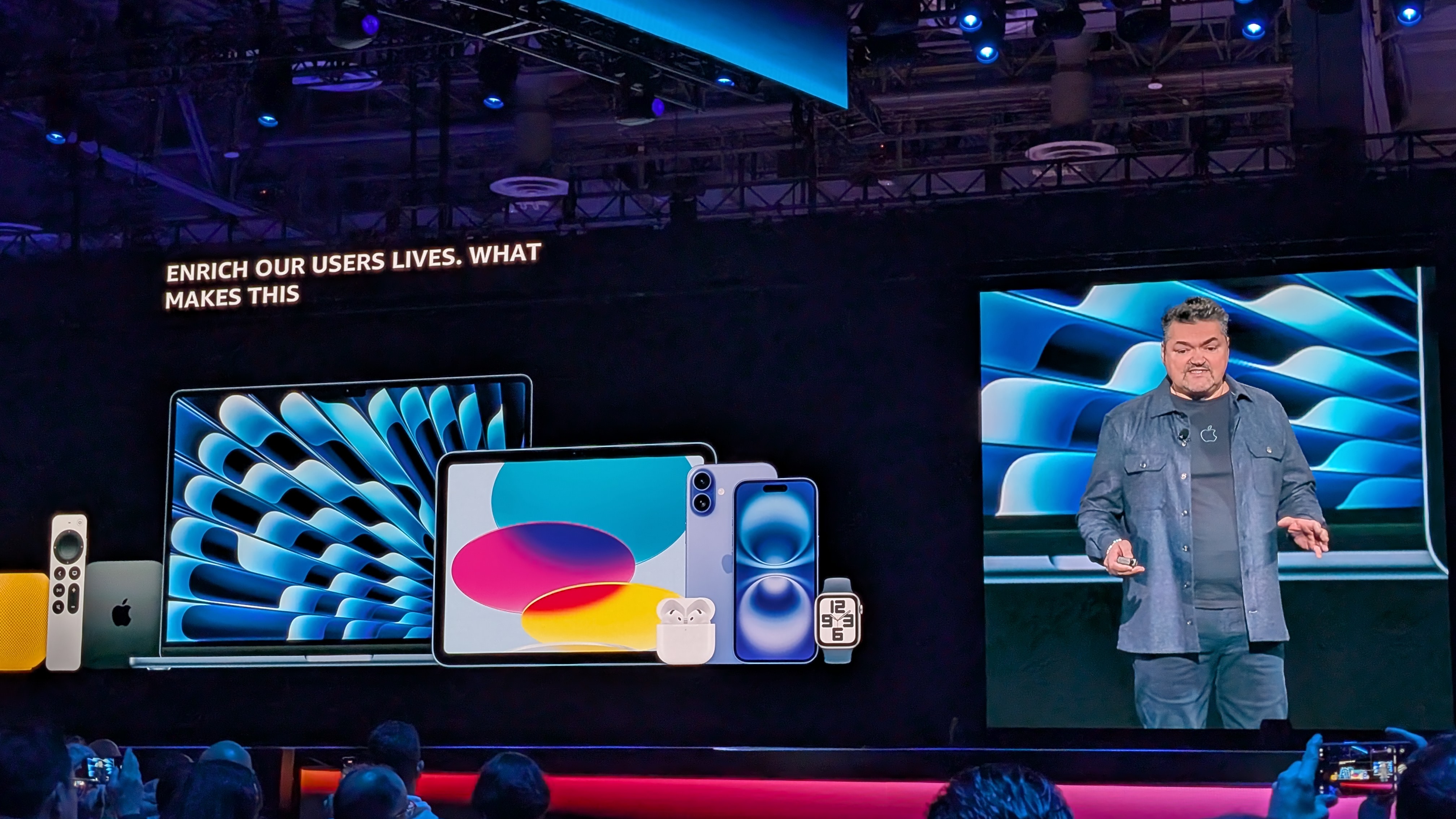

Garman now introduces Benoit Dupin, senior director, machine learning and AI at Apple, to talk about how it is working with AWS on the next generation of models and training.

Dupin notes Apple has to bring together software, hardware and services to provide a complete set of experiences for customers, especially for businesses.

AWS is giving Apple the tools it needs to evolve its AI and ML work, offering reliable and robust infrastructure, Dupin says.

This is especially pertinent with the launch of Apple Intelligence, the company's AI platform, which is powered by Apple's own in-house models - but backing this up is AWS, which offered support across "virtually all phases of the AI and ML lifecycle", Dupin notes.

The pace of generative AI means there's a need for companies like AWS to continually innovate - and Trainium3 will be coming later in 2025.

Garman says it will offer 2x performance over Trainium2, and better energy efficiency and power, too - more to come soon.

130 million new EC2 instances are launched every day, Garman notes, moving on - it's time to talk about storage - after all, there's no room for compute without storage.

Amazon S3 has been an unrivalled success. Built to handle huge spurts in growth, it now handles over 400 trillion objects, Garman notes, adding that AWS has thousands of customers storing over a petabyte on S3, some of whom even have more than an exabyte.

But storage needs to be affordable as well as flexible, Garman notes, and S3 can offer a wide range of options for customers with variable needs.

This all makes S3 the best option to run data lakes around the world, he notes - but there's still room to improve - particularly when supporting large analytics and AI use cases.

Garman notes that there has been a huge growth of tabular data - but it's easy, especially for bigger customers, to lose track of what this can actually do for your business.

In order to help with this, AWS is announcing S3 Tables - a new way of getting the information you need from your data, pulling the control in-house, and improving performance.

S3 Tables offers up to 3x faster query performance, and up to 10x more transactions per second for Apache Iceberg tables.

Managing metadata is another big hurdle for major companies looking to get the most out of their data - finding this information can demand huge workloads and tough management.

To help with that, AWS is announcing S3 Metadata - an easier, more queryable way for customers to find and use their data in S3, updating in real time.

"From day one, we've been pushing the boundaries of what's possible with cloud storage," Garman notes - and AWS isn't done, aiming to continue solving the biggest challenges when it comes to storage and compute going forward.

After another start-up showcase, Garman is back, and we're moving on to databases.

Databases are one of the most challenging areas of data management for businesses - Garman says even AWS finds it tough to handle some of the biggest challenges.

Although purpose-built databases are popular, relational options remain in high demand, he notes - especially Amazon Aurora, which celebrates its 10th birthday this year.

As a present, and a reward for Aurora's top growth, AWS is upgrading the platform once again, with Aurora DSQL.

Making it the fastest distributed SQL database around, this is "the new era of Aurora", delivering virtually unlimited scale across regions with zero infrastructure management.

We're now hearing from Lori Beer, global CIO at JPMorgan Chase, about how it is using AWS to keep modernizing and innovating - where it uses the likes of EC2, Aurora and S3 to push the boundaries.

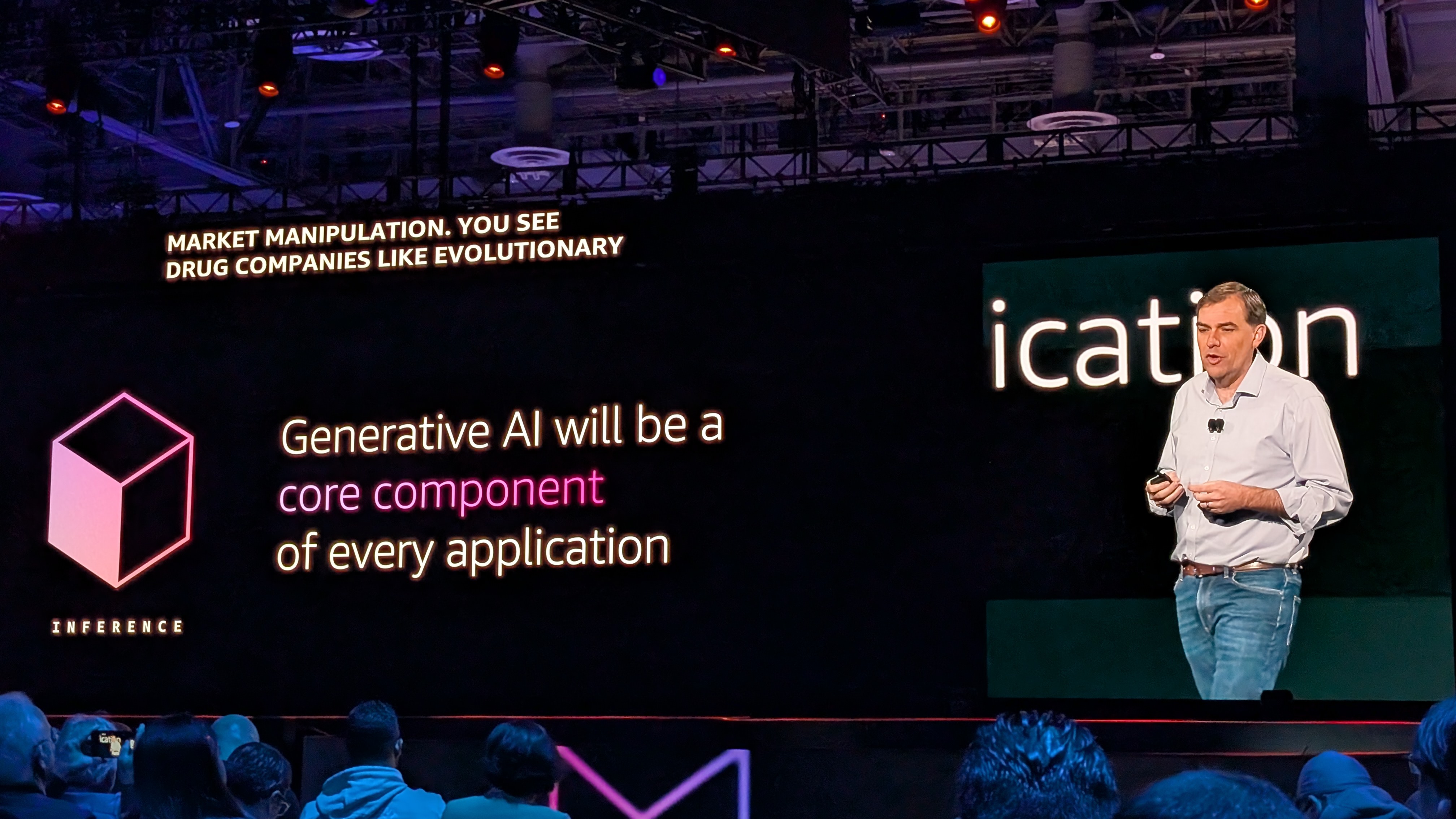

Garman is back, and now it's time to dig a little deeper into AI - particularly inference.

"AI inference is going to be the building block for every application - it has the opportunity to transform every single organization," he notes. "We're just at the beginning...every application is going to use inference in some way."

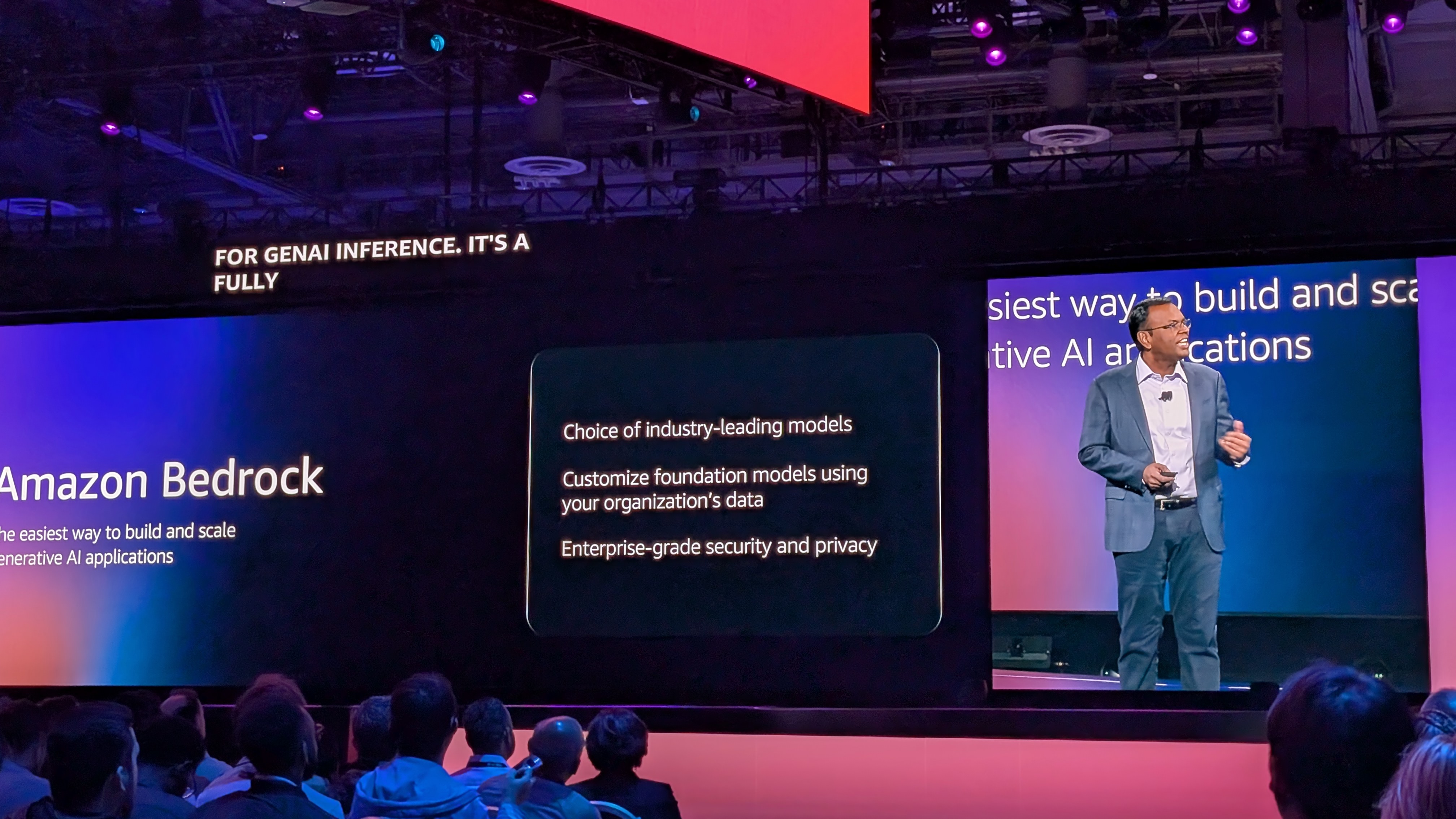

But in order to do that, of course, you need the right platform - Amazon Bedrock.

Many top companies are using Bedrock already to help deploy generative AI applications, Garman notes - and to push this forward, it's time for a couple of updates and upgrades...

"This is a space where innovation is happening really fast," Garman notes - but even so, it's still often tough to find the right model for your business.

Bedrock offers access to model distillation, where you feed prompts to a large model, then transfer its knowledge to a smaller, more nimble model that knows how to answer questions in the right way.

AWS is making that easier with a specific Bedrock Model Distillation tool - meaning models can run much faster and cheaper, including Anthropic, Meta and Amazon models.

Getting the right model is just the first step, though - Garman says you need to make sure you pick the right, customized options.

That's where Bedrock Guardrails comes in, keeping your gen AI applications focused only on the areas they should know about.

But hallucinations are also a major worry, as they could derail huge amounts of work for a business. In order to help offset this, AWS is announcing Automated Reasoning checks, a new feature which could help stop factual errors being caused by hallucinations.

The tool will look to detect issues, and will resend anything it isn't sure about back to the model - a capability you can't get anywhere else, Garman notes.

Agents are another major focus for companies of all sizes these days, and Bedrock already allows users to easily build agents for complex tasks.

In order to tackle growing customer needs, AWS is announcing support for Bedrock multi-agent collaboration - so agents can support ever more complex workflows, without complex coding.

We're still in the early stages of generative AI, Garman notes - and AWS wants to try and help power customers of all sizes.

To hear more about how Amazon is utilizing AI, we welcome a special guest to the stage - current CEO and President, Andy Jassy.

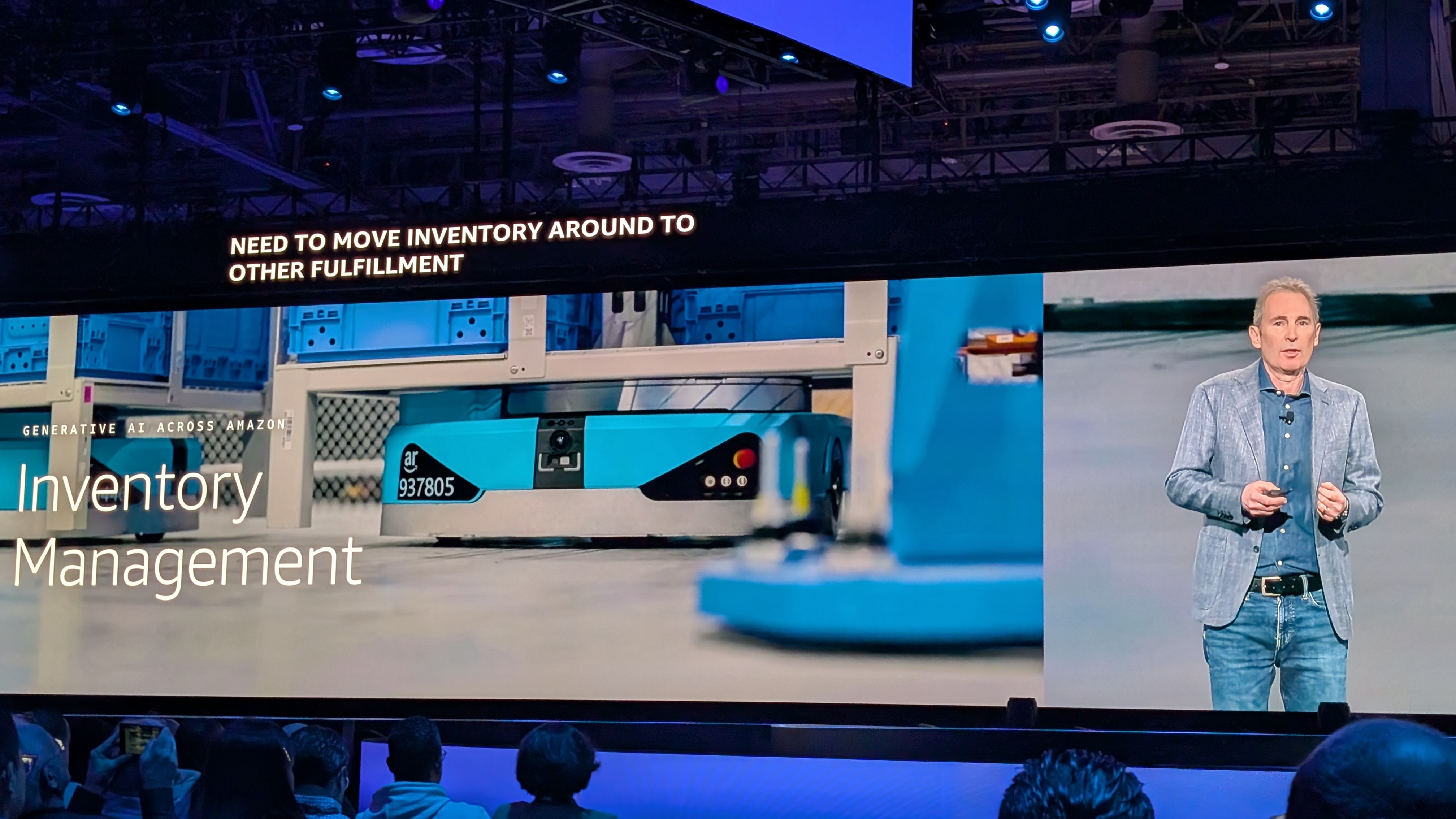

Jassy is here to talk about how Amazon itself uses AI across its business - everything from Alexa, to Just Walk Out, to AWS.

"We prioritize technology that we think is really going to matter to customers," he notes, highlighting a focus on "practical AI".

"We're trying to solve problems for you," he adds.

Jassy gives us a couple of examples around generative AI being used at Amazon, from customer service to creating pages for sellers, to inventory management.

There's also a focus on robotics - which is becoming much more important in its fulfilment centers, providing huge increases in efficiency and productivity, all while lowering costs across the board.

Jassy also focuses on Rufus, its generative AI chatbot, which is reportedly getting better and smarter at recommending products to customers across the world.

Jassy also shouts out Alexa, which he says has over 500 million active endpoints across the world, and is currently undergoing a major remodelling with new AI insights and models, to anticipate your needs and take action for you...

There's also a focus on Amazon Lens, the company's visual search engine, helping you track down the products you might have seen out and about.

There are almost a thousand generative AI applications being built, or already in operation, at AWS, Jassy says.

He says the learnings from this have taught the company that price performance is vital, but that "it is also quite difficult to build a good generative AI application".

You need the right guardrails, and the reality is that customers won't accept "wonkiness", he adds.

Also, the diversity of apps being used has been a surprise, with an appetite for varied models proving very popular, Jassy says.

"There is never going to be one tool to rule the world," he notes - choice matters when it comes to model selection.

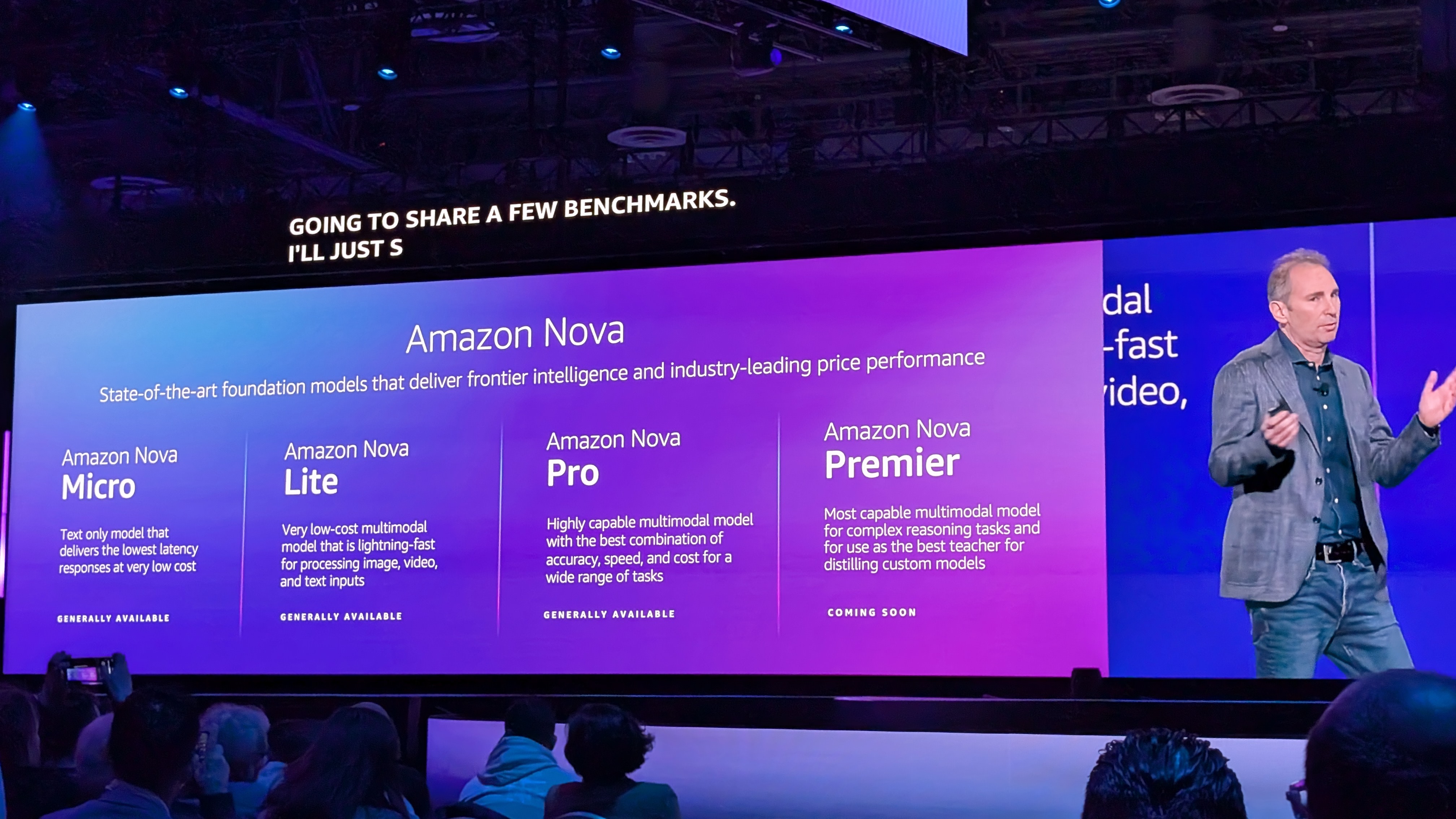

A bit of a surprise - Jassy announces the launch of Amazon Nova, state of the art foundation models.

Available in four tiers, from Micro to Premier, the models offer a range of options and choices for companies looking to get on the Gen AI train.

Jassy says the new models are very cost-effective, and the fastest in their respective classes in Bedrock, as well as offering improved accuracy thanks to on-going fine-tuning.

It's clear Amazon (and AWS) feels there is a gap in the market here to be exploited, and the Nova models are set up to take advantage of a growing need for more flexible models.

There are also two other models being announced - Nova Canvas, a state-of-the-art image generation model, allowing users to edit images using natural language text inputs - and Nova Reel, for video generation - providing "studio-quality" video, with features such as camera motion control, 360-degree rotation, and zoom.

But that's not all - Jassy says there's a lot coming on Nova, with a second-generation already in the works.

There's also a Speech-to-Speech model coming in Q1 2025, and Any-to-Any later in the year - a true multi-modal model.

Choice is important, Jassy notes - much like in real life, you don't rely on one specific person or company for everything.

"At AWS, we're going to give you the very best combination of all of these - like we always do," Jassy adds.

With that, he's off, to a rapturous reception, and Garman returns.

Garman now turns to developers - and specifically, Amazon Q.

Three new agents are coming to Amazon Q for developers, including generating end-to-end user tests, accurate documentation, and code reviews.

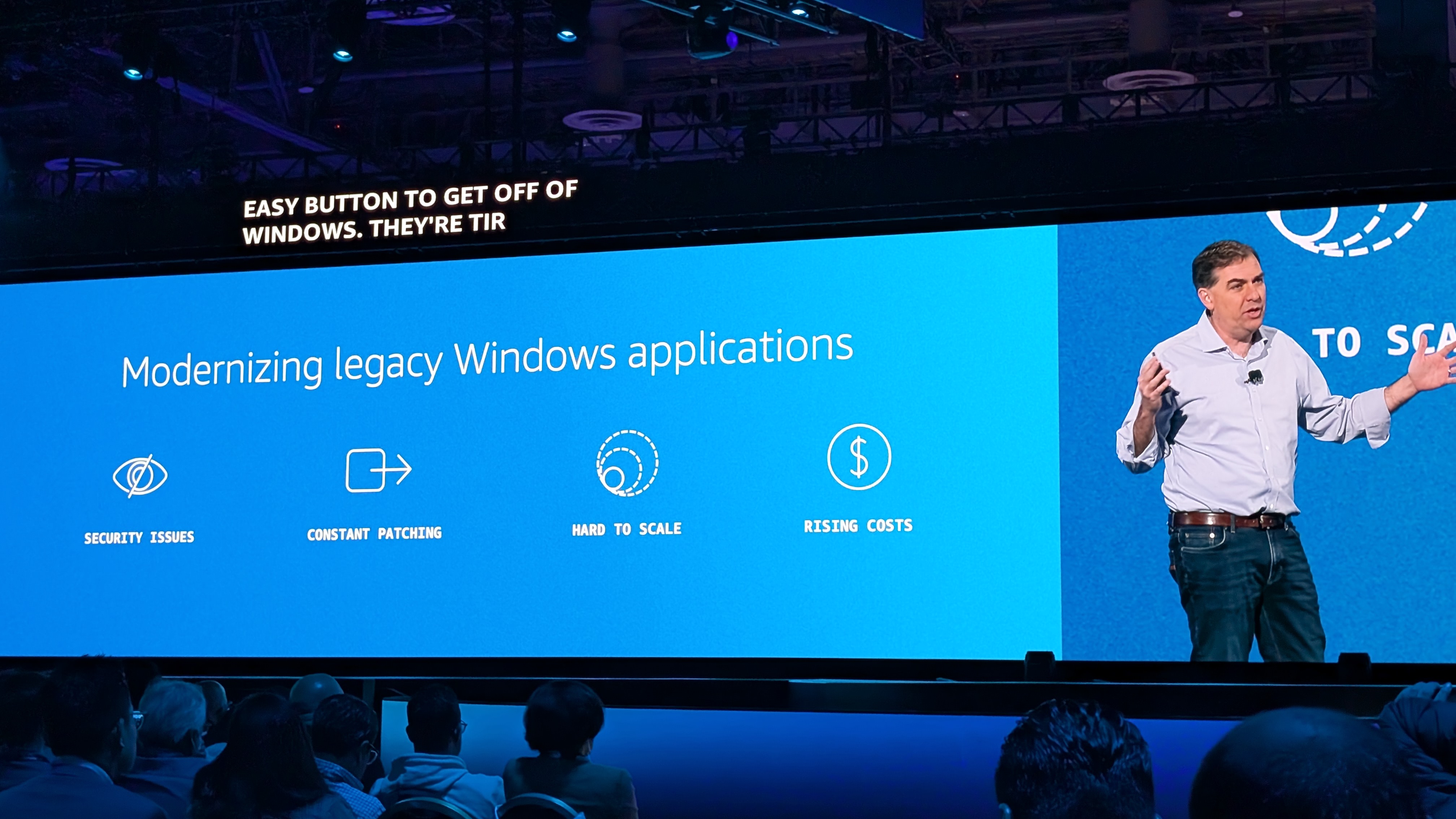

Q Developer is also getting transformation support for .NET apps, a great help for modernizing legacy Windows applications by moving them from Windows to Linux.

There's also a new elastic service for moving VMware subscriptions to AWS, along with a new tool for transforming VMware workloads into cloud-native architectures.

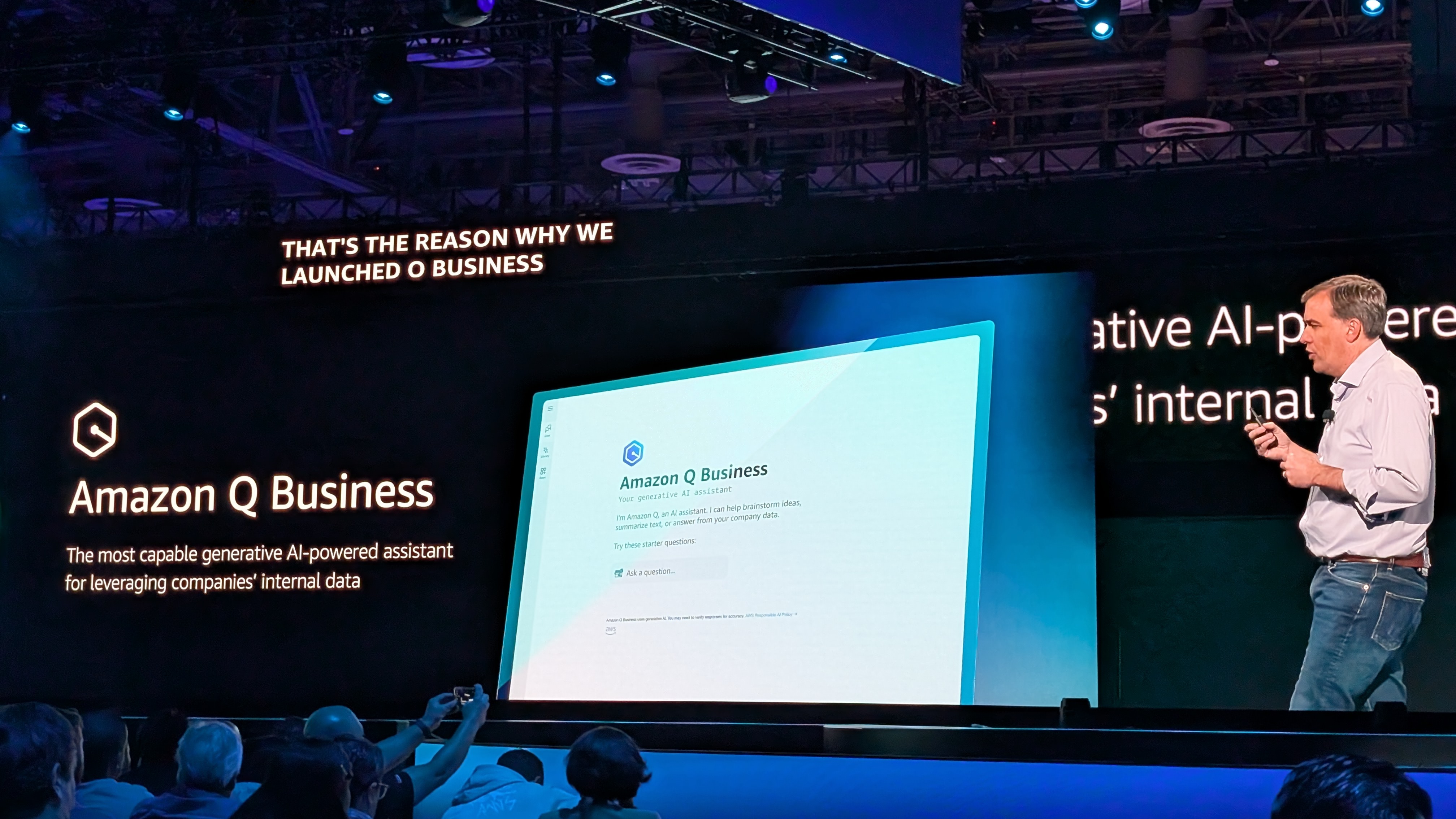

Now we turn to Q Business, which Garman says can benefit roles across your entire business.

Looking to combine all your company's internal data sources, Q Business creates an index of all your information - which Garman says is a service only AWS provides - but it can be tricky to track down exactly what you need.

To make this less painful, Garman reveals Amazon Q Business integration with Amazon QuickSight, bringing together structured and unstructured data out of their siloes.

There's also a push to automate complex workflows, and in order to help out, Amazon Q Business will soon be able to do just this, automatically building workflows based on documents or recordings, helping streamline the most annoying part of your job.

Garman shows a demo where Q Business is able to create an automated workflow simply by watching a video of how it's done - a great boost for companies everywhere.

We're on the final stretch now - it's time to talk about analytics - particularly, Amazon SageMaker.

Garman says SageMaker is being used more and more to prepare data for AI and ML workloads - it's changing how customers think about analytics overall, he notes, as historical analytical data is being used to train these models.

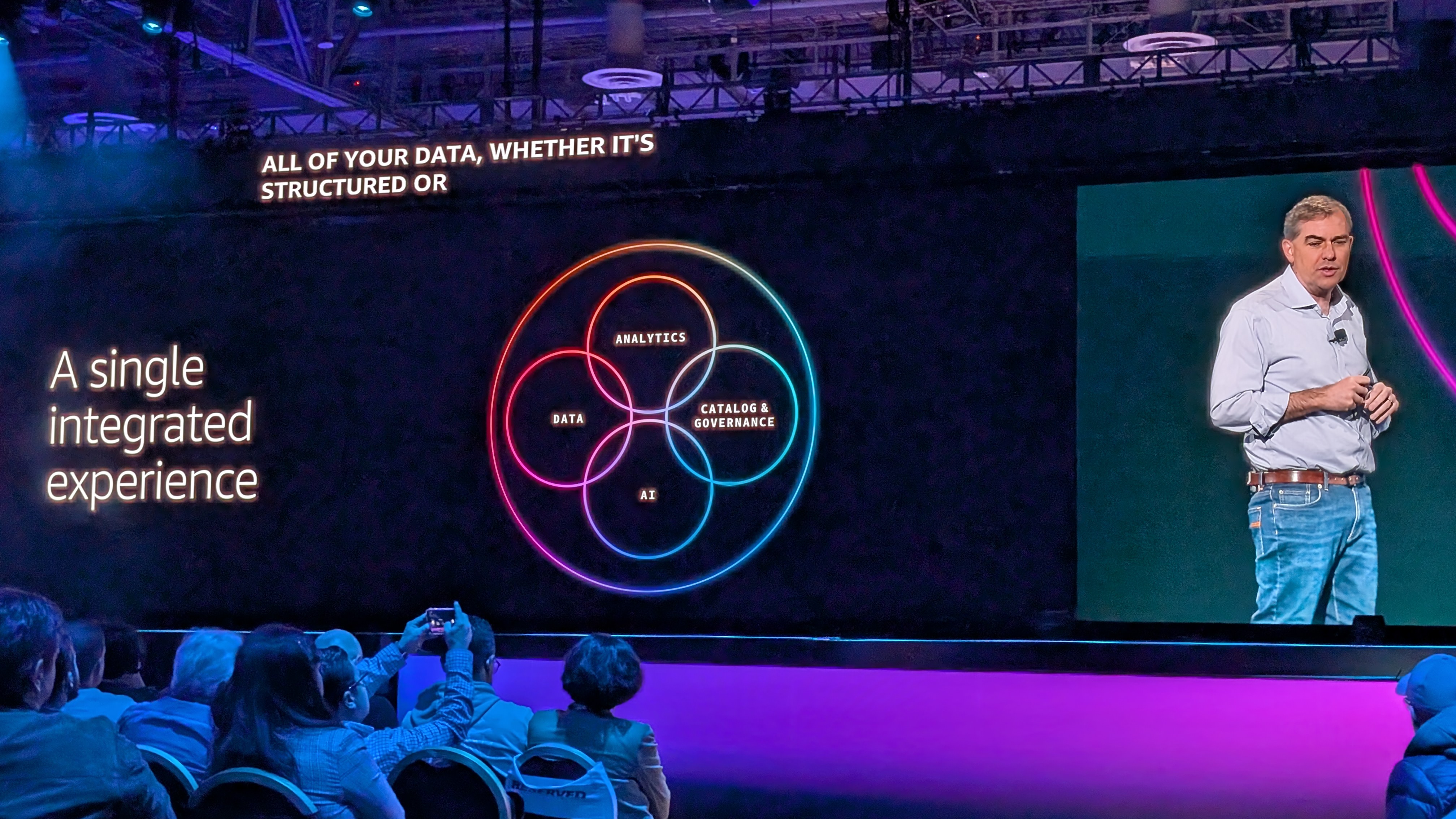

How can we make this easier, Garman wonders - bringing together analytics, data governance, AI and the data itself.

This is a new generation of SageMaker, he notes, a center for all your data, analytics and AI needs - taking the program everyone knows, and expanding it in a whole new way.

There's also SageMaker Unified Studio - a new, single environment to access all of your organization's data, and act on it in new ways.

Zero-ETL for applications is another crucial new announcement, hopefully giving users much greater access to, and analysis of, their data across popular SaaS applications, without needing to build data pipelines.

Finally, there's SageMaker Lakehouse - a new way to simplify analytics and AI with an open, unified and secure data lakehouse - providing easy access right across all of your data.

And with that, it's a wrap on the opening keynote of AWS re:Invent 2024!

"I have never been as excited as I am today about the future," Garman notes, "the amount of innovation we're seeing out there is really incredible."

"There has never been a better time to be innovating."

That was a mammoth undertaking, so well done for sticking with us.

We're off for a coffee - and a deeper dive into some of the news we've heard about today - and we'll be back tomorrow, with live coverage of the day two keynote from Dr. Swami Sivasubramanian, VP of AI and Data at AWS.

Good morning from day 2 of AWS re:Invent 2024!

It's another early start for us, as we head out for this morning's keynote from Dr. Swami Sivasubramanian, VP, AI and Data, AWS.

What's on the agenda? More AI for sure, but probably also a deeper dive into some of the news from yesterday, possibly on SageMaker, Aurora and Amazon Q...stay tuned to find out!

It's another packed house for Dr. Swami Sivasubramanian's keynote this morning - and a slightly gentler music choice - more Motown than EDM today, which is a relief.

After an introductory video extolling the power of innovation over the years - all the way up to the current AI era - the lights go up and Dr. Swami Sivasubramanian, VP, AI and Data, AWS, takes to the stage.

"Disruption is the new normal, and rapid innovation is the new high-table stakes," Sivasubramanian declares, noting how today's technology is only possible due to the big steps taken beforehand.

He mentions the "new era of AI" as a key age of innovation, with "massive potential going forward" - but notes now is only the beginning.

Looking back over his 18-year career at AWS, Sivasubramanian notes how even he didn't know where his early work would lead - no one could have expected the huge steps forward offered by AI.

There's an "enormous opportunity" to combine AI, data, analytics and more in a whole new platform, he notes.

Amazon SageMaker is set to play a huge role in this, with a "next generation" of the platform announced yesterday.

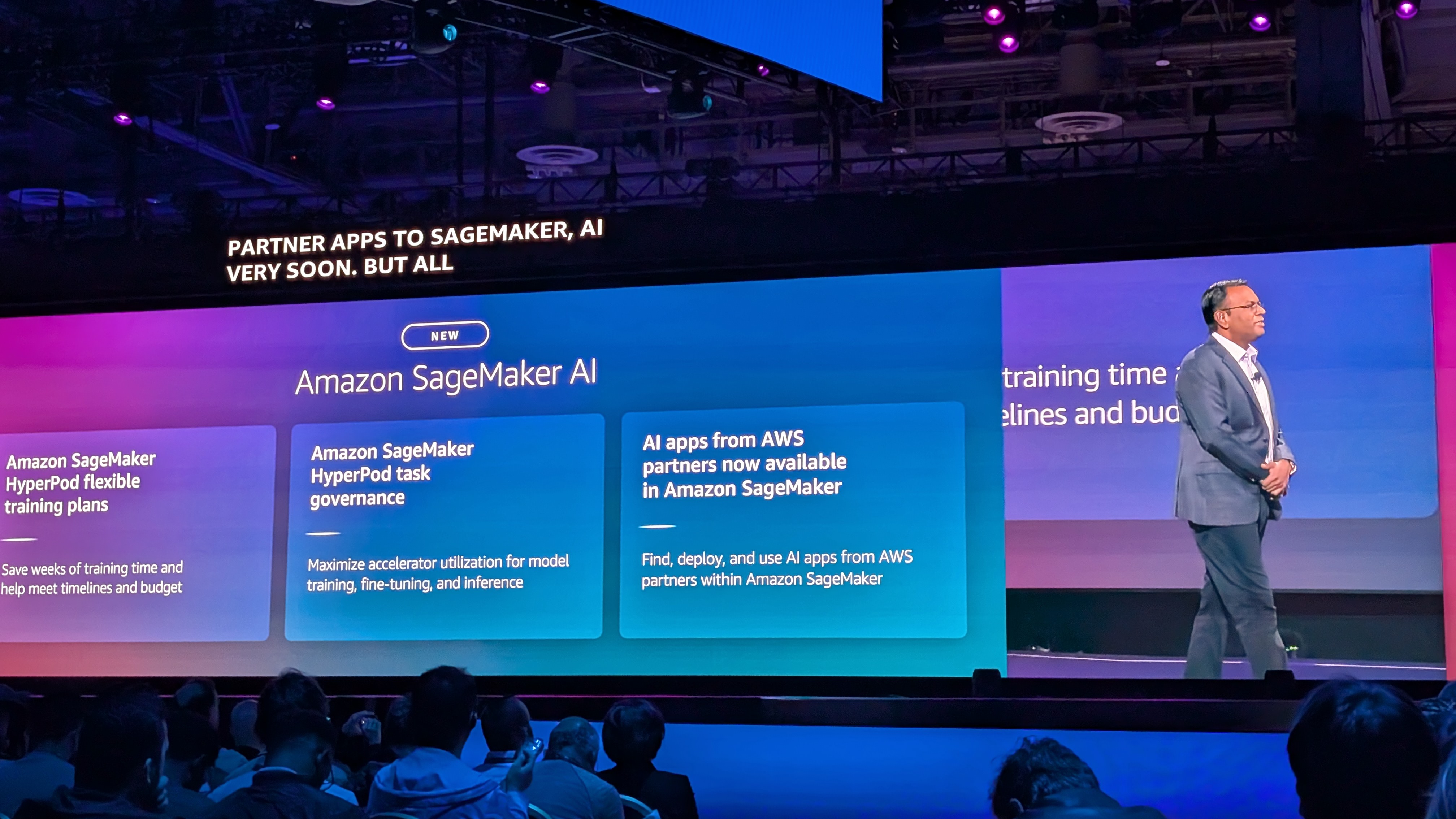

The new Amazon SageMaker AI is helping thousands of customers to train and deploy their foundational models on their data, Sivasubramanian notes.

But as GenAI gets bigger and more powerful, there's also a need for new tools - and that's why we're here today.

Last year, AWS revealed SageMaker HyperPod, and it's now getting a number of new features to deal with the fast pace and growth of AI, Sivasubramanian reveals.

This includes SageMaker HyperPod flexible training plans, which allow users to quickly build a training plan to automatically reserve capacity. This will help save weeks of training time, and help meet timelines and budget.

Next up, to help with the challenge of allocating compute resources and to boost utilization of accelerated compute, Sivasubramanian reveals SageMaker HyperPod task governance.

This will help maximize compute resource allocation by automating the prioritization and management of AI tasks, ensuring high-priority tasks are completed on time.

Customers are also keen to make sure the third-party apps they use for AI development are maintained, without disruption - as putting them together with SageMaker can be time-consuming.

To address this, Sivasubramanian reveals AI apps from AWS partners are now available within SageMaker environments, including apps such as Comet, Deepchecks, Fiddler and Lakera.

"These apps are going to help you accelerate the model development life cycle," he declares.

After a brief interlude from customer Autodesk to hear about how it is using AWS to develop its own foundational models, Sivasubramanian returns.

Now it's time to talk GenAI apps and inference, which has become a hugely important area for companies building and running new models.

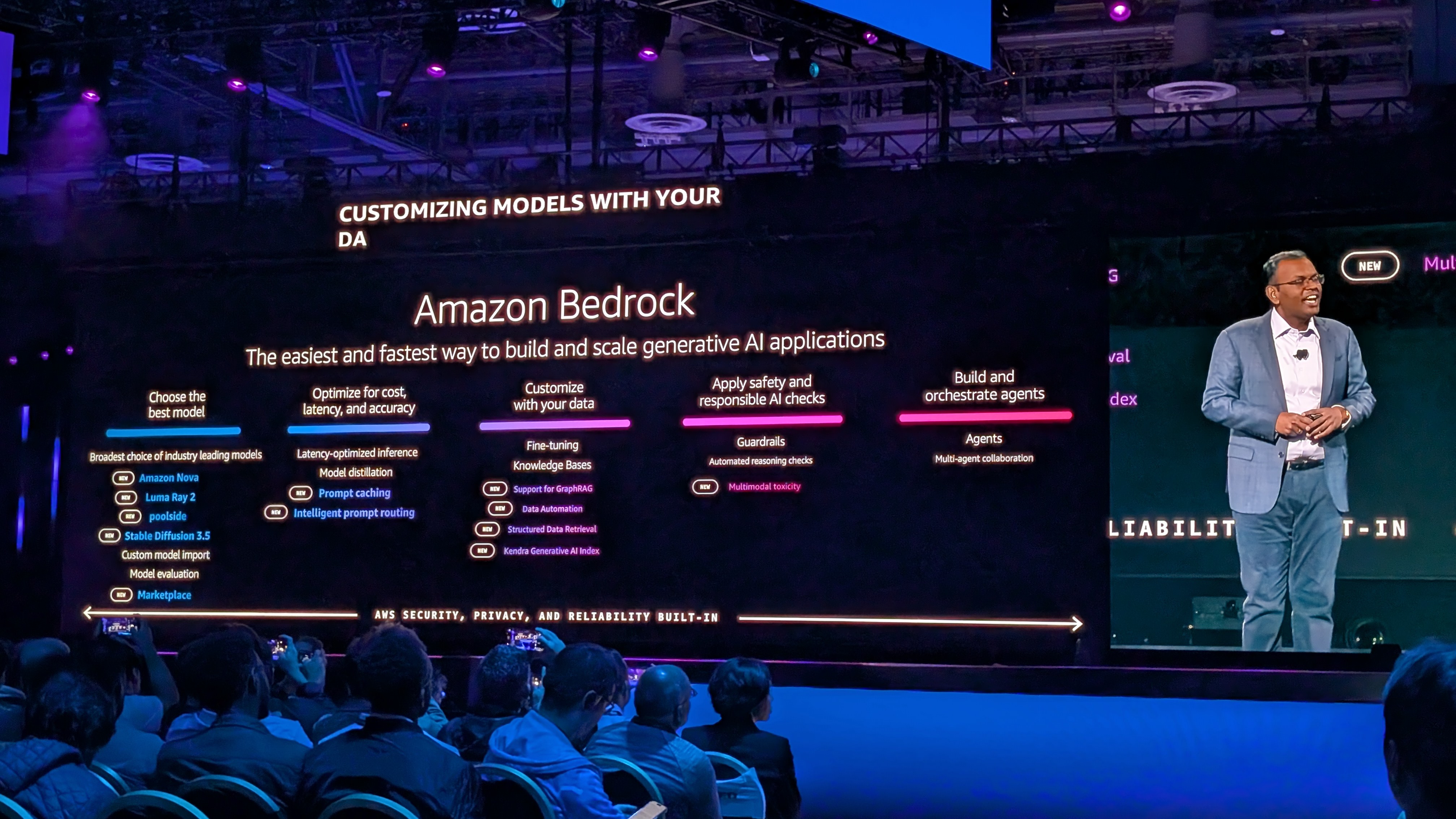

Sivasubramanian says there is often a lack of proper tools to help build and scale AI apps - but that's where Amazon Bedrock comes in.

AWS is hoping to tackle a number of key developer challenges - starting with selecting and optimizing the right models.

Amazon is keen to continue providing support for the latest and greatest AI models, including those from Mistral, Meta, Stability AI and Anthropic - along with its newly-announced Nova series of foundational models.

And now, it's adding poolside Assistant, Stability AI's Stable Diffusion 3.5 and Luma AI video generation to Amazon Bedrock, offering a whole heap of new options for developers.

Next up, Sivasubramanian turns to looking at more emerging and evolving smaller models, which could be perfect for a specific need, but can be hard to track down.

To mitigate this, he reveals Amazon Bedrock Marketplace, featuring over 100 top models within Bedrock, allowing customers to test and discover models. Once deployed, users can also enjoy Bedrock APIs, tools and security.

"We are committed to giving you access to the best model for all your uses," Sivasubramanian says.

Optimizing can be an incredibly tough challenge for businesses of all sizes, Sivasubramanian notes, and AWS is keen to help.

He runs through a number of examples of specialized models, and how AWS wants to make the process smoother, without having to compromise on performance - via new prompt caching in Amazon Bedrock, allowing users to cache repetitive context in prompts across multiple API calls.

There's also new Intelligent Prompt Routing on Bedrock, which can route prompts to different foundation models, optimizing response quality, and lowering costs.
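To make the routing idea concrete, here is a minimal sketch of sending simple prompts to a cheaper model and harder ones to a more capable model. It is not how Bedrock implements the feature and calls no AWS API: the model names, prices, keyword hints and the `route` function are all hypothetical, and a crude keyword-and-length heuristic stands in for whatever quality prediction a real router would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                  # hypothetical label, not a real model ID
    cost_per_1k_tokens: float  # made-up price, for illustration only


SMALL = Model("small-fast-model", 0.1)
LARGE = Model("large-capable-model", 1.0)

# Hypothetical signals that a prompt needs deeper reasoning.
HARD_HINTS = ("explain why", "step by step", "compare", "prove")


def route(prompt: str, max_simple_words: int = 40) -> Model:
    """Pick the cheaper model unless the prompt looks complex."""
    text = prompt.lower()
    looks_hard = len(text.split()) > max_simple_words or any(
        hint in text for hint in HARD_HINTS
    )
    return LARGE if looks_hard else SMALL


if __name__ == "__main__":
    for p in (
        "What are your opening hours?",
        "Compare these two contracts and explain why they differ.",
    ):
        print(f"{route(p).name}: {p}")
```

The trade-off is the one described on stage: most everyday prompts never touch the expensive model, so average cost falls, while harder requests still get the stronger one.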

Sivasubramanian now moves on to Retrieval Augmented Generation, or RAG, a key component of building new Gen AI-powered apps and services.

RAG may look simple, but in real life it does require a lot of manual heavy lifting, Sivasubramanian notes, making it hard to build RAG easily.

Luckily, AWS is announcing new Kendra Gen AI Index, connecting to more than 40 enterprise data sources with Bedrock Knowledge Bases for structured data retrieval, meaning users can now easily build more accurate and engaging outputs.

That's not all, though, as Sivasubramanian moves to structured data. Making this accessible for RAG is quite complex, as you can't just look up a single line or table.

Bedrock Knowledge Bases now support structured data retrieval, he reveals, one of the first fully managed, out-of-the-box RAG solutions to help get to the data you need, integrating structured data for RAG.

Also, there's new GraphRAG support on Bedrock Knowledge Bases, meaning users can generate more relevant RAG responses for Gen AI apps using knowledge graphs, offering richer and more accurate answers.

Finally, there's Bedrock Data Automation, which will transform unstructured multimodal data for gen AI apps and analytics - again offering the chance to create richer and smarter answers.

All these new use cases for Gen AI do require controls, though, Sivasubramanian notes, highlighting the work done by Bedrock Guardrails, which offers configurable safeguards and automated reasoning checks.

But also coming today is Amazon Bedrock Guardrails Multimodal toxicity detection - configurable safeguards for image content, cutting out violence, hate or misconduct images.

Sivasubramanian now moves on to talk about agents - the latest big thing in the AI world.

Amazon Bedrock Agents can already execute multi-step tasks across company systems and data sources - and now support multi-agent collaboration too.

Bedrock offers the easiest and fastest way to build and scale gen AI applications, Sivasubramanian says - so get involved today.

After another customer showcase, Sivasubramanian returns, and it's time to move on to Amazon Q.

The company's virtual assistant got a lot of attention in Matt Garman's keynote yesterday, and Sivasubramanian has a number of extra announcements to make.

Sivasubramanian notes that the capabilities of Q mean it has wider potential than everyday developer tasks, and the company is keen to embrace this expansion.

First up to help with this is making Amazon Q Developer available in SageMaker Canvas - meaning developers can use natural language prompts to build, evaluate and deploy ML models, helping democratize ML development, even to non-experts.

Amazon Q Business also helps empower users, Sivasubramanian notes, and to help boost this, he announces Amazon Q coming to QuickSight scenarios - so users can ask Q questions about complex business problems using natural language, quickly identifying relevant data and performing a step-by-step scenario analysis.

It's time for some demos - Sivasubramanian welcomes Shannon Kalisky from AWS to the stage.

She's here to run us through some SageMaker demos, "bringing order to the chaos" as she puts it.

Sivasubramanian is back, and to close up, he turns to something aside from technology.

Every innovation needs the right conditions to thrive, he notes - potential cannot be realised if it exists in an unhealthy environment.

Referencing Malcolm Gladwell's "tipping point" thesis, he highlights how the need for education is paramount for the next generation. Students need the right environment to flourish in the gen AI era, he says.

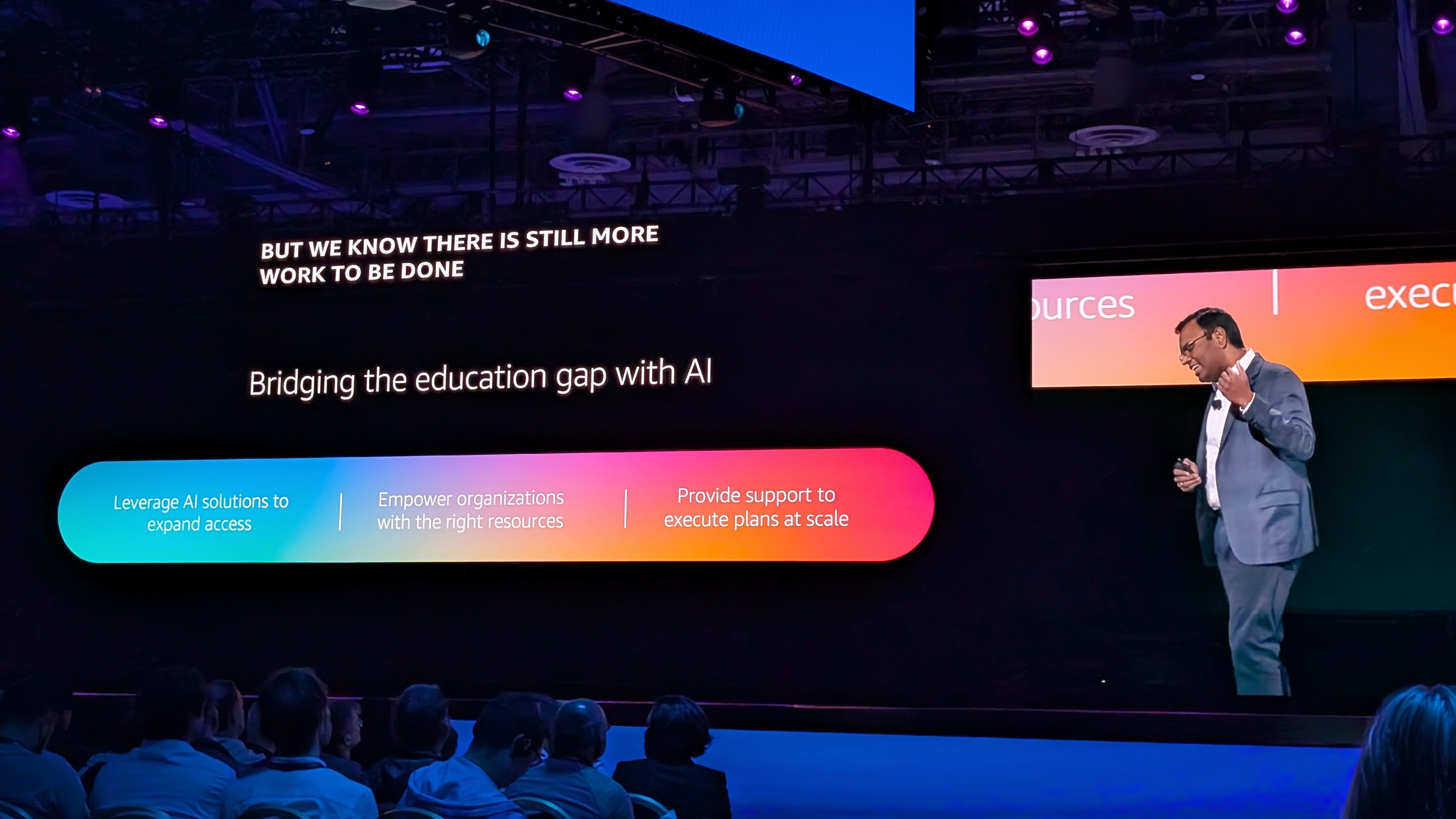

AWS has a long-standing commitment to boost learning and education, especially from poorer environments - and the company is going further today.

The company has provided free cloud computing skills training to 29 million people worldwide - a year ahead of schedule, Sivasubramanian notes.

And to go further, he announces an expansion to the Amazon Education Equity Initiative, which will provide up to $100m in cloud credits to boost education across the world, helping reduce the financial barriers to building AI and cloud computing apps.

Sivasubramanian says this is a "historical moment of convergence...we are not just shaping our present, but laying the groundwork for new innovations to take flight."

And that's a wrap! Another jam-packed keynote, full of intriguing new announcements and services. We're off to track down some more information, but we'll be back tomorrow for the closing keynote, hosted as always by Amazon CTO Werner Vogels.

If you want to catch up on all the top AWS re:Invent 2024 news, you can check out our news round-up list here.

Good morning from the final day of AWS re:Invent 2024! We're down at the expo to hear the day three keynote from Dr Werner Vogels, VP and CTO at Amazon.com.

If you've never experienced a Werner keynote before, stay tuned - it's certainly a unique experience...

We're in and seated - today's music choice is a Vegas tech conference staple - a string quartet covering some familiar pop tunes, a slightly more appropriate choice for a slightly less full keynote theatre...last night's parties must be hitting hard.

After a packed setlist that includes classical covers of Rihanna, Coldplay, Nirvana and Snoop Dogg...the lights go down, and it's keynote time.

As we mentioned, and seasoned AWS heads will know, Werner keynotes are always...unique, and this year's video introduction runs us through a fantastical journey of innovation, complexity and...pizza.

It's exactly what it sounds like.

Werner is here! And for anyone curious - he's wearing an L9 t-shirt (lots of AWS fans will be keen to know).

"Every year I get more humbled by your enthusiasm," he says, harking back to his 20 years at Amazon, including 13 re:Invents.

Vogels runs us through his initial plan for "21st century architectures" set out at the very first AWS re:Invent back in 2012.

Featuring ideas such as letting business levers control the system, and architecting with cost in mind, it's clear that while some things have clearly changed - a lot has stayed the same...

In order to take proper control of your systems, you need to control complexity, Vogels says - he introduces the idea of "simplexity" - and is here to talk to us about how Amazon manages it.

"Not all complexity is created equal," he notes - intentional complexity can serve a key purpose, but "unintended complexity" can be a real issue.

"The key is to recognize both types," he says.

There are a lot of warning signs, however - from declining feature velocity and frequent escalations, to time-consuming debugging and inconsistent patterns.

"Simplicity isn't accidental - keeping things simple, while adding complexity, requires discipline," he notes.

Vogels introduces the CTO of Canva, Brendan Humphreys, who talks us through how the company dealt with its explosive growth, and embraced Amazon services such as DynamoDB to deal with this.

Vogels returns, and it's time to talk the next steps.

"Evolution is fundamental," he notes, "even Darwin had figured out that the only way to a successful organization was through a series of slight modifications."

He also quotes Heraclitus, who noted the world is in a constant state of flux.

If you don't continue to evolve and improve, customers may see your company as falling behind - in order to help with this, Vogels introduces his six lessons in "simplexity".

Lesson number one - make evolvability a requirement, you need to know your systems will change over time.

Constructing architectures that can be evolved in a controllable way is crucial if you want to survive complexity, he notes.

Vogels highlights S3 as an example of a system that looks simple, but has continued to grow more complex under the covers.

Starting with 6 microservices at launch in 2006, it now has more than 300.

Don't ignore the warning signs - small things can grow, and can become more complex to manage and understand, Vogels warns.

He shows off a few examples internally at Amazon, and notes you have two options when you get new functionality - extend, or build a whole new service - although this requires more effort, and can improve complexity!

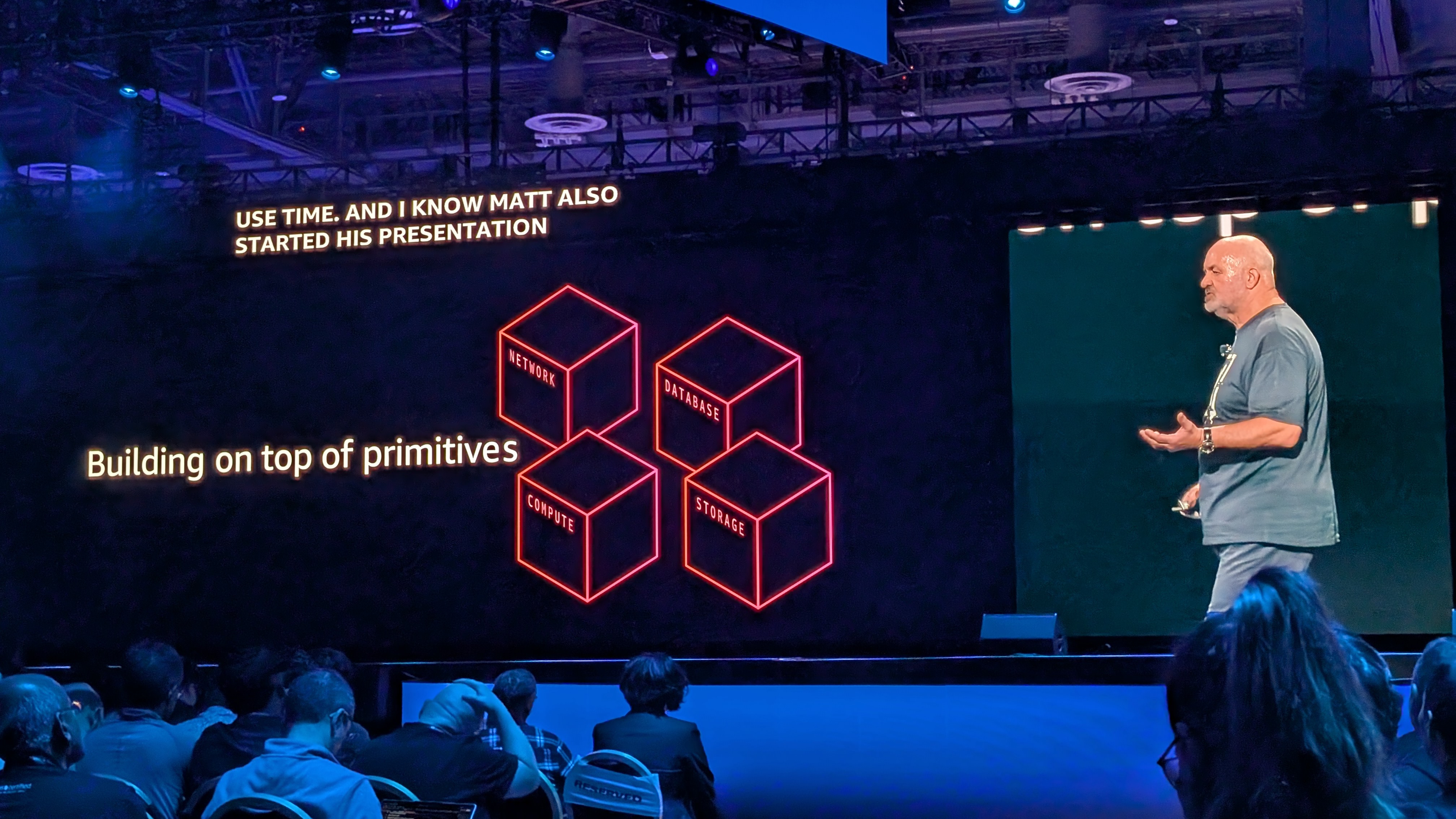

This leads us to lesson 2 - break complexity into pieces.

Now it's time to look at how you can align your organization to deal with complexity - and to talk us through it, we welcome distinguished AWS engineer Andy Warfield.

He's here to talk mainly about how Amazon S3 in particular has been a significant work in progress, and brought a wide range of challenges, even for a company of AWS' size.

"Your organization is at least as complex as the software you build - and we need to give them the same attention," he notes.

Even the most successful teams are scared they're doing things wrong, he reassures us.

Focusing on ownership is another key facet, he notes. Ownership can be hard, but it can be worth it when the success is realized.

Vogels is back, and moves on to lesson 4 - organizing into cells - even smaller building blocks!

Making sure operational complexity is handled is also crucial, he says - Amazon is a big believer in this so-called cell-based architecture.

Breaking things into smaller building blocks that operate independently can be vital in reducing the scope of impact, essential in complex architectures, Vogels notes.
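A small sketch can show why cells limit the scope of impact. It assumes customers are pinned to one of a fixed number of independent cells by a stable hash of their ID; the cell count, the customer IDs and both function names are hypothetical choices for illustration, not a description of how Amazon actually assigns cells.

```python
import hashlib
from collections import Counter

NUM_CELLS = 8  # hypothetical number of independent cells


def cell_for(customer_id: str, num_cells: int = NUM_CELLS) -> int:
    """Map a customer to a cell with a stable hash (same ID, same cell)."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_cells


def affected_fraction(customers, failed_cell, num_cells=NUM_CELLS):
    """Share of customers whose traffic lands on the failed cell."""
    hit = sum(1 for c in customers if cell_for(c, num_cells) == failed_cell)
    return hit / len(customers)


if __name__ == "__main__":
    customers = [f"customer-{i}" for i in range(10_000)]
    # Show how evenly the hash spreads customers across cells.
    print(Counter(cell_for(c) for c in customers))
    # Simulate losing a single cell.
    print(f"{affected_fraction(customers, failed_cell=3):.1%} affected")
```

With eight cells, losing one should touch roughly an eighth of customers while the rest carry on untouched, which is the reduced scope of impact Vogels describes.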

Uncertainty is another key issue, Vogels notes, so you need to offset this by designing predictable systems.

An event-driven architecture can be incredibly useful to deal with this, he outlines - describing how Amazon took a simple approach to making changes and updates, meaning unexpected incidents are rare.

Automating complexity can be another key lesson, Vogels says - but what exactly should you automate?

Humans need to be in the loop on some processes, but for lots of functions, automating can be the key, he believes.

Often, it's humans who make the mistakes, not automation, he notes - especially in security, a key Amazon automation pillar.

AWS deals with over a trillion DNS requests per day, and spots over 124,000 malicious domains on a daily basis - all through an automated process that humans simply wouldn't be able to replicate.

"Automation makes complexity manageable," Vogels declares.

Vogels outlines how using agents has helped AWS greatly simplify its ticketing triage system, helping find the right answers to critical issues much more quickly and simply.

That's Vogels' six lessons in simplexity - time for the audience to go and take his learnings to their own businesses.

After another customer showcase, Vogels returns, with the message of "the burden of complexity" - making sure AWS is taking more of that by building simpler and more effective systems.

He highlights Amazon Aurora as a particularly good example of this, offering customers a much smoother way of doing things - especially with its DSQL expansion, announced earlier this week.

Vogels takes us back through some of the key features of Aurora DSQL, which makes it ideal for companies looking to cut down on their complexity.

Overall, the service will offer customers a much smoother and more effective way to get the most out of the data they need.

Vogels harks back to AWS CEO Matt Garman's opening day keynote about offering customers building blocks - and adds that time may be the most important building block of all.

And that's a wrap on Vogels' keynote - he bids us farewell with another video.

We're off to hit the show floor and mop up any extra news - remember you can view our list of AWS re:Invent 2024 news here if there's anything you think you might have missed!

That's a wrap on AWS re:Invent! Thanks for joining us, we'll see you again soon.