The weather at Apple Park in Cupertino, California couldn’t have been more perfect for hosting Apple’s WWDC 2022. It was sunny, with a temperature of around 75 degrees Fahrenheit.

The almost two-hour-long keynote was prerecorded and livestreamed for a global audience of millions. Still, a large cloud loomed overhead.

On the surface, Apple executives successfully clicked through software updates for all of the company’s major platforms: customizable lock screens for iOS 16, resizable app windows for iPadOS 16, app wrangling with Stage Manager in macOS Ventura, and running and AFib metrics for watchOS 9, to name some of the biggest features for each platform. We even got a new MacBook Air with the powerful M2 chip.

But the cloud still loomed overhead: The augmented reality (AR) cloud refused to pass. Looking at everything Apple showed off during its keynote, it’s hard to shake off the feeling that all of these features are a march toward its long-rumored mixed reality headset.

As my colleague Ian Carlos Campbell noted in Slack during the keynote, Stage Manager for macOS has serious VR vibes going for it. The way apps are collected off to the side of the screen sorta resembles a heads-up display (HUD), the kind of UI and UX that would be perfect for a headset that wraps around your eyes and peripheral vision. And that’s not all: there were AR hints everywhere during Apple’s WWDC 2022 keynote.

Mixed controls

Apple is known as a product company. Its products (Mac, iPod, iPhone, iPad, Apple Watch) have reshaped society again and again, and they will go down in history for it. The minimal, polished products get celebrated, but Apple’s greatest contribution, in my opinion, is actually computer input.

Yes, Steve Jobs copied the mouse from Xerox, but it was the Macintosh that popularized it. Apple made touchscreens mainstream (and actually good) with the iPhone and iPad. Voice control came next with Siri. And I guess you can throw Apple Watch’s Digital Crown in there.

Each new input method has been profound, offering intuitive ways to interact with the digital world. But how do you control a mixed reality headset? Current VR and AR headsets have settled on hand controllers or hand-tracking. I think Apple’s mixed reality headset will go further. In iOS 16, Apple is beefing up its Dictation feature with simultaneous voice and touch input. Apply that to a mixed reality headset where you can, perhaps, control features with hand-tracking and voice without interruption, and you have the formula for powerful headset operation that blows away even the Meta Quest 2.

Intelligence everywhere

Apple’s machine learning push has been years in the making, and because mixed reality is a platform with limited input, AI and machine learning will need to provide more of an assist than they do on smartphones, tablets, and computers.

Look no further than Live Text in iOS. Yes, Apple cloned the feature from Google, but it has only become smarter and more useful. Today, Apple announced that Live Text now works on video: pause on a frame and you can select, copy, or act on its text by tapping it, just as you can in a still image. Take that function and bring it into a headset. What if you could just reach out and tap a phone number you see in a classified ad or on a poster, and your Apple headset gave you the option to call or text it? What if you didn’t even need to air-tap it, and it just worked when you looked at it?

Live Text can convert units and currency or translate a language, but the barrier right now is still your device’s screen. You need to take a photo (or open the camera app on supported iPhones), tap the Live Text button, and then select an action. A mixed reality headset would call for more natural interaction: live translations or currency conversions handled by its many cameras whenever you look at something. Doesn’t seem that unrealistic to me.

As it stands, Apple’s Live Text is still behind Google Translate’s real-time Lens capability. But if Apple were to come up with a clone, it’d be a damn good one. Below is an image of some Hello Kitty pasta that I got at a Japanese mart. I can’t read it. But Google Translate can, and does so live using augmented reality.

Sharing everything

Sharing content was easily the connective theme running through all of Apple’s WWDC 2022 platform announcements. There’s more SharePlay support in third-party apps, more APIs, and easier ways to share stuff from anywhere and everywhere. It got to the point where a bunch of us at Input joked about how annoying all the collaboration Apple was pushing had become, especially the iWork stuff, which seems made for the Apple employees who live and breathe iWork.

By design, AR and VR headsets are not very collaborative. One person wears the headset and then they’re gone in the virtual world (I hate to call any virtual world a metaverse). Meta is making major efforts to make its Quest 2 more work- and collaboration-friendly; the bones are there, but the execution can still be clunky.

My gut tells me SharePlay and sharing will be a key part of Apple’s mixed reality headset. Bloomberg’s Mark Gurman says that Apple is planning “a VR version of FaceTime that can scan a person’s face to replicate their movements in a Memoji, a new VR version of Maps, and rOS variants of core Apple apps like Notes and Calendar.” Gurman also adds that the headset can be used to “extend a Mac’s display, bringing it into 3D.”

Watching TV and movies on a huge virtual display with friends and family and goofing around with AR Memoji superimposed on each other’s heads? It already passes my sniff test — it’s such an obvious application.

Oh, and that Visual Look Up feature that’s going viral on my Twitter feed? The one where you can cut out a French Bulldog from a photo and drop it into iMessage as a sticker? Picture doing that in real life. Sounds ridiculous, right? Not when you look at what Snapchat has been doing with its AR lenses and Spectacles.

Or how about iCloud Shared Photo Library? You take POV photos with your headset and they’re shared with all your family members instead of sitting unseen on the device. Makes sense.

Virtual maps

Even more obvious is Apple Maps and the 3D maps Apple has slowly been building up over the years. Analysts believe that navigation is a no-brainer application for Apple’s mixed reality headset, and the improvements to 3D imagery surely feel like a steady march toward it. Navigation was a major selling point for Google Glass a decade ago; Google was way ahead of its time. Everything that you see in Apple Maps in iOS 16 is likely a foundation for some kind of mapping system in the mixed reality headset. Not to mention, Apple also has RoomPlan, an augmented reality API for scanning and mapping out rooms. The clues are right there in front of us.

In Tripp Mickle’s After Steve: How Apple Became a Trillion-Dollar Company and Lost Its Soul, there’s a section about Jony Ive’s vision for a fully autonomous electric car. “As the imaginary car sped forward, Ive pretended to peer out its window. ‘Hey, Siri, what was that restaurant we just passed?’”

Ive’s concept was for a self-driving car. But if you know Apple, you know it often takes the research and development from one failed project and applies it to another. Remember, the iPhone was born from a gigantic table-sized touchscreen interface. Robust mapping, Siri intelligence, and voice control could provide the kind of real-life search that Ive imagined, but through a headset.

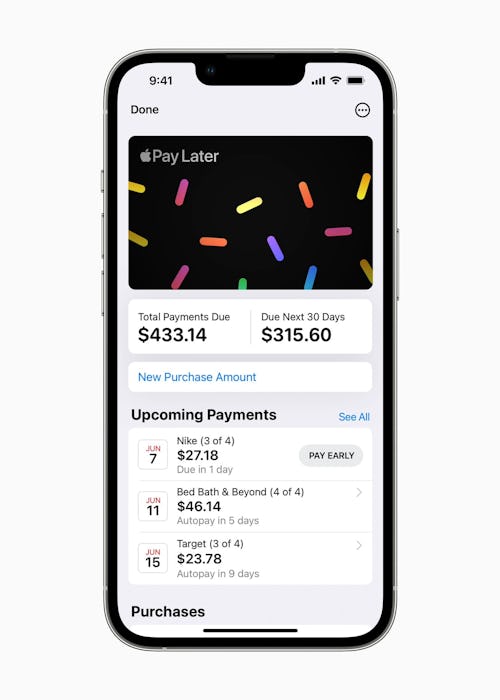

Easy headset payment

There are rumors that Apple’s headset could be really expensive — like $3,000 expensive. And how would you pay for that? Apple has a solution: Apple Pay Later. Buy its headset with Apple Pay Later and — boom — you can split the cost into four interest-free payments with zero fees.

I can already hear the “four easy payments” echoing in my ears. It’s a little depressing, but Apple hardware is only getting more advanced and more expensive. Installment plans and whatnot are the only way for many people to afford them.

Too far?

It’s possible that I’m reading too much into Apple’s WWDC 2022 announcements, but Apple is not a company that just wings anything. AR, VR, and mixed reality are platforms that will be built over decades. A headset might launch as early as next year, but Apple will be in it for the long haul.

Apple has been laying the groundwork for this mixed reality future for the better part of the last decade. ARKit, LiDAR scanners in iPhones and iPads, and skeletal tracking were all baby steps. You could also read Apple’s advances in entertainment (original content on Apple TV+, plus courting hardcore game developers like the people behind Resident Evil Village with better graphics through its new Metal 3 API) as moves to build the backbone for a mixed reality headset. Meta has made content consumption and gaming core to the Quest 2. If Apple’s headset doesn’t have its own Beat Saber moment, the kids are not gonna go into debt trying to Apple Pay Later it off.

All of this is to say, the signs were all there at WWDC 2022. Apple’s AR/mixed reality future is coming and everything we’re using now is just a stepping stone to it.
