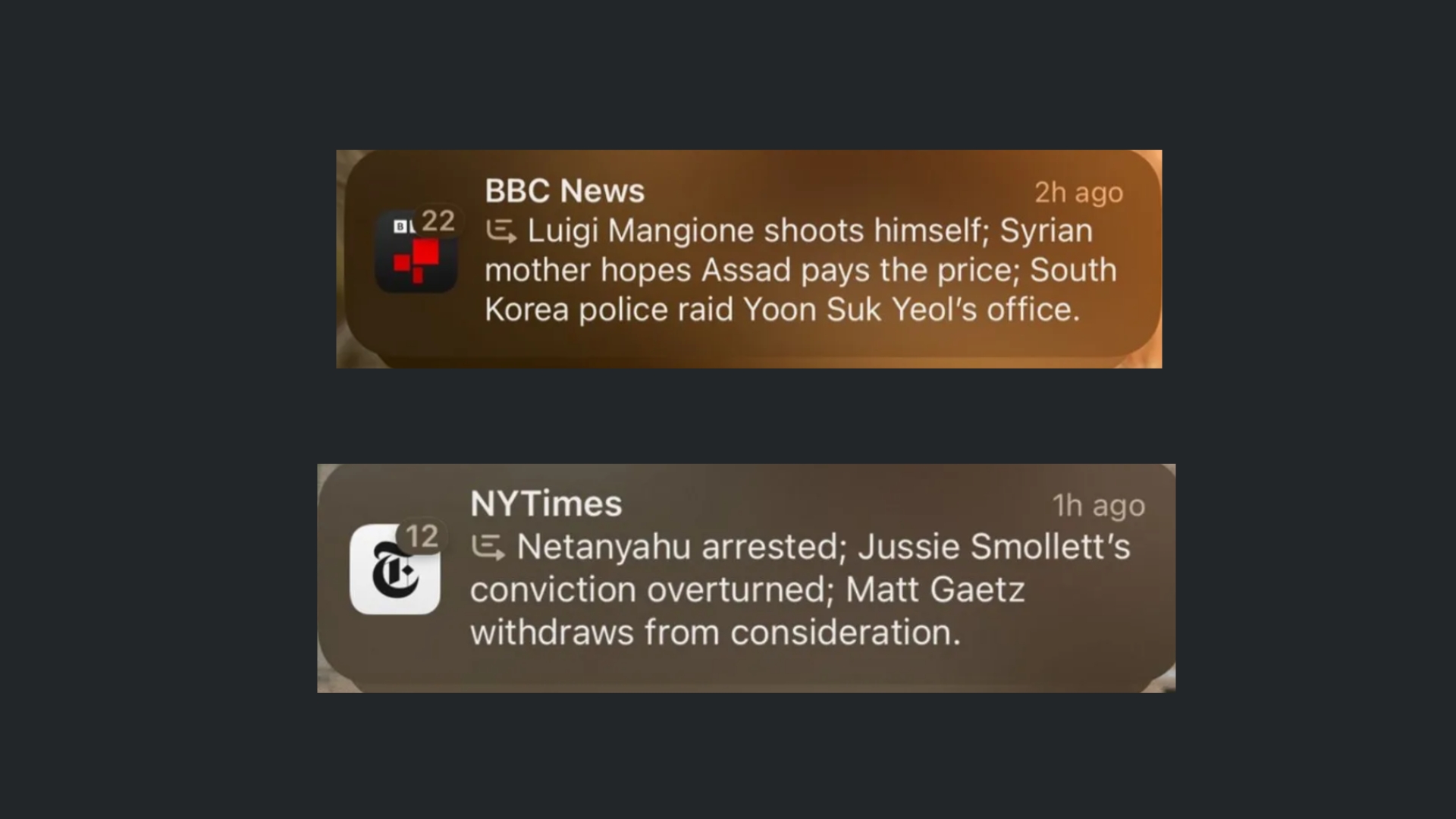

Apple is under fire after a recent notification, attributed to BBC News, falsely claimed that Luigi Mangione, the accused in the murder of a prominent health insurance CEO in New York, had shot himself. The shocking and false headline was generated by Apple Intelligence, which uses AI to summarize news notifications.

The event never occurred, yet soon after the summary was delivered, the false news was spreading rapidly on social media. Confirmation that the AI-generated summary had misreported details of the high-profile murder case sparked concern over the accuracy of Apple’s news summary feature.

The BBC has formally complained to Apple, requesting corrective measures to prevent such errors from recurring. The complaint underscores the importance of accountability. The outlet's site states its editorial values: "The trust that our audience has in all our content underpins everything that we do. We are independent, impartial and honest. We are committed to achieving the highest standards of accuracy and impartiality and strive to avoid knowingly or materially misleading our audiences."

Media outlets invest heavily in maintaining their credibility, and errors made by third-party platforms threaten to erode that trust. Because misinformation can spread rapidly online, it is critical that automated news notifications are accurate.

Apple has yet to respond publicly to the BBC’s complaint. However, this incident is not the first time Apple Intelligence has faced criticism for spreading misinformation through its AI-powered summaries. On November 21, a notification attributed to the New York Times inaccurately suggested that Israeli Prime Minister Benjamin Netanyahu had been arrested. The actual story concerned the International Criminal Court issuing an arrest warrant for Netanyahu, but the AI summary significantly distorted the facts. The New York Times has chosen not to comment on the incident.

Calling for a ban

Reporters Without Borders (RSF) is calling for a ban on the generative AI feature altogether. In a statement on its site, the organization said it is concerned about the risk of AI tools producing false news alerts. It believes such tools are still too new to be used in reporting the news.

RSF's Head of Technology and Journalism Desk said on the site, “AIs are probability machines, and facts can’t be decided by a roll of the dice. RSF calls on Apple to act responsibly by removing this feature. The automated production of false information attributed to a media outlet is a blow to the outlet’s credibility and a danger to the public's right to reliable information on current affairs. The European AI Act, despite being the most advanced legislation in the world in this area, did not classify information-generating AIs as high-risk systems, leaving a critical legal vacuum. This gap must be filled immediately.”

Looking ahead

The issues with Apple Intelligence have raised broader concerns about the reliability of artificial intelligence in handling sensitive information. AI-driven tools, while designed to streamline and enhance user experiences, often struggle with context and nuance, both key elements of accurate reporting. When these errors misrepresent trusted news sources, the potential for public misunderstanding grows.

The problem also highlights the broader implications of integrating AI into news delivery. As technology companies like Apple continue to adopt AI for content curation, there is growing pressure to ensure these systems are adequately tested and monitored. News organizations, for their part, are beginning to push back against errors that could damage their reputations.

This incident serves as a warning about the risks of relying too heavily on artificial intelligence for content delivery, raising the question: Does the risk of misinformation outweigh the convenience of automated news summaries? While AI holds promise to improve efficiency and accessibility, its limitations highlight the enduring need for human oversight in journalism. As Apple faces mounting pressure to address the flaws in Apple Intelligence, the debate over the role of AI in news media is likely to intensify.