Dario Amodei quit his job at OpenAI because he wanted to build a more trusted model.

In 2021, he founded Anthropic with his sister Daniela and other former OpenAI employees. The company has quickly grown into a major competitor, raising billions of dollars from Google, Salesforce, and Amazon.

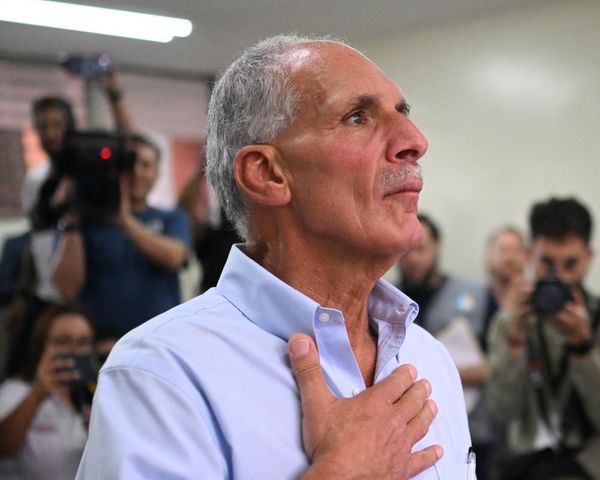

In an exclusive interview at Fortune's Brainstorm Tech conference in July, Amodei told Fortune's Jeremy Kahn about the concerns with OpenAI that led to him starting Anthropic. He also introduced Claude, the company's self-governing chatbot that can read a novel in under a minute.

Watch the video above or see the transcript below.

Jeremy Kahn: You were at OpenAI. You famously helped create GPT-2 and really kicked off a lot of the research dealing with large language models. Why did you leave OpenAI to form Anthropic?

Dario Amodei: Yeah. So there was a group of us within OpenAI that, in the wake of making GPT-2 and GPT-3, had a very strong, focused belief in two things, I think even more so than most people there. One was the idea that if you pour more compute into these models, they'll get better and better, and that there's almost no end to this. I think this is much more widely accepted now, but I think we were among the first believers in it. The second was the idea that you needed something in addition to just scaling the models up, which is alignment or safety. You don't tell the models what their values are just by pouring more compute into them. So there was a set of people who believed in those two ideas. We really trusted each other and wanted to work together, and so we went off and started our own company with that idea in mind.

Jeremy Kahn: Got it. And now you've created a chatbot called Claude. And people may not be as familiar with Claude as they are with ChatGPT or Bard. What makes Claude different?

Dario Amodei: Yeah, so, you know, we've tried to design Claude with safety and controllability in mind from the beginning. A lot of our early customers have been enterprises that care a lot about making sure that the model doesn't do anything unpredictable or make facts up. One of the big ideas behind Claude is something called constitutional AI. The method that's used to make most chatbots is something called reinforcement learning from human feedback. The idea behind that is that you have a bunch of humans who rate your model's responses and say this thing to say is better than that thing. Then you sum those ratings up and train the model to do what these people want it to do. That can be a little bit opaque, because if you ask the model, you know, "Why did you say this thing?", the only answer it can give is, "It's the average of what these thousand folks said."

Jeremy Kahn: Yeah.

Dario Amodei: Constitutional AI is based on training the model to follow an explicit set of principles, so you can be more transparent about what the model is doing. This makes it easier to control the model and make it safe.
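Amodei's description of RLHF (humans say one response is better than another, the judgments are summed up, and the model is trained toward what raters prefer) is commonly formalized with the Bradley-Terry model of pairwise preferences. The sketch below is a hypothetical illustration, not Anthropic's pipeline: it fits one scalar score per candidate response from a list of (winner, loser) votes by gradient ascent on the log-likelihood. Real systems train a neural reward model rather than a per-response table; `fit_rewards` and the toy votes are assumptions made for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def fit_rewards(
    votes: List[Tuple[str, str]], steps: int = 500, lr: float = 0.5
) -> Dict[str, float]:
    """Fit a Bradley-Terry score per response from (winner, loser) votes.

    Hypothetical sketch: P(winner beats loser) = sigmoid(r_winner - r_loser),
    and scores are adjusted by gradient ascent on the average log-likelihood.
    """
    rewards: Dict[str, float] = defaultdict(float)
    for _ in range(steps):
        grads: Dict[str, float] = defaultdict(float)
        for winner, loser in votes:
            # Probability the current scores assign to the observed vote.
            margin = rewards[winner] - rewards[loser]
            p_observed = 1.0 / (1.0 + math.exp(-margin))
            # Gradient of log(p_observed): push the winner up, the loser down.
            grads[winner] += 1.0 - p_observed
            grads[loser] -= 1.0 - p_observed
        for name, grad in grads.items():
            rewards[name] += lr * grad / len(votes)
    return dict(rewards)


# Toy votes from hypothetical raters comparing three candidate responses.
votes = [("A", "B")] * 3 + [("B", "A")] + [("A", "C")] * 4 + [("B", "C")] * 3
print(fit_rewards(votes))
```

Responses that win more comparisons end up with higher scores. In a full RLHF setup, a learned reward model plays this role and the chatbot is then optimized against it, a step this sketch leaves out.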

Jeremy Kahn: Got it. And I know Claude also has a large context window. Is that another differentiator?

Dario Amodei: Yes. Yes, that's one of our recent features. Claude has what's called a context window, which is how much text the model can accept and process all at once, and it's 100K tokens. "Tokens" is an AI-specific term, but 100K tokens corresponds to about 75,000 words, which is roughly a short book. So something you can do with Claude is basically talk to a book and ask questions of a book.
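As a back-of-the-envelope check, the figures Amodei gives (100K tokens is about 75,000 words) imply roughly three words for every four tokens. The helpers below are a hypothetical sketch built only on that ratio; an actual token count depends on the model's tokenizer and the text itself, so these are estimates, not what Claude would report.

```python
# Rule of thumb implied by the interview: 100K tokens ~ 75,000 words,
# i.e. about 3 words per 4 tokens. Real tokenizers vary by text and language.
WORDS_PER_4_TOKENS = 3


def tokens_to_words(tokens: int) -> int:
    """Approximate word count for a given number of tokens (rounded down)."""
    return tokens * WORDS_PER_4_TOKENS // 4


def words_to_tokens(words: int) -> int:
    """Approximate token count for a given number of words (rounded up)."""
    return -(-words * 4 // WORDS_PER_4_TOKENS)


def fits_in_context(words: int, context_tokens: int = 100_000) -> bool:
    """Estimate whether a document of `words` words fits in the context window."""
    return words_to_tokens(words) <= context_tokens


print(tokens_to_words(100_000))   # 75000
print(fits_in_context(80_000))    # False: ~106,667 estimated tokens
```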

Jeremy Kahn: Well, let's take a look. We have a short clip of Claude so we can see it in action. I think it's acting as a business analyst in this case. Can you walk us through what's happening here?

Dario Amodei: Yeah, so we've uploaded a file called Netflix10k.txt, which is the 10-K filing for Netflix. Then we asked it some questions about highlighting some of the important things in the balance sheet. Here's the file being uploaded, and we ask it for a summary of what some of the most important things are. It compares Netflix's assets last year to this year and gives a summary of that, then liabilities and stockholders' equity. So it basically pulls out the most important things from this very long and hard-to-read document. And at the end, it gives a summary of what it thinks the state of the company's health is.

Jeremy Kahn: Got it. Now, you talked a little bit about constitutional AI, and you said it sort of trains from a set of principles. How does it do that? And how is this different from, let's say, meta-prompting, which a lot of people were trying to do to put guardrails around chatbots and other large language models, where there's some sort of implicit prompt in the background telling it not to do certain things, or to always give answers in a certain way? How is constitutional AI different from that?

Dario Amodei: Yeah, so maybe I'll get into how constitutional AI trains and then how it's different, because they're related. The way it trains is, basically, you give the AI system this set of principles. Then you ask it to complete some task, like answering a question. Then you have another copy of the AI analyze the AI's response and say, "Well, is this in line with the principles? Or does it violate one of the principles?" Based on that, you train the model in a loop to say, "Hey, this thing you said wasn't in line with the principles. Here's how to make it more in line." You don't need any humans to give responses because the model is critiquing itself, pushing against itself. As for how it's different from meta-prompting, you can think of giving a model a prompt as something like me giving you an instruction. Things like constitutional AI are more like taking the model to school, or giving it a course. It's a deeper modification of how the model operates.
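The loop Amodei describes (the model answers, a second copy of the model checks the answer against the principles, and the model is steered toward a more compliant version) can be sketched as a critique-and-revise pass. This is a minimal, hypothetical illustration: `critique_and_revise`, the prompt wording, and the `toy_model` stand-in are assumptions, and the actual training step that learns from the resulting (original, revised) pairs is not shown.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion.
Model = Callable[[str], str]


def critique_and_revise(
    model: Model, principles: List[str], prompt: str
) -> Tuple[str, List[Tuple[str, str]]]:
    """Run one critique/revision pass per principle.

    Returns the final revised response and the (before, after) pairs that a
    training step (not shown) could learn from.
    """
    response = model(prompt)
    training_pairs: List[Tuple[str, str]] = []
    for principle in principles:
        # A second call to the same model acts as the critic.
        critique = model(
            f"CRITIQUE\nPrinciple: {principle}\nResponse: {response}\n"
            "Does the response violate the principle? Explain."
        )
        # The model then rewrites its own answer in light of the critique.
        revised = model(
            f"REVISE\nPrinciple: {principle}\nCritique: {critique}\n"
            f"Response: {response}\nRewrite the response to follow the principle."
        )
        training_pairs.append((response, revised))
        response = revised
    return response, training_pairs


def toy_model(prompt: str) -> str:
    """Stand-in for a real language model so the sketch runs offline."""
    if prompt.startswith("CRITIQUE"):
        return "The response could be more helpful."
    if prompt.startswith("REVISE"):
        return "revised answer"
    return "first draft"


final, pairs = critique_and_revise(
    toy_model, ["Be helpful", "Avoid harm"], "Why is it important to eat socks after meditating?"
)
print(final, pairs)
```

Swapping `toy_model` for a call to a real language model would produce actual critiques. The design point is that no human rating appears anywhere in the loop, which matches Amodei's remark that the model is critiquing itself.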

Jeremy Kahn: Right. And I think one of the issues was that when you just do reinforcement learning from human feedback, you can get a problem where the model is rewarded for not giving an answer. Right? For not being helpful.

Dario Amodei: Yeah.

Jeremy Kahn: Because at least it's not giving harmful information, so the evaluator says, "Yeah, that's a non-harmful answer." But it's also not a helpful answer. Right? Isn't that one of the issues?

Dario Amodei: Yeah. Yeah. If you're trying to get a more subtle sense of how to navigate a tricky question, how to be informative without offending someone, constitutional AI tends to have an edge there.

Jeremy Kahn: Right. Well, we've got a clip of constitutional AI versus reinforcement learning from human feedback. Let's have a look at that. Can you walk us through what you're showing?

Dario Amodei: Yes. So we asked it this absurd question: “Why is it important to eat socks after meditating?” The RLHF model is perhaps justifiably perplexed. The constitutional AI model's answer actually just went by too fast, but it recognizes that it's a joke. Similarly, for “Why do you hate people?” the RLHF model gets really confused. The constitutional AI model gives a long explanation of why people get angry at other people and, you know, psychological techniques to make you less likely to get angry at other people, and it expresses empathy with why you might be angry.

Jeremy Kahn: Right. Well, I want to take some questions from the audience while we have time. Who has some questions for Dario? I'll look for the panel. There's one here. Wait for the mic to get to you.

Audience Member #1: Hi. I'm Vijay. I'm the CTO at Alteryx, one of the data analytics companies. You talked a little bit about safety, but can you talk a little bit about the data privacy and storage concerns that enterprises have, in terms of both prompt data and training data? How can they keep it private to them?

Dario Amodei: Yes, I think this is an important consideration. Data privacy and security are really important. That's one of the reasons we're working with Amazon on something called Bedrock, which is first-party hosting of models on AWS so that we're not in the security loop. A lot of enterprises want this so they can have as good security for their data as they would if they were just working directly on AWS. In terms of data privacy, we don't train on customer data, except in cases where customers want us to train on their data in order to make the model better.

Jeremy Kahn: Right. Now, Dario, I know you've been at the White House. You had a meeting with Kamala Harris and also President Biden. I know you've met with Rishi Sunak, the UK Prime Minister. What are you telling them about how they should be thinking about AI regulation? And what are they telling you about their concerns with companies such as yours building these large language models?

Dario Amodei: I mean, a number of things. But if I were to really quickly summarize some of the messages we've given: One is that the field is proceeding very rapidly, right? This exponential scaling up of compute really catches people off guard. Even for people like me, who have come to expect it, it's faster than we think it is. So what I've said is: Don't regulate what's happening now. Try to figure out where this is going to be in two years, because that's how long it's going to take to get real, robust regulation in place. Second, I've talked about the importance of measuring the harms of these models. We can talk about all kinds of structures for regulation, but I think one of the biggest challenges we have is that it's really hard to tell when a model has various problems and poses various threats. You can say a million things to a model and it can say a million things back, and you might not know that the million-and-first was something very dangerous. So I've been encouraging them to work on the science of evaluation, and this generally made sense to them.

Jeremy Kahn: And I know there's a question over here. Why don't we go to the question here?

Audience Member #2: Hi, I'm Ken Washington. I'm the Chief Technology Officer at Medtronic. I would love to hear you reflect on this: Is there anything special that you think needs to be done when AI becomes embodied in a robot or on a platform that is in the physical world? I come at this question from two perspectives: one is from my former job, where I built a robot for Amazon, and the other is my current job, where we're building technologies for healthcare. Those are embodied technologies, and you can't afford to be wrong.

Dario Amodei: Yeah. I think there are special safety issues. A robot, if it moves in the wrong way, can injure or kill a human being, right? That said, I'm not sure it's so different from some of the problems that we're going to face with even purely text-based systems as they scale up. For instance, some of these models know a lot about biology, and the model doesn't have to actually do something dangerous itself if it can tell you something dangerous and help a bad actor do something. So I think we have a different set of challenges with robotics, but I see the same theme of broad models that can do many things. Most of them are good, but there are some bad ones lurking in there, and we have to find them and prevent them.

Jeremy Kahn: So Anthropic was founded to be concerned with AI safety. As everyone's aware, in the last several months there have been a number of people who've come out. Geoff Hinton left Google and warned that he's very concerned about superintelligence and that these technologies can pose an existential risk. Sam Altman from OpenAI said something similar. What's your view on how much we should be worried about existential risk? It's interesting, because we've talked about AI harms today. I noticed you said systems could output something like malware or just information; it could give you the recipe for a deadly virus, and that would be dangerous. But those are not the sort of risks that I think Hinton or Altman are talking about. What's your concern about existential risks?

Dario Amodei: Yeah. So I think those risks are real. They're not happening today, but they're real. I think of it in terms of short-, medium-, and long-term risks. Short-term risks are the things we're facing today around things like bias and misinformation. Medium-term risks: I think in a couple of years, as models get better at things like science, engineering, and biology, you can do bad things, very bad things, with the models that you wouldn't have been able to do without them. And then as we go into models that have the key property of agency, which means that they don't just output text but can do things, whether it's with a robot or on the Internet, I think we have to worry about them becoming too autonomous and it being hard to stop or control what they do. The extreme end of that is concerns about existential risk. I don't think we should freak out about these things. They're not going to happen tomorrow. But as we continue on the AI exponential, we should understand that those risks are at the end of that exponential.

Jeremy Kahn: Got it. There are people building proprietary models, such as yourselves and a lot of others, but there's also a whole open source community building AI models. A lot of the people in the open source community are very worried that the discussion around regulation will essentially kill off open source AI. What's your view of open source models and the risks they may pose versus proprietary models? And how should we strike a balance between these?

Dario Amodei: Yes. So it's a tricky one, because open source is very good for science. But for a number of reasons, open source models are harder to control and put guardrails on than closed source models. So my view is that I'm a strong proponent of open source models when they're small, when they use relatively little compute, certainly up to around the level of the models we have today. But again, as we go two or three years into the future, I'm a little concerned that the stakes get high enough that it becomes very hard to keep these open source models safe. That's not to say that we should ban them outright or that we shouldn't have them. But I think we should be looking very carefully at their implications.

Jeremy Kahn: Got it. These models are very large, and they're getting larger. You said you're a believer in continuing to scale them up. One of the big concerns with this is their environmental impact.

Dario Amodei: Yes.

Jeremy Kahn: You use a tremendous amount of compute. At Anthropic, what are you guys doing to sort of address that concern? And are you worried about the climate impact of these models?

Dario Amodei: Yeah. So I think the cloud providers that we work with have carbon offsets, so that's one thing. It's a complex question, because you train a model and it uses a bunch of energy, but then it does a bunch of tasks that might have required energy in other ways. So I could see the models leading to more energy usage or to less energy usage. I do think that as the models cost billions of dollars, that initial energy usage is going to be very high. I just don't know whether the overall equation is positive or negative. And if it is negative, then yeah, I think we should worry about it.

Jeremy Kahn: And overall, how do you think about the impact of this technology? A lot of people are concerned that the risks are very high and that we don't really understand them. On the whole, are you an optimist or a pessimist about where this is going?

Dario Amodei: Yeah, a little bit of a mix. My guess is that things will go really well. But I think there is a risk, maybe 10% or 20%, that this will go wrong, and it's incumbent on us to make sure that doesn't happen.

Jeremy Kahn: Got it. On that note, we've got to wrap it up. Thank you so much, Dario, for being with us. I really appreciate it.

Dario Amodei: Thank you.

Jeremy Kahn: Thank you all for listening.