Yesterday, it was reported that AMD's RDNA 4 GPUs will not get ROCm support at launch. Unsurprisingly, this upset many developers who were planning to upgrade their setups and hoped for a seamless transition. However, ROCm support might not be far behind the launch, as AMD's Vice President of AI Software has teased what appears to be an AMD GPU from the RX 9070 series running in a dedicated ROCm environment.

ROCm is an open-source software stack for GPU programming that lets graphics cards be used for much more than rendering graphics. It allows select GPUs, including professional Instinct accelerators, prosumer Radeon PRO GPUs, and a handful of consumer-grade Radeon GPUs, to power HPC and AI applications.

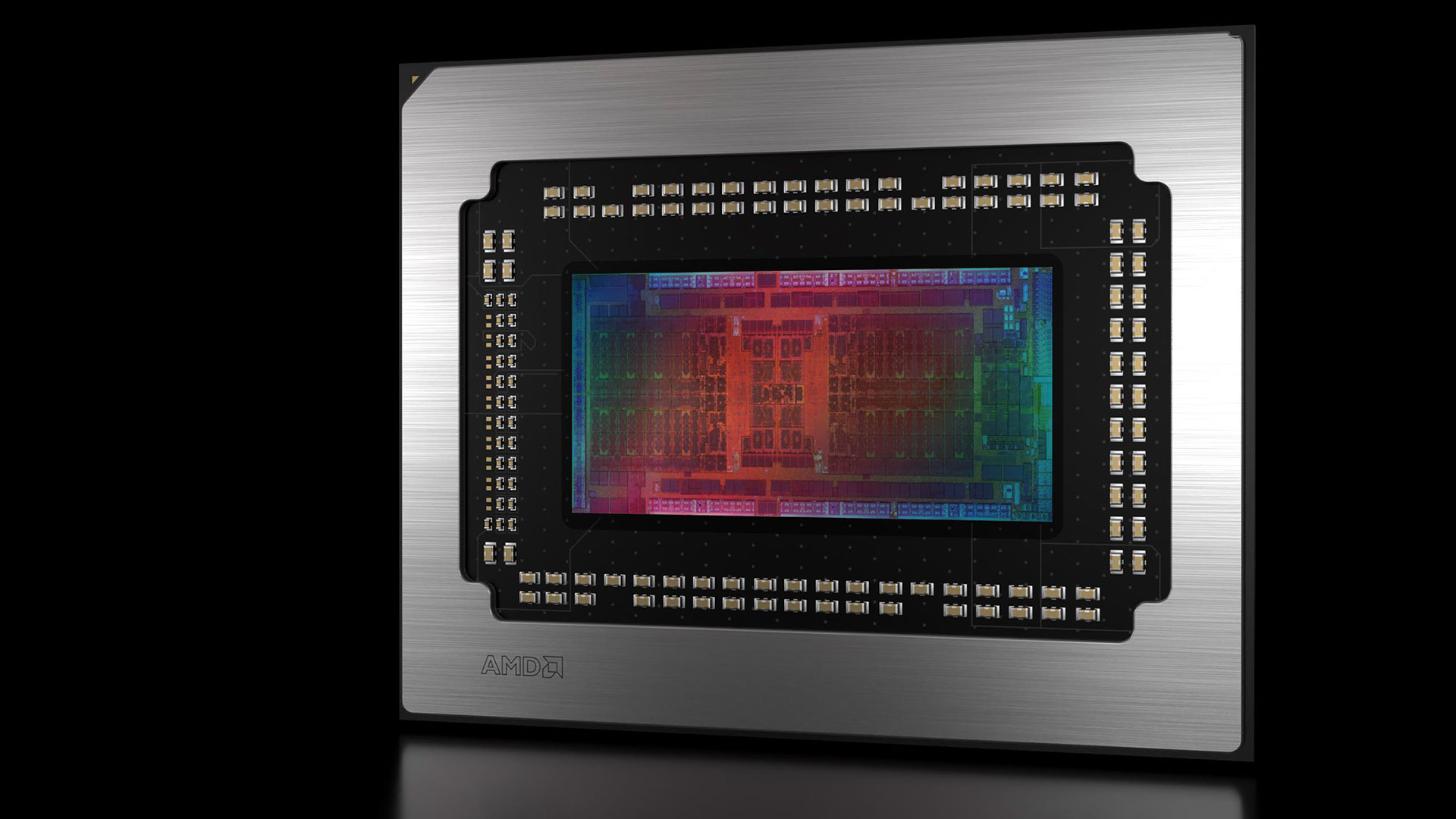

It seemed like a missed opportunity for AMD not to offer developers day-one support. Ironically, the first traces of Navi 48, the chip inside RX 9070 GPUs, surfaced in ROCm updates last year. Anush Elangovan, AMD's Vice President of AI Software, reported that RDNA 4 is "ROCm'ing on just fine," showing what appears to be an RX 9070 series GPU listed in rocm-smi (ROCm System Management Interface).

ROCm'ing on just fine https://t.co/KSquDUq34W pic.twitter.com/xhootvpzuE (February 28, 2025)
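For readers who want to check whether their own card shows up the same way, the following is a minimal sketch that looks for the `rocm-smi` command on the system path and returns its plain-text report. The helper name `query_rocm_smi` and its parameters are hypothetical, chosen for illustration; the sketch assumes ROCm is installed and does not attempt to parse the output, whose layout can differ between ROCm releases.

```python
import shutil
import subprocess
from typing import Optional


def query_rocm_smi(executable: str = "rocm-smi", timeout: float = 10.0) -> Optional[str]:
    """Return the tool's plain-text output, or None if it is missing or fails.

    `query_rocm_smi` is a hypothetical helper, not part of ROCm itself.
    """
    # Locate the binary on PATH; ROCm may not be installed at all.
    path = shutil.which(executable)
    if path is None:
        return None

    try:
        # Run the tool with no arguments and capture whatever it prints.
        result = subprocess.run(
            [path],
            capture_output=True,
            text=True,
            timeout=timeout,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None

    # Treat a non-zero exit code as "no usable report".
    if result.returncode != 0:
        return None
    return result.stdout


if __name__ == "__main__":
    report = query_rocm_smi()
    print(report if report is not None else "rocm-smi not found or returned an error")
```

A `None` result only means the tool was not found or did not exit cleanly; it says nothing about whether a given GPU is officially supported.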

Developers have repeatedly bemoaned how slowly AMD brings new ROCm features and support to mainstream hardware. As of writing, AMD officially supports the RX 7000 and RX 6000 families of GPUs and the Radeon VII on Windows. Select RDNA 2 GPUs can only use the HIP runtime and don't have access to the full HIP SDK. Linux support, while notably better and more consistent, officially extends only to the RX 7900 series and the Radeon VII. There are a few workarounds to get unsupported hardware up and running with ROCm, and they are reportedly more successful in Linux environments.

Nvidia's CUDA, by contrast, runs on GPUs dating back to 2006, although newer architectures offer updated feature sets and fancier instructions. Given the recent advances in AI, 90% of Nvidia's revenue now comes from its data-center accelerators, with CUDA at their heart. This shows how critical ROCm is for AMD if it wants to compete against the mammoth that Nvidia has become in the AI space.

The next addition to ROCm's supported hardware will likely be Strix Halo, or the Ryzen AI MAX 300 series. With up to 128GB of unified memory, Strix Halo is much more than a gaming laptop chip; it's well suited to LLM inference and development, taking on Apple's M-series silicon.