Running large language models (LLMs) locally on PCs is becoming increasingly popular. In response, AMD is introducing its own LLM application, Gaia, an open-source project for running local LLMs on any Windows machine.

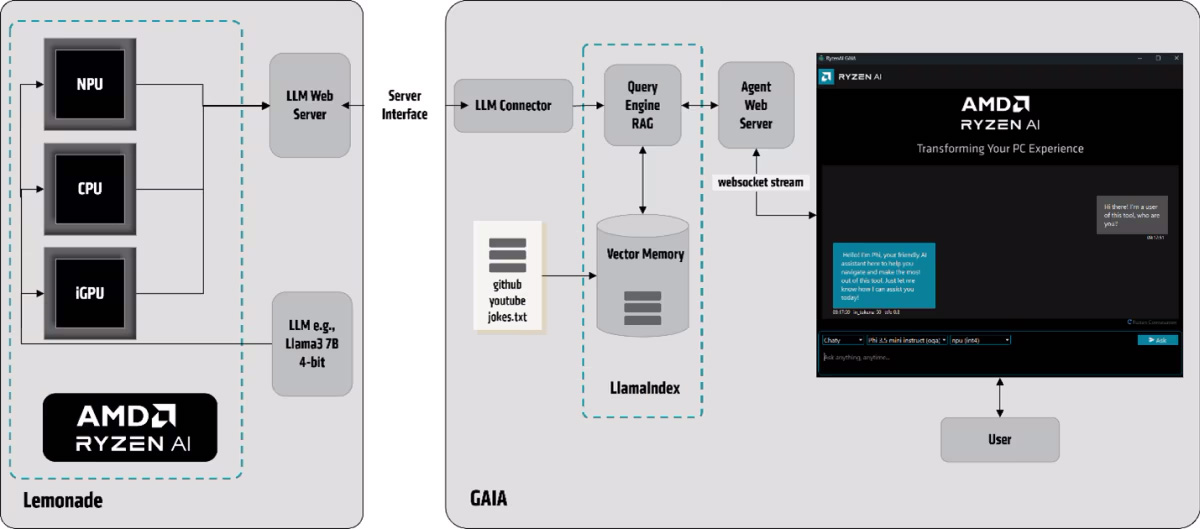

Gaia is designed to run a variety of LLMs on Windows PCs and includes further performance optimizations for machines equipped with AMD's Ryzen AI processors (including the Ryzen AI Max+ 395). Gaia uses the open-source Lemonade SDK from ONNX TurnkeyML for LLM inference. According to AMD, models can be adapted to different purposes with Gaia, including summarization and complex reasoning tasks.

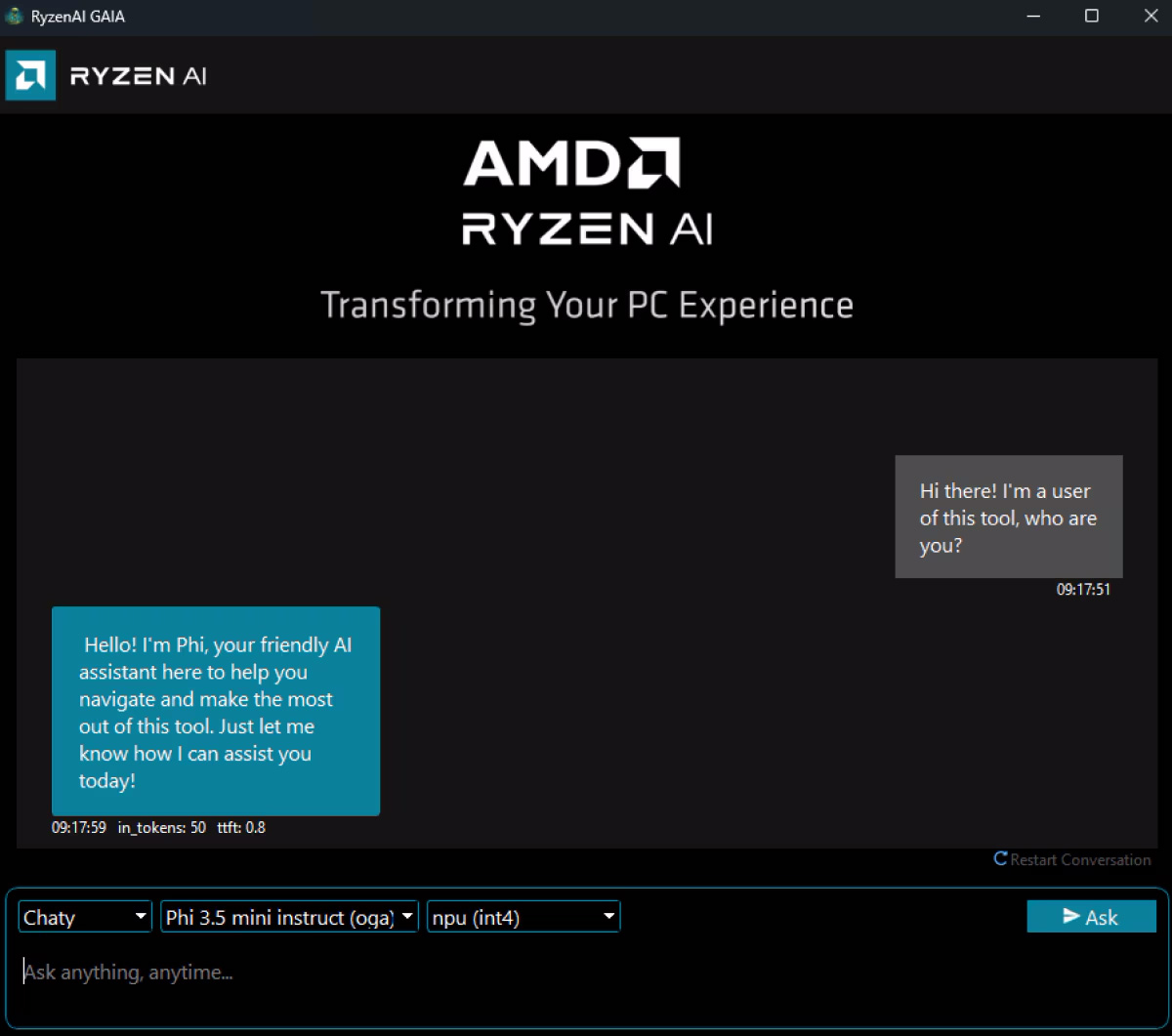

According to AMD, Gaia works through a Retrieval-Augmented Generation (RAG) agent. RAG combines an LLM with a knowledge base, allowing the LLM to provide an interactive AI experience for the end user along with more accurate and contextually aware responses. Gaia currently includes four agents: Simple Prompt Completion, an agent designed for direct model interactions and intended for testing and evaluation; Chaty, the chatbot component that interacts with the user; Clip, an agent with YouTube search and Q&A functionality; and Joker, a joke generator that adds a humorous personality to the chatbot.

AMD's new open-source project works by providing LLM-specific tasks through the Lemonade SDK and serving them across multiple runtimes. According to AMD, Lemonade "exposes an LLM web service that communicates with the GAIA application...via an OpenAI compatible Rest API." Gaia itself acts as an AI-powered agent that retrieves and processes data. It also "vectorizes external content (e.g., GitHub, YouTube, text files) and stores it in a local vector index."
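To give a sense of what talking to an OpenAI-compatible web service can look like, here is a minimal sketch in Python that builds a chat completion request in the widely used OpenAI request format. The base URL, port, and model name are placeholders invented for illustration, not values documented by AMD; check the Gaia and Lemonade documentation for the real ones.

```python
import json
import urllib.request

# Assumptions for illustration only: the address and model name below are
# hypothetical placeholders, not documented Gaia or Lemonade values.
BASE_URL = "http://localhost:8000/api/v1"
MODEL_NAME = "example-local-model"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request):
    """Send the request to a running server and return the reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request("Summarize this file"))` would only succeed with a compatible server listening at the assumed address. The practical appeal of this kind of interface is that existing OpenAI-style client code can often be pointed at a local server by changing the base URL.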

In other words, by retrieving relevant content from its local vector index, Gaia can enhance user queries before the LLM processes them, which AMD says improves response accuracy and relevance.
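The general retrieve-then-augment idea can be sketched in a few lines of Python. This is a toy illustration, not Gaia's implementation: it swaps in simple word-count vectors and cosine similarity where a real system would use learned embeddings, and the names `LocalVectorIndex` and `augment_query` are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Toy vectorizer: word counts stand in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalVectorIndex:
    """Hypothetical in-memory index of (text, vector) pairs."""

    def __init__(self):
        self.entries = []

    def add(self, text):
        self.entries.append((text, vectorize(text)))

    def search(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        query_vec = vectorize(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine(query_vec, entry[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


def augment_query(query, index, k=1):
    """Prepend retrieved context to the query before it reaches the LLM."""
    context = "\n".join(index.search(query, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


index = LocalVectorIndex()
index.add("Gaia has a Hybrid installer optimized for Ryzen AI PCs.")
index.add("Lemonade exposes an OpenAI-compatible web service.")
prompt = augment_query("Which installer targets Ryzen AI?", index)
```

Here `prompt` contains the installer sentence as context followed by the original question, so the model sees relevant material it might not otherwise have.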

The new AI chatbot has two installers: a mainstream installer that works on any Windows PC (whether that PC has AMD hardware or not) and a "Hybrid" installer optimized for Ryzen AI PCs. The latter specifically enables Gaia to run computations on a Ryzen AI CPU's neural processing unit (NPU) and integrated graphics for better performance.

Gaia is the latest entrant in a growing field of local LLM applications that includes LM Studio and ChatRTX. Running an LLM locally has significant advantages over cloud-based solutions, including greater security, lower latency, and, in some cases, better performance, depending on the system hardware. Best of all, local LLMs work offline, with no internet connection required.