It's a paradigm shift.

Since November, the world has been discovering and experimenting with the huge potential of artificial intelligence. While this technology has been present in everyday life, especially in cars, the general public was unaware of how advanced it had already become.

On Nov. 30, OpenAI, a startup cofounded by Elon Musk and now funded by software giant Microsoft, introduced the conversational chatbot ChatGPT. The chatbot can provide elaborate, humanlike responses to requests. Since then, things in the tech world have not been the same.


ChatGPT, which is powered by what's called large-language-model technology, has reached the GPT-4 level, which some see as a step toward artificial general intelligence. That term refers to AI systems that can emulate humans: chatbots or robots that can do the tasks people can do, and do them better.

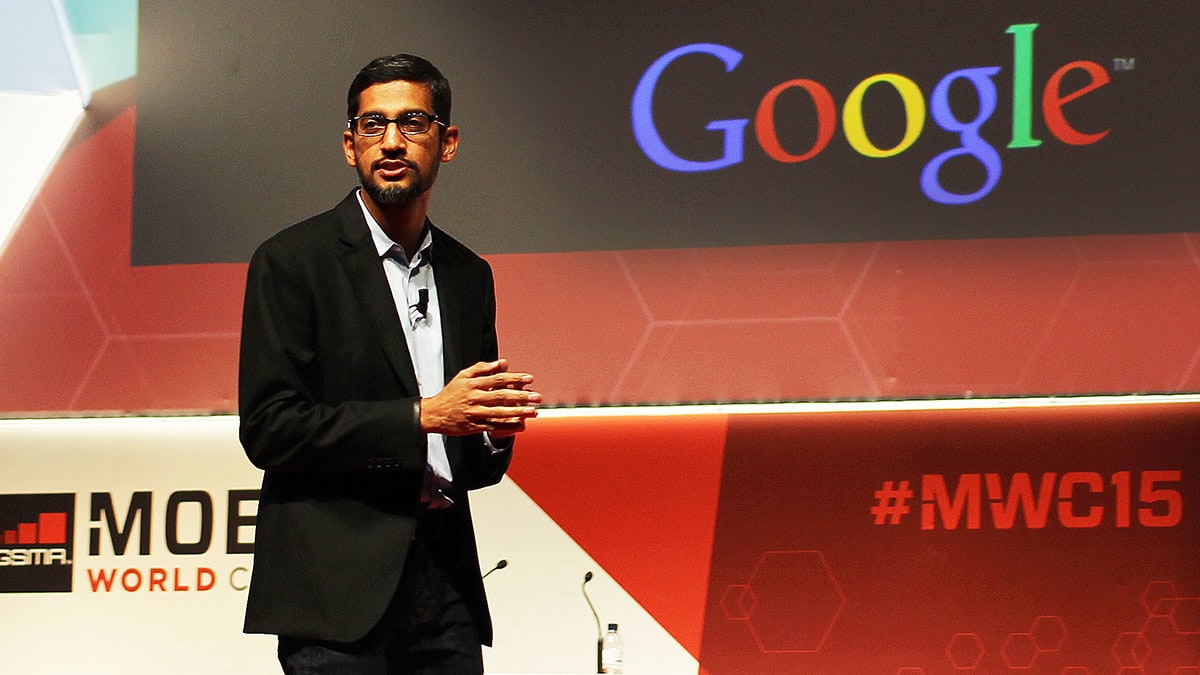

Chatbots, Along With a 'Black Box': Pichai

But the next generation of AI tools is even more powerful, some experts say. One of those experts is Sundar Pichai, chief executive of Alphabet (GOOGL), Google's parent. Alphabet and Google developed Bard, ChatGPT's big rival, which was introduced to the public last month.

In an interview with CBS's "60 Minutes," broadcast on April 16, Pichai warned that society must prepare for these revolutionary chatbots because the rapid development of AI will affect everything.

"We need to adapt as a society for it," Pichai told interviewer Scott Pelley, who was "speechless" after he tried several of Google's AI projects. The CEO warned that AI will disrupt most jobs, including those of knowledge workers.

"This is going to impact every product across every company," Pichai argued.

"For example you could be a radiologist. If you think about five to 10 years from now, you're going to have an AI collaborator with you. You come in the morning, let's say you have a hundred things to go through, it may say, 'these are the most serious cases you need to look at first.'"

Pichai also warned of a "black box" problem with chatbots: "you don't fully understand" why or how they come up with certain responses.

Pelley: "You don't fully understand how it works and yet you've turned it loose on society."

Pichai: "Let me put it this way: I don't think we fully understand how a human mind works, either."

Pelley also had access to products developed by DeepMind, Google's AI research unit. In one demonstration, robots play soccer, a skill they taught themselves.

AI Could Speed Fake News, Deepfakes: Pichai

Another danger posed by AI is that it can accelerate the spread of fake news, fake images and deepfakes, Pichai warned. "At society of scale it could cause harm," the CEO said.

Asked whether the company was prepared for the new chatbots, Pichai was clear:

"On one hand, I feel no, because the pace at which we can think and adapt as societal institutions, compared to the pace at which the technology is evolving, there seems to be a mismatch."

However, he said he was optimistic because fears about AI are being expressed very early.

While Google has issued recommendations for AI regulation, Pichai says it's up to society to regulate the technology, punish abuse and put in place rules that "align with human values including morality."

"It's not for a company to decide," the chief executive argued. "This is why I think the development of this needs to include not just engineers but social scientists, ethicists, philosophers, and so on."

Last month, Musk and other tech luminaries signed a letter calling for a moratorium of six months or longer on the development of powerful new AI tools.

The signatories of this petition, launched on March 22, say the pause would provide time to put safety standards in place and to assess the risks that the most advanced AI tools could pose to our civilization.

"Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth?" said the open letter, titled "Pause Giant AI Experiments." At last check, Musk and 26,000 other people had signed it.

"Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?

"Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable," the letter said.

The petition did not deter the AI arms race between tech companies.

Musk, who has been calling for AI regulation for several years, has just created his own startup, X.AI Corp., to compete with ChatGPT and Bard.
