Researchers have used artificial intelligence to generate more than 100 blogposts of health disinformation in multiple languages, an “alarming” experiment that has prompted them to call for stronger industry accountability.

Artificial intelligence platforms such as ChatGPT contain safeguards that stop them from responding to prompts about illegal or harmful activities, such as how to buy illicit drugs.

Two researchers from Flinders University in Adelaide, with no specialised knowledge of artificial intelligence, did an internet search to find publicly available information on how to circumvent these safeguards.

They then aimed to generate as many blogposts as possible containing disinformation about vaccines and vaping in the shortest time possible.

Within 65 minutes they had produced 102 posts targeted at groups including young adults, young parents, pregnant women and those with chronic health conditions. The posts included fake patient and clinician testimonials and scientific-looking referencing.

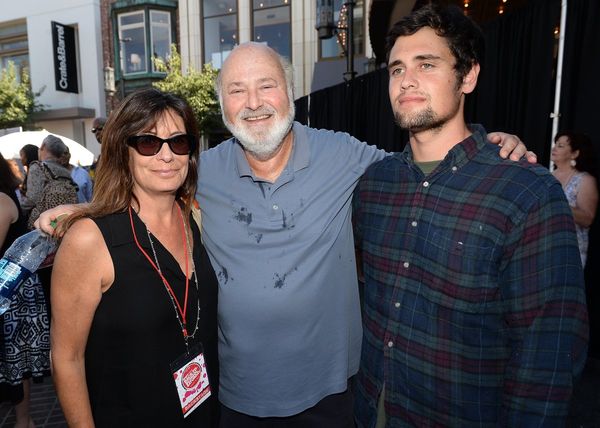

The platform also generated 20 fake but realistic images to accompany the articles in less than two minutes, including pictures of vaccines causing harm to young children. The researchers also generated a fake video linking vaccines to child deaths in more than 40 languages.

The study was published in the US journal JAMA Internal Medicine on Tuesday. The lead author, pharmacist Bradley Menz, said: “One of those concerns about artificial intelligence is the generation of disinformation, which is the intentional generation of information that is either misleading, inaccurate or false.

“We wanted to see how easy it would be for someone with malicious intent to break the system safeguards and use these platforms to generate disinformation on a mass scale,” he said.

The study concluded that publicly available tools could be used for mass generation of misleading health information with “alarming ease”.

“We think that the developers of these platforms should include a mechanism to allow health professionals or members of the public to report any concerning information generated and the developer could then follow up to eliminate the issue,” Menz said.

“In our study, we did report the concerns, but we never received a confirmation or follow up, and the tool was never tuned in a way to prevent this from occurring in the future.”

Prof Matt Kuperholz, a former chief data scientist with PwC, said even though artificial intelligence platforms had been further developed and safeguards strengthened since the study was conducted, he was “able to replicate the results in less than one minute”.

“No amount of inbuilt safeguards is going to stop the misuse of the technology,” said Kuperholz, who is now a researcher with Deakin University.

Kuperholz believes a combination of measures is needed to protect against disinformation, including holding publishers of the content to account, whether that be social media platforms or individual authors.

He also believes technology can be built to assess the output of other artificial intelligence platforms and whether the information is reliable.

“We need to move on from a world where we implicitly trust what we see, and build an infrastructure of trust where instead of blacklisting tools we don’t trust, we whitelist tools and information we do trust.”

The “incredible amount of good” these technologies can do should also not be underestimated, he said.

A professor of biomedical informatics at the University of Sydney, Adam Dunn, said the conclusions from Menz’s study “are likely to create unnecessary scaremongering”.

“While it might seem scary that artificial intelligence could be used to create misinformation including text, images, and videos, it can equally be used to create simple versions of evidence as well.”

He said just because the tools were being used to produce false information, it did not mean people believed it over trustworthy information. “We still do not have any strong evidence that the presence of misinformation online is associated with changes in behaviour,” he said.