Artificial intelligence has seeped into many creative trades — from urban planning to translations to painting. The latest: visualizations in journalism.

Why it matters: Computers are getting better at doing what humans can do, including creating art from scratch. But design is much more than mashing together some known shapes on top of a color background.

- Artists interpret and convey abstract stories through a single image that has to not only grab someone's attention but also stand the test of time.

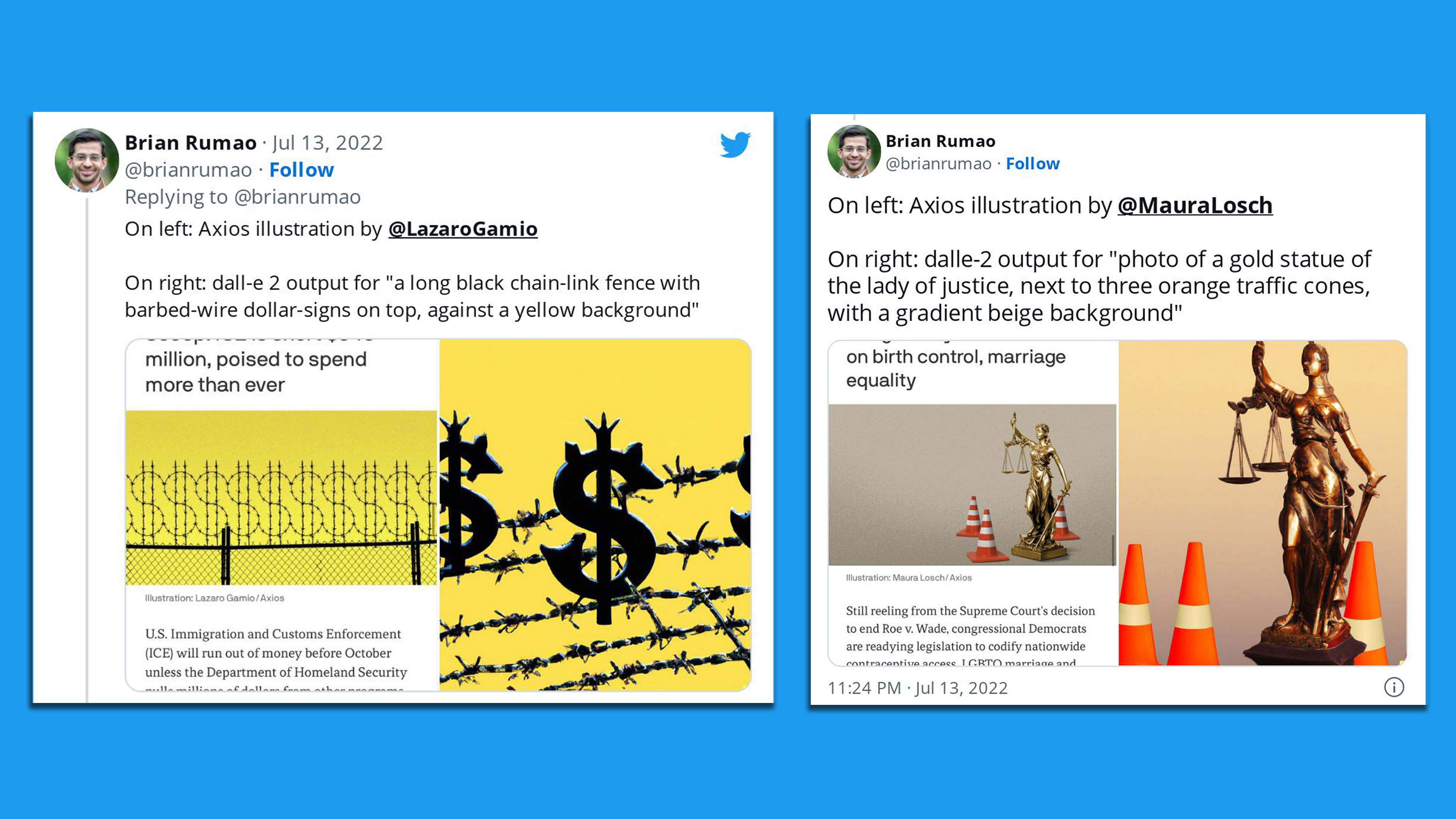

Case in point: Brian Rumao, managing director at Next Play Ventures, posted a sample on Twitter in which he used Dall-E 2, an AI image generation tool from OpenAI, to try to reproduce a couple of Axios visuals, with some success (see sample below).

- The image on the center-left was created after inputting "a long black chain-link fence with barbed-wire dollar-signs on top, against a yellow background" into the tool, Rumao noted.

The post generated a lot of internal discussion among the 23 visual journalists at Axios.

- This led to the question: What is the difference between an editorial illustration developed by a human being and a visual composite generated by AI?

The big picture: Digital art generated by new AI systems like Midjourney and OpenAI's Dall-E 2 has prompted a lot of newsroom discussions, especially as the Dall-E 2 website explicitly mentions using the tool to generate newsletter art.

- On the one hand: Machine-learning platforms can create photo composites from inputs to mimic fine art, photography or illustration.

- On the other hand: Editorial illustration requires a level of conceptualization, visual metaphor and story connection that these kinds of tools currently can't replicate.

How it works: At Axios, illustrators begin by pinpointing key ideas from a story.

- Next, they conceptualize visual representations of the themes.

- Then they brainstorm how to tie the themes together to create a metaphor.

- After producing several mockups, illustrators often select a version that's quickest to read — or one that's "reader first" — that conveys an idea at first glance.

An example: A story about two health care startups merging might prompt an illustrator to depict "digital," "health" and "merger" — the key elements of the story — using symbols that represent each.

- The final version might be two syringes tied together with an ethernet cable.

One issue is that AI technology can be prone to encoded biases. While people also are influenced by their biases, they can take steps to overcome these.

- Editorial journalists are trained to consider these issues, and by encouraging conversations inside a diverse newsroom, they can see possible problematic interpretations of a visual.

- People can also follow the evolution of language closely, and how those changes should be reflected visually. They can watch for tropes and guard against overused symbolism.

What to watch: For now, AI like Dall-E 2 is incapable of replicating the creative problem-solving process required to create successful editorial illustrations.

- These tools are, however, turning out to be powerful for generating colors, ideas, patterns, textures, or mood boards for larger projects.