Developers wishing to work on advanced artificial intelligence projects in the UK should be required to hold a licence, the Labour Party has suggested.

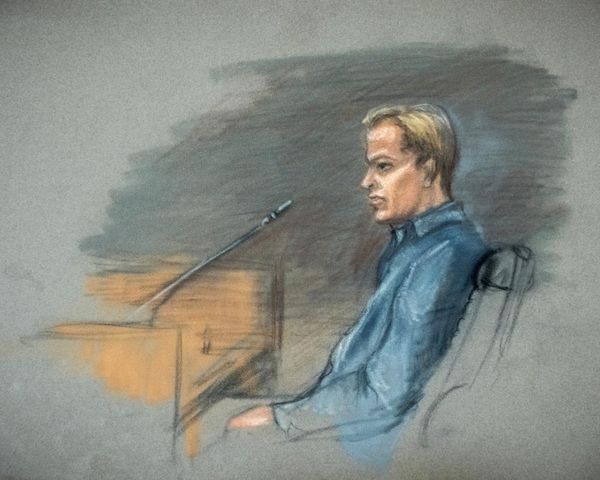

Such a regime would allow artificial intelligence development to continue, but under regulation similar to that which governs medicines and nuclear power, the party’s digital spokesperson Lucy Powell told The Guardian.

“That is the kind of model we should be thinking about, where you have to have a licence in order to build these models,” she said. “These seem to me to be the good examples of how this can be done.”

The suggestion comes shortly after Prime Minister Rishi Sunak’s advisor Matt Clifford told TalkTV that artificial intelligence could be just two years away from helping produce cyber and biological weapons.

Beyond such headline-grabbing threats, though, artificial intelligence poses other, more insidious risks. For Powell, these include bias, discrimination and surveillance, problems she describes as “unintended consequences”.

“This technology is moving so fast that it needs an active, interventionist government approach, rather than a laissez-faire one,” she said.

Of course, the UK can only do so much acting alone. A developer who failed to obtain such a hypothetical licence to build artificial intelligence products in Britain could simply move abroad and build them in a country with laxer regulation. The final product could still end up being used in the UK and affecting lives, however good the intentions behind the domestic rules.

That goes some way to explaining the itinerary for Sunak’s upcoming visit to Washington, where the Financial Times reports the Prime Minister is set to discuss two possible models of international AI regulation with President Joe Biden.

The first is a ‘Cern for AI’ modelled on the international particle physics laboratory, while the second is a global regulatory body more akin to the International Atomic Energy Agency.

With the EU already seeking to ban AI-based facial recognition in public spaces, there are signs of a global consensus on the need for regulation. However, even global buy-in would not stop a rogue state from going its own way with unethical AI research, one of the extinction-level threats that concern some experts.