Most broadcasters and content creators got their first taste of the potential of artificial intelligence from closed captioning (aka subtitling). AI-enabled speech-to-text technology, something many of us already use in daily life, has been in use for more than a decade, and its improvements have become increasingly evident, with far greater accuracy in both transcription and translation.

For broadcasters who must comply with rigid FCC standards, however, the rules are explicit about what content is covered, how accurate captions must be and even where they are placed on the screen. Vendors have responded with new technology that makes the process more accurate and automated than ever.

Voice Interaction

Voice Interaction, which began as a live captioning service in 2001, was among the first companies to incorporate AI-based closed captioning, launching its AI-based offering in 2008. Its services and products include automatic live closed captioning, speech transcription, spoken term detection and indexing, spoken language identification, speech analytics for actionable knowledge, compliance logging and broadcast monitoring.

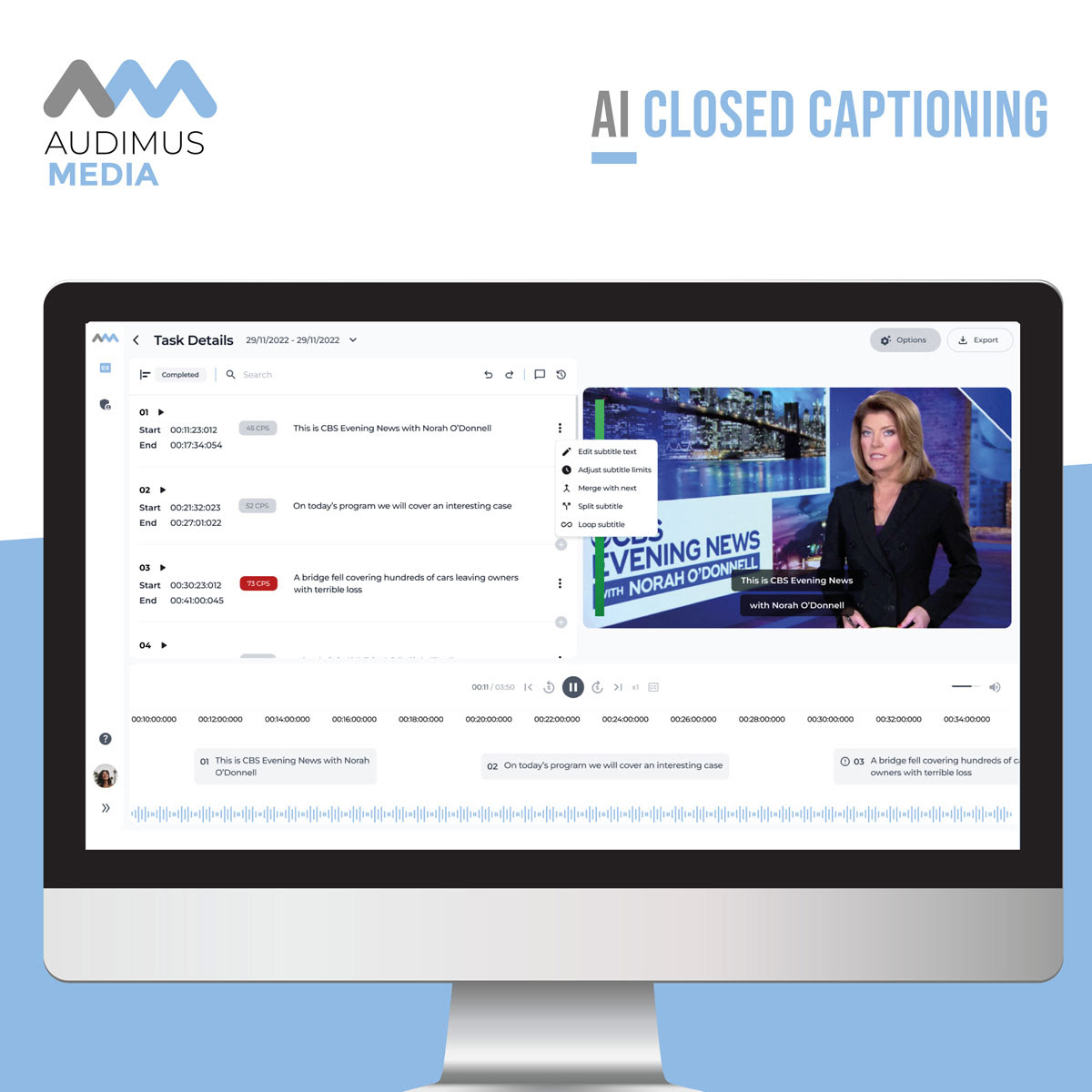

The Portugal-based company has steadily expanded its presence in North America. Its customers include Scripps and Nexstar in the U.S. and Bell Canada, most of which use its Audimus.Media platform, a broadcast-grade, AI-driven solution for real-time automatic closed captioning across multiple platforms, including live TV broadcasting (OTT/OTA), streaming and online meetings. Its speech recognition supports 40 languages, with simultaneous translation and speaker differentiation.

Renato Cassaca, chief software development engineer, says the company prides itself on quickly resolving issues that could result in misspelled names or inaccurate information on the screen.

“We have webcrawlers running 24 hours a day that get all the newspapers available online,” he said. “And we also get guest access to the newsroom computer system, like AP’s ENPS or Avid iNEWS; we have a permanent connection to those systems inside the TV stations, and we collect all that data to improve their language models. Every day they receive a retrained language model to cope with the changing reality.”

ENCO Systems

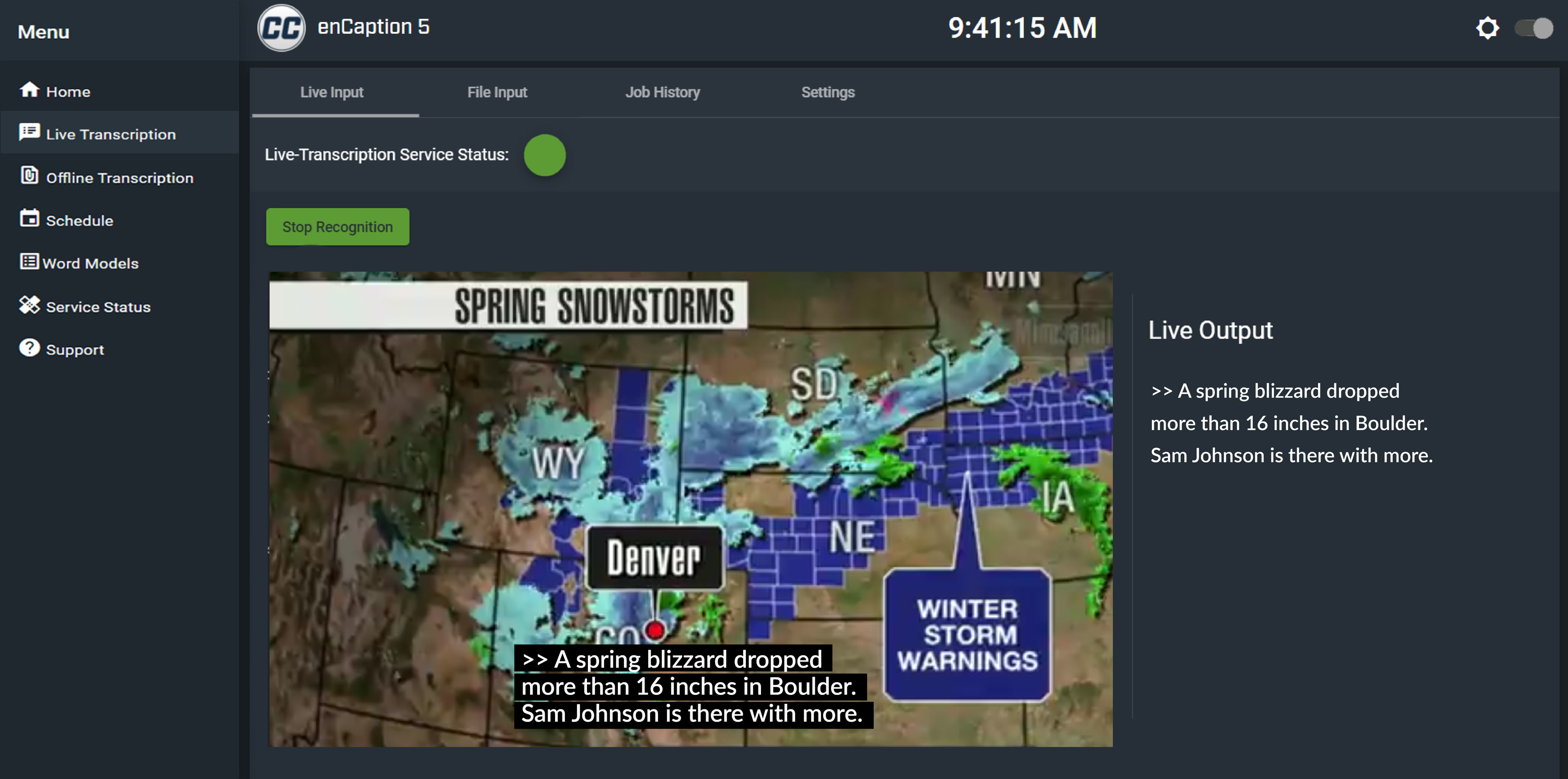

ENCO Systems has been a mainstay in the closed-captioning market for years with its enCaption automated captioning platform, which it launched in 2010. Since then, AI has advanced far beyond its initial capabilities, and the company has focused on building those advances into its products.

“We’ve been leveraging AI and machine learning for years to get better accuracy and quality out of speech to text,” said Ken Frommert, CEO of the Detroit-based company.

“Our ASR [automatic speech recognition] engine is actually better in certain situations than a human for live captioning or transcription. As AI has gotten better, it has allowed broadcasters to offer live captioning at significantly less expense, which allows more programming to be captioned, not only to meet FCC and other regulations but also to leverage captions to search file-based transcriptions.”


At the NAB Show, ENCO rolled out enCaption 5, a cloud-native, microservices-based solution that adds new features and flexibility, such as a caption editor for viewing and refining the results of offline or previous live transcriptions. enCaption 5 can now add captions to both live and pre-recorded content in 48 languages, combining machine learning with advanced speech-to-text conversion and grammatical structure analysis to deliver what ENCO describes as exceptional accuracy with extremely low latency. enCaption also now incorporates ENCO’s enTranslate system as a plug-in.

ENCO also launched AITrack and ENCO-GPT, which use the ChatGPT model to generate language responses from text-based queries within automated TV and radio production workflows. Initially targeting radio, AITrack integrates with ENCO’s DAD radio automation system to generate and insert voice tracks between songs. ENCO-GPT simplifies copy creation within TV and radio broadcasts and program streams and can automatically create ad copy on behalf of sponsors.

Ai-Media

Although the “AI” in Australia-based Ai-Media actually stands for “accessibility innovation,” AI/ML is now the driving force behind the 20-year-old company’s products and services.

Much of the company’s first two decades was focused on providing live captioning services, but in 2021 it acquired longtime partner EEG, both to offer EEG’s LEXI automated captioning platform to its customers and to bolster its presence in the North American market.

It recently launched LEXI 3.0, an improved version of its flagship automatic captioning solution. Independent audits confirm that LEXI 3.0 consistently delivers 35% fewer recognition, formatting and punctuation errors than the previous version, the company said. The latest version introduces new automated features, including speaker identification and AI-powered caption placement to avoid on-screen interference. The release raises average accuracy from 98.2% to 98.7% as measured by the NER model (Number of words, Edition errors, Recognition errors).
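To make the NER percentages concrete, the model’s accuracy score is commonly published in the form below. This is a general illustration of the NER formula, not a description of Ai-Media’s audit methodology: N is the number of words in the captions, E is the total of edition errors and R the total of recognition errors, with each error weighted by its severity.

$$
\text{Accuracy} = \frac{N - E - R}{N} \times 100\%
$$

For example, a hypothetical 1,000-word segment with 13 weighted error points in total scores (1000 − 13) / 1000 × 100% = 98.7%.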

Offering both an automated AI-based captioning system like LEXI and its continuing live captioning service to customers worldwide helps set the company apart, according to John Peck, vice president of international sales.

“We’re the only captioning supplier where we have all the background of actually producing captioning, whether it be humans and all the different quality levels and the accessibility to make sure that the end user’s needs are met,” he said. “We’re able to be that one vendor that can go from the technology platform all the way through to the service as well.”

Interra Systems

Interra Systems’ BATON Captions is an automated solution that provides an end-to-end platform covering caption generation, QC, auto-correction, review, regeneration and editing. The system is built on machine learning, automatic speech recognition (ASR) and natural language processing (NLP), which the company says can dramatically speed up caption creation, QC and verification for both live and VOD content while maintaining high quality.

Ashish Basu, executive vice president, worldwide sales & business, emphasizes the need for QC at a time when AI, and ChatGPT in particular, is hyped as a panacea for captioning accuracy. That attitude, he added, can run headlong into regulatory requirements.

“While AI can generate closed captions with accuracy in the high 90% under ideal circumstances, the reality of television is that circumstances are not always ideal,” Basu recently wrote on tvtechnology.com. “Background noise and heavy accents can quickly degrade the accuracy of AI-generated closed captions. Content owners, however, have no latitude with regulators. They simply cannot blame AI for missing the regulatory mark.

“This is where the hype over ChatGPT and other generative AI solutions runs headlong into the reality of generating closed captions and subtitles,” he cautioned. “If closed captions do not meet regulatory requirements, content owners will be out of compliance and are potentially exposed to devastating business consequences.

“Not only will the money spent to generate sub-par closed captions have been wasted and more money spent to bring them up to snuff, but also the solid business relationships those content owners are trying to establish with local partners around the world will be threatened,” Basu concluded.

So while advances in AI are helping make closed captioning more accurate and accessible, we’re still in the early stages. As more broadcasters deploy AI, they will need to balance the need for speed and accuracy with the need to maintain compliance.