In May 2023, OpenAI founder Sam Altman testified before the Senate Judiciary Committee about ChatGPT. Altman demonstrated how his company's tool could massively reduce the cost of retrieving, processing, conveying, and perhaps even modifying the collective knowledge of mankind as stored in computer memories worldwide. A user with no special equipment or access can request a research report, story, poem, or visual presentation and receive in a matter of seconds a written response.

Because of ChatGPT's seemingly vast powers, Altman called for government regulation to "mitigate the risks of increasingly powerful AI systems" and recommended that U.S. or global leaders form an agency that would license AI systems and have the authority to "take that license away and ensure compliance with safety standards." Major AI players around the world quickly roared approval of Altman's "I want to be regulated" clarion call.

Welcome to the brave new world of AI and cozy crony capitalism, where industry players, interest groups, and government agents meet continuously to monitor and manage investor-owned firms.

Bootleggers and Baptists Have a 'Printing Press Moment'

ChatGPT has about 100 million weekly users worldwide, according to Altman. Some claim it had the most successful launch of a consumer product in history, and Altman anticipates far more users in the future. He's now seeking U.S. government approval to raise billions from U.S., Middle Eastern, and Asian investors to build a massive AI-chip manufacturing facility.

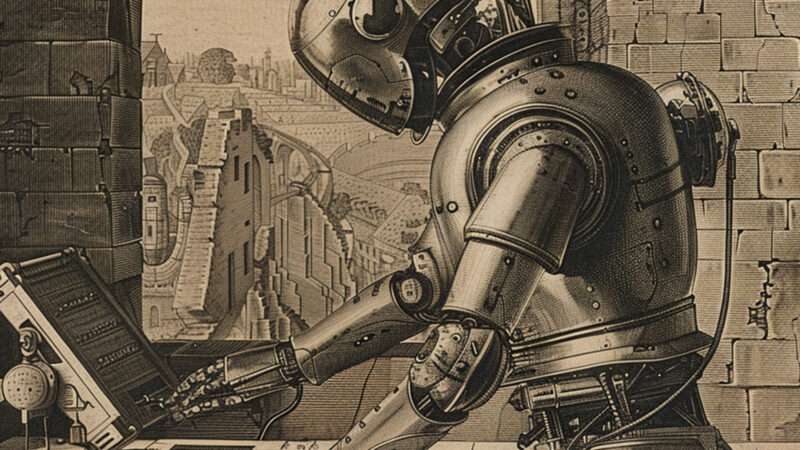

In his testimony, Altman referred to Johannes Gutenberg's printing press, which enabled the Enlightenment, revolutionized communication, and, following the dictum "knowledge is power," destabilized political and religious regimes worldwide. Altman suggested that, once again, the world faces a "printing press moment": another time of profound change that could bring untold benefits as well as unimaginable disturbances to human well-being. A related letter, signed by Altman, other AI industry executives, and scores of other leaders in the field, underlined their profound concern and said: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

Altman's call for regulation also has a parallel in the early history of printing. Something similar to the licensing control suggested by Altman was used by Queen Elizabeth I in 16th-century England. She assigned transferable printing rights to a particular printing guild member in a frustrated effort to regulate and censor printing.

Altman's moral appeal rests on the notion of preserving a nation of people or their way of life. In that way, it satisfies the "Baptist" component of the bootleggers and Baptists theory of regulation, which I developed decades ago and which explains why calls to "regulate me" may really be about businesses earning extra profits.

An especially durable form of government regulation takes hold when there are at least two interest groups supporting it, but for decidedly different reasons. One supports the pending regulation for widely held moral reasons (like old-school Baptists who want to bar legal Sunday liquor sales). The other is in it for the money (like the bootleggers who see opportunity in a day without any legal competition).

Altman's "Baptist" altar call may well be altruistic—who can know?—but it shows his hand as a potential bootlegger, too. After all, a government-sheltered AI market could provide a first-mover advantage as his entity helps to determine the appropriate standards that will be applied to everyone else. It could yield a newer, cozier crony capitalism that has not previously existed in quite the same form.

Apparently, some other potential bootleggers heard the altar call too and liked the idea of being in a cozy cartel. Soon, Microsoft insisted that thoroughgoing government regulation of AI would be necessary. In another Baptist-like appeal, Microsoft President Brad Smith said: "A licensing regime is fundamentally about ensuring a certain baseline of safety, of capability. We have to prove that we can drive before we get a license. If we drive recklessly, we can lose it. You can apply those same concepts, especially to AI uses that will implicate safety."

Google felt the call as well and provided a statement recommending AI regulation on a global and cross-agency basis. Google CEO Sundar Pichai emphasized, "AI is too important not to regulate and too important not to regulate well." Another February 2024 policy statement from Google contains a litany of plans to cooperate with competitors and government agencies to advance a safe and effective generative AI environment.

How AI Will Regulate AI

In a December 2023 report on U.S. government agency activity for FY 2022, the Government Accountability Office indicated that 20 of the 23 agencies surveyed reported some 1,200 AI activities, including everything from analyzing data from cameras and radar to preparing for planetary explorations.

Bootlegger and Baptist–inspired regulatory episodes in the past typically involved in-depth studies and hearings and ended up with lasting rules. That was the case through most of the 20th century, when the Interstate Commerce Commission regulated prices, entry, and service in specific industries, and in the 1970s, when the Environmental Protection Agency was equipped with command-and-control regulations in an alleged attempt to correct market failures.

Sometimes it took years to develop regulations to address a particular environmental problem. By the time the final rules were announced and frozen in time, the situation to be resolved may have changed fundamentally.

Cheap information enabled by generative AI changes all this. By using AI, the generative AI regulatory process (pending in California, at the federal level, and in other governments worldwide) so far favors ongoing, never-ending governance processes. These will focus on major generative AI producers but involve collaboration among industry leaders, consumer and citizen groups, scientists, and government officials, all engaged in a newly blossomed cozy cronyism. Under the European Union's AI Act, an AI Office will preside over processes that continually steer and affect generative AI outcomes.

If more traditional command-and-control or public utility regulation were used (an approach that raises its own challenges), AI producers would be allowed to operate within a set of regulatory guardrails while responding to market incentives and opportunities. They would not be required to cooperate continuously with regulators, their own competitors, and other supposed stakeholders in this larger enterprise. In this AI world, bootleggers and Baptists now sit together in the open.

Specifically, this burgeoning approach to AI regulation requires the implementation of "sandboxes," where regulated parties join with regulators and advisers in exploring new algorithms and other AI products. While sandboxes have some beneficial things to offer industries in new or uncertain regulatory environments, members of the unusually collaborative AI industry are poised to learn what competitors are developing as government referees look on.

The risk, should this environment persist, is that radically new products and approaches in the arena never get a chance to be developed and benefit consumers. The incentives to discover new AI products and profit from them will be blunted by cartel-like behavior in a new bootleggers and Baptists world. Countries and firms that refuse to play by these rules would likely become the only fountainhead for major new AI developments.

The End of the AI Wild West

No matter what form of regulation holds sway, generative AI is out of the box and will not go away. Already, computer capacity requirements for processing the software are falling, and already, generative AI's applications to new information challenges are exploding. At the same time, government agents' ability to regulate is improving and the payoff to the regulated for working hand-in-hand with government and organized interest groups is growing.

Generative AI's Wild West days may be drawing to a close. Those are the days when invention, expansion, and growth can occur unconstrained by regulation while remaining within the bounds of local courts, judges, property rights, and common law. Novel developments will still occur, but at a slower pace. The generative AI printing press moment may expand to become an era. But although the Gutenberg invention led initially to the regulation and even outlawing of the printing press across many major countries, new information technologies emerged anyway, and effective regulation of real knowledge became impossible.

A renaissance was set in motion despite official attempts to stymie the technology. Freer presses emerged, and eventually the telegraph, telephones, typewriters, mimeograph, Xerox, fax, and the internet itself. Knowledge flows cannot be stopped. We moderns may learn again that the human spirit cannot be forever bottled up, and over the long run market competition will be allowed, if not encouraged, to move mankind closer to a more prosperous era.

America's Founders were enlightened and well aware of Europe's troublesome efforts to regulate Gutenberg's printing press. They insisted on freedom of speech and a free press. We may eventually see similar wisdom applied to generative AI control—but don't hold your breath.

The post AI's Cozy Crony Capitalism appeared first on Reason.com.