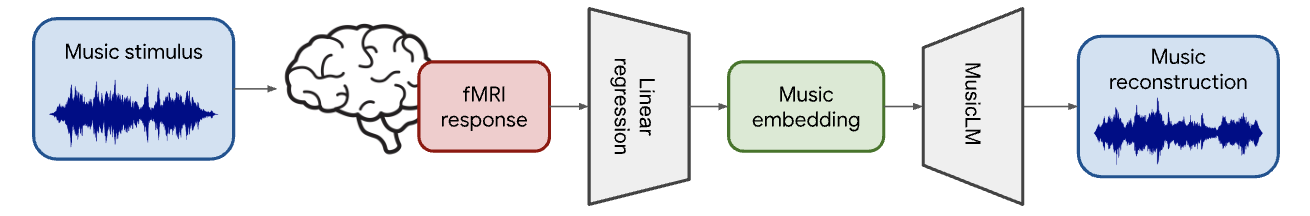

Researchers at Google and Osaka University have published a study in which scans of brain activity, taken while subjects listened to music in the laboratory, were transformed into 'reconstructions' of that music by an AI model named Brain2Music.

Published on July 20, Brain2Music: Reconstructing Music from Human Brain Activity details a study in which subjects listened to 540 music samples across ten different genres while researchers measured their brain activity using fMRI scans. These readings were then used to train a deep neural network that identified patterns of brain activity associated with musical characteristics such as mood, genre and instrumentation.

Researchers then used MusicLM, Google's text-to-music generator, in an attempt to transform the resulting data back into the music that the subjects listened to. According to Google's Timo Denk, one of the paper's authors, the results were promising: using only the data derived from the brain scans, the AI model managed to create music that sounded similar to what the subjects originally heard.

"The generated music resembles the musical stimuli that human subjects experienced, with respect to semantic properties like genre, instrumentation and mood," Denk says. Google have shared several audio examples from the study; listening to Brain2Music's recreation of Beastie Boys' Fight for Your Right and Britney Spears' Oops!... I Did It Again, the resemblance to the original tracks is evident, if not exact.

Though Brain2Music hasn't successfully reproduced the songs note-for-note, its reconstructions are similar to the originals in aspects such as rhythm, instrumentation and overall style. Unintelligible vocals can be heard in the reconstructions, but the target songs' lyrics haven't been reproduced, as Brain2Music wasn't designed to reconstruct lyrical content.

Discussing where their research could lead in the future, the researchers suggest that "an exciting next step is to attempt the reconstruction of music or musical attributes from a subject’s imagination [...] This would qualify for an actual 'mind-reading' label."

It doesn't seem too far-fetched to speculate on whether this research could eventually lead to the development of technology that's capable of mind-reading melodies, chords, rhythms and timbres directly from your brain. We've got text-to-music AI tools, but how about 'thought-to-music' AI that lifts the sounds you want to hear straight out of your imagination?

"Given the nascent stage of music generation models, this work is just a first step," the researchers admit, pointing to the cumbersome practicalities of fMRI scanning and bringing us right back down to earth with the observation that "the decoding technology described in this paper is unlikely to become practical in the near future".

Admittedly, then, mind-reading synths and thought-controlled DAWs might still be a little way off, but this research - and AI's ever-accelerating development - suggests that they're more than just a sci-fi fantasy; they're a concrete possibility, and one that could be closer than we think.