In an open letter to the AI community, a group of renowned AI researchers, joined by Elon Musk and other tech luminaries, called on AI labs to pause the training of large-scale AI systems more powerful than GPT-4, the technology behind ChatGPT and many other popular AI chatbots.

The Future of Life Institute doesn't mince words in its letter, opening with the warning that "AI systems with human-competitive intelligence can pose profound risks to society and humanity." It goes on to warn that the development of these systems requires proper planning and management, which is not happening in the current "out-of-control race to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict, or reliably control."

Risky AI business

The researchers aren't asking that all AI research come to a halt, and they aren't looking to put themselves out of jobs. Rather, they want a six-month pause on what they call the "ever-larger unpredictable black-box models with emergent capabilities." During that time, AI labs and independent experts would work together to create safety standards for AI development that can be "audited and overseen by independent outside experts" to ensure AI advancement is properly controlled.

OpenAI, the company behind ChatGPT, acknowledged some of these concerns in its recent statement about artificial general intelligence. The company said, "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." Where the two differ is that the Future of Life Institute believes we are already at that inflection point.

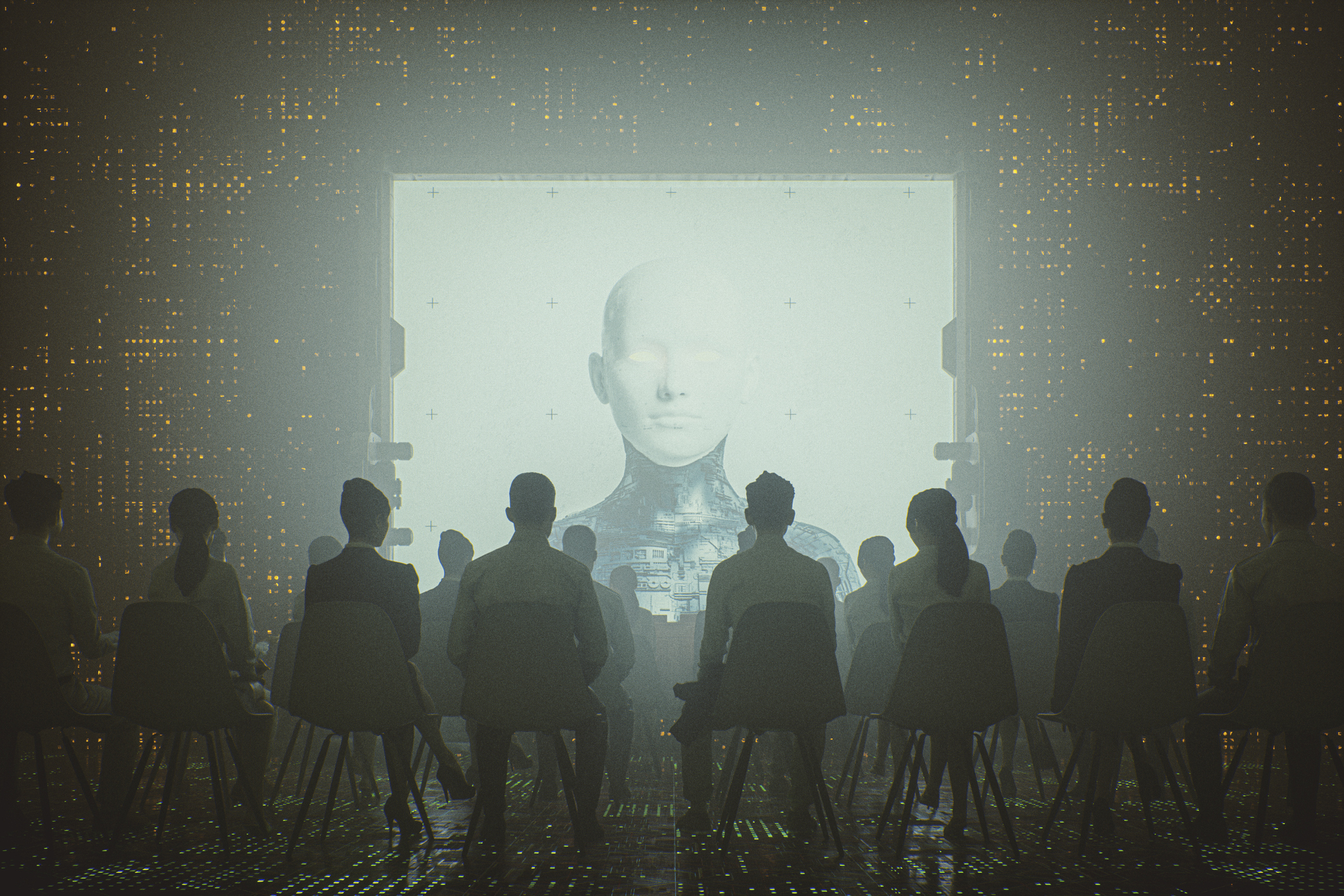

It's hard not to summon thoughts of apocalyptic AIs from popular culture in this discussion. Whether it's Skynet from the Terminator series or the AI from The Matrix, the idea of an AI gaining sentience and waging war on or enslaving humanity has been a popular theme of apocalyptic science fiction for decades.

We aren't there yet, but that is precisely the level of concern being raised here. The researchers are warning against letting this runaway train build up so much speed that we can't stop it.

These AI projects feel like fun and useful tools, and the Future of Life Institute wants them to remain exactly that. Pausing now and stepping back to consider the long-term goals and development strategy behind them, the group argues, would allow us to reap the benefits of these tools without disastrous consequences.