It might seem counterintuitive, but for AI assistants to work well together, they can’t always get along with one another, according to Babak Hodjat, chief technology officer of AI at Cognizant.

The next phase of the technology, Hodjat said during a live interview at the Fortune COO Summit, will be a world in which various AI assistants, each an expert in its own area, work together to help people with everyday questions. (Disclosure: Cognizant sponsored the conference in Middleburg, Va., last week, and received coverage as part of its sponsorship agreement.)

But if each of those assistants turns into a yes-man, users risk getting bad information because the assistants reach consensus too quickly. That’s the current reality of AI chatbots.

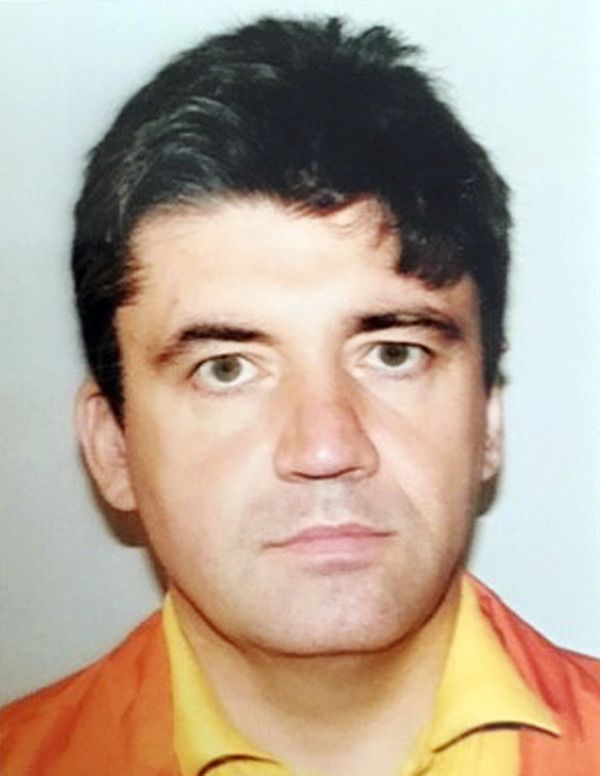

“They’re too agreeable,” said Hodjat, who invented the natural language technology that contributed to Apple’s Siri. “They’re too—I would say—soft.”

Hodjat envisions a world with specialized chatbots for various workplace functions: a human resources AI agent that could answer questions about paid-time-off policies, a finance agent that could check the pricing of the latest supplier contracts, and a legal agent that could answer compliance questions. (Google DeepMind’s director of strategic initiatives, Terra Terwilliger, told Fortune at the COO Summit that such a legal tool is already being piloted.)

Hodjat gave a real-world example of how an employee might use a network of AI assistants. After a life-changing event, such as the death of a spouse, an employee would need to speak to HR about changes to their benefits and to the payroll department about changes in their paycheck deductions, and might also need some legal advice.

To work effectively, these AI agents would be best served by some healthy discussion, much as thoughtful coworkers exchange ideas to solve a complex problem. For now, though, AI agents still can’t replicate that uniquely human dynamic.

“That’s one of the frustrations when you set these systems into multi-agent setups, you want them to talk to each other, maybe debate each other, and [for] something to come out of it,” Hodjat said.

There’s a good reason why AI systems are programmed to be agreeable, especially at this still very early stage of their development.

“These models are fine-tuned a lot to safeguard them, like out of the box, from doing bad things and being biased and weird,” Hodjat said.

Among employees, workplace discussions, as opposed to arguments, are credited with improving collaboration and making people feel more energized about their work. They also lead, believe it or not, to higher job satisfaction, because employees feel they can voice their opinions without fear of repercussions. Without a good-natured back-and-forth, a company risks becoming an echo chamber.

“It’s harder to do that using the state of the generative AI of today because they’re so agreeable,” said Hodjat. “They immediately coalesce around, and that’s not the reality we see in social networks.”