Robots aren’t typically known for their flawless skin and 12-step care routines, but recent innovations in robotics and lab-grown body parts might spawn a fresh generation of YouTube beauty vloggers after all.

Rather than cold metal and dull silicone exteriors, next-generation robots may sport a more human birthday suit called electronic skin, or e-skin for short.

Beyond just making these robots look more human, engineers are excited about how the skin will enable robots to feel like humans do — a necessary skill to help them navigate our human-centric world.

HORIZONS is a newsletter on the innovations and ideas of today that will shape the world of tomorrow. This is an adapted version of the June 30 edition. Forecast the future by signing up for free.

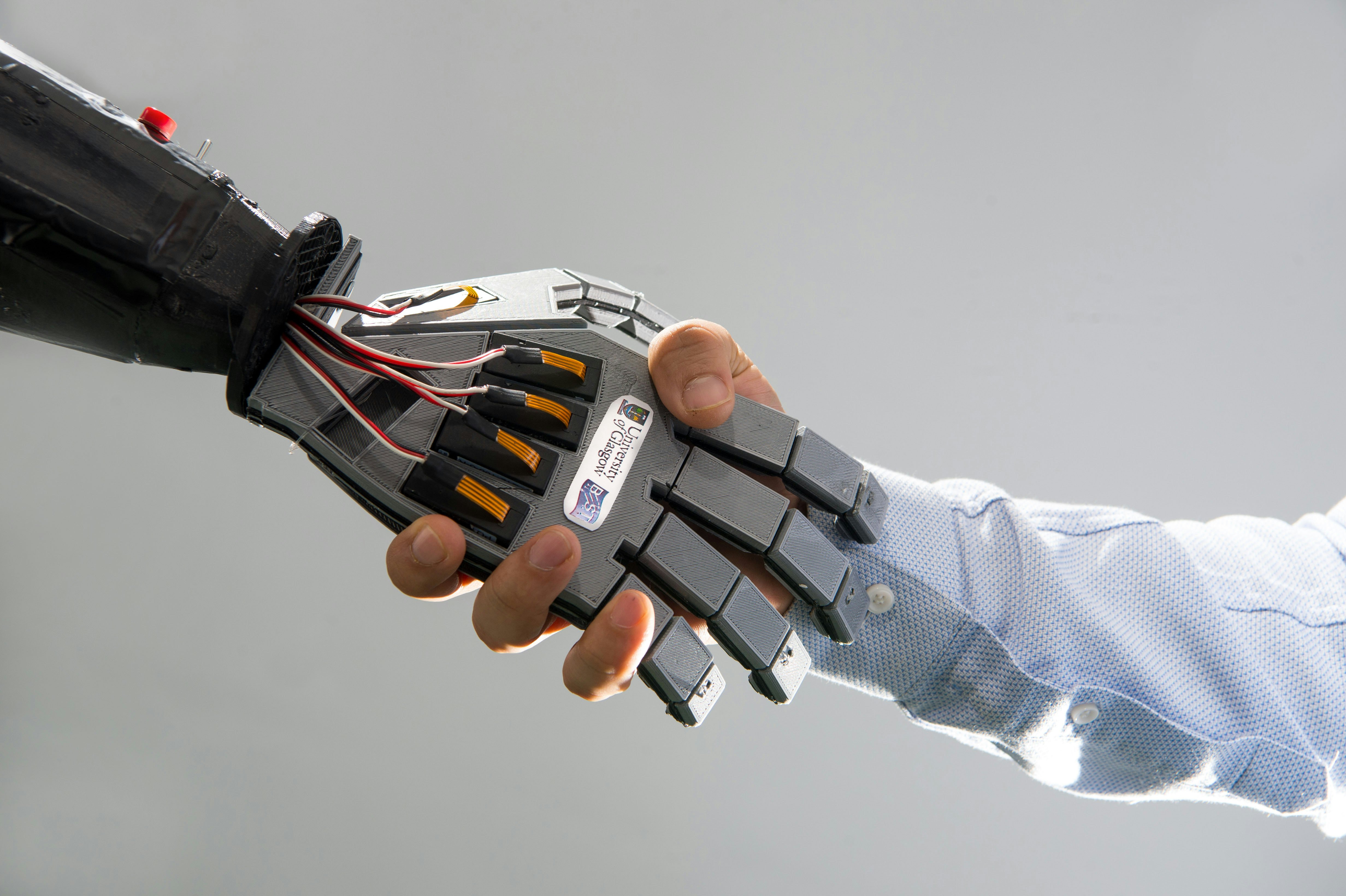

“E-skin will allow robots to have safe interaction with real-world objects,” Ravinder Dahiya, professor of electronics and nanoengineering at the University of Glasgow, tells Inverse.

“This is also important for social robots which may be helping elderly in care homes, for example, to serve a cup of tea.”

Dahiya is the lead author on one of several papers published this month in the journal Science Robotics that detail advances in robotic skin which, together, help overcome decades' worth of engineering obstacles.

Creating “electronic skin”

Dahiya and his colleagues’ latest work explores how robots’ skin can be used to feel and learn about their surroundings using synaptic transistors embedded in the artificial skin. By mimicking the sensory neural pathways of the human body and brain, the team demonstrates a robot skin that can learn from sensory experiences, much like a child who learns not to touch a hot surface after getting burned.

We take these sensory abilities for granted because we live in our acutely sensitive skin, but they are much harder to build into e-skin, roboticist Kyungseo Park tells Inverse.

Park is a postdoctoral researcher at the University of Illinois, Urbana-Champaign, and the first author of another e-skin paper published this month in Science Robotics that shows how electrodes and microphones could be built into hydrogel and silicone e-skin to provide more sensitive tactile input.

While small-scale sensors might work for small-scale projects like responsive robot hands, Park says these technologies struggle to scale up.

“Although these tactile sensors work well, it is challenging to cover the robot’s whole body with these sensors due to practical limitations such as wiring and fabrication,” Park says.

“It is required to develop a sensor configuration that can be freely scaled depending on the application.”

Skin is the largest organ in the human body. Our skin can process sensory input at nearly any location with very little energy, but replicating and scaling this ability in e-skin is a logistics and latency nightmare, Dahiya says.

“[Electronics] need to be developed or embedded in soft and flexible substrates so that the e-skin can conform to curvy surfaces,” Dahiya says.

“This [will] mean sensors and processing electronics can be distributed all over the body, which will reduce the latency and help address other challenges such as wiring complexity.”

If e-skin cannot reliably process sensory input wherever and whenever it occurs, it could be a major liability in the real world, especially since humans benefit from split-second sensory processing in their biological skin.

Learning to feel

If building e-skin is so challenging, then why are scientists around the world still working hard to make it a reality? For robots, and the companies or governments that control them, e-skin could represent a new opportunity to record and process massive amounts of information beyond our own skin’s sensing abilities.

“When we think about robots that are used in hazardous environments, the types of sensors can be much broader than basic five sensory modalities,” Dahiya says.

“For example, a robot deployed in a nuclear power plant can have e-skin with radiation sensors as well. Likewise, the e-skin may have photodetectors which can also augment human skin capability by allowing us to measure the excessive exposure to ultraviolet rays. We don’t have such capabilities.”

In the future, e-skin could also be used to sense proximity (useful for guard robots), temperature, or even signs of disease and chemical weapons. Such advanced sensing capabilities might enable remote robots to go into potentially dangerous situations and assess the problem without putting humans in harm’s way.

Beyond the vision of autonomous Terminator-like robots, e-skin could also bring sensing capabilities to existing tools. For example, Dahiya says, it could be built into medical instruments that let clinicians “feel” tissues inside the human body to make diagnoses.

In their work, Dahiya and his colleagues also explored how e-skin could be designed to sense injuries (like a cut or tear) and heal itself, much as our skin does. Right now, their e-skin needs a lot of assistance to accomplish this task, but in the future, this functionality could be essential for remote robots exploring potentially harmful terrain like the surface of Mars.

On the horizon

Beyond advancing robot sensing, Dahiya and Park say that e-skin will also play an important role in keeping humans safe during human-robot interactions. For many service robots, this is a particularly crucial concern.

“Most commercial robot arms are not able to perceive physical contact, so they may cause serious injury to humans,” Park says.

Take, for example, a greeter robot tasked with welcoming visitors by shaking the hand of everyone who crosses its path. Without tactile sensors to gauge the pressure of its handshakes, it could unwittingly crush someone’s hand.

“I think the elderly who need direct assistance from robots will probably benefit the most,” Park adds.

Advances in e-skin could also play a role in improving prosthetics, Park says. The same tactile sensors that help robots feel their environment could help restore or even advance the sensing capabilities of those with limb loss.

While the e-skins that Park and Dahiya worked on are making strides toward a robotic future, both researchers have some fine-tuning to do before the technology is put into practice. In the meantime, one thing is certain: We should be thankful for the complex and capable skin we have evolved.