What content is safe for a child to view online? Who gets to decide what is OK and what is dangerous? And how much of a role should a government, a company or a parent play in that decision?

These questions were brought into focus by the inquest into the death of 14-year-old Molly Russell. And if there was one point during the two-week hearing when the case for tougher online regulation became overwhelming, it was during Meta executive Elizabeth Lagone’s testimony.

The head of health and wellbeing policy at Mark Zuckerberg’s company was taken through a selection of the Instagram posts the teenager had viewed in the six months before her death, and deemed many of them to be “safe” for children to view. It was not an opinion shared by many in the room at North London coroner’s court.

Molly, from north-west London, died in 2017 after viewing extensive amounts of online content related to suicide, depression, self-harm and anxiety. In what the NSPCC described as a global first, the senior coroner said social media had contributed to Molly’s death, ruling that Molly had died from “an act of self-harm while suffering from depression and the negative effects of online content”.

Lagone would be shown a post and then comment on whether it met Instagram guidelines at the time. Many were deemed permissible by Lagone, who would use phrases like “sharing of feelings” as she explained that suicide and self-harm content could be allowed if it represented an attempt to raise awareness of a user’s mental state and share their emotions.

The online safety bill is expected to resume its progress through parliament imminently and the new culture secretary, Michelle Donelan, said its provisions for protecting children would be strengthened. The bill places a duty of care on tech platforms to protect children from harmful content and systems.

In July, a written statement from Donelan’s predecessor, Nadine Dorries, made clear that the type of content seen by Molly would be covered by the bill. Children must be prevented from encountering content promoting self-harm and legal suicide content, although content about recovery from self-harm or suicidal feelings could be permitted if it is age appropriate. Meta’s method for assessing whether this content is allowed or appropriate will be assessed by the communications regulator Ofcom – it won’t be the company’s call.

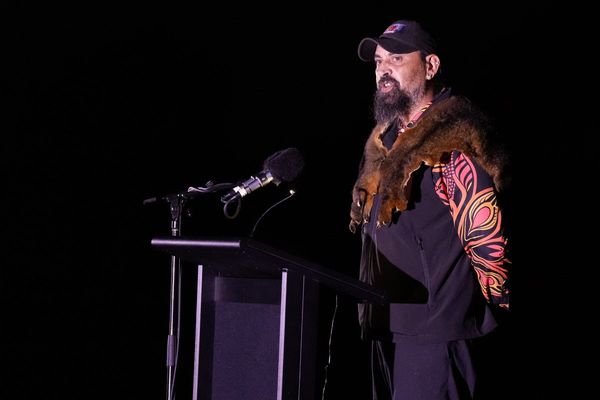

Molly’s father, Ian, was scathing about the “safe” assessment. Speaking at the end of the two-week hearing, he said: “If this demented trail of life-sucking content was safe, my daughter Molly would probably still be alive and instead of being a bereaved family of four, there would be five of us looking forward to a life full of purpose and promise that lay ahead for our adorable Molly.”

Lagone apologised for the fact that some of the content viewed by Molly did break Instagram guidelines at the time, which barred content that promoted or glorified suicide and self-harm.

In questioning Lagone, the Russell family’s legal representative, Oliver Sanders KC, said the entire situation was unsafe. “I suggest to you that it is an inherently unsafe environment … dangerous and toxic for 13- to 14-year-olds alone in their bedrooms scrolling through this rubbish on their phones.”

“I respectfully disagree,” Lagone responded. There were a lot of these tense exchanges, which at one point resulted in senior coroner Andrew Walker asking Lagone to answer “yes or no” whether one batch of content she had seen was safe for children.

The accumulated impact of this back and forth led to Sanders raising his voice at one point: “Why on Earth are you doing this?” He said Instagram was choosing to put content “in the bedrooms of depressed children”, adding: “You have no right to. You are not their parent. You are just a business in America.”

The impact of Lagone’s two-day appearance, and of what happened to Molly, was to damage faith that a major social media platform could be relied on to police its content and systems without a wider regulatory framework to ensure it is done properly.

As Ian Russell said afterwards: “It’s time for the government’s online safety bill to urgently deliver its long-promised legislation.”

Meta said its thoughts were with the Russell family and that it was “committed” to ensuring that people’s experience of Instagram was a “positive experience for everyone”. Pinterest, another platform that had shown Molly harmful content before she died, also said it was “committed to making ongoing improvements to help ensure that the platform is safe for everyone”.

If this UK government manages to pass the planned legislation, Meta, Pinterest and other platforms will soon be answerable to Ofcom on those commitments.

If you want to read the complete version of the newsletter, please subscribe to receive TechScape in your inbox every Wednesday.