When traveler Daniele Brito posed in front of the Temple Bar in Dublin, Ireland, in late August, she likely didn’t realize that more than one camera was watching her.

Yes, there was the one pointed at her, capturing a photograph that would later be shared with Brito’s more than 2,700 Instagram followers. But at least one other camera was observing her: a surveillance camera stationed on the corner opposite the bar.

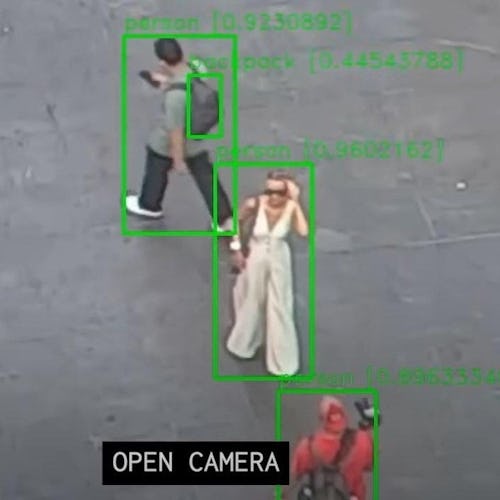

Brito’s photo, along with the surveillance video of her, became part of Belgian artist Dries Depoorter’s project The Follower, which he unveiled on social media yesterday. Depoorter programmed an artificial intelligence system that uses open-access footage from cameras stationed around the world to detect people. It then cross-checks those individuals against Instagram photographs posted from the locations those cameras cover to see whether it can find a match.

Depoorter, 31, gained attention last year for his project The Flemish Scrollers, which used AI to tag Belgian politicians distractedly scrolling on their phones during livestreamed meetings. Depoorter declines to share the usernames of any of those caught up in his latest project, though it is simple enough to find them through reverse-engineering. (That’s how Input figured out Brito’s identity; she did not respond to a request for comment.)

Depoorter is, however, willing to explain that The Follower grew out of boredom as he remotely observed the habits of an apparent influencer. “I’ve been doing projects with open cameras for years,” Depoorter says. “One day, I was watching one camera, and there was a person taking photos for like half an hour, really professionally. That was the starting point.” Depoorter manually searched Instagram for the resulting photos using the platform’s location-tagging feature, but couldn’t find them.

So he decided to code a tool that pulls in footage from surveillance cameras that are mounted close to the ground, which makes people easier to identify, and positioned at tourist sites where people are likely to take photographs. Most of the software he uses is off-the-shelf. In all, Depoorter analyzed four weeks’ worth of photos, coming up with a number of hits.

Depoorter sidesteps questions about what he hoped to achieve. “I know which questions it raises, this kind of project,” he says. “But I don’t answer the question itself. I don’t want to put a lesson into the world. I just want to show the dangers of new technologies.”

‘The digital gaze’

For Mariann Hardey of Durham University in England, who has previously studied how pervasive surveillance has become, the project shows how deeply entrenched being watched now is in our lives.

“The digital gaze permeates network architecture and social and cultural structures,” she says in an emailed response. “The Follower provides fascinating insight into creativity that is fundamentally dependent on smartphone technology and exposes the frivolity of digital culture in how influencers experiment with posed images and techniques within the social landscape.”

Francesca Sobande, senior lecturer at Cardiff University’s School of Journalism, Media, and Culture in Wales, is more circumspect about the impact of the project. “Art does many great things, including stir generative discussions and debate about life as we know it,” she tells Input. “However, art projects can also have harmful effects. Such harms should not be brushed aside in discussions about art and the technology that is sometimes central to it.”

Sobande points out that extensive research has shown how AI and open cameras are used as “part of the structural surveillance of people, particularly individuals impacted by racism, xenophobia, and other intersecting forms of oppression. There is a history of AI and open cameras playing a core part in the violent policing of protests and dissent, and this history cannot be ignored in the name of art.”

“For these reasons,” Sobande continues, “any project that involves AI and open cameras is a project that may, unfortunately, reinforce a societal state of surveillance that can put people’s lives at risk.”

Depoorter doesn’t seem to share such concerns. “For me, it’s more about the technology and not about the people I used,” he says. “I used a lot of people, so there’s no focus on one person.” When Input points out that he showed those people’s unblurred faces in his project, making it possible to identify them, Depoorter replies, “Yes, but they posted the pictures also.”

At least one of the project’s subjects is extremely unhappy. The Follower also identified Renata Costa and her partner Cleison kissing outside the Temple Bar. When contacted, Cleison declined to talk via Instagram video call, believing Input was behind the project. “I would like you to delete my photo... immediately,” Cleison writes. “It’s a crime to use the image of a person without permission.” Cleison says he’ll be contacting his lawyers.