Education isn’t about encouraging students to use information and repeat it in a different way – AI could actually help to encourage learners to critically question information online and discern fact from fiction

Opinion: The news has been dominated by developments and events in the field of Artificial Intelligence. Just this week, Sam Altman, the CEO of OpenAI, the company responsible for creating ChatGPT, called for regulations to prevent AI being used to weaponise information. In May Geoffrey Hinton, the ‘Godfather of AI’, quit his position at Google because of fears that AI developments lack oversight and could be exploited by bad actors for political gain. All this is particularly concerning as New Zealand heads towards an election later this year.

Central to their concerns is how convincing the content AI produces can be. Indeed, what makes AI so fascinating is its increasing ability to produce content that appears to be human-generated, even if it is still sometimes ‘not quite right’.

This is because AI has been created to think like humans, with the software being modelled on how biological brains work. It is this programming of AI software that reveals an even greater hidden danger to our democracies.

The newly developed AI software getting the most media attention uses neural networks. These are designed to imitate the way our brains work. After being given a task, they draw on patterns in the large volumes of data they have been trained on, much of it gathered online, interpret it using probability and then produce outputs that respond to the task.

Essentially, neural networks look at data and behaviours of users online as clues to learn and to respond to the meaning of a question or command. They are set up by a software engineer, but then operate somewhat independently from the engineer, relying on online data to develop responses. AI programmes such as ChatGPT use neural networks.

One of the (several) debates about neural network AI software focuses on concerns that AI programmes are producing inaccurate or misleading content. Reports show that texts produced by ChatGPT have a tone and format that suggest confidence and infallibility in the facts and arguments they present, but closer inspection reveals that those facts and arguments are often wrong.

This occurs because AI is trained on data, and depends on that data being correct. Therefore, if something incorrect is in the data set with enough regularity, the connections it picks up will be repeated by the AI programme.

For example, if rumours circulate online about a public figure, AI systems will take the frequency with which those rumours appear as a sign of their accuracy and perpetuate them as facts. It only takes a rumour to start trending for the AI programme to use that idea to produce its output. Regularly repeating inaccurate or misleading claims therefore reinforces what AI systems treat as the truth.
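To make the point concrete, here is a deliberately simplified sketch in Python. It is not how ChatGPT or any real neural network works: it is a toy frequency counter, and the corpus, the public figure and the function name `most_repeated_claim` are all invented for illustration. It shows only the narrow idea described above, that a system which learns from how often something is said has no separate way of checking whether it is true.

```python
from collections import Counter


def most_repeated_claim(corpus, topic):
    """Return the most frequent claim about `topic` and its share of all claims.

    `corpus` is a list of (subject, claim) pairs. Nothing here checks truth;
    the only signal is how often each claim appears.
    """
    claims = Counter(claim for subject, claim in corpus if subject == topic)
    claim, count = claims.most_common(1)[0]
    return claim, count / sum(claims.values())


# Hypothetical data: one accurate report and one rumour repeated three times.
corpus = [
    ("the mayor", "opened the new library"),
    ("the mayor", "secretly owns the stadium"),
    ("the mayor", "secretly owns the stadium"),
    ("the mayor", "secretly owns the stadium"),
]

answer, share = most_repeated_claim(corpus, "the mayor")
print(f"{answer} ({share:.0%} of claims)")
```

In this toy case the rumour wins simply because it was repeated more often than the accurate report. Real systems are far more sophisticated, but the underlying dependence on what is present in the data is the same concern.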

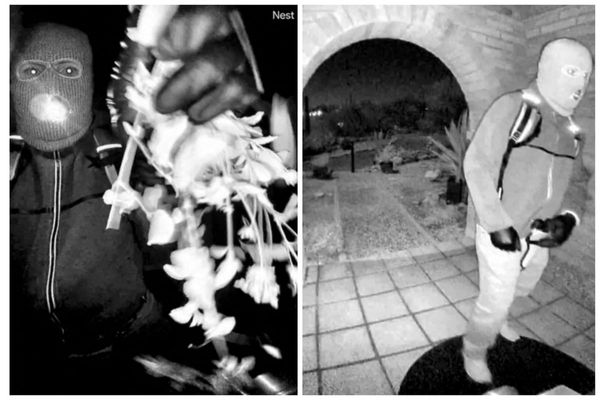

This adds a new, complex layer to the post-truth age and has the potential to produce slanderous and harmful content. Perhaps even more worryingly, in a world where political tribalism is rife and there is the tendency to characterise political leaders negatively for political gain, this has the potential to undermine democracy. Google chief executive Sundar Pichai has raised these concerns, suggesting AI could produce fake videos of people espousing hateful content.

However, what is interesting is what this might reveal about the way we interpret information and conceive ideas. If AI systems are designed to model human brain functions, then to what extent are we immune to inaccuracies being spread regularly online? How can we resist forming impressions of individuals, groups, cultures, movements, religions, events or places based on unsubstantiated rumours?

Essentially, AI doesn’t know what’s true and what’s false – it just uses what is present in the data to produce content. The difference between AI and us is our ability to use critical thought to judge whether something is accurate.

AI programmes aren’t yet able to read the contextual particularities and nuances that shape the way information is presented or meant to be received. This includes ethical or moral judgments about what the information conveys.

However, human minds are capable of detecting contextual clues to interpret meaning in a way that the author or content producer intends. For instance, the human observer can use clues based on the time, place, sentiment and ideological orientation of an artist to interpret and understand the meaning of their art. AI just sees the art at face value – words on a page or colours and lines on a canvas.

As an educator, I see AI as presenting a challenge to encourage learners to critically question information online and discern fact from fiction; this is a vital prerequisite to putting learned information to use.

Rather than moving towards a more knowledge-led approach, as has been recently championed, there is a need to foster strong human characteristics that make us distinct from AI, concerning ethics, analysis and critical thinking.

Education isn’t about encouraging students to use information and repeat it in a different way. Instead, it’s about questioning what is “known”, scrutinising issues and producing critically informed learners who can distinguish between information and misinformation.

As humans, we have a unique ability to ask why something is so, how a conclusion was reached, what biases may be involved, and what the limits of truth are in a piece of knowledge. Education needs to centralise these questions once more.

Future generations will rely on their education to prepare them for the challenge of being informed citizens in a context where information and data might appear convincing but are often ‘not quite right’.